Report Comments posted by turnipisum

-


It still continues! I'm on 6.9.1 now but it's the same: it can go over 30 days, 4 days or 1 day between crashes, very random. ☹️

-

On 11/22/2020 at 8:13 PM, turnipisum said:

Just found the posts about power supply idle and C-states, so I'm trying that to see what happens.

Thanks @ich777, but I gave that a try months ago lol

-

AMD 3970X, full spec below. The issue is that the 3 VMs are in daily use, so I would have to build temporary machines to shut them down for days.

Case: Corsair Obsidian 750d | MB: Asrock Trx40 Creator | CPU: AMD Threadripper 3970X | Cooler: Noctua NH-U14S | RAM: Corsair LPX 128GB DDR4 C16 | GPU: 2 x MSI RTX 2070 Supers | Cache: Intel 660p Series 1TB M.2 X2 in 2TB Pool | Parity: Ironwolf 6TB | Array Storage: Ironwolf 6TB + Ironwolf 4TB | Unassigned Devices: Corsair 660p M.2 1TB + Kingston 480GB SSD + Skyhawk 2TB | NIC: Intel 82576 Chip, Dual RJ45 Ports, 1Gbit PCI | PSU: Corsair RM1000i

-

Update to my last post.

It didn't fix it! ☹️ I got a random uptime of 47 days, but now I'm back to crashing every 1-4 days or so.

I have just disabled the PCIe ACS override to see if that does anything.

Same error as I always have in the logs:

Mar 6 19:50:21 SKYNET-UR kernel: Hardware name: To Be Filled By O.E.M. To Be Filled By O.E.M./TRX40 Creator, BIOS P1.70 05/29/2020
Mar 6 19:50:21 SKYNET-UR kernel: RIP: 0010:__iommu_dma_unmap+0x7a/0xe8
Mar 6 19:50:21 SKYNET-UR kernel: Code: 46 28 4c 8d 60 ff 48 8d 54 18 ff 49 21 ec 48 f7 d8 4c 29 e5 49 01 d4 49 21 c4 48 89 ee 4c 89 e2 e8 8f df ff ff 4c 39 e0 74 02 <0f> 0b 49 83 be 68 07 00 00 00 75 32 49 8b 45 08 48 8b 40 48 48 85
Mar 6 19:50:21 SKYNET-UR kernel: RSP: 0018:ffffc9000468f9f8 EFLAGS: 00010206
Mar 6 19:50:21 SKYNET-UR kernel: RAX: 0000000000002000 RBX: 0000000000001000 RCX: 0000000000000001
Mar 6 19:50:21 SKYNET-UR kernel: RDX: ffff888102d55020 RSI: ffffffffffffe000 RDI: 0000000000000009
Mar 6 19:50:21 SKYNET-UR kernel: RBP: 00000000fed7e000 R08: ffff888102d55020 R09: ffff8881525b2bf0
Mar 6 19:50:21 SKYNET-UR kernel: R10: 0000000000000009 R11: ffff888000000000 R12: 0000000000001000
Mar 6 19:50:21 SKYNET-UR kernel: R13: ffff888102d55010 R14: ffff88813d301000 R15: ffffffffa00da640
Mar 6 19:50:21 SKYNET-UR kernel: FS: 0000148f90fb0740(0000) GS:ffff889fdd840000(0000) knlGS:0000000000000000
Mar 6 19:50:21 SKYNET-UR kernel: CS: 0010 DS: 0000 ES: 0000 CR0: 0000000080050033
Mar 6 19:50:21 SKYNET-UR kernel: CR2: 0000150fa8003340 CR3: 000000014cd8a000 CR4: 0000000000350ee0
Mar 6 19:50:21 SKYNET-UR kernel: Call Trace:
Mar 6 19:50:21 SKYNET-UR kernel: iommu_dma_free+0x1a/0x2b

-

When I got issues after upgrading to 6.9.0 rc2, I changed the machine type from 5.1 to 4.2, but it sounds like yours is a different issue.

Have you tried recreating the VM? That often sorts out issues.

-

Is it just the VM crashing, or Unraid as well? And do you pass through a graphics card?

I had QEMU-related issues where my VMs crashed along with Unraid; the solution was to change the machine type to an older one.

-


Update!

Looks like I have finally found the fix for my lock-ups! It appears to be a QEMU issue with the VMs. I changed my machine type from q35-5.1 to q35-4.2 and haven't had an issue since. Now on 18 days of uptime.

I had already changed from i440fx to q35, but both were on 5.1, so I'm guessing i440fx-4.2 would work fine in my case as well. I want to reach 30 days of uptime to be sure, then I will try i440fx-4.2 and see what happens.
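For reference, the machine type lives in each VM's libvirt domain XML as the `machine` attribute of `<os><type>` (for example `pc-q35-5.1`). The snippet below is a minimal sketch, not Unraid's own method, of switching that attribute to `pc-q35-4.2` in an XML string; the helper `set_machine_type` and the trimmed sample domain are hypothetical, and in practice the VM Manager form or its XML view makes the same change.

```python
import sys
import xml.etree.ElementTree as ET


def set_machine_type(domain_xml: str, machine: str) -> str:
    """Return a copy of a libvirt domain XML string with a new machine type.

    Hypothetical helper: it only rewrites the `machine` attribute of
    <os><type>, e.g. 'pc-q35-5.1' -> 'pc-q35-4.2'. ElementTree drops XML
    comments, so keep a backup of the original definition.
    """
    root = ET.fromstring(domain_xml)
    os_type = root.find("./os/type")
    if os_type is None:
        raise ValueError("domain XML has no <os><type> element")
    os_type.set("machine", machine)
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    # Trimmed, made-up domain definition for illustration only.
    sample = """<domain type='kvm'>
  <name>Windows 10</name>
  <os>
    <type arch='x86_64' machine='pc-q35-5.1'>hvm</type>
  </os>
</domain>"""
    sys.stdout.write(set_machine_type(sample, "pc-q35-4.2") + "\n")
```

For a VM that is already on i440fx, the equivalent target would be `pc-i440fx-4.2`. Moving between the q35 and i440fx families is not a one-attribute change, because the two chipsets use different PCI controller layouts.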

-

I'm still getting a crash/lock-up about every 3-5 days on rc2. I have no clue what's going on; I've tried so many things I'm out of ideas now!

-

Update!

I went to 6.9.0 rc1, updated the Nvidia drivers on both VMs and got to almost 9 days of uptime! Then I updated to rc2 and within 48 hours I had 2 lock-ups, so it's still plaguing me! 🤷‍♂️

I have just rebuilt the 2 VMs on new templates using q35-5.1 (they were on i440fx) with the new virtio drivers 0.1.190, so we will see if that makes any difference.

But in every lock-up it seems to be an IOMMU issue in my case.
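Because the same `__iommu_dma_unmap` warning shows up in every lock-up, a small script can list when it fired in a saved syslog, which makes it easier to line the warnings up against crash times. This is a minimal sketch assuming a syslog with one entry per line in the `Mon DD HH:MM:SS host kernel: message` layout of the traces here; the helper name `iommu_unmap_hits` is hypothetical.

```python
import re
import sys
from typing import Iterable, List

# Assumed syslog layout, one entry per line, e.g.
# "Dec 22 21:30:45 SKYNET-UR kernel: RIP: 0010:__iommu_dma_unmap+0x7a/0xe8"
LINE_RE = re.compile(r"^(\w{3}\s+\d{1,2} \d{2}:\d{2}:\d{2}) \S+ kernel: (.*)$")


def iommu_unmap_hits(lines: Iterable[str], symbol: str = "__iommu_dma_unmap") -> List[str]:
    """Return the timestamps of kernel lines whose message mentions `symbol`.

    Hypothetical helper for illustration. Timestamps stay as raw syslog
    strings because classic syslog lines carry no year. One warning names
    the symbol on both its WARNING and RIP lines, so consecutive duplicate
    timestamps are collapsed into one entry.
    """
    hits: List[str] = []
    for line in lines:
        match = LINE_RE.match(line.rstrip("\n"))
        if match and symbol in match.group(2):
            stamp = match.group(1)
            if not hits or hits[-1] != stamp:
                hits.append(stamp)
    return hits


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage (hypothetical file name): python iommu_hits.py /path/to/syslog
    with open(sys.argv[1], encoding="utf-8", errors="replace") as log:
        for stamp in iommu_unmap_hits(log):
            print(stamp)
```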

Dec 22 21:30:45 SKYNET-UR kernel: RIP: 0010:__iommu_dma_unmap+0x7a/0xe8
Dec 22 21:30:45 SKYNET-UR kernel: Code: 46 28 4c 8d 60 ff 48 8d 54 18 ff 49 21 ec 48 f7 d8 4c 29 e5 49 01 d4 49 21 c4 48 89 ee 4c 89 e2 e8 8f df ff ff 4c 39 e0 74 02 <0f> 0b 49 83 be 68 07 00 00 00 75 32 49 8b 45 08 48 8b 40 48 48 85
Dec 22 21:30:45 SKYNET-UR kernel: RSP: 0018:ffffc900018239f8 EFLAGS: 00010206
Dec 22 21:30:45 SKYNET-UR kernel: RAX: 0000000000002000 RBX: 0000000000001000 RCX: 0000000000000001
Dec 22 21:30:45 SKYNET-UR kernel: RDX: ffff888100066e20 RSI: ffffffffffffe000 RDI: 0000000000000009
Dec 22 21:30:45 SKYNET-UR kernel: RBP: 00000000fed7e000 R08: ffff888100066e20 R09: ffff8881596d6bf0
Dec 22 21:30:45 SKYNET-UR kernel: R10: 0000000000000009 R11: ffff888000000000 R12: 0000000000001000
Dec 22 21:30:45 SKYNET-UR kernel: R13: ffff888100066e10 R14: ffff88813da76000 R15: ffffffffa00e0640
Dec 22 21:30:45 SKYNET-UR kernel: FS: 000014ebd85ae740(0000) GS:ffff889fdd180000(0000) knlGS:0000000000000000
Dec 22 21:30:45 SKYNET-UR kernel: CS: 0010 DS: 0000 ES: 0000 CR0: 0000000080050033
Dec 22 21:30:45 SKYNET-UR kernel: CR2: 000014ebd8740425 CR3: 000000015c5a2000 CR4: 0000000000350ee0
Dec 22 21:30:45 SKYNET-UR kernel: Call Trace:
Dec 22 21:30:45 SKYNET-UR kernel: iommu_dma_free+0x1a/0x2b

-

Well, I updated my 2 Win10 VMs with the latest Nvidia drivers and haven't had a lock-up since. I got to almost 5 days, but now I've updated to RC1 and done a few other things, like putting the memory back to 2666MHz, changing some CPU pinning and swapping around some USB passthrough. So we will see how it goes 🤞

-

All seems good with the update, but I did get a call trace on boot. Everything seems to be running fine and it didn't lock up.

Dec 10 19:15:26 SKYNET-UR kernel: ------------[ cut here ]------------
Dec 10 19:15:26 SKYNET-UR kernel: WARNING: CPU: 3 PID: 7743 at drivers/iommu/dma-iommu.c:471 __iommu_dma_unmap+0x7a/0xe8
Dec 10 19:15:26 SKYNET-UR kernel: Modules linked in: nfsd lockd grace sunrpc md_mod nct6683 wireguard curve25519_x86_64 libcurve25519_generic libchacha20poly1305 chacha_x86_64 poly1305_x86_64 ip6_udp_tunnel udp_tunnel libblake2s blake2s_x86_64 libblake2s_generic libchacha bonding atlantic igb i2c_algo_bit r8169 realtek mxm_wmi wmi_bmof edac_mce_amd kvm_amd kvm crct10dif_pclmul crc32_pclmul crc32c_intel ghash_clmulni_intel aesni_intel crypto_simd cryptd btusb glue_helper btrtl btbcm rapl btintel r8125(O) ahci bluetooth libahci ecdh_generic ecc nvme i2c_piix4 nvme_core ccp k10temp i2c_core wmi button acpi_cpufreq [last unloaded: atlantic]
Dec 10 19:15:26 SKYNET-UR kernel: CPU: 3 PID: 7743 Comm: ethtool Tainted: G O 5.9.13-Unraid #1
Dec 10 19:15:26 SKYNET-UR kernel: Hardware name: To Be Filled By O.E.M. To Be Filled By O.E.M./TRX40 Creator, BIOS P1.70 05/29/2020
Dec 10 19:15:26 SKYNET-UR kernel: RIP: 0010:__iommu_dma_unmap+0x7a/0xe8
Dec 10 19:15:26 SKYNET-UR kernel: Code: 46 28 4c 8d 60 ff 48 8d 54 18 ff 49 21 ec 48 f7 d8 4c 29 e5 49 01 d4 49 21 c4 48 89 ee 4c 89 e2 e8 90 df ff ff 4c 39 e0 74 02 <0f> 0b 49 83 be 68 07 00 00 00 75 32 49 8b 45 08 48 8b 40 48 48 85
Dec 10 19:15:26 SKYNET-UR kernel: RSP: 0018:ffffc90001b7ba40 EFLAGS: 00010206
Dec 10 19:15:26 SKYNET-UR kernel: RAX: 0000000000002000 RBX: 0000000000001000 RCX: 0000000000000001
Dec 10 19:15:26 SKYNET-UR kernel: RDX: ffff889fd593e820 RSI: ffffffffffffe000 RDI: 0000000000000009
Dec 10 19:15:26 SKYNET-UR kernel: RBP: 00000000fed6e000 R08: ffff889fd593e820 R09: ffff889f7effdb70
Dec 10 19:15:26 SKYNET-UR kernel: R10: 0000000000000009 R11: ffff888000000000 R12: 0000000000001000
Dec 10 19:15:26 SKYNET-UR kernel: R13: ffff889fd593e810 R14: ffff889f99db6800 R15: ffffffffa012a600
Dec 10 19:15:26 SKYNET-UR kernel: FS: 000015297c8f9740(0000) GS:ffff889fdd0c0000(0000) knlGS:0000000000000000
Dec 10 19:15:26 SKYNET-UR kernel: CS: 0010 DS: 0000 ES: 0000 CR0: 0000000080050033
Dec 10 19:15:26 SKYNET-UR kernel: CR2: 000015297ca8b425 CR3: 0000001f7d09c000 CR4: 0000000000350ee0
Dec 10 19:15:26 SKYNET-UR kernel: Call Trace:
Dec 10 19:15:26 SKYNET-UR kernel: iommu_dma_free+0x1a/0x2b
Dec 10 19:15:26 SKYNET-UR kernel: aq_ptp_ring_free+0x31/0x60 [atlantic]
Dec 10 19:15:26 SKYNET-UR kernel: aq_nic_deinit+0x4e/0xa4 [atlantic]
Dec 10 19:15:26 SKYNET-UR kernel: aq_ndev_close+0x26/0x2d [atlantic]
Dec 10 19:15:26 SKYNET-UR kernel: __dev_close_many+0xa1/0xb5
Dec 10 19:15:26 SKYNET-UR kernel: dev_close_many+0x48/0xa6
Dec 10 19:15:26 SKYNET-UR kernel: dev_close+0x42/0x64
Dec 10 19:15:26 SKYNET-UR kernel: aq_set_ringparam+0x4c/0xc8 [atlantic]
Dec 10 19:15:26 SKYNET-UR kernel: ethnl_set_rings+0x202/0x258
Dec 10 19:15:26 SKYNET-UR kernel: genl_rcv_msg+0x1d9/0x251
Dec 10 19:15:26 SKYNET-UR kernel: ? genlmsg_multicast_allns+0xea/0xea
Dec 10 19:15:26 SKYNET-UR kernel: netlink_rcv_skb+0x7d/0xd1
Dec 10 19:15:26 SKYNET-UR kernel: genl_rcv+0x1f/0x2c
Dec 10 19:15:26 SKYNET-UR kernel: netlink_unicast+0x10c/0x19d
Dec 10 19:15:26 SKYNET-UR kernel: netlink_sendmsg+0x29d/0x2d3
Dec 10 19:15:26 SKYNET-UR kernel: sock_sendmsg_nosec+0x32/0x3c
Dec 10 19:15:26 SKYNET-UR kernel: __sys_sendto+0xce/0x109
Dec 10 19:15:26 SKYNET-UR kernel: ? exc_page_fault+0x351/0x37b
Dec 10 19:15:26 SKYNET-UR kernel: __x64_sys_sendto+0x20/0x23
Dec 10 19:15:26 SKYNET-UR kernel: do_syscall_64+0x5d/0x6a
Dec 10 19:15:26 SKYNET-UR kernel: entry_SYSCALL_64_after_hwframe+0x44/0xa9
Dec 10 19:15:26 SKYNET-UR kernel: RIP: 0033:0x15297ca13bc6
Dec 10 19:15:26 SKYNET-UR kernel: Code: d8 64 89 02 48 c7 c0 ff ff ff ff eb bc 0f 1f 80 00 00 00 00 41 89 ca 64 8b 04 25 18 00 00 00 85 c0 75 11 b8 2c 00 00 00 0f 05 <48> 3d 00 f0 ff ff 77 72 c3 90 55 48 83 ec 30 44 89 4c 24 2c 4c 89
Dec 10 19:15:26 SKYNET-UR kernel: RSP: 002b:00007fffc535d018 EFLAGS: 00000246 ORIG_RAX: 000000000000002c
Dec 10 19:15:26 SKYNET-UR kernel: RAX: ffffffffffffffda RBX: 00007fffc535d090 RCX: 000015297ca13bc6
Dec 10 19:15:26 SKYNET-UR kernel: RDX: 000000000000002c RSI: 000000000046f3a0 RDI: 0000000000000004
Dec 10 19:15:26 SKYNET-UR kernel: RBP: 000000000046f2a0 R08: 000015297cae41a0 R09: 000000000000000c
Dec 10 19:15:26 SKYNET-UR kernel: R10: 0000000000000000 R11: 0000000000000246 R12: 000000000046f340
Dec 10 19:15:26 SKYNET-UR kernel: R13: 000000000046f330 R14: 0000000000000000 R15: 000000000043504b
Dec 10 19:15:26 SKYNET-UR kernel: ---[ end trace 91c54fcae68e89eb ]---

-

I'm hitting the update button 🤞

-


45 minutes ago, almulder said:

Yes, BIOS is set to IOMMU and set to Enabled (not Auto).

However, I had not tried "VFIO allow unsafe interrupts" (I did not even notice that option), and now it seems to be working with both being passed through. I will test more once Windows is installed and then pass through graphics, but the NVMe was found without issue and is installing.

Thanks so much for this info.

Great news! Hopefully you'll be all good then. 👍

-


The last thing I can think of, if you're still getting nowhere, is to set "VFIO allow unsafe interrupts:" to Yes in VM Manager.

-

OK, what about the BIOS then, is it set for IOMMU? What motherboard is it?

-

4 hours ago, almulder said:

That will have no effect on the USB that needs to be passed through. This seems to be an issue with QEMU that needs to be fixed in the code. Looks like there is another user that dug into this. It just seems odd that other beta 35 users who do passthrough have not reported the issue. I am trying to create a VM and it keeps failing, and nobody else seems to speak up. I was hoping it was an easy fix, as I upgraded my server to also run a daily driver VM, but I am unable to set that up yet due to the errors coming up.

Still looking for a solution

The creation error in your first post is related to IOMMU group 14, which is the disk you're trying to pass through, is it not? So USB might be working, but it just doesn't get past the disk issue.

-

On 12/1/2020 at 5:58 PM, almulder said:

So does nobody else have issues passing devices through to a VM in beta 35? I really want to get this working so I can make the VM my daily driver and gaming setup. I need to pass through an NVMe that is group 14, group 19 for my USB, and then I have an RTX 2070 to pass through for graphics. I have tried passing through just group 14 with no luck (same error), and tried passing through just group 19, again the same error. I have not tried graphics yet, as I have heard it is best to wait until you get the VM up and working correctly.

Also, here is my system info.

You could pass it as a vdisk, or try passing it via Unassigned Devices via dev/mnt...

Also, are you editing an existing VM setup? If so, have you tried a new VM setup with what you want?

-

57 minutes ago, sittingmongoose said:

Any idea if @limetech has looked at this? It's been quite a while and I don't see any attempts to look at this problem. It's affecting beta 35 as well. Presumably this issue would carry forward into the next release as well...

Nothing new other than the C-states, typical current idle or memory speed tricks to try.

Yes, I'm on beta 35 now and still having issues! I'm leaning towards a kernel issue, or maybe the Nvidia drivers on the two 2070 Supers in my VMs. I've tried a lot of tweaks and am losing track now lol.

I have seen posts on other forums about bare-metal Ryzen and Linux rigs having lock-ups as well.

I'm hoping the 6.9.0 release will solve it, but who knows.

-

Update! Looking in my BIOS again, I had the power supply idle setting on "low current idle", so I've now set it to "typical current idle" and have almost 4 days of uptime so far! 🤞

Also, I did some logging of PSU usage just in case. The most I've seen while gaming on 2 VMs, each with a 2070 Super, was 760 watts, so I don't think I'm hitting the limit on the HX1000i. I am going to get a 1600i as soon as I can, but at £460 it's going to have to wait until the new year, as I will need a bigger UPS as well, another £700-1k 🤪
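The headroom reasoning above is simple arithmetic; this sketch makes it explicit by treating the logged 760 W peak as the load against each PSU's rated output. That is an assumption: it ignores how the logging tool measured the draw (at the wall or at the PSU output). The helper name `psu_headroom` is hypothetical.

```python
from typing import Tuple


def psu_headroom(peak_watts: float, rated_watts: float) -> Tuple[float, float]:
    """Return (peak load as a percentage of the rating, spare watts)."""
    return 100.0 * peak_watts / rated_watts, rated_watts - peak_watts


# 760 W is the highest draw logged while gaming on both VMs.
for rating in (1000, 1600):  # current HX1000i and the planned 1600i
    load, spare = psu_headroom(760, rating)
    print(f"{rating} W PSU: {load:.1f}% load, {spare:.0f} W spare")
```

This prints 76.0% load with 240 W spare for the 1000 W unit and 47.5% load with 840 W spare for a 1600 W unit.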

-

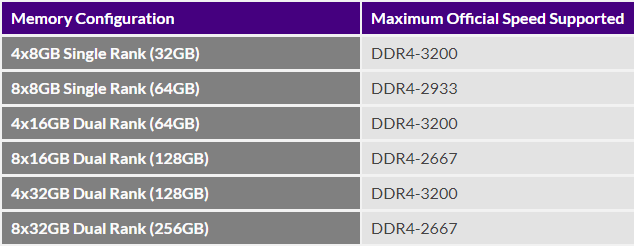

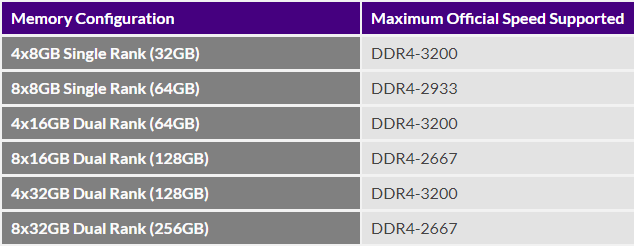

5 hours ago, trurl said:

What about the RAM recommendations at that link?

I've got 8x 16GB sticks running at 2133MHz, so well within spec. I was running them at 2666MHz, which is still within the suggested max. Dropping the RAM speed was one of the first things I did when I started getting issues, as well as running a memory test.

-

59 minutes ago, trurl said:

Have you seen this FAQ?

Yep, given it a go! I've disabled C-states and set power to typical current idle, but still no cigar 🚬🤪

-


38 minutes ago, sittingmongoose said:

I am having a similar issue. It started with beta 29; beta 25 was good. Beta 25 was the Nvidia build, with a Quadro P2000 and hardware acceleration in my Dockers. It never crashed.

Now on beta 29 and higher, I get crashes every few hours. I've been running for about 3 days now and I have had 12 crashes already. Completely unresponsive, can't access anything, I need to do a power cycle.

I'm using the Nvidia Driver on beta 35, but on beta 29 I was using the Nvidia build. Absolutely nothing in my logs... The server is pretty much useless now; I have no idea when it dies and don't get any notifications or anything. So the only way I know it's down is when someone messages me saying they can't access Plex.

Attaching Diagnostics and Enhanced Syslogs

Oh crap, I don't get it that many times a day. Revert back to a beta that worked for you. Mine's random: it can be 3-5 days, a day, or like 12 days; the longest uptime on beta 35 is 17 days, I think.

What is your server hardware?

[6.9.0 beta 30] Server hard lock up


in Prereleases


Well, I'm hoping I have finally sorted it! I've never had such a long uptime since it was built.

I found out that the 128GB of Corsair LPX 16GB DIMMs in the server have different version numbers, which correspond to different memory chips! Luckily I had more DIMMs in another machine, so I managed to put together a 128GB set with the same chips, and it looks like that has got me sorted at long last.

Link to the Reddit quote below about the version numbers.