tknx

Posts: 245


Posts posted by tknx


Sonoma 14.1.2 and Unraid 6.12.4

I feel like I am constantly battling just to get simple Finder access to my share. I have no idea why that is...

My user account is set to read/write. I connect through Command-K > smb://tower.local.

After that I can't copy files, delete files, or do anything else. When I do a Get Info, the lock icon appears.

I tried both with and without the nsmb.conf file suggested in the pinned thread.

-

@gevsan did you get this working? I tried the same variable setup as you, and I don't see an option to download as CBZ anywhere.

-

I started logging outside of Unraid to see what might be causing it to crash. I'm running 6.12.2 and seeing some instability.

I attached the log for the past day; it seems to have crashed right around midnight.

-

I came to look at my server and found this:

I tried turning the Docker service off and on again, and it said the Docker service failed to start.

Anyone know what is going on here?

-

I hit Stop Array and it is stuck at "Stopping". I already killed the nginx process that was stuck on /mnt/user.

This sort of thing happens ALL the time. I have never once successfully shut down an array without a hard power down. I would love to know why and have it work properly for once. It is hot today and I don't want to run a parity check in the heat.
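One plausible reason `umount /mnt/disk4` reports "target is busy" while `lsof | grep /mnt/disk4` prints nothing: the `mount | grep /mnt/disk4` output in this post shows `/mnt/disk4/system/docker/docker-xfs.img` loop-mounted on `/var/lib/docker`. A loop mount holds its backing file inside the kernel, so it typically doesn't show up as a process in `lsof`, yet the disk can't be unmounted while the image is still mounted. The minimal Python sketch below (`mounts_backed_by` is a made-up helper name) flags mounts whose *source* is a file under a given path. It assumes the `<source> on <target> type <fstype> (<options>)` format that `mount` prints and paths without spaces.

```python
import re
from typing import List, Tuple

# One line of `mount` output: "<source> on <target> type <fstype> (<options>)".
# Assumes paths contain no spaces.
MOUNT_LINE = re.compile(
    r"^(?P<source>\S+) on (?P<target>\S+) type (?P<fstype>\S+) \((?P<options>[^)]*)\)$"
)


def mounts_backed_by(path_prefix: str, mount_output: str) -> List[Tuple[str, str]]:
    """Return (source, target) pairs for mounts whose source is a file under path_prefix.

    Such mounts (e.g. a loop-mounted disk image) keep the filesystem that holds
    the file busy even when no process appears in lsof.
    """
    prefix = path_prefix.rstrip("/") + "/"
    hits = []
    for line in mount_output.splitlines():
        m = MOUNT_LINE.match(line.strip())
        if m and m.group("source").startswith(prefix):
            hits.append((m.group("source"), m.group("target")))
    return hits


# The two lines from `mount | grep /mnt/disk4` in this post.
sample = """\
/dev/md4p1 on /mnt/disk4 type xfs (rw,noatime,nouuid,attr2,inode64,logbufs=8,logbsize=32k,noquota)
/mnt/disk4/system/docker/docker-xfs.img on /var/lib/docker type xfs (rw,noatime,attr2,inode64,logbufs=8,logbsize=32k,noquota)
"""
print(mounts_backed_by("/mnt/disk4", sample))
# [('/mnt/disk4/system/docker/docker-xfs.img', '/var/lib/docker')]
```

If that is what's happening, stopping the Docker service (which should unmount `/var/lib/docker`) before stopping the array would be the first thing to try.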

    root@Tower:~# tail -f /var/log/syslog
    Jul 2 18:06:23 Tower emhttpd: Unmounting disks...
    Jul 2 18:06:23 Tower emhttpd: shcmd (3779): umount /mnt/disk4
    Jul 2 18:06:23 Tower root: umount: /mnt/disk4: target is busy.
    Jul 2 18:06:23 Tower emhttpd: shcmd (3779): exit status: 32
    Jul 2 18:06:23 Tower emhttpd: Retry unmounting disk share(s)...
    Jul 2 18:06:28 Tower emhttpd: Unmounting disks...
    Jul 2 18:06:28 Tower emhttpd: shcmd (3781): umount /mnt/disk4
    Jul 2 18:06:28 Tower root: umount: /mnt/disk4: target is busy.
    Jul 2 18:06:28 Tower emhttpd: shcmd (3781): exit status: 32
    Jul 2 18:06:28 Tower emhttpd: Retry unmounting disk share(s)...

    root@Tower:~# lsof | grep /mnt/disk4
    root@Tower:~#
    root@Tower:~# mount | grep /mnt/disk4
    /dev/md4p1 on /mnt/disk4 type xfs (rw,noatime,nouuid,attr2,inode64,logbufs=8,logbsize=32k,noquota)
    /mnt/disk4/system/docker/docker-xfs.img on /var/lib/docker type xfs (rw,noatime,attr2,inode64,logbufs=8,logbsize=32k,noquota)

-

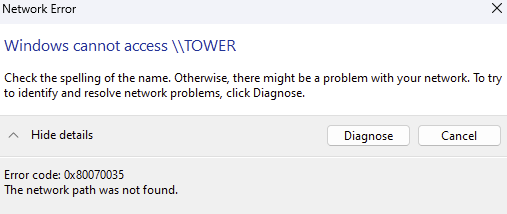

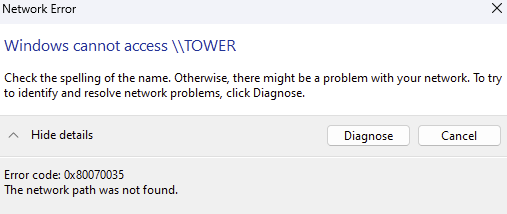

Well, it was working yesterday for me and now it isn't. A file server that can't serve files is useless and fighting with Windows is exhausting.

I enabled insecure SMB shares and SMBv1, with no joy: I'm still getting "network path not found" errors. But honestly, why should I have to enable deprecated features at all?

-

`rename` / `prename` would be nice.

-

I can't get the web interface to load. Can someone tell me what I did wrong here?

Settings are attached.

Logs show:

    s6-rc: info: service cron: starting
    s6-rc: info: service cron successfully started
    s6-rc: info: service _uid-gid-changer: starting
    s6-rc: info: service _uid-gid-changer successfully started
    s6-rc: info: service _startup: starting
    s6-rc: info: service _startup successfully started
    s6-rc: info: service pihole-FTL: starting
    s6-rc: info: service pihole-FTL successfully started
    s6-rc: info: service lighttpd: starting
    s6-rc: info: service lighttpd successfully started
    s6-rc: info: service _postFTL: starting
    s6-rc: info: service _postFTL successfully started
    s6-rc: info: service legacy-services: starting
    s6-rc: info: service legacy-services successfully started
    ServerIP is deprecated. Converting to FTLCONF_LOCAL_IPV4
    [i] Starting docker specific checks & setup for docker pihole/pihole
    [i] Setting capabilities on pihole-FTL where possible
    [i] Applying the following caps to pihole-FTL:
        * CAP_CHOWN
        * CAP_NET_BIND_SERVICE
        * CAP_NET_RAW
        * CAP_NET_ADMIN
    [i] Ensuring basic configuration by re-running select functions from basic-install.sh
    [i] Installing configs from /etc/.pihole...
    [i] Existing dnsmasq.conf found... it is not a Pi-hole file, leaving alone!
    [✓] Installed /etc/dnsmasq.d/01-pihole.conf
    [✓] Installed /etc/dnsmasq.d/06-rfc6761.conf
    [i] Installing latest logrotate script...
    [i] Existing logrotate file found. No changes made.
    [i] Assigning password defined by Environment Variable
    [✓] New password set
    [i] Added ENV to php:
        "TZ" => "America/Los_Angeles",
        "PIHOLE_DOCKER_TAG" => "",
        "PHP_ERROR_LOG" => "/var/log/lighttpd/error-pihole.log",
        "CORS_HOSTS" => "",
        "VIRTUAL_HOST" => "f611cac0d28a",
    [i] Using IPv4
    [i] Preexisting ad list /etc/pihole/adlists.list detected (exiting setup_blocklists early)
    [i] Setting DNS servers based on PIHOLE_DNS_ variable
    [i] Applying pihole-FTL.conf setting LOCAL_IPV4=192.168.1.24
    [i] FTL binding to custom interface: br0
    [i] Enabling Query Logging
    [i] Testing lighttpd config: Syntax OK
    [i] All config checks passed, cleared for startup ...
    [i] Docker start setup complete
    [i] pihole-FTL (no-daemon) will be started as root
    Checking if custom gravity.db is set in /etc/pihole/pihole-FTL.conf
    [i] Neutrino emissions detected...
    [✓] Pulling blocklist source list into range
    [✓] Preparing new gravity database
    [i] Using libz compression
    [i] Target: https://raw.githubusercontent.com/StevenBlack/hosts/master/hosts
    [✓] Status: Retrieval successful
    [i] Imported 182498 domains, ignoring 1 non-domain entries
        Sample of non-domain entries:
        - 0.0.0.0
    [i] List stayed unchanged
    [✓] Creating new gravity databases
    [✓] Storing downloaded domains in new gravity database
    [✓] Building tree
    [✓] Swapping databases
    [✓] The old database remains available.
    [i] Number of gravity domains: 182498 (182498 unique domains)
    [i] Number of exact blacklisted domains: 0
    [i] Number of regex blacklist filters: 0
    [i] Number of exact whitelisted domains: 0
    [i] Number of regex whitelist filters: 0
    [✓] Cleaning up stray matter
    [✓] FTL is listening on port 53
    [✓] UDP (IPv4)
    [✓] TCP (IPv4)
    [✓] UDP (IPv6)
    [✓] TCP (IPv6)
    [✓] Pi-hole blocking is enabled
    Pi-hole version is v5.16.2 (Latest: v5.16.2)
    AdminLTE version is v5.19 (Latest: v5.19)
    FTL version is v5.22 (Latest: v5.22)
    Container tag is: 2023.03.1

-

I am running zigbee2mqtt on my IoT network, and when I assign it a static IP there, the frontend port mapping doesn't work.

If I leave it on br0, then it works fine.

Anyone have any thoughts on why?

PS

@Thomas_H nice to see another loxone user!

-

itimpi said:

That is the part of Unraid that supports the User Share capability.

So that is a bug, right? That it didn’t kill the shares on shutdown?

-

OK, diagnosed it. Posting for posterity:

    lsof | grep /mnt/user

Got a random bash process:

    bash 21148 root cwd DIR 0,56 4096 648799827764686976 /mnt/user/media/anime-movies

    kill -9 21148

After that, it shut down. No idea what process that was; maybe an open shell session somewhere.
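`lsof | grep /mnt/user` finds culprits by checking what each process has open. A Linux-only Python sketch of a subset of that check (each process's working directory, root directory and open file descriptors; memory-mapped files are skipped) reads `/proc/<pid>/cwd`, `/proc/<pid>/root` and `/proc/<pid>/fd/*` directly. `processes_using` is a hypothetical helper name, not an Unraid tool, and it needs to run as root to see other users' processes. A shell left sitting in a share directory, like the bash process found here with its `cwd` under /mnt/user, is exactly what it catches.

```python
import os
from typing import List, Tuple


def processes_using(path: str) -> List[Tuple[int, str, str]]:
    """Return (pid, command, matched_path) for processes whose cwd, root,
    or open file descriptors resolve to `path` or something below it.

    Linux-only: reads /proc. Processes we lack permission to inspect are skipped.
    """
    target = os.path.realpath(path)
    results = []
    for entry in os.listdir("/proc"):
        if not entry.isdigit():
            continue  # skip non-process entries such as /proc/meminfo
        pid = int(entry)
        try:
            with open(f"/proc/{pid}/comm") as f:
                comm = f.read().strip()
        except OSError:
            continue  # process exited in the meantime
        links = [f"/proc/{pid}/cwd", f"/proc/{pid}/root"]
        try:
            links += [f"/proc/{pid}/fd/{fd}" for fd in os.listdir(f"/proc/{pid}/fd")]
        except OSError:
            pass  # fd list not readable without privileges
        for link in links:
            try:
                resolved = os.readlink(link)
            except OSError:
                continue  # fd closed or access denied
            if resolved == target or resolved.startswith(target + "/"):
                results.append((pid, comm, resolved))
                break  # one hit per process is enough
    return results


if __name__ == "__main__":
    for pid, comm, where in processes_using("/mnt/user"):
        print(pid, comm, where)
```

Once a PID shows up, trying a plain `kill <pid>` (SIGTERM) before `kill -9` gives the process a chance to exit cleanly.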

-

OK

So when I run:

    root@Tower:~# mount | grep "/mnt/user"

I get the following:

    shfs on /mnt/user type fuse.shfs (rw,nosuid,nodev,noatime,user_id=0,group_id=0,default_permissions,allow_other)

I have no idea what that means.
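Field by field, that line says: `shfs` (Unraid's user-share filesystem) is mounted on `/mnt/user`, its type `fuse.shfs` marks it as a FUSE filesystem, and the parenthesised list holds the mount options. `rw` is read-write, `nosuid` ignores set-user-ID bits, `nodev` stops device files from being interpreted, `noatime` skips access-time updates, `user_id=0,group_id=0` means the mount belongs to root, `default_permissions` has the kernel enforce normal file permissions, and `allow_other` lets users other than root access it. A small Python sketch (`parse_mount_line` is a made-up name) that splits such a line into those parts, assuming paths without spaces:

```python
import re
from typing import Dict, Union

# "<source> on <target> type <fstype> (<options>)", as printed by `mount`.
MOUNT_LINE = re.compile(r"^(\S+) on (\S+) type (\S+) \(([^)]*)\)$")


def parse_mount_line(line: str) -> Dict[str, object]:
    """Split one line of `mount` output into source, target, fstype and options."""
    m = MOUNT_LINE.match(line.strip())
    if m is None:
        raise ValueError(f"not a mount line: {line!r}")
    source, target, fstype, raw_opts = m.groups()
    options: Dict[str, Union[str, bool]] = {}
    for opt in raw_opts.split(","):
        key, sep, value = opt.partition("=")
        options[key] = value if sep else True  # flags like "rw" carry no value
    return {"source": source, "target": target, "fstype": fstype, "options": options}


info = parse_mount_line(
    "shfs on /mnt/user type fuse.shfs (rw,nosuid,nodev,noatime,"
    "user_id=0,group_id=0,default_permissions,allow_other)"
)
print(info["fstype"], info["options"]["user_id"], info["options"]["allow_other"])
# fuse.shfs 0 True
```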

-

I have seen a couple of threads about this, but no real answer.

My array can't be stopped. When I try, I get the following repeated in the console:

    Mar 13 10:25:35 Tower root: rmdir: failed to remove '/mnt/user': Device or resource busy
    Mar 13 10:25:35 Tower emhttpd: shcmd (370295): exit status: 1
    Mar 13 10:25:35 Tower emhttpd: shcmd (370297): /usr/local/sbin/update_cron
    Mar 13 10:25:35 Tower emhttpd: Retry unmounting user share(s)...
    Mar 13 10:25:40 Tower emhttpd: shcmd (370298): umount /mnt/user
    Mar 13 10:25:40 Tower root: umount: /mnt/user: target is busy.
    Mar 13 10:25:40 Tower emhttpd: shcmd (370298): exit status: 32
    Mar 13 10:25:40 Tower emhttpd: shcmd (370299): rmdir /mnt/user

Diagnostics attached.

My questions:

1. How do I shut down my system safely right now, ideally without needing a parity run?

2. How do I fix this on a permanent basis?

-

OK, I read through everything here, but am hesitant to do anything since some posts are quite old.

I'm running Windows 11 and Unraid 6.11.1. I can see my shares just fine, and they are set to public. I mapped them as a network drive. But despite having R/W access, I can't write to them; Windows says I need permission.

I cleared my credentials, unmapped and remapped the network drive, etc. No luck. The folder I want to write in is owned by nobody:users.
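Ownership by nobody:users is only half the picture; the permission bits decide whether the group can actually write. A minimal Python sketch to run on the server (the `describe` helper is hypothetical; pass it the folder's path) prints the mode string, owner:group, and whether the group write bit is set, much like a terse `ls -ld`. It assumes SMB users reach the folder as `nobody` or as members of `users`, which is typical on Unraid but not verified here, and it does not look at Samba's own share settings.

```python
import grp
import os
import pwd
import stat


def describe(path: str) -> str:
    """Return 'mode owner:group group-writable=...' for path."""
    st = os.stat(path)
    try:
        owner = pwd.getpwuid(st.st_uid).pw_name
    except KeyError:  # uid with no passwd entry
        owner = str(st.st_uid)
    try:
        group = grp.getgrgid(st.st_gid).gr_name
    except KeyError:  # gid with no group entry
        group = str(st.st_gid)
    group_writable = bool(st.st_mode & stat.S_IWGRP)
    return f"{stat.filemode(st.st_mode)} {owner}:{group} group-writable={group_writable}"


if __name__ == "__main__":
    import sys

    # Pass one or more share folders as arguments.
    for p in sys.argv[1:]:
        print(p, describe(p))
```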

-

OK, adding the extra setting worked. The Docker container should probably just be updated to include it, I suppose.

Also I am getting an error on the version update:

-

My Calibre container just shows either a white or a black screen, with no Calibre interface. I tried deleting appdata/calibre and reinstalling from scratch, without luck.

I tried private tabs, and both Chrome and Firefox. Anyone have any thoughts?

-

I found my server wasn't running, and the attached screen showed the above message. It looks like a kernel panic?

Anything I can do to identify the issue?

-

No. I have noticed that questions about networking issues rarely get answered; people just suggest deleting 'network.cfg' as the only solution.

-

I have my server set up on the default LAN (10.0.0.0/22) and the IoT VLAN (10.0.20.0/24).

When I go to the client list on my UniFi router, I only see the 10.0.20.86 entry for the VLAN. I don't see the 10.0.0.101 address it should have on the default LAN.

Any idea what is going on here?
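As a quick sanity check that the addressing itself isn't the problem, Python's standard `ipaddress` module confirms that 10.0.0.0/22 spans 10.0.0.0 to 10.0.3.255 (1,024 addresses), that 10.0.0.101 falls inside it, and that it doesn't overlap the 10.0.20.0/24 VLAN. This sketch only checks the subnet arithmetic from the post (`subnet_of` is a made-up helper); it says nothing about why the UniFi client list omits the address.

```python
import ipaddress

# Subnets from the post.
lan = ipaddress.ip_network("10.0.0.0/22")
iot = ipaddress.ip_network("10.0.20.0/24")


def subnet_of(address: str) -> str:
    """Name the subnet from the post that contains `address`, if any."""
    ip = ipaddress.ip_address(address)
    for name, net in (("default LAN", lan), ("IoT VLAN", iot)):
        if ip in net:
            return name
    return "neither"


print(lan.network_address, "-", lan.broadcast_address, f"({lan.num_addresses} addresses)")
# 10.0.0.0 - 10.0.3.255 (1024 addresses)
print(lan.overlaps(iot))
# False
print(subnet_of("10.0.0.101"), "/", subnet_of("10.0.20.86"))
# default LAN / IoT VLAN
```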

-

I was told elsewhere that to enable WireGuard access to VLANs, I needed to add their CIDR ranges to the AllowedIPs field. When I add 0.0.0.0/0 to "Peer allowed IPs:", it kills Unraid's connectivity.

Right now I have the default LAN on 10.0.0.0/22 and an IoT LAN on 10.0.20.0/24, as well as a guest LAN and a couple of other specialty ones. So what is the best way to enable VLAN access here?
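WireGuard's AllowedIPs doubles as a routing list: ranges listed for a peer are routed into the tunnel toward that peer, so 0.0.0.0/0 claims *all* IPv4 traffic, which would plausibly explain Unraid losing connectivity if that value ends up on the server side. The narrower approach is to list only the subnets VPN clients actually need. A minimal Python sketch (the `allowed_ips` helper is hypothetical) that validates and merges subnets into an AllowedIPs value; it uses only the two subnets given in the post, since the guest and specialty VLAN ranges aren't stated. Whether the result belongs in Unraid's "Peer allowed IPs" field or in the client's own config is an assumption worth checking against the generated peer config.

```python
import ipaddress
from typing import Iterable


def allowed_ips(subnets: Iterable[str]) -> str:
    """Build a WireGuard AllowedIPs value from explicit subnets instead of 0.0.0.0/0.

    strict=True rejects entries with host bits set (e.g. 10.0.0.1/22), and
    collapse_addresses merges overlapping or adjacent ranges. All entries must
    be the same IP version.
    """
    nets = [ipaddress.ip_network(s, strict=True) for s in subnets]
    return ", ".join(str(n) for n in ipaddress.collapse_addresses(nets))


# Default LAN and IoT VLAN from the post; other VLANs would be appended once known.
print(allowed_ips(["10.0.0.0/22", "10.0.20.0/24"]))
# 10.0.0.0/22, 10.0.20.0/24
```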

-

Sort of solved it: it was WireGuard.

I think adding 0.0.0.0/0 to the peer allowed IPs created the problem. I need to experiment with it more, since I want VPN clients to be able to access both of my VLANs.

-


It worked fine until I started adding Docker containers back. After I added Jellyfin, I could no longer connect, add any other containers, or ping.

So is it that particular config, or the Docker service itself?

-

Yeah, I will try copying the files over to my install as you suggest.

This is totally weird, right?

-

@JorgeB

I used a fresh install and can ping (although it seems slow). So it definitely feels like an Unraid problem, at least partially.

    PING cnn.com (151.101.131.5) 56(84) bytes of data.
    64 bytes from 151.101.131.5 (151.101.131.5): icmp_seq=1 ttl=56 time=2.58 ms
    64 bytes from 151.101.131.5 (151.101.131.5): icmp_seq=2 ttl=56 time=2.90 ms
    64 bytes from 151.101.131.5 (151.101.131.5): icmp_seq=3 ttl=56 time=2.77 ms
    64 bytes from 151.101.131.5 (151.101.131.5): icmp_seq=4 ttl=56 time=2.95 ms
    64 bytes from 151.101.131.5 (151.101.131.5): icmp_seq=5 ttl=56 time=2.65 ms
    ^C64 bytes from 151.101.131.5: icmp_seq=6 ttl=56 time=2.64 ms

    --- cnn.com ping statistics ---
    6 packets transmitted, 6 received, 0% packet loss, time 25026ms
    rtt min/avg/max/mdev = 2.580/2.749/2.954/0.139 ms
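On the "seems slow" part: the round-trip times are only about 2.7 ms, but the summary reports 25026 ms for 6 packets, roughly 5 seconds between echo requests instead of ping's default 1-second interval (a rough figure, since the total also includes the wait before ^C). One guess, not confirmed by anything here, is slow reverse-DNS lookups, which `ping -n` (numeric output, no name lookups) would rule in or out. A Python sketch (`ping_summary` is a made-up helper, assuming the iputils summary format shown above) that pulls those numbers out:

```python
import re
from typing import Dict

STATS = re.compile(r"(\d+) packets transmitted, (\d+) received,.*time (\d+)ms")
RTT = re.compile(r"rtt min/avg/max/mdev = ([\d.]+)/([\d.]+)/([\d.]+)/([\d.]+) ms")


def ping_summary(text: str) -> Dict[str, float]:
    """Extract totals and RTT figures from iputils-style ping summary lines."""
    stats, rtt = STATS.search(text), RTT.search(text)
    if stats is None or rtt is None:
        raise ValueError("no ping summary found")
    sent, received, elapsed_ms = (int(g) for g in stats.groups())
    rtt_min, rtt_avg, rtt_max, rtt_mdev = (float(g) for g in rtt.groups())
    return {
        "sent": sent,
        "received": received,
        "elapsed_ms": elapsed_ms,
        "rtt_min_ms": rtt_min,
        "rtt_avg_ms": rtt_avg,
        "rtt_max_ms": rtt_max,
        "rtt_mdev_ms": rtt_mdev,
        # Rough spacing between echo requests; includes any wait before Ctrl-C.
        "ms_per_interval": elapsed_ms / max(sent - 1, 1),
    }


summary = """--- cnn.com ping statistics ---
6 packets transmitted, 6 received, 0% packet loss, time 25026ms
rtt min/avg/max/mdev = 2.580/2.749/2.954/0.139 ms"""
print(ping_summary(summary)["ms_per_interval"])
# 5005.2
```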

-

[PLUG-IN] NerdTools (in Plugin Support)

Requesting that zoxide be added; it is life-changing.