sonofdbn

Members · Posts: 492


Everything posted by sonofdbn

-

Getting helium warning on non-helium HGST drive

sonofdbn replied to sonofdbn's topic in Storage Devices and Controllers

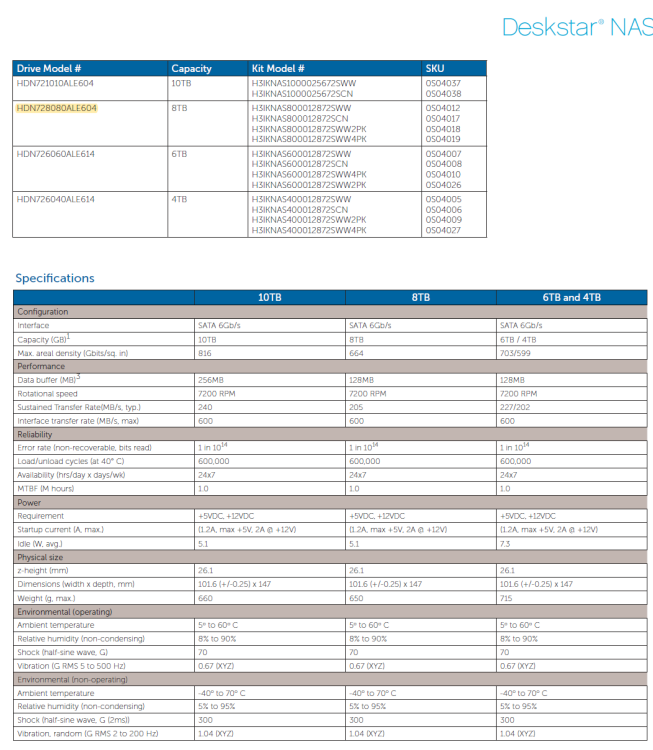

The drive is still in the array, so I can't see what the top looks like. But here's where I saw the model number in an HGST document. It's a Deskstar NAS, and there's no mention of helium anywhere. Casual Googling shows that most (all?) HGST helium drive model numbers start with HUH and are Ultrastars. Or perhaps I'm so far behind the tech that most modern drives are helium drives, so they don't bother mentioning it?

I have an HGST Deskstar NAS drive in my array. The model is HDN728080ALE604, and from what I can find, it's not a helium drive (and I don't recall ever buying a helium drive). But I'm getting this in my notification email from the server:

Subject: Warning [TOWER] - helium level (failing now) is 22

I ran a short SMART self-test and no error was reported. This is the third time I've seen the message (the number is going up: 7, 16, 22), but I have so far ignored it. The first was in February this year. Should I be concerned?
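If it helps to see exactly which attribute the drive itself is flagging (rather than relying on the wording of the notification), smartctl can print its attribute table as JSON with `smartctl -A -j /dev/sdX` (smartmontools 7.0 or later; `/dev/sdX` stands in for the actual device). Below is a minimal sketch, assuming that JSON layout: each entry in `ata_smart_attributes.table` carries `id`, `name`, `value`, `thresh`, `when_failed` and `raw`. The sample data is made up for illustration and is not from this drive.

```python
import json

# Made-up sample in the shape of `smartctl -A -j` output (not real data from any drive).
SAMPLE = json.dumps(
    {
        "ata_smart_attributes": {
            "table": [
                {"id": 5, "name": "Reallocated_Sector_Ct", "value": 100, "worst": 100,
                 "thresh": 5, "when_failed": "", "raw": {"value": 0, "string": "0"}},
                {"id": 200, "name": "Example_Attribute", "value": 10, "worst": 10,
                 "thresh": 25, "when_failed": "now", "raw": {"value": 10, "string": "10"}},
            ]
        }
    }
)


def failing_attributes(smartctl_json: str) -> list:
    """Return the SMART attributes that smartctl reports as failed, now or in the past."""
    data = json.loads(smartctl_json)
    table = data.get("ata_smart_attributes", {}).get("table", [])
    flagged = []
    for attr in table:
        # smartctl leaves when_failed empty unless the normalized value
        # (currently or at its worst) has reached the threshold.
        if attr.get("when_failed"):
            flagged.append({
                "id": attr["id"],
                "name": attr["name"],
                "value": attr["value"],
                "thresh": attr["thresh"],
                "raw": attr["raw"]["value"],
                "when_failed": attr["when_failed"],
            })
    return flagged


if __name__ == "__main__":
    for attr in failing_attributes(SAMPLE):
        print(attr)
```

On the server, the input would come from running smartctl itself, for example `subprocess.run(["smartctl", "-A", "-j", "/dev/sdX"], capture_output=True, text=True).stdout`; comparing the flagged attribute ID and raw value with what the notification says may show what unRAID is actually reading.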

-

Can't connect to custom network after unclean shutdown

sonofdbn replied to sonofdbn's topic in General Support

I tried deleting and then recreating proxynet, but that didn't help. Then I tried creating a new network, proxynet2. I assigned my Swag and Nextcloud containers to it and they seemed to be OK. Then I reassigned the containers back to proxynet and, lo and behold, they're now working. To tidy up, I deleted proxynet2. So far, so good. If things run fine for a few days, I'll mark this as solved.

I'm on 6.12.6. I ran into some problems that led to an unclean shutdown. I got some advice here on fixing them and followed those steps: I switched from macvlan to ipvlan, recreated the custom docker network (same name, "proxynet") and recreated the docker image. But now my docker containers (Swag and Nextcloud) aren't connecting to proxynet. Host access to custom networks is enabled, as is Preserve user defined networks.

Searching the forum, I found some cases of custom docker networks not working after an unclean shutdown, with the Host access to custom networks setting possibly shown as enabled when in fact it had not been enabled. The suggested solution was to disable and re-enable this setting, which seemed to work in some cases. I've tried that, also rebooted and tried it again, and I still have the same problem. Here's what I have:

    root@Tower:~# docker network ls
    NETWORK ID     NAME       DRIVER    SCOPE
    bd5116d783dc   br0        ipvlan    local
    a3b3aaa3ce56   bridge     bridge    local
    83cf0e1b1ef5   host       host      local
    9e74c89874cb   none       null      local
    69ed37939ece   proxynet   bridge    local
    root@Tower:~#

So it looks like proxynet is running?

There was also a suggestion that the problem was caused by a race condition, where the docker container tried to connect to the custom network before the network was up. I tested that as well by restarting Swag: same problem. I also tested by setting Swag autostart to off, disabling the docker service, re-enabling it, waiting a few minutes and then starting Swag. Still the same problem. Any suggestions on how to fix this?

tower-diagnostics-20240331-2100.zip
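`docker network ls` only shows that proxynet exists as a bridge network; it doesn't show which containers are actually attached to it. `docker network inspect proxynet` does: its JSON output includes a `Containers` map of the containers attached to that network. Below is a minimal sketch, assuming that output shape, that lists the attached container names so you can check whether Swag and Nextcloud are there; the sample data (IDs, addresses) is hypothetical.

```python
import json

# Hypothetical output of `docker network inspect proxynet` (IDs shortened and invented).
SAMPLE_INSPECT = json.dumps([
    {
        "Name": "proxynet",
        "Driver": "bridge",
        "Containers": {
            "3f2a9c": {"Name": "swag", "IPv4Address": "172.18.0.2/16"},
        },
    }
])


def attached_containers(inspect_output: str) -> dict:
    """Map each inspected network name to the sorted names of containers attached to it."""
    result = {}
    for network in json.loads(inspect_output):
        # Treat a missing or null "Containers" entry as "nothing attached".
        endpoints = network.get("Containers") or {}
        result[network["Name"]] = sorted(ep["Name"] for ep in endpoints.values())
    return result


def missing_from(network: str, expected: list, inspect_output: str) -> list:
    """Return the expected container names that are not attached to `network`."""
    attached = set(attached_containers(inspect_output).get(network, []))
    return [name for name in expected if name not in attached]


if __name__ == "__main__":
    print(missing_from("proxynet", ["swag", "nextcloud"], SAMPLE_INSPECT))  # ['nextcloud']
```

On the server, the input would be the text printed by `docker network inspect proxynet`; an empty result from `missing_from` means both containers are on the network, which narrows the problem to something else.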

-

I've switched to ipvlan, recreated the custom docker network (same name, "proxynet") and recreated the docker image. But now my docker containers (Swag and Nextcloud) aren't connecting to the custom network. Host access to custom networks is enabled, as is Preserve user defined networks. I'm sure I've done this before, so I think I'm missing some obvious step. Here's the result of docker network ls:

    root@Tower:~# docker network ls
    NETWORK ID     NAME       DRIVER    SCOPE
    16453c5dced9   br0        ipvlan    local
    7c5d56aee35f   bridge     bridge    local
    83cf0e1b1ef5   host       host      local
    9e74c89874cb   none       null      local
    69ed37939ece   proxynet   bridge    local

tower-diagnostics-20240331-1848.zip
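Each row of that listing is a network ID, name, driver and scope. A minimal sketch that parses the default `docker network ls` table (the sample is the output quoted in this post, minus the shell prompt); note that it can only confirm that proxynet is defined with the bridge driver, not that any container is attached to it.

```python
# The `docker network ls` output quoted in the post (shell prompt removed).
SAMPLE_LS = """\
NETWORK ID     NAME       DRIVER    SCOPE
16453c5dced9   br0        ipvlan    local
7c5d56aee35f   bridge     bridge    local
83cf0e1b1ef5   host       host      local
9e74c89874cb   none       null      local
69ed37939ece   proxynet   bridge    local
"""


def parse_network_ls(output: str) -> dict:
    """Turn the default `docker network ls` table into {name: {"id", "driver", "scope"}}."""
    networks = {}
    for line in output.splitlines():
        fields = line.split()
        # Skip blank lines and the "NETWORK ID  NAME  DRIVER  SCOPE" header row.
        if len(fields) != 4 or fields[0] == "NETWORK":
            continue
        net_id, name, driver, scope = fields
        networks[name] = {"id": net_id, "driver": driver, "scope": scope}
    return networks


if __name__ == "__main__":
    nets = parse_network_ls(SAMPLE_LS)
    print(nets["proxynet"])  # {'id': '69ed37939ece', 'driver': 'bridge', 'scope': 'local'}
```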

-

Thanks so much. Will carry out the fixes once the parity check has completed.

-

The GUI has become unresponsive at least twice more in the last month, resulting in an unclean shutdown each time. I'm not sure if it's the same problem, but I'd like to know if there's something obvious I should be doing. I'm still on 6.12.6. Diagnostics and syslog are attached. The syslog is lightly redacted; I hope I haven't removed anything pertinent. (\\IP address\Tower_repo is a Tailscale connection.) This time the GUI got stuck while updating the DuckDNS container: I saw an error message (unfortunately I can't remember what it was) and then the GUI gradually froze, with various tabs becoming unresponsive.

tower-diagnostics-20240329-1010.zip
syslog-192.168.1.14.log
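On redacting a syslog before posting: one way to avoid accidentally removing something pertinent is to mask only public IPv4 addresses and leave LAN addresses, which are often useful for diagnosis, intact. Below is a minimal sketch using Python's standard `ipaddress` module; the sample line is hypothetical, and anything else you consider sensitive (hostnames, share names and so on) would need its own handling.

```python
import ipaddress
import re

# Four dot-separated groups of 1-3 digits; validity is checked separately below.
IPV4_PATTERN = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def redact_ipv4(text: str, keep_private: bool = True, mask: str = "x.x.x.x") -> str:
    """Replace IPv4 addresses in `text` with `mask`, optionally keeping private (LAN) ones."""

    def _replace(match: re.Match) -> str:
        candidate = match.group(0)
        try:
            addr = ipaddress.ip_address(candidate)
        except ValueError:
            return candidate  # e.g. "999.1.2.3" is not a valid address; leave it alone
        if keep_private and addr.is_private:
            return candidate
        return mask

    return IPV4_PATTERN.sub(_replace, text)


if __name__ == "__main__":
    # Hypothetical syslog line, for illustration only.
    line = "Mar 29 10:05:01 Tower dnsmasq[1234]: using nameserver 8.8.8.8#53 via 192.168.1.1"
    print(redact_ipv4(line))
    # → Mar 29 10:05:01 Tower dnsmasq[1234]: using nameserver x.x.x.x#53 via 192.168.1.1
```

Three-part version strings such as 6.12.6 are left alone, but anything else that happens to look like a dotted quad would be masked, so a quick skim of the result is still worthwhile.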

-

New Unraid OS License Pricing, Timeline, and FAQs

sonofdbn replied to SpencerJ's topic in Announcements

Just want to confirm: we can still buy current (Legacy) licences at the prices shown before 27 March, but that deadline doesn't apply to activating the licence? In other words, could I buy the licence tomorrow, activate it next year and not pay anything more?

I'm with @Sissy on this. For a long time I used an adapter to run the flash drive off a USB header on the motherboard, so it was of course inside the case. I was quite happy until one day the flash drive died. Then I had to open up the server to replace the drive. Much as I love my Fractal Design R5, I find the glass side panel incredibly difficult to align (too much flex), and closing it up while the case is vertical involves a bit of non-techie thumping. (I could place the server on its side, where replacing the panel is a bit easier, but my SATA cables seem to be quite sensitive, so I didn't want to do that. Also, with 10 hard drives, it's not a trivial matter to move the server around.) I had to test a few times to find out whether the problem was with the flash drive or the adapter; I think in the end it was indeed a dying flash drive. But after that I thought I might as well just stick the flash drive into one of the USB ports on top of the case. At the very least there would be no worrying about whether the adapter was working. No one else comes near the server, and in my (untidy) situation there are so many other things that are more likely to be dislodged or knocked over (ethernet cables, UPS cables, network switches and their power cords, etc.).

One possible additional advantage of mounting the flash drive externally, admittedly not tested yet, is that it might be useful on a dual-boot server. On my other server, I have Win11 installed on an NVMe drive that is passed through to a VM. Usually unRAID runs on the server and I access Win11 via the VM. The BIOS boot order is unRAID first, then the Win11 drive. A week or so ago, I managed to mess up the VM (I think when I tried to run WSL2) and could only access Windows by booting from the NVMe drive directly. This meant fiddling with the BIOS to change the boot order so that I could boot directly into Windows, trying a Windows fix, and then resetting the boot order to go back to unRAID to test whether the VM was working. This had to be repeated every time the fix didn't work, and I ended up doing it a few times. I think that if I had had the flash drive mounted externally, I could simply have removed it, so that when the BIOS couldn't find it, it would boot from the next item, the Windows NVMe drive. After that I could just plug the flash drive in again to boot into unRAID. I haven't tested this because there hasn't been any reason to open up the case (another R5) to move the internally mounted flash drive. I'm sure there's a better alternative boot process involving GRUB or something similar, but I haven't looked into that.

-

Unable to get video output on VM passthrough

sonofdbn replied to Maxime Courtemanche's topic in VM Engine (KVM)

I can't help with this specific situation, but I have a Win 11 VM and an NVIDIA GPU, and I got passthrough working using the guide here: https://forums.unraid.net/topic/133563-gpu-passthrough-is-easy-heres-how/

Well, it so happens that I forgot to disable the syslog server after some past investigation, so I actually have a syslog. I've removed earlier entries and the lines after the reboot, but can add those back if needed. It looks like the crash was around 3.00 am on Feb 24.

syslog_1.log
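With a syslog in hand, narrowing it to the period around the crash makes it much easier to read. Below is a minimal sketch, assuming the traditional syslog timestamp at the start of each line (e.g. `Feb 24 03:00:12 Tower kernel: ...`), which carries no year; the year 2024 is taken from the diagnostics filename, the 30-minute window is arbitrary, and the sample lines are made up.

```python
from datetime import datetime, timedelta

# Made-up lines in traditional syslog format, for illustration only.
SAMPLE_LOG = [
    "Feb 24 02:10:00 Tower example: outside the window\n",
    "Feb 24 02:58:30 Tower example: inside the window\n",
    "Feb 24 03:20:00 Tower example: inside the window\n",
    "Feb 24 04:00:00 Tower example: outside the window\n",
    "continuation line without a timestamp\n",
]


def lines_near(lines, centre: datetime, minutes: int = 30, year: int = 2024):
    """Yield syslog lines whose timestamp falls within `minutes` of `centre`."""
    start = centre - timedelta(minutes=minutes)
    end = centre + timedelta(minutes=minutes)
    for line in lines:
        try:
            # The first 15 characters hold "Mon DD HH:MM:SS"; the year is supplied separately.
            stamp = datetime.strptime(f"{year} {line[:15]}", "%Y %b %d %H:%M:%S")
        except ValueError:
            continue  # lines without a leading timestamp are skipped
        if start <= stamp <= end:
            yield line


if __name__ == "__main__":
    for line in lines_near(SAMPLE_LOG, datetime(2024, 2, 24, 3, 0)):
        print(line, end="")
```

On the server you would pass an open file instead of the sample list, e.g. `lines_near(open("syslog_1.log"), datetime(2024, 2, 24, 3, 0))`; supplying the year explicitly also keeps dates like Feb 29 parseable.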

-

This morning I found my server (6.12.6) had hung: I couldn't access the GUI or the shares. IPMI showed an unRAID screen with a field for a user name, but I couldn't type anything into the field, even using the virtual keyboard. In the end I had to reboot, so there was the dreaded unclean shutdown, and now a parity check is running. I don't think I've done anything drastic. Over the last few weeks, while fiddling with docker container access, I switched between ipvlan and macvlan a couple of times, but the server has been running fine (on macvlan, without any further changes) for at least a week. I'm hoping someone can make sense of the diagnostics and tell me what caused the problem.

tower-diagnostics-20240224-0945.zip

-

Did I mention the great support in the forums? Now let me find something else to complain about.

-

I like saving money as much as anyone else, but where I am, the current Pro licence is roughly the cost of an 8TB hard drive. If I want to avoid subscription/extension fees and go for a new Pro/Lifetime licence, I'm guessing it will cost less than a 16TB hard drive (I currently have four in one array, two in another). Of course unRAID isn't perfect (I absolutely hate the clickfest Alerts/Warnings/Notices system in the GUI), not all my queries here get answered and I will never understand the difference between macvlans and ipvlans, but me and unRAID have come a long way since I wrestled with PATA drives and thought a 400GB drive was huge. I was going to say I'm not a fanboi, but actually I think I am 😀

-

Specifically re Firefox on Android on a tablet: when I use FF there is no tab bar, which I find makes it almost unusable. I did try an extension, but it could only display tabs at the bottom. Am I missing a setting? I'd love to be able to use FF on the tablet so that it can sync with my PC. I've seen occasional advice about not using FF with the unRAID GUI. Here's one I managed to dig up:

In practice I've been using FF almost all the time with no problems, but I've kept in mind that if there's an unRAID GUI-related issue, it might be a FF issue.

-

Today Firefox (latest version) decided to show black screens. Only the FF windows; Vivaldi is fine. It turns out I had to turn off hardware acceleration, which fixes it, but why? What's changed? Certainly not my hardware. And I haven't updated FF since last night, when it was working fine. But yes, the password savers/managers are great. If only FF didn't insist on positioning password options right over the dropdown box where I need to select something on the airline website. It's really ridiculous that I have to choose a browser depending on which website I want to go to. I exaggerate, of course, but only a little.

-

Did you ever look at Synology Photos? A long time ago I used the old DS Photo (?) from Synology. It wasn't a good experience, but at first glance Synology Photos looks quite nice. While I do think that Synology hardware is over-priced, if you can make good use of their software the price is much more palatable. unRAID is much more flexible of course, but as this thread shows, we could do with better apps in some areas.

-

@rheumatoid-programme6086 A bit late to this, but just wondering exactly what you did to run unRAID after you switched from the USB HDD DAS enclosure. You mention the 6x SATA adapter in the NVME slot, but since you're running a mini PC, where do the hard disks go? (I'm assuming the mini PC is one of those small ones that don't have any HDD slots, like this HP Elite Mini 600 G9 Desktop PC.)

-

I'm on 6.12.6 and am trying out virtual-dsm. It seems a little different from other docker containers: it's essentially DSM running in a VM, installed as a container. I've got it set up using custom network br0, which is a macvlan, but I don't know how to get Swag/Letsencrypt set up to allow me to reverse proxy to it. For example, if the container sits at 192.168.1.100, how do I get mydomain.duckdns.org to point to that IP address? Swag doesn't have a template for virtual-dsm, so I've tried various templates, but no joy.
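One point that may help: in a typical Swag setup the DuckDNS name doesn't point at 192.168.1.100 at all. It resolves to your public IP, the router forwards port 443 to Swag, and Swag (nginx) then forwards requests for a given name to the internal IP. Swag's bundled `*.subdomain.conf` samples follow a common pattern; below is a minimal sketch that renders a conf in that style for a container reached by IP. The subdomain `dsm`, port 5000 (assumed to be the virtual-dsm web UI port) and the `http` protocol are assumptions to check against your container. Swag also has to be able to reach 192.168.1.100 in the first place; with br0 on macvlan, the Host access to custom networks setting may come into play.

```python
def swag_proxy_conf(subdomain: str, upstream_app: str, upstream_port: int,
                    upstream_proto: str = "http") -> str:
    """Render an nginx server block in the style of Swag's *.subdomain.conf samples."""
    # Doubled braces are literal braces in an f-string; $upstream_* are nginx variables.
    return f"""server {{
    listen 443 ssl;
    listen [::]:443 ssl;

    server_name {subdomain}.*;

    include /config/nginx/ssl.conf;

    location / {{
        include /config/nginx/proxy.conf;
        include /config/nginx/resolver.conf;
        set $upstream_app {upstream_app};
        set $upstream_port {upstream_port};
        set $upstream_proto {upstream_proto};
        proxy_pass $upstream_proto://$upstream_app:$upstream_port;
    }}
}}
"""


if __name__ == "__main__":
    # Hypothetical values: subdomain "dsm", virtual-dsm at 192.168.1.100, web UI on port 5000.
    print(swag_proxy_conf("dsm", "192.168.1.100", 5000))
```

The printed text would go in Swag's `nginx/proxy-confs` folder alongside the bundled samples (e.g. as a hypothetical `dsm.subdomain.conf`), after which Swag needs a restart; `dsm.mydomain.duckdns.org` would also need to resolve to your public IP.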

-

I tried out btrfs on a cache pool some years back - maybe 5 years? Not sure if cache pool is the right term - basically I had two duplicate SSDs in a RAID-1 type arrangement - this was before unRAID allowed multiple cache pools, I think. I followed the official setup process, so no weird customisation involved. I ran into some problems with the filesystem and spent some time trying to fix it with help from this forum. I can't remember whether I was actually able to fix things, but whatever the outcome I do recall that my impression at the time was that it was just too risky for me to continue with btrfs. So I went back to a single cache drive on xfs and have never used btrfs since then. In fact I might have done what you did and reformatted the drives as xfs. (Unfortunately I can't find the thread now - but it's long outdated.) I do believe that btrfs has matured since then and should be better. And it does seem that lots of people use it quite happily. My understanding is that at one time if you wanted redundancy/RAID 1 type protection on any pool device (in effect duplicating the pool drive), you had to use btrfs; xfs didn't support this. Perhaps these days you can do it with zfs, but that's too complicated for me. For me, one important and sometimes overlooked feature of any data protection policy is that the user must have a high degree of confidence in the system. Whether this confidence is subjective, justified, etc. is in some ways immaterial. You want to be able to rest easy. I just couldn't trust btrfs after my experience with it, but I do accept that it's perfectly fine for others.

-

I'm finding it surprisingly difficult to settle on one browser to handle my needs, which I don't think are overly demanding. One thing I really want is vertical tabs: with current monitor aspect ratios I would much rather have my tabs use up the width than the height. Admittedly some of my other prejudices are largely subjective, but here's a brief summary:

Chrome: I don't entirely trust Google (and yes, my phone is an Android phone), but it's more the sneaky things they seem to try with privacy settings. The one thing Chrome is really good at is auto-translate. Other browsers do it, but not so seamlessly.

Edge: I can't abide the constant pushing of Edge and Microsoft services.

Firefox: My current main browser. Quite nice, but doesn't play nicely with unRAID sometimes (maybe this has changed?). Also, although it doesn't offer vertical tabs natively, the Tree Style Tab extension is good. It has the unfortunate quirk of occasionally opening a tab in a new window when I click on the tab (I don't know if it's the extension, Firefox or my mouse-clicking ability), so I end up having to drag the tab back to the original window. Not a big deal, but it happens often enough to be mildly annoying. Firefox also has this truly frustrating habit of popping up its password suggestions right over the part where you need to see or select an item. I travel reasonably frequently, and when I try to pay for my air ticket it throws up password suggestions right on top of the dropdown box where I have to choose a credit card, so I can't see any of the credit cards listed.

Vivaldi: Previously my go-to browser, largely because it has native vertical tabs. (It might be the only browser with this feature.) But over the past year or so there have been additions that seem to get in the way (like Workspaces), and I get odd behaviour. Right now it won't launch; I probably need to reboot my PC. When it was the default browser it wouldn't open links, and on some websites it won't download files. Sometimes tabs go missing from the tab bar, although they're still active. On the Vivaldi forums I always read that it must be because of extensions, but I don't think I have anything too weird: mainly uBlock Origin, Bitwarden and Google Translate. Vivaldi also has this annoying habit of resetting the address bar spacing and the search engine when it updates (there's no way I would have selected Bing as the default search engine).

Others:
- Opera: I used it way back when it was the new kid on the block, but when I looked at it recently it seemed to have some odd marketing gumph, and it didn't seem worth trying to work through it.
- Safari: does it still exist for Windows?
- DuckDuckGo: nice idea in terms of privacy, but I found it clunky and didn't pursue it further.
- Brave: never tried.

So currently I use Firefox on my main PC, with Vivaldi as a fallback (and for booking flights and unRAID). Annoyingly, I also have to use Edge because some corporate thing only seems to work with Edge. I also end up having to use Vivaldi on my tablet because Firefox for Android doesn't think people need tabs. So syncing my browsing is essentially impossible; I end up sending links to myself or via Joplin. I sort of miss the (perhaps not so) good old days when it was either Internet Explorer or Netscape Navigator.

I don't test out browsers frequently, so some of my points might be out of date. And I'm sure there must be ways of dealing with the various irritations, but it's rarely intuitive or easy. (For example, try adjusting the spacing on Vivaldi's address bar.) All of this makes me think that I might surrender to our Google overlords and just use Chrome on everything. Resistance is proving to be quite futile at the moment.

-

I managed to install a container (virtual-dsm) and it seems to be running fine. Now I'd like to set up a reverse proxy to the container via Swag. There's no prebuilt nginx config file, but I'm happy to fiddle around with this. Is there anything different about a docker container installed in the "normal" unRAID way (download from Community Apps and click install) vs a container installed via docker compose manager? Particularly anything that might affect what needs to be done for a reverse proxy? (I'm not well-versed in linux, docker containers or reverse proxying.)
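One difference that often matters for reverse proxying: Docker Compose, unless the compose file says otherwise, attaches a project's services to a network of its own (named `<project>_default`), whereas a Swag set up through the unRAID GUI sits on whatever network its template specifies (proxynet in the earlier posts). Swag can reach a container by name only if they share a user-defined network. Below is a minimal sketch, assuming the JSON shape of `docker inspect <container> ...` (a list of objects with `Name` and `NetworkSettings.Networks`), that reports which networks the inspected containers share; the container and network names in the sample are hypothetical.

```python
import json

# Hypothetical, heavily trimmed `docker inspect swag virtual-dsm` output.
SAMPLE_INSPECT = json.dumps([
    {"Name": "/swag", "NetworkSettings": {"Networks": {"proxynet": {}}}},
    {"Name": "/virtual-dsm", "NetworkSettings": {"Networks": {"vdsm_default": {}}}},
])


def networks_by_container(inspect_output: str) -> dict:
    """Map container name (without Docker's leading '/') to the set of its network names."""
    result = {}
    for container in json.loads(inspect_output):
        settings = container.get("NetworkSettings") or {}
        result[container["Name"].lstrip("/")] = set(settings.get("Networks") or {})
    return result


def shared_networks(inspect_output: str) -> set:
    """Return the networks that every inspected container is attached to."""
    network_sets = list(networks_by_container(inspect_output).values())
    return set.intersection(*network_sets) if network_sets else set()


if __name__ == "__main__":
    print(networks_by_container(SAMPLE_INSPECT))
    print(shared_networks(SAMPLE_INSPECT) or "no shared network")
```

If there is no shared network, the options are to add the compose service to proxynet (declared as an external network in the compose file) or to point Swag at the container's IP and port instead of its name.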

-

@Cirion Would be interested to hear your experiences with Immich if you go ahead. I tried out the docker a few months ago and it seemed quite good, but it missed a few photos when importing. Back then, and possibly still now, the author of the software warned that it was under heavy development, and I found that after an update some things were broken and significant reinstallation was needed. Since I need something stable and reliable for the family to use, I didn't continue with Immich. It does seem like a great solution, but I'm going to wait until it's a bit more settled.

-

Read errors - OK to replace with larger disk?

sonofdbn replied to sonofdbn's topic in General Support

I do have SMART warnings on 6 of the other 9 disks, but they are all UDMA CRC Error Count warnings. I suppose that sounds bad, but from what I've read, this type of error usually indicates a loose wiring connection, and the system has had to rely on this error checking (retrying the transfer) to ensure that the data being read/written is correct. (For casual readers, please don't assume this is correct; it's just my impression from reading.) Generally these error counts don't change unless I've opened up the case and replaced a hard disk, or perhaps during a parity check. So I assume vibration or movement has loosened something. But after a few warnings the error count doesn't increase. I've taken a look at the various SATA connectors and can't see anything obviously wrong. So now I just ignore the errors unless the error count increases consistently. (My server runs 24x7.) I think this might have happened once or twice, but not recently, and I think I "fixed" it by jiggling the SATA connectors or just swapping them.
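The "ignore it unless it keeps increasing" rule can be made concrete by logging the raw value of the UDMA CRC error count (SMART attribute 199) at each check and flagging only a sustained rise. A minimal sketch; the readings are made up, and requiring three consecutive increases is an arbitrary choice, not a recommended threshold.

```python
def rising_consistently(readings: list, consecutive: int = 3) -> bool:
    """True if the count increased in at least `consecutive` back-to-back checks."""
    run = 0
    for previous, current in zip(readings, readings[1:]):
        # Extend the streak on an increase; a flat or lower reading resets it.
        run = run + 1 if current > previous else 0
        if run >= consecutive:
            return True
    return False


if __name__ == "__main__":
    # Made-up raw values of attribute 199 from successive checks.
    print(rising_consistently([12, 12, 13, 13, 13]))  # False: a one-off bump
    print(rising_consistently([12, 13, 15, 18]))      # True: a steady climb
```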

I'd be interested to know if anyone else has Kohya running successfully in the docker. I ended up going back to running everything natively in my Win11 VM, largely because I don't know enough to go into the docker and do all the fiddling around that the various packages always seem to require. But while I can natively run Automatic1111 and ComfyUI, native (Windows) Kohya constantly gives me problems. I've only been able to run it successfully under Pinokio.