Posts posted by CHBMB

-

-

It's because you've installed a different PMS container, so Plex thinks you have two different servers.

-

1 minute ago, SJOWG said:

Thanks. I guess that's what I get for following that old YouTube video.

Is there an idiot's guide to converting?

Not that I'm aware of. And you haven't really done much in the way of setup, so it's probably as easy to nuke and pave.

-

As an aside, I know our container has some advantages that the LT version doesn't if you end up using hardware transcoding.

-

Just now, SJOWG said:

I'm sure you're telling me something important, but I'm not getting it. Are you saying I'm in the wrong thread? Or that I've configured my system incorrectly? Something else?

This is the support thread for the LinuxServer version of Plex, the run command you posted is for the LimeTech version of Plex.

Depending on how you look at it, you're either in the wrong thread (Use the LimeTech support thread) or you've configured your machine incorrectly (You should be using our version)

-

4 hours ago, leejbarker said:

Got my 1650 Super and prior to this release (of the Unraid / NVIDIA Drivers) I couldn't get it to pass through to my VM.

Since this driver release, the card should be supported by the NVIDIA driver. However, under the Info Panel, GPU Model and Bus it just shows as a Graphics Device. I know on my previous card, it had the model number etc.

Is something wrong here? I'm going to pull the card out tomorrow and try it in my baremetal windows PC, as it might be a bad card.

3 hours ago, leejbarker said:

Further to this, it does seem to be hardware transcoding fine!

2 hours ago, saarg said:

You don't need this build to pass through the card to a VM, and it doesn't have anything to do with what it is recognized as in Linux. Is it the system devices list you are talking about?

I think it's working fine now @saarg although the more I read it the more I'm getting confused.....

-

56 minutes ago, SJOWG said:

This is my command:

root@localhost:# /usr/local/emhttp/plugins/dynamix.docker.manager/scripts/docker run -d --name='PlexMediaServer' --net='host' --log-opt max-size='50m' --log-opt max-file='1' --privileged=true -e TZ="America/Los_Angeles" -e HOST_OS="Unraid" -v '/mnt/user/appdata/PlexMediaServer':'/config':'rw' 'limetech/plex'

e98fb6545baa9978e101367ab338143fdaf2d9eaca29d655b83f0eb3fe5286c5

More to the point, you're not even using the LinuxServer container, you're using 'limetech/plex'.
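A quick way to tell whose image a container runs is to look at the namespace part of its image reference. The sketch below is a hypothetical helper (`image_namespace` is a name made up here, not part of Docker) that extracts that part from a reference string. On a live system the reference could be read with `docker inspect --format '{{.Config.Image}}' PlexMediaServer`. The `linuxserver/plex` reference in the usage lines is an assumption about the LinuxServer image name, so check it against the container's own documentation.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the namespace (publisher) part of an image reference.
image_namespace() {
  local ref="$1"
  ref="${ref%@*}"                      # drop a digest, if present
  case "${ref##*/}" in                 # inspect the last path component only
    *:*) ref="${ref%:*}" ;;            # drop a tag such as :latest
  esac
  case "$ref" in
    */*) ref="${ref%/*}"; printf '%s\n' "${ref##*/}" ;;
    *)   printf '%s\n' "library" ;;    # bare names are Docker Hub official images
  esac
}

image_namespace 'limetech/plex'            # the image in the run command above
image_namespace 'linuxserver/plex:latest'  # assumed LinuxServer image name
```

If the first line printed is `limetech`, the container belongs in the LimeTech support thread rather than this one.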

-

6 hours ago, wreave said:

I can confirm the RTL8125 driver is working now. Thanks!

Great, thanks for letting me know

And thanks for all the kind words people!

-

This was an interesting one, builds completed and looked fine, but wouldn't boot, which was where the fun began.

Initially I thought it was just because we were still using GCC v8 and LT had moved to GCC v9, alas that wasn't the case.

After examining all the bits and watching the builds I tried to boot with all the Nvidia files but using a stock bzroot, which worked.

So then I tried to unpack and repack a stock bzroot, which also reproduced the error. Interestingly, the repackaged stock bzroot was about 15 MB bigger.

Asked LT if anything had changed, as we were still using the same commands as we were when I started this back in ~June 2018. Tom denied anything had changed their end recently. Just told us they were using xz --check=crc32 --x86 --lzma2=preset=9 to pack bzroot with.

So changed the packaging to use that for compression, still wouldn't work.

At one point I had a repack that worked, but when I tried a build again I couldn't reproduce it, which induced a lot of head scratching. I assumed my version control of the changes I was making must have been messed up, but damned if I could reproduce a working build. Both @bass_rock and I were trying to get something working with no luck.

Ended up going down a rabbit hole of analysing bzroot with binwalk, and became fairly confident that the microcode prepended to the bzroot file was good, and it must be the actual packaging of the root filesystem that was the error.

We focused in on the two relevant lines. The problem was that LT had given us the parameters to pack with, but xz receives its input from cpio, so that input can't be fully presumed to be good, and we still couldn't ascertain that the actual unpack was valid, although it looked to give us a complete root filesystem. Yesterday @bass_rock and I were both running "repack" tests on a stock bzroot to try and get that working, confident that if we could do that the issue would be solved. Him on one side of the pond and me on the other..... changing a parameter at a time and discussing it over Discord. Once again we managed to generate a working bzroot file, but when we tested the same script again it failed. Got to admit that confused the hell out of me.....

Had to go to the shops to pick up some stuff, which gave me a good hour in the car to think about things, and I had a thought: I did a lot of the initial repacking on my laptop rather than via an ssh connection to an Unraid VM, and I wondered if that may have been the reason I couldn't reproduce the working repack. Reason being, tab completion on my Ubuntu based laptop means I have to prepend any script with ./ whereas on Unraid I can just enter the first two letters of the script name and tab complete will work, and obviously I will always take the easiest option. I asked myself if the repack only worked when the script was run using ./ and perhaps I'd run it like that on the occasions it had worked.

Chatted to bass_rock about it and he kicked off a repackaging of a stock bzroot with --no-absolute-filenames removed from the cpio bit, and it worked. We can only assume something must have changed LT side at some point.

To put it into context, we've been using this cpio snippet since at least 2014/5, or whenever I started with the DVB builds.

The scripts to create a Nvidia build are over 800 lines long (not including the scripts we pull in from Slackbuilds) and we had to change 2 of them........

There are 89 core dependencies, which occasionally change, and an extra one being added or a version update to one of them breaks things.

I got a working Nvidia build last night and was testing it for 24 hours, then woke up to find, FML, Slackbuilds have updated the driver since. Have run a build again, and it boots in my VM. Need to test transcoding on bare metal but I can't do that as my daughter is watching a movie, so it'll have to wait until either she goes for a nap or the movie finishes.

Just thought I'd give some background for context. Please remember all the plugin and docker container authors on here do this in our free time. People like us, Squid, dlandon, bonienl et al put a huge amount of work in, and we do the best we can.

Quote

Where it at yo? 😈

Quote

Yes please. I am getting tired of the constant reminders to upgrade to RC7. Can't because my PLEX server will lose HW Transcoding.

Comments like this are not helpful, nor appreciated, so please read the above to find out, and get some insight into why you had to endure the "exhaustion" of constant reminders to upgrade to RC7.

On 11/27/2019 at 8:10 PM, leejbarker said:

Hi,

Completely understand you guys do this in your spare time and I really am thankful for your work so far.

I've got a 1650 super recently and I'm just wondering when we might see a driver update...

Thanks

On 11/29/2019 at 9:30 AM, the1poet said:

Hi aptalca. Appreciate the work the team does.

Comments like this are welcome and make me happy.....

EDIT: Tested and working, uploading soon.

-

Post your docker run command, link to this is in my signature, you may need to enable signatures on your forum setting.

I'm sorry, I'm a complete noob, and I'm completely lost. Please excuse my ignorance, egregious though it may be.

I'm trying to enable the Plex Media Server. I created a new Docker container, and walked through the (dated) video as best I can.

I used the unRaid cheat sheet at https://ronnieroller.com/unraid#setup-notes_plex-media-server to set the container path.

Plex runs, but it doesn't see my Media share. When I go to Add Library, and then Add Folder, I can get to /mnt, but it doesn't show any subfolders--I can't see /mnt/user, let alone any of the shares underneath that.

I read the unRaid Docker Guide at https://lime-technology.com/wp/docker-guide/, and frankly can't understand a damn thing it's saying.

If there's anything resembling a manual, or instructions, I'd greatly appreciate someone pointing me in the right direction. I'd gladly RTFM if I could find the FM.


-

My feeling is if that warning isn't enough, then nothing can save them........

-

On 11/26/2019 at 1:09 PM, wreave said:

The Realtek RTL8125 (driver added in 6.8.0-rc2) does not work on Nvidia Unraid 6.8.0-rc5.

I have a full break down of tests and diagnostics over on this thread. Any insight here would be appreciated as I really would like to be able to continue using the Nvidia builds.

The summary is that the RTL8125 works on both stock Unraid 6.8.0-rc5 and rc7 but it fails to work on Nvidia Unraid 6.8.0-rc5.

I think this is resolved, we just need to be able to make the actual Nvidia build work now. No ETA

-

See the post above yours, TVDB changed their API.

Same here.... not working any more..


-

2 hours ago, aistee235 said:

How can i update the nvidia driver to 440.36? my new 1650 Super arrived today

You can't and we don't control the upstream driver version either. So you will have to play a waiting game.

-

-

Yeah, I can see that, you offer it for half price maybe you get twice as many people buying. I don't work for LT, just a happy customer. Like I said, historically there haven't been BF sales, so I wouldn't hold your breath though.

I definitely partially agree with you. However, from a marketing point of view, I think it would also open the door to sales that otherwise won't happen and/or would happen but perhaps in the course of a year or two. Then it is a question of whether waiting that time was worth it to receive X that would have otherwise been the discount offered to receive it now. IMO.


-

6 minutes ago, XiuzSu said:

I would personally love to see a sale, at least just on upgrades.

Well, yeah, everyone who wants to purchase anything would like it cheaper, but historically Unraid doesn't normally do "sales". I think it's important to remember that LT is a small company, not a massive multinational, and they've got to put food on the table the same as the rest of us.

-

3 hours ago, gx240 said:

Ah, that's a shame. Has Unraid ever gone on sale at any other time, or is it just always the same price?

It's never been on sale as far as I know, although when I bought my license in 2011 the pricing was a little different with the option to buy a second reduced price key, to cover for a potential USB stick failure as online replacement keys weren't a thing back then.

I think the general consensus from the community when it's been discussed before is that Unraid represents good value for money at its usual price, and we have understood that has meant LT don't offer Black Friday deals.

-

rc7 not building with the same issue as rc6.

Waiting for some advice from LT

If I don't post anything else, it's cos I don't have anything to add, no need to ask.

-

4 hours ago, dave234ee said:

Hey guys, thanks for the great docker. Is there any way I can secure the webpage with the letsencrypt docker using a proxy config file?

You'd have to make your own proxy config file if we don't have one (I haven't checked), but in theory it should be possible. You don't need to for the VPN to work, though: only the VPN port needs forwarding, and the webui port can remain closed and only LAN accessible.

-

1 minute ago, MothyTim said:

Letsencrypt docker.

OK, so identical to me. Things that might be worth looking at, as they could potentially be different:

config.php

nextcloud reverse proxy conf

Nextcloud version (I'm on 17)

Default file

If you post I'll check, but I need a bit more to go on.

-

1 hour ago, MothyTim said:

Yes did that?

How are you reverse proxying it?

-

9 minutes ago, MothyTim said:

Hi, I have an issue with warnings and I'm stuck with one of them. I read back a few pages and found the fixes and ran some database commands, all good. Added trusted proxies to config.php and deleted the default file. Rebooted and had the following warnings.

So I edited the default file to add:

add_header Strict-Transport-Security "max-age=15768000; includeSubDomains; preload;";

And then the webpage won't load, I get 502 bad gateway?

My default file:

default.txt 3.41 kB · 0 downloads

Hopefully I done something daft?

Also I still have a big problem when people download files from the server with the docker image growing to 100%! It kills some of my other dockers!

Cheers,

Tim

For the warnings, delete the `default` file and it will be recreated on container restart. Alternatively, take a look at the version on GitHub.

-

10 hours ago, Fizzyade said:

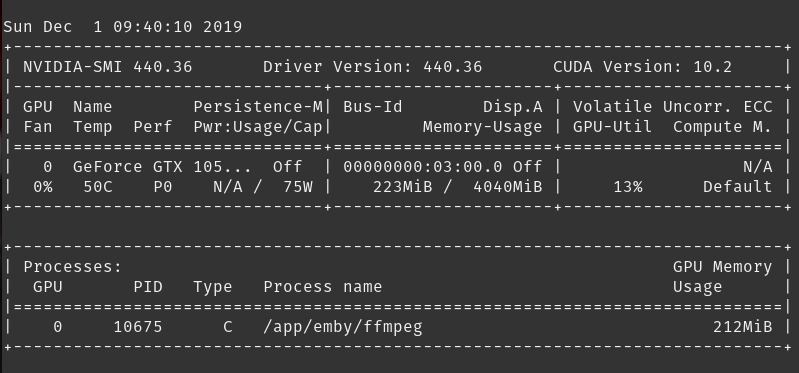

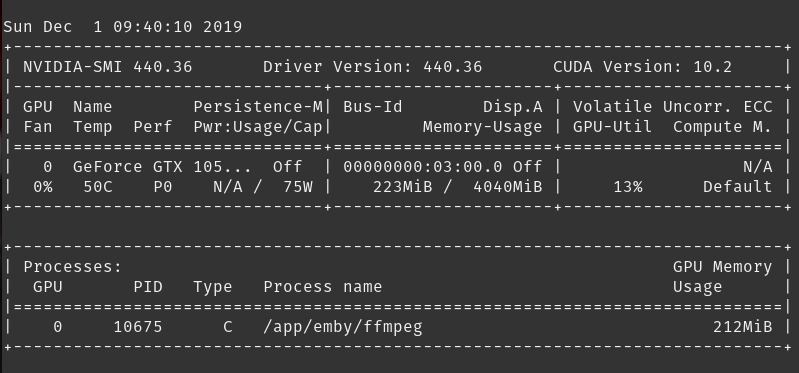

I installed a ffmpeg docker container which has a nvidia build of ffmpeg as the entrypoint, I added the docker socket to the tvheadend container and can quite happily run ffmpeg using hardware transcoding using pipe:0 for input and pipe:1 for output (docker exec -t ffmpeg <ffmpeg options> inside the tvheadend container), running nvidia-smi on the host shows ffmpeg using transcoding.

For the life of me though I can't get it to work by adding the ffmpeg command in tvheadend, nothing happens. There's no log file or anything either, so it makes it difficult to see what the actual problem is. I feel I'm close to having this working, but it's eluding me.

Edit:

got it working.

Basically I created a modified entry point and map that over the existing one, the entry point installs docker and sets the permissions on docker.sock so it's accessible by the uid that tvheadend runs under.

I created a ffmpeg.hw file which does a ffmpeg exec on the ffmpeg container, this is mounted into /usr/local/bin.

Then it's just a matter of creating a stream profile that uses the new ffmpeg passing in the appropriate nvdec nvenc parameters.

Verified that both hardware decoding of the incoming stream and hardware encoding of the output stream is happening.

Awesome. Now I can set about tweaking everything for optimum performance.

To recap, I now have tvheadend using nvidia hardware encoding/decoding.

That's the sort of thing you really should write up so others can benefit from it.
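As a starting point for such a write-up, the `ffmpeg.hw` wrapper described in the quoted post might look roughly like the sketch below. This is a guess at its shape, not Fizzyade's actual file. The container name `ffmpeg`, the `DOCKER_BIN` and `FFMPEG_CONTAINER` overrides, and the presence of an `ffmpeg` binary on the container's PATH are all assumptions. It also uses `docker exec -i` where the quoted post mentions `-t`, on the assumption that stdin has to be forwarded for `pipe:0` to receive the stream.

```shell
#!/usr/bin/env bash
# Hypothetical ffmpeg.hw wrapper: forward all arguments to ffmpeg running
# inside a separate container that has an nvidia build of ffmpeg.
ffmpeg_hw() {
  local docker_bin="${DOCKER_BIN:-docker}"        # override for a dry run
  local container="${FFMPEG_CONTAINER:-ffmpeg}"   # assumed container name
  # -i keeps stdin open so the incoming stream can be piped through.
  "$docker_bin" exec -i "$container" ffmpeg "$@"
}

# Dry run: with DOCKER_BIN=echo the command line is printed, not executed.
DOCKER_BIN=echo ffmpeg_hw -i pipe:0 -c:v h264_nvenc -f mpegts pipe:1
```

Saved as a file that ends with `ffmpeg_hw "$@"` and mounted into /usr/local/bin, this would be the command a tvheadend stream profile points at, provided the uid tvheadend runs under can reach docker.sock as described in the quoted post.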

-

3 hours ago, sittingmongoose said:

Any updates on this?

No, otherwise I'd have posted something here.....

2 hours ago, BRiT said:

Still doesn't build correctly. 🤣

Or so I assume until their new version shows up.

@BRiT You're like Sherlock Holmes

-
