bashNinja

Community Developer

Posts

32

Everything posted by bashNinja

-

[Support] bashNinja - Dockers; Starbound, Pritunl, Demonsaw, etc.

bashNinja replied to bashNinja's topic in Docker Containers

@CoZ There are some workarounds listed here: https://github.com/aldostools/webMAN-MOD/issues/333#issuecomment-703761014 The Unraid devs came back in that post and said it was a programming issue with webMAN-MOD, but I have concerns about that decision. I have moved my box over to FreeNAS and haven't looked back, so I won't be digging into this bug. If you want, you can go back to the webMAN devs and see what they say. Until then, the workaround is probably good enough. -

[Support] bashNinja - Dockers; Starbound, Pritunl, Demonsaw, etc.

bashNinja replied to bashNinja's topic in Docker Containers

@Snickers As far as I can tell, it is an error with Unraid's latest versions. I submitted a bug report back in May, but so far it has been ignored by the Unraid team. If you would like to use the PS3NETSERV application on Unraid, use a version of Unraid older than 6.8.0 or run it inside a VM. I've left it up on CA because there are users willing to stay on a pre-6.8.0 version to use this app. Personally, I am in the process of moving away from Unraid to another system. Having an Urgent bug report ignored for this long has lowered my confidence in this product and makes it hard for me to enjoy using it. -

[6.8.0 to 6.8.3] scandir d_type and stat errors

bashNinja commented on bashNinja's report in Stable Releases

I'm curious if there is any update on this bug report? Has it been received? Do you plan on fixing it? If not, I need to pull specific applications off the Community Applications and I need to move from UnRaid to another platform. I've upgraded the report to Urgent since it's a "show stopper" and makes the array unusable for certain applications. -

Background Info

I've been troubleshooting an application running in a docker container. I pulled it out of the docker container, ran it on bare metal, and am having the same issues. You can see my and others' troubleshooting here: https://github.com/aldostools/webMAN-MOD/issues/333

We started having issues with UnRaid 6.8.0 and higher. I narrowed it down to small `.cpp` files that highlight the issue.

Small Files: https://gist.github.com/miketweaver/92c61293f16ef3016f9a57472fff1ff3

Here is my filesystem:

    root@Tower:~# tree -L 3 /mnt/user/games/
    /mnt/user/games/
    └── GAMES/
        └── BLUS31606/
            ├── PS3_DISC.SFB
            ├── PS3_GAME/
            └── PS3_UPDATE/

    4 directories, 1 file

( / added by me to highlight what are dirs )

    root@Tower:~# tree -L 3 /mnt/disk*/games/
    /mnt/disk2/games/
    └── GAMES/
        └── BLUS31606/
            ├── PS3_DISC.SFB
            ├── PS3_GAME/
            └── PS3_UPDATE/
    /mnt/disk3/games/
    └── GAMES/
        └── BLUS31606
            └── PS3_GAME/

    7 directories, 1 file

( / added by me to highlight what are dirs )

As you can see, I have files spread across 2 disks.

1. Scandir Issue

When I compile `scandir.cpp` and run it on 6.7.2 (good), I get this output:

    PS3_UPDATE, d_type: DT_DIR
    PS3_GAME, d_type: DT_DIR
    PS3_DISC.SFB, d_type: DT_REG
    .., d_type: DT_DIR
    ., d_type: DT_DIR

When I take the same file and run it on 6.8.0 and higher (bad), I get this output:

    PS3_UPDATE, d_type: DT_UNKNOWN
    PS3_GAME, d_type: DT_UNKNOWN
    PS3_DISC.SFB, d_type: DT_UNKNOWN
    .., d_type: DT_UNKNOWN
    ., d_type: DT_UNKNOWN

It appears that the changes to SHFS in 6.8.0 mean that `d_type` is no longer supported with `shfs`.

2. Stat Issue

When I compile `stat.cpp` and run it on 6.7.2 (good), I get this output (every time):

    root@Tower:~# ./stat.o
    PS3_UPDATE,
    PS3_GAME,
    PS3_DISC.SFB,
    .., unknown is a Directory!
    ., unknown is a Directory!

When I take the same file and run it on 6.8.0 and higher (bad), I get this output, which varies every time I run it:

    root@Tower:~# ./stat.o
    PS3_UPDATE, unknown is a File!
    PS3_GAME, unknown is a File!
    PS3_DISC.SFB, unknown is a File!
    .., unknown is a Directory!
    ., unknown is a Directory!
    root@Tower:~# ./stat.o
    PS3_UPDATE,
    PS3_GAME,
    PS3_DISC.SFB,
    .., unknown is a Directory!
    ., unknown is a Directory!
    root@Tower:~# ./stat.o
    PS3_UPDATE,
    PS3_GAME,
    PS3_DISC.SFB,
    .., unknown is a Directory!
    ., unknown is a Directory!
    root@Tower:~# ./stat.o
    PS3_UPDATE, unknown is a FIFO!
    PS3_GAME, unknown is a FIFO!
    PS3_DISC.SFB, unknown is a FIFO!
    .., unknown is a Directory!
    ., unknown is a Directory!
    root@Tower:~# ./stat.o
    PS3_UPDATE, unknown is a CHR!
    PS3_GAME, unknown is a CHR!
    PS3_DISC.SFB, unknown is a CHR!
    .., unknown is a Directory!
    ., unknown is a Directory!

Please visit Tools/Diagnostics and attach the diagnostics.zip file to your post.

Plugins: I have many plugins, but I tested this in a virtualized unraid with 0 plugins and it has the same issue.

Bare Metal Only: Yes. This is bare metal.

tower-diagnostics-20200524-1139.zip

-

[Support] bashNinja - Dockers; Starbound, Pritunl, Demonsaw, etc.

bashNinja replied to bashNinja's topic in Docker Containers

@flinte Yes. That error is expected. It's saying it doesn't have a valid SSL certificate. You need to accept the certificate. I also updated pritunl to the latest, in the event it was another issue. -

[6.7.2] Can't login as root after changing password via Webgui

bashNinja commented on m4ck5h4ck's report in Stable Releases

I was able to reproduce this bug and had the same error. I'm on 6.7.2 -

[Support] bashNinja - Dockers; Starbound, Pritunl, Demonsaw, etc.

bashNinja replied to bashNinja's topic in Docker Containers

@gxs I have pushed an update. Please try now. -

[Support] bashNinja - Dockers; Starbound, Pritunl, Demonsaw, etc.

bashNinja replied to bashNinja's topic in Docker Containers

I don't use these variables in my container.... I'm not sure where you're getting them from. -

[Support] bashNinja - Dockers; Starbound, Pritunl, Demonsaw, etc.

bashNinja replied to bashNinja's topic in Docker Containers

The fact that you see pritunl generating keys and the like means that it's not picking up the config file. I'll see if I can get that fixed. Give me a bit. -

[Support] bashNinja - Dockers; Starbound, Pritunl, Demonsaw, etc.

bashNinja replied to bashNinja's topic in Docker Containers

@gxs Thank you. I haven't needed to build a new container in a while, so I hadn't noticed the change in the way pritunl gets the default user. I'll have to fix that, as I get the same error as you. What I don't get in my testing is the port binding issue in your logs: `panic: listen tcp 0.0.0.0:443: bind: address already in use` I'll take a look at this and see if I can get it fixed. -

[Support] bashNinja - Dockers; Starbound, Pritunl, Demonsaw, etc.

bashNinja replied to bashNinja's topic in Docker Containers

You'll need to post more specific information. This container still works for me and I have no issues. What do your logs say? -

[Support] bashNinja - Dockers; Starbound, Pritunl, Demonsaw, etc.

bashNinja replied to bashNinja's topic in Docker Containers

@nuhll Actually, this server will work with the Steam version. You'll just have to grab the files and put them in the mount point. Unfortunately, I don't own the Steam version, so I can't provide a guide or official support, but it *will* work, and there are other (non-Unraid) docker containers built specifically for the Steam version. -

[Support] bashNinja - Dockers; Starbound, Pritunl, Demonsaw, etc.

bashNinja replied to bashNinja's topic in Docker Containers

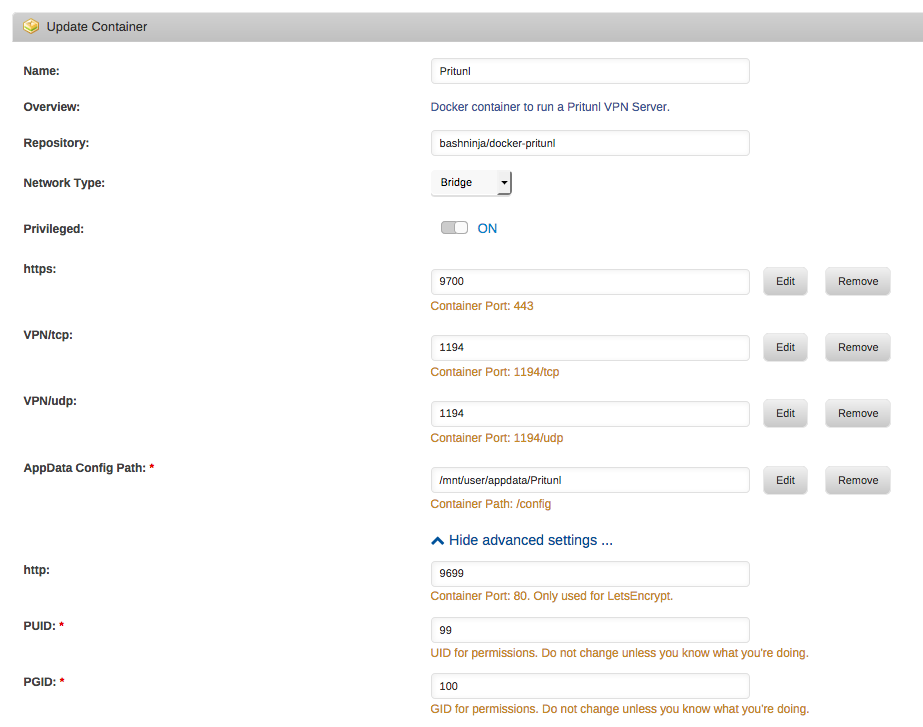

@hus2020 I have not tested this on 6.4 yet, as I haven't upgraded. But it's working as intended for a few other machines I've seen. That "No handlers could be found for logger" error appears for every user, but it still works just fine. You probably need to check your port mappings. Here's an example of mine: You don't need to add this docker manually, as it is in the community applications as a beta container. -

[Support] bashNinja - Dockers; Starbound, Pritunl, Demonsaw, etc.

bashNinja replied to bashNinja's topic in Docker Containers

Hey, I'm really sorry about that! Losing a save game really sucks.

From my understanding of Starbound this is how it works (this could be completely wrong):

* Character inventory and ship are saved on your local computer.
* Autosaving happens continuously. 3 backups are actually made and pushed out sequentially.

When I played it has always just saved itself and I never had to worry about it. Nonetheless, I'd love to make it more resilient to issues like yours. Give me a few days to dig into this.

Also, what cron job are you setting? I can set this within the docker container itself so that it takes effect for everyone that uses the container.

Edit: I did some quick digging. Yes, it looks like it saves the game files within the appdata folder. This is in the appdata/starbound/game/storage folder on my box.

    root@Tower:/mnt/user/appdata/starbound/game/storage# ls -alh
    total 20K
    drwxr-xr-x 1 root root 146 Oct 23 09:09 ./
    drwxr-xr-x 1 root root 60 Oct 23 09:08 ../
    -rw-r--r-- 1 root root 1.3K Oct 23 09:09 starbound_server.config
    -rw-r--r-- 1 root root 4.1K Oct 23 09:09 starbound_server.log
    -rw-r--r-- 1 root root 5.2K Oct 23 09:09 starbound_server.log.1
    drwxr-xr-x 1 root root 112 Oct 23 09:11 universe/
    root@Tower:/mnt/user/appdata/starbound/game/storage# ls universe/
    tempworlds.index universe.chunks universe.dat universe.lock

-

[Support] bashNinja - Dockers; Starbound, Pritunl, Demonsaw, etc.

bashNinja replied to bashNinja's topic in Docker Containers

Hey! I'm glad you got it working. This command might be better than your chown: `chown -R nobody:users /mnt/user/appdata/starbound` But, honestly, what you did was great! If you have any issues, let me know! -

[Support] bashNinja - Dockers; Starbound, Pritunl, Demonsaw, etc.

bashNinja replied to bashNinja's topic in Docker Containers

@silverdragon10 I went ahead and updated that. Demonsaw 4.0.2 should be working now! Sorry it took so long. It took a month to recover from Defcon... -

I would like to request that you enable TPM features within UnRAID. This will allow me to run secure and fully encrypted VMs without fear that a stolen system will leak important data.

Use case: Use TPM-enabled BitLocker for a Windows 10 UnRAID virtual machine.

Use case 2: At some future point, maybe UnRAID would like to run encrypted. This would allow a secure method of encrypting the filesystem.

In my specific case, I would like to enable BitLocker for a Windows 10 UnRAID VM.

1) Virtual machines in UnRAID use KVM. Source: http://lime-technology.com/unraid-6-virtualization-update/
2) TPM passthrough is possible in KVM. Source: http://wiki.qemu.org/Features/TPM
3) It's fairly simple to add TPM to the XML. Source: https://docs.fedoraproject.org/en-US/Fedora_Draft_Documentation/0.1/html/Virtualization_Deployment_and_Administration_Guide/section-libvirt-dom-xml-tpm-device.html

I have run this within my Proxmox server (KVM based) and it works quite well. Can you enable TPM support in UnRAID?

CONFIG_TCG_TPM=y

Thank you.

-

VNC Remote - Laggy, Slow and drops w/ 6.3

bashNinja replied to Tomahawk51's topic in VM Engine (KVM)

I'm having these very issues. It's really been causing me problems with the servers I have. -

This is an issue that's still around. Here's my post with the issue: https://lime-technology.com/forum/index.php?topic=48370.0 @jonathanm Browsing over http is not a viable method while logged into the forum. That opens me up to having my session hijacked by a MITM attack. In order to view the YouTube video I'd have to log out of my account, switch to http, and then browse to the post with the video.

-

I'm getting an error with reporting the statistics. I get an error code: 7. Version: 2016.12.21 Image attached.

-

unRAID 6 NerdPack - CLI tools (iftop, iotop, screen, kbd, etc.)

bashNinja replied to jonp's topic in Plugin Support

I'm not sure if this is the right location, but I'd like to request tcpdump in these tools. It's super useful for determining network issues. I've been installing it manually with installpkg and it seems to work great. Here's the package I've been using: https://pkgs.org/slackware-14.2/slackware-x86_64/tcpdump-4.7.4-x86_64-1.txz.html Be sure to get a recent version as the older version can't dump from virtual interfaces using Unraid's newer kernel. -

Thank you! Using @johnnie.black's suggestion I was able to repair my drive. For @BullDog656 or anyone else having this issue, here's how I solved it.

There's a great article in the wiki about repairing filesystems: https://lime-technology.com/wiki/index.php/Check_Disk_Filesystems

I started the array in maintenance mode and ran a test filesystem check using the GUI. You can see the results of that in the attached filesystem-check.txt. The highlight of that file was:

    Metadata corruption detected at xfs_agf block 0x1ffffffe1/0x200
    flfirst 118 in agf 4 too large (max = 118)

I then continued the guide and ran this command via SSH:

    xfs_repair -v /dev/md6

which didn't work and gave me the error:

    The filesystem has valuable metadata changes in a log which needs to be replayed. Mount the filesystem to replay the log, and unmount it before re-running xfs_repair.

This same error was addressed in another forum topic, https://lime-technology.com/forum/index.php?topic=52644.0, and the gist of it was to run xfs_repair with -L, hoping there wouldn't be any data loss. You can see the results of `xfs_repair -vL /dev/md6` in filesystem-repair.txt; it ran successfully.

I was then able to get the array up and running and I haven't noticed any data loss. Thanks for your help and the great wiki!

filesystem-check.txt filesystem-repair.txt

-

I'm having some issues getting the array working again with unraid.

Version: 6.2.4

I was doing an update to a plex docker container and the system hung. It never finished updating the container and the server became completely unresponsive. I came home a while later and rebooted the server, as it wasn't responding. It brings up the web UI just fine now, but whenever I start the array using the WebUI it hangs and never starts.

I'm still able to ssh to the device, so I was able to grab a syslog. I've also attached a picture of my drives. Around line 3165 of the syslog I see:

    Nov 14 19:36:32 Tower emhttp: err: shcmd: shcmd (205): exit status: -119
    Nov 14 19:36:32 Tower emhttp: mount error: No file system (-119)
    Nov 14 19:36:32 Tower emhttp: shcmd (206): umount /mnt/disk6 |& logger
    Nov 14 19:36:32 Tower root: umount: /mnt/disk6: not mounted

That makes it seem like drive 6 is failing? But why wouldn't the array just start with that drive in a 'failed' state?

Edit: SMART seems to report no errors with disk6. I attached the SMART test report.

syslog.txt smart-drive6.zip

-

BTSync is broken. The URL is no longer active. I've added the changes to update BTSync to Resilio and get it working again. https://github.com/limetech/dockerapp-sync/pull/1 For those looking for an immediate fix: https://hub.docker.com/r/bashninja/dockerapp-sync/ Note: I'm hoping this will be merged into the limetech docker, so I won't be keeping my version of the docker updated.