Everything posted by DZMM

Guide: How To Use Rclone To Mount Cloud Drives And Play Files

DZMM replied to DZMM's topic in Plugins and Apps

Post your script and rclone config please - remember to remove your passwords.

Guide: How To Use Rclone To Mount Cloud Drives And Play Files

DZMM replied to DZMM's topic in Plugins and Apps

You can use your current remote gdrive_media_vfs in the upload script if you want - it's just best practice to get another remote for uploading. It's easy. Just repeat what you did to create the remotes gdrive and gdrive_media_vfs - the only difference is that you create a different access token.

Guide: How To Use Rclone To Mount Cloud Drives And Play Files

DZMM replied to DZMM's topic in Plugins and Apps

No, just another pair of almost identical remotes, gdrive_upload and gdrive_upload_vfs, that point to the same gdrive but use a different access token.

Guide: How To Use Rclone To Mount Cloud Drives And Play Files

DZMM replied to DZMM's topic in Plugins and Apps

Much easier to post direct:

    14.10.2022 00:10:01 INFO: *** Rclone move selected. Files will be moved from /mnt/user/local/gdrive_vfs for gdrive_upload_vfs ***
    14.10.2022 00:10:01 INFO: *** Starting rclone_upload script for gdrive_upload_vfs ***
    14.10.2022 00:10:01 INFO: Script not running - proceeding.
    14.10.2022 00:10:01 INFO: Checking if rclone installed successfully.
    14.10.2022 00:10:01 INFO: rclone installed successfully - proceeding with upload.
    14.10.2022 00:10:01 INFO: Uploading using upload remote gdrive_upload_vfs
    14.10.2022 00:10:01 INFO: *** Using rclone move - will add --delete-empty-src-dirs to upload.
    2022/10/14 00:10:01 INFO : Starting bandwidth limiter at 12Mi Byte/s
    2022/10/14 00:10:01 INFO : Starting transaction limiter: max 8 transactions/s with burst 1
    2022/10/14 00:10:01 DEBUG : --min-age 15m0s to 2022-10-13 23:55:01.599038088 +0200 CEST m=-899.976808163
    2022/10/14 00:10:01 DEBUG : rclone: Version "v1.59.2" starting with parameters ["rcloneorig" "--config" "/boot/config/plugins/rclone/.rclone.conf" "move" "/mnt/user/local/gdrive_vfs" "gdrive_upload_vfs:" "--user-agent=gdrive_upload_vfs" "-vv" "--buffer-size" "512M" "--drive-chunk-size" "512M" "--tpslimit" "8" "--checkers" "8" "--transfers" "4" "--order-by" "modtime,ascending" "--min-age" "15m" "--exclude" "downloads/**" "--exclude" "*fuse_hidden*" "--exclude" "*_HIDDEN" "--exclude" ".recycle**" "--exclude" ".Recycle.Bin/**" "--exclude" "*.backup~*" "--exclude" "*.partial~*" "--drive-stop-on-upload-limit" "--bwlimit" "01:00,off 08:00,15M 16:00,12M" "--bind=" "--delete-empty-src-dirs"]
    2022/10/14 00:10:01 DEBUG : Creating backend with remote "/mnt/user/local/gdrive_vfs"
    2022/10/14 00:10:01 DEBUG : Using config file from "/boot/config/plugins/rclone/.rclone.conf"
    2022/10/14 00:10:01 DEBUG : Creating backend with remote "gdrive_upload_vfs:"
    2022/10/14 00:10:01 Failed to create file system for "gdrive_upload_vfs:": didn't find section in config file
    14.10.2022 00:10:01 INFO: Not utilising service accounts.
    14.10.2022 00:10:01 INFO: Script complete
    Script Finished Oct 14, 2022 00:10.01

Here's your problem:

    2022/10/14 00:10:01 DEBUG : Using config file from "/boot/config/plugins/rclone/.rclone.conf"
    2022/10/14 00:10:01 DEBUG : Creating backend with remote "gdrive_upload_vfs:"
    2022/10/14 00:10:01 Failed to create file system for "gdrive_upload_vfs:": didn't find section in config file

You don't have a gdrive_upload_vfs remote in your config. Create another remote with a new API key, or with a service account if you've set up teamdrives (recommended, as you will likely need teamdrives in the future). The upload uses a different remote and API key to try and reduce the risk of an API ban (very rare if you set things up correctly) affecting the mount responsible for playback.
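If you want to catch this before the upload runs, something like the sketch below could go at the top of a script. It only looks for a `[remote_name]` section header in the config file, which is what the "didn't find section in config file" error is complaining about. The function name `remote_in_config` is made up for this example and isn't part of the upload script; `rclone listremotes` will show you the same information by hand.

```shell
#!/bin/bash
# Sketch only: remote_in_config is a hypothetical helper, not part of the
# upload script from this thread.

# remote_in_config <remote_name> <config_file>
# Returns 0 if a "[remote_name]" section header exists in the file, 1 otherwise.
remote_in_config() {
    local remote="$1" config="$2"
    # An unreadable or missing config file counts as "remote not found"
    [ -r "$config" ] || return 1
    # -F: match the name literally, -x: match the whole line, -q: no output
    grep -qxF "[${remote}]" "$config"
}

# Example usage (remote name and config path taken from the log above):
# if ! remote_in_config "gdrive_upload_vfs" "/boot/config/plugins/rclone/.rclone.conf"; then
#     echo "gdrive_upload_vfs is missing - create it with 'rclone config' first" >&2
#     exit 1
# fi
```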

Guide: How To Use Rclone To Mount Cloud Drives And Play Files

DZMM replied to DZMM's topic in Plugins and Apps

Add them as extra paths to merge into your mergerfs mount, and then upload those paths to your gdrive:

    # OPTIONAL SETTINGS
    # Add extra paths to mergerfs mount in addition to LocalFilesShare
    LocalFilesShare2="/mnt/user/Series"
    LocalFilesShare3="/mnt/user/Movies"

Logs would help!
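To show roughly what those extra paths end up doing: mergerfs takes its source folders as one colon-separated list of branches. The sketch below joins the shares into that list. `build_branches` is a hypothetical helper, the rclone mount path is an assumed example, and skipping the value "ignore" is an assumption about how unused slots are marked - check the mount script itself for the real logic.

```shell
#!/bin/bash
# Sketch only: build_branches is a hypothetical helper, not the mount script's code.

# build_branches <path>...
# Joins the non-empty paths into a colon-separated mergerfs branch list.
build_branches() {
    local branches="" path
    for path in "$@"; do
        # Skip empty slots and (assumed) "ignore" placeholders
        if [ -z "$path" ] || [ "$path" = "ignore" ]; then
            continue
        fi
        if [ -z "$branches" ]; then
            branches="$path"
        else
            branches="${branches}:${path}"
        fi
    done
    printf '%s\n' "$branches"
}

LocalFilesShare="/mnt/user/local/gdrive_vfs"
LocalFilesShare2="/mnt/user/Series"
LocalFilesShare3="/mnt/user/Movies"
RcloneMount="/mnt/user/mount_rclone/gdrive_vfs"   # assumed path, adjust to yours

# Prints the branch list that would be handed to mergerfs
build_branches "$LocalFilesShare" "$LocalFilesShare2" "$LocalFilesShare3" "$RcloneMount"
```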

Guide: How To Use Rclone To Mount Cloud Drives And Play Files

DZMM replied to DZMM's topic in Plugins and Apps

Don't worry, everything is correct. The mergerfs folder merges the contents of the local and rclone mount into one folder. This is the folder you add to Plex, i.e. Plex doesn't know (or care) if the actual file is local or in the cloud. Your rclone_mount folder is empty because you haven't moved or uploaded any files to the cloud yet - you do this with the upload script. Once you do, files will move from the local folder to rclone_mount. But, because mergerfs merges files from both locations, to Plex, radarr, sonarr etc. the file hasn't moved, i.e. it is still visible in the mergerfs folder. In summary, just ignore the local and rclone_mount folders in normal day-to-day usage, just look at the mergerfs folder, and let the upload script do its thing in the background.

Guide: How To Use Rclone To Mount Cloud Drives And Play Files

DZMM replied to DZMM's topic in Plugins and Apps

Is anyone else having a problem (I think since 6.11) where files become briefly unavailable? I used to run my script via cron every couple of mins, but I've found over the last couple of days or so that the mount will be up but Plex etc. need manually restarting, i.e. I think the files became unavailable for such a short period of time that my script missed the event and didn't stop and restart my dockers. I'm wondering if there's a better way than looking for the mountcheck file, e.g. write a Plex script that sits alongside the current script and says "stop Plex if files become unavailable", and then rely on the existing script to restart Plex?
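A rough sketch of the kind of side script I mean, to run more often than the main mount script. It still checks for the mountcheck file, so it isn't the "better way" yet, just the stop-only half of the idea. `check_and_stop` is a made-up name and the mountcheck path is an assumed example.

```shell
#!/bin/bash
# Sketch only: check_and_stop is a hypothetical helper.

# check_and_stop <marker_file> <stop_command> [args...]
# Returns 0 if the marker file is present. Otherwise runs the stop command
# and returns 1 so the caller knows the mount looked unavailable.
check_and_stop() {
    local marker="$1"
    shift
    if [ -f "$marker" ]; then
        return 0
    fi
    echo "$(date '+%d.%m.%Y %T') WARN: ${marker} not found - running: $*" >&2
    "$@"
    return 1
}

# Example usage (assumed mountcheck path, container named plex):
# check_and_stop "/mnt/user/mount_rclone/gdrive_vfs/mountcheck" docker stop plex
```

Because the stop command is passed in as arguments, the same function can be pointed at any docker without editing it.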

Guide: How To Use Rclone To Mount Cloud Drives And Play Files

DZMM replied to DZMM's topic in Plugins and Apps

@Kaizac @Roudy Thanks. I rebooted to upgrade yesterday, and everything stopped working and I panicked when I saw NerdPack was incompatible. After rolling back, I think the problem was actually because of changes I'd made recently to my mount script - I'd added about 5 more tdrives to get some of my tdrives under 100K files used, but my backup tdrive was still at 400K and was messing up rclone and wouldn't mount properly. It was so messed up I couldn't even delete old backups to make room, so I just deleted it from gdrive and created a replacement tdrive. All seems ok, so I'm going to upgrade again once I've worked out how to replace the NerdPack elements I need for other non-rclone activities.

Guide: How To Use Rclone To Mount Cloud Drives And Play Files

DZMM replied to DZMM's topic in Plugins and Apps

Has anybody had any problems running the scripts on 6.11? Does the mergerfs install still work without NerdPack?

Guide: How To Use Rclone To Mount Cloud Drives And Play Files

DZMM replied to DZMM's topic in Plugins and Apps

Are you using the scripts in this thread? It sounds like you're trying to do something different with rclone, which probably should be posted somewhere else.

Guide: How To Use Rclone To Mount Cloud Drives And Play Files

DZMM replied to DZMM's topic in Plugins and Apps

How stable is your connection? The mount can occasionally drop and the script is designed to stop Dockers, test, and re-mount. I'm convinced mine's only done this about 3 times though.

Guide: How To Use Rclone To Mount Cloud Drives And Play Files

DZMM replied to DZMM's topic in Plugins and Apps

Sharing metadata sounds dangerous and a bad idea, and I think they'd need to share the same database, which would be an even worse idea. I'd try and solve the root cause and see what's driving the API ban, as I've had like 2 in 5 years or so, e.g. could you maybe schedule Bazarr to only run during certain hours? Or only give it 1 CPU so it runs slowly? I don't use Bazarr but I think I'm going to start, as sometimes in shows I can't make out the dialogue, or e.g. there's a bit in another language and I need the subtitles, but I've no idea how Plex sees the files. I might send you a few DMs if I get stuck.
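As a rough idea of the "only run during certain hours" option: a small script run from cron every hour could start or stop the docker depending on the time. I don't run Bazarr, so treat this as a sketch - `in_window` is a made-up helper, the container name bazarr and the 01:00-07:00 window are just examples.

```shell
#!/bin/bash
# Sketch only: in_window is a hypothetical helper; the window is an example.

# in_window <hour> <start_hour> <end_hour>
# Returns 0 if hour is inside [start, end). Handles windows that wrap past midnight.
in_window() {
    local hour="${1#0}" start="$2" end="$3"
    hour="${hour:-0}"   # "0" or "00" would otherwise end up empty or zero-padded
    if [ "$start" -le "$end" ]; then
        [ "$hour" -ge "$start" ] && [ "$hour" -lt "$end" ]
    else
        # Window wraps past midnight, e.g. 22 -> 6
        [ "$hour" -ge "$start" ] || [ "$hour" -lt "$end" ]
    fi
}

# Example usage from an hourly cron job (container name is an assumption):
# if in_window "$(date +%H)" 1 7; then
#     docker start bazarr
# else
#     docker stop bazarr
# fi
#
# The "give it 1 CPU" option is a one-off command:
# docker update --cpus 1 bazarr
```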

Guide: How To Use Rclone To Mount Cloud Drives And Play Files

DZMM replied to DZMM's topic in Plugins and Apps

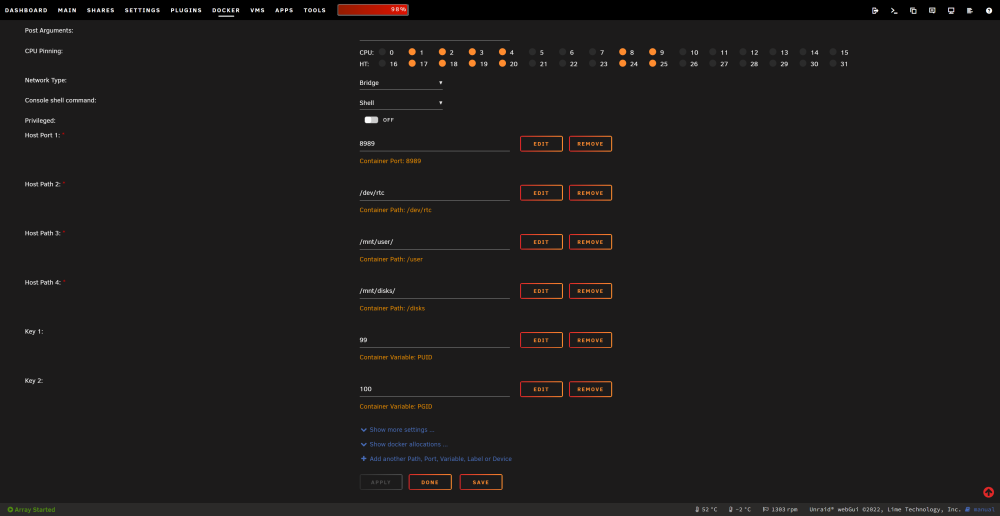

IMO you can't go wrong with using /user and /disks - at least for your dockers that have to talk to each other. I think the background to Dockers is they were setup as a good way to ringfence access and data. However, we want certain dockers to talk to each other easily! Life gets so much easier settings paths within docker WEBGUIs when your media mappings look like my Sonarr mappings below: -

Guide: How To Use Rclone To Mount Cloud Drives And Play Files

DZMM replied to DZMM's topic in Plugins and Apps

"disappear" - when and where? I'm guessing that you're not starting the dockers after the mount is active.

Guide: How To Use Rclone To Mount Cloud Drives And Play Files

DZMM replied to DZMM's topic in Plugins and Apps

Easily fixed in Plex (a bit harder in Sonarr). I've covered it somewhere in this thread in more detail, but the gist of the change is:

1. Add new /mnt/user/movies paths to Plex in the app (after adding the path to the docker, of course)
2. Scan all your libraries to find all your new paths
3. ONLY when the scan has completed, delete the old paths from the libraries
4. Empty trash

This only works if you don't have the setting to auto delete missing files on.

Sonarr is a bit of a pain as it tends to crash a lot for big libraries, so do backups!

1. Add new paths to docker and then app
2. Turn off download clients so it doesn't try to replace files
3. Go to manage libraries and change location of folders to new location. Decline move files to new location
4. Do a full scan and it'll scan the new paths and find the existing files
5. Turn download clients back on

Works well if your files are all named nicely. https://trash-guides.info/Sonarr/Sonarr-recommended-naming-scheme/

Constantly High CPU resources after power cut + Slow VM (Diagnostics)

DZMM replied to DZMM's topic in General Support

Thanks. I left it going overnight and it seems ok this morning. The VM is working well now and I'm frantically catching up on work.

I had a power cut this afternoon and after rebooting my server is running constantly at 90% CPU usage + my main W11 VM is ultra-slow (prob because of the CPU usage). Is there anything in my diagnostics that indicates what's wrong and how to fix? All help appreciated as I need my VM for work as I'm about 50% as effective on my laptop. highlander-diagnostics-20220830-2223.zip

Guide: How To Use Rclone To Mount Cloud Drives And Play Files

DZMM replied to DZMM's topic in Plugins and Apps

Yes, seedbox ---> gdrive, and the unraid server organises, renames, deletes files etc. on gdrive - no use of local bandwidth or storage except to play files as normal. One day I might move Plex to a cloud server, but that's one for the future (or maybe sooner than expected if electricity prices keep going up!)

Guide: How To Use Rclone To Mount Cloud Drives And Play Files

DZMM replied to DZMM's topic in Plugins and Apps

I agree. The only "hard" bits on other systems are installing rclone and mergerfs. But once you've done that, the scripts should work on pretty much any platform if you change the paths and can set up a cron job. E.g. I now use a seedbox to do my nzbget and rutorrent downloads and I then move completed files to google drive using my rclone scripts, with *arr running locally sending jobs to the seedbox and then managing the completed files on gdrive, i.e. I don't really have a "local" anymore as no files exist locally, but the scripts can still handle this.

Guide: How To Use Rclone To Mount Cloud Drives And Play Files

DZMM replied to DZMM's topic in Plugins and Apps

I'm not sure. I don't think so - I'm investigating.

Guide: How To Use Rclone To Mount Cloud Drives And Play Files

DZMM replied to DZMM's topic in Plugins and Apps

Is anyone who is using rotating service accounts getting slow upload speeds? Mine have dropped to around 100 KiB/s even if I rotate accounts....

Guide: How To Use Rclone To Mount Cloud Drives And Play Files

DZMM replied to DZMM's topic in Plugins and Apps

Interesting - I've noticed one of my mounts slowing, but not my main ones. But I haven't watched much the last couple of months as work has been hectic.

Guide: How To Use Rclone To Mount Cloud Drives And Play Files

DZMM replied to DZMM's topic in Plugins and Apps

I would, however, recommend that if you are going to be uploading a lot, you create multiple tdrives to avoid running into future performance issues - you're halfway there with the multiple mounts. I've covered doing this before in other posts, but roughly what you do is:

- create a rclone mount, but not a mergerfs mount, for each tdrive
- create one mergerfs mount that combines all the rclone mounts
- create one rclone upload mount that moves all the files to one of your tdrives
- run another rclone script to move the files server side from the single tdrive to their right tdrive, e.g. if you uploaded all your files to tdrive_movies, then move tdrive_movies/TV to tdrive_tv/TV

Note - you can only do this if all mounts use the same encryption passwords.
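The last step above, sketched as a small script. The remote names tdrive_movies and tdrive_tv are the examples from this post. `server_side_move` is a made-up wrapper that only prints the command unless you set DRY_RUN=0. `--drive-server-side-across-configs` is the rclone drive flag that allows server-side moves between two differently configured drive remotes, so check it exists in your rclone version before relying on it.

```shell
#!/bin/bash
# Sketch only: server_side_move is a hypothetical wrapper around rclone move.

# server_side_move <src_remote:path> <dst_remote:path>
# Prints the rclone command by default; set DRY_RUN=0 to actually run it.
server_side_move() {
    local src="$1" dst="$2"
    # Rebuild the positional parameters as the full rclone command line
    set -- rclone move "$src" "$dst" \
        --drive-server-side-across-configs \
        --delete-empty-src-dirs \
        -v
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Move the TV folder that was uploaded to tdrive_movies over to tdrive_tv
server_side_move "tdrive_movies:TV" "tdrive_tv:TV"
```

Printing first makes it easy to eyeball the source and destination before letting it loose on a big library.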

Guide: How To Use Rclone To Mount Cloud Drives And Play Files

DZMM replied to DZMM's topic in Plugins and Apps

1. If all your mounts are folders in the same team drive, I would have one upload remote that moves everything to the tdrive. Because rclone is doing the move, "it knows", so each rclone mount knows straightaway there are new files in the mount, so mergerfs is also happy.
2. Problem goes away.
3. Yes, you need unique combos.
4. They are different.

[Support] Linuxserver.io - Plex Media Server

DZMM replied to linuxserver.io's topic in Docker Containers

Has anyone else been having problems with Plex over the last couple of weeks where it crashes overnight, and the docker can't be stopped or killed? I'm having to reboot every couple of days because of this. Or, are there other commands I can use instead of docker stop plex and docker kill plex?