BasWeg

-

For me it has only worked since 6.12.6 (I was on 6.11.5 before). My only change is adding amd_pstate=passive in syslinux.cfg. No modprobe was needed in the go file.

label Unraid OS
  menu default
  kernel /bzimage
  append rcu_nocbs=0-15 isolcpus=6-7,14-15 pcie_acs_override=downstream,multifunction initrd=/bzroot amd_pstate=passive vfio_iommu_type1.allow_unsafe_interrupts=1 video=efifb:off idle=halt
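To confirm that the parameter from the append line actually reached the running kernel, the boot command line can be checked after a reboot. The following is a minimal sketch, not part of the original post; the helper name has_kernel_param is hypothetical, and the optional second argument only exists so the check can be tried against a sample string.

```shell
#!/bin/bash
# Hypothetical helper: succeeds if the exact parameter appears on the kernel
# command line. By default it reads /proc/cmdline; a sample command line can
# be passed as the second argument instead.
has_kernel_param() {
    local param="$1"
    local cmdline="${2:-$(cat /proc/cmdline 2>/dev/null)}"
    # Pad with spaces so only whole, space-separated parameters match.
    case " $cmdline " in
        *" $param "*) return 0 ;;
        *) return 1 ;;
    esac
}

if has_kernel_param "amd_pstate=passive"; then
    echo "amd_pstate=passive is active on this boot"
else
    echo "amd_pstate=passive not found on the kernel command line"
fi
```

If the parameter is missing, the append line in syslinux.cfg is the first place to look.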

-

I've created a new VM using the old vdisk and added the same XML for graphics and sound:

<hostdev mode='subsystem' type='pci' managed='yes'>
  <driver name='vfio'/>
  <source>
    <address domain='0x0000' bus='0x05' slot='0x00' function='0x0'/>
  </source>
  <alias name='hostdev0'/>
  <rom file='/mnt/SSD/Vms/Bios/Asus.GT710.2048.170525.rom'/>
  <address type='pci' domain='0x0000' bus='0x04' slot='0x00' function='0x0' multifunction='on'/>
</hostdev>
<hostdev mode='subsystem' type='pci' managed='yes'>
  <driver name='vfio'/>
  <source>
    <address domain='0x0000' bus='0x05' slot='0x00' function='0x1'/>
  </source>
  <alias name='hostdev1'/>
  <address type='pci' domain='0x0000' bus='0x04' slot='0x00' function='0x1'/>
</hostdev>

And it's working. 🤷♂️ The old VM with the same config is not.

-

Hi,

I upgraded today from 6.11.5 to 6.12.6 and now have the following issue: I cannot start any VM in the GUI. Only for some Linux VMs can I use "Start with Console (VNC)". The normal Start, Restart, Pause, Hibernate, Stop and Force Stop no longer work: there is no reaction and the dialog stays open.

For my Win10 VM, I can enable Autostart, then disable/enable VM itself, and then the Win10 VM is running.

It is not possible to remove any VM either. The GUI asks if I want to proceed, but clicking Proceed is ignored. (I've already flushed the cache.)

An additional problem: the primary GPU passthrough no longer works in 6.12.6.

I hope you have some ideas.

Best regards
Bastian

unraidserver-diagnostics-20231221-1013.zip

-

Since I also use the znapzend plugin, does your solution just work with the old stored znapzend configuration?

-

I'm going to try @Marshalleq's solution: https://forums.unraid.net/topic/41333-zfs-plugin-for-unraid/?do=findComment&comment=1250880 and

-

OK, then I need to rename all my dockers, too.

NAME        SIZE   ALLOC  FREE   CKPOINT  EXPANDSZ  FRAG  CAP  DEDUP  HEALTH  ALTROOT
HDD         5.45T  1.79T  3.67T  -        -         2%    32%  1.00x  ONLINE  -
NVME        928G   274G   654G   -        -         8%    29%  1.00x  ONLINE  -
SSD         696G   254G   442G   -        -         13%   36%  1.00x  ONLINE  -
SingleSSD   464G   16.6G  447G   -        -         1%    3%   1.00x  ONLINE  -

HDD and SSD are raidz1-0 with 3 discs each.

So, to update, I should note down the zpool status (the UIDs for each array) and create the arrays afterwards with these UIDs?

-

Me, too. But I'm not sure whether this still works with the built-in version of ZFS, so I'm still on the latest release with the ZFS plugin. My understanding is that my solution of having the mountpoints under /mnt/ is not OK anymore?

-

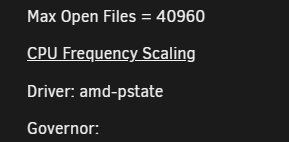

Hi,

sorry, but I can't get this working on my Ryzen 3700X. I've added

modprobe.blacklist=acpi_cpufreq amd_pstate.shared_mem=1

and of course the modprobe amd_pstate. amd_pstate is loaded, but cpufreq-info shows:

analyzing CPU 0:
  driver: amd-pstate
  CPUs which run at the same hardware frequency: 0
  CPUs which need to have their frequency coordinated by software: 0
  maximum transition latency: 131 us.
  available cpufreq governors: corefreq-policy, conservative, ondemand, userspace, powersave, performance, schedutil
  current CPU frequency is 3.59 GHz.
analyzing CPU 1:
  driver: amd-pstate

And if I look into corefreq-cli, the system is always running at full speed. Before, the system had a minimum of 2200 MHz. Tips and Tweaks tells me that there is no governor.

Any idea?

Best regards
Bastian
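One way to cross-check what cpufreq-info and Tips and Tweaks report is to read the kernel's cpufreq sysfs files directly. This is only a sketch under assumptions: the helper name show_cpufreq is hypothetical, and the second argument (the sysfs root) exists only so the function can be pointed at a different directory.

```shell
#!/bin/bash
# Hypothetical helper: prints driver, governor and frequency limits for one
# CPU as exposed by the kernel under /sys/devices/system/cpu/cpuN/cpufreq.
show_cpufreq() {
    local cpu="${1:-0}"
    local root="${2:-/sys/devices/system/cpu}"
    local dir="$root/cpu$cpu/cpufreq"
    local f
    if [ ! -d "$dir" ]; then
        echo "no cpufreq directory for cpu$cpu"
        return 1
    fi
    for f in scaling_driver scaling_governor scaling_min_freq scaling_max_freq; do
        # Skip files that this kernel or driver does not expose.
        if [ -r "$dir/$f" ]; then
            printf '%s: %s\n' "$f" "$(cat "$dir/$f")"
        fi
    done
}

show_cpufreq 0 || true
```

The frequency values in these files are in kHz. If scaling_governor is missing or empty here as well, the problem is probably not limited to the plugin's display.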

-

Hi, I've just updated the plugin and corrected the exclusion pattern. Nevertheless, the Main page shows the following warning:

"Warning: session_start(): Cannot start session when headers already sent in /usr/local/emhttp/plugins/dynamix/include/DefaultPageLayout.php(522) : eval()'d code on line 3"

Any idea? (I've cleared the browser cache, of course.)

Unraid version 6.9.2

-

Ah ok. It was possible in the past, but since zfs is a kernel Module it is not possible anymore.

-

Is it possible to update without a reboot?

-

Does the user 99:100 (nobody:users) have the correct rights in the folder citadel/vsphere?

-

An example is documented in the settings. My docker files are in /mnt/SingleSSD/docker/. The zpool is SingleSSD and the dataset is docker, so the working exclusion pattern is:

/^SingleSSD\/docker\/.*/
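To see which dataset names such a pattern would catch before entering it in the settings, it can be tried on the command line. This is a rough sketch, assuming the pattern is applied to the full dataset name: the helper is_excluded and the sample dataset names are made up for illustration, and grep -E takes the expression without the surrounding slash delimiters, so the inner slashes need no escaping.

```shell
#!/bin/bash
# Hypothetical helper: succeeds if the dataset name matches the exclusion
# pattern. Equivalent of /^SingleSSD\/docker\/.*/ written for grep -E.
is_excluded() {
    local dataset="$1"
    printf '%s\n' "$dataset" | grep -Eq '^SingleSSD/docker/.*'
}

# Sample names (assumptions, not taken from a real pool listing).
for ds in "SingleSSD/docker/abc123" "SingleSSD/docker" "SingleSSD/appdata" "HDD/SingleSSD/docker/x"; do
    if is_excluded "$ds"; then
        echo "excluded: $ds"
    else
        echo "shown:    $ds"
    fi
done
```

Note that the pattern only matches names below SingleSSD/docker/, not the docker dataset itself, because the trailing slash is part of the expression.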

-

I've done this via dataset properties. To share a dataset:

zfs set sharenfs='rw=@<IP_RANGE>,fsid=<FileSystemID>,anongid=100,anonuid=99,all_squash' <DATASET>

<IP_RANGE> is something like 192.168.0.0/24, to restrict rw access. Just have a look at the NFS share properties.
<FileSystemID> is a unique ID you need to set. I started with 1 and increased the number with every shared dataset.
<DATASET> is the dataset you want to share.

The magic was the FileSystemID: without setting this ID, it was not possible to connect from any client.

To unshare a dataset, you can simply set:

zfs set sharenfs=off <DATASET>
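When several datasets are shared, the counting-up of the fsid can be scripted. The sketch below is a dry run that only prints the zfs commands instead of executing them; the helper name build_sharenfs_cmd and the dataset names HDD/media and HDD/backup are assumptions for illustration.

```shell
#!/bin/bash
# Hypothetical helper: prints (does not run) the zfs command that shares one
# dataset over NFS with the options described above.
build_sharenfs_cmd() {
    local dataset="$1" ip_range="$2" fsid="$3"
    printf "zfs set sharenfs='rw=@%s,fsid=%s,anongid=100,anonuid=99,all_squash' %s\n" \
        "$ip_range" "$fsid" "$dataset"
}

# Example values (assumptions): two datasets, fsid starting at 1 and
# increasing by one for every shared dataset.
fsid=1
for ds in HDD/media HDD/backup; do
    build_sharenfs_cmd "$ds" "192.168.0.0/24" "$fsid"
    fsid=$((fsid + 1))
done
```

After reviewing the printed lines, they can be pasted into a terminal one at a time. The current setting of a dataset can be read back with zfs get sharenfs <DATASET>.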