ogi

Members · 288 posts

Everything posted by ogi

Why is ASPM disabled in Unraid, while it's enabled in Ubuntu?

ogi replied to mgutt's topic in General Support

I'll give the live USB a try in a bit and report back (might be a day or so; the garage where my server resides is absolutely cooking right now, and I don't really want to spend much time there). Regarding the HBA, is there a known HBA that can be flashed to IT mode and supports ASPM L1?

Why is ASPM disabled in Unraid, while it's enabled in Ubuntu?

ogi replied to mgutt's topic in General Support

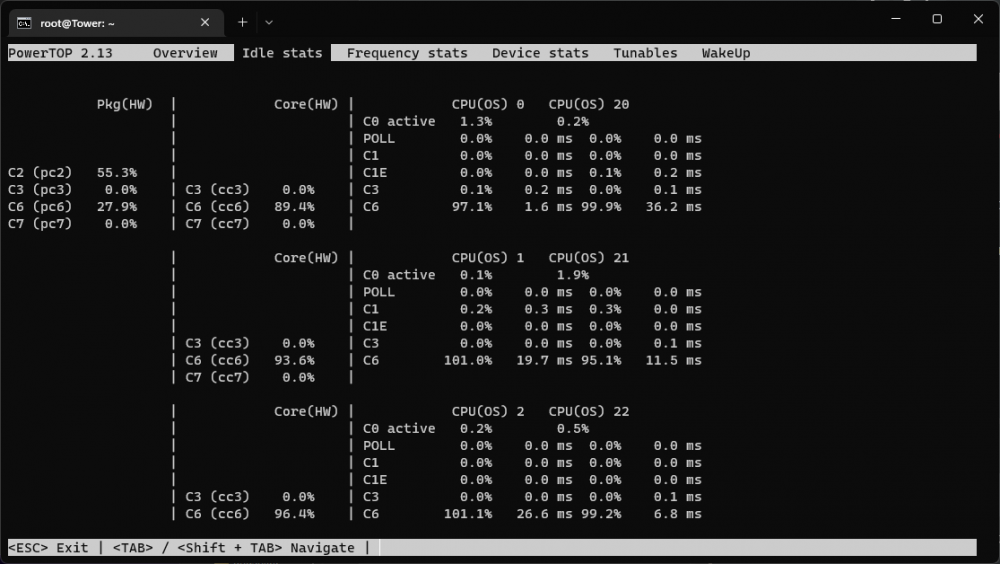

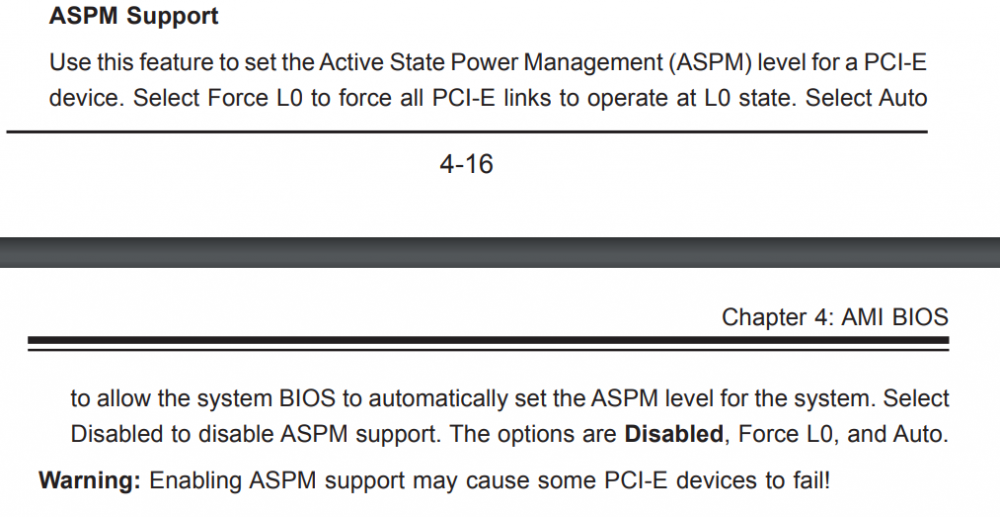

I set my BIOS ASPM option to "auto"; here is the excerpt from the manual describing the options. I didn't actually see the Force L0 option. I also added `pcie_aspm=force` to the `append` line of the default `Unraid OS` entry in my /boot/syslinux/syslinux.cfg file:

```
root@Tower:~# cat /boot/syslinux/syslinux.cfg
default menu.c32
menu title Lime Technology, Inc.
prompt 0
timeout 50
label Unraid OS
  menu default
  kernel /bzimage
  append intel_iommu=on rd.driver.pre=vfio-pci video=vesafb:off,efifb:off isolcpus=4-9,24-29 vfio_iommu_type1.allow_unsafe_interrupts=1 initrd=/bzroot pcie_aspm=force
label Unraid OS GUI Mode
  kernel /bzimage
  append isolcpus=4-9,24-29 vfio_iommu_type1.allow_unsafe_interrupts=1 initrd=/bzroot,/bzroot-gui
label Unraid OS Safe Mode (no plugins, no GUI)
  kernel /bzimage
  append initrd=/bzroot unraidsafemode
label Unraid OS GUI Safe Mode (no plugins)
  kernel /bzimage
  append initrd=/bzroot,/bzroot-gui unraidsafemode
label Memtest86+
  kernel /memtest
```

Before these two changes, every entry in `lspci` showed ASPM as disabled; with them, only a handful of entries (primarily PCI bridges and my HBA) still show ASPM as disabled.

I have no problem trying Ubuntu; the server is a bit tough to access, but I'll make a go of it. Would the idea be to boot from a live USB and then check the ASPM status?
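After rebooting, it may be worth confirming that `pcie_aspm=force` actually reached the running kernel: only the default `Unraid OS` entry carries it, so booting the GUI Mode entry (or a typo in syslinux.cfg) would silently drop it. Below is a minimal POSIX shell sketch; `has_kernel_param` is a hypothetical helper name, not an Unraid command, and it only matches the exact whole token.

```sh
#!/bin/sh
# has_kernel_param PARAM [CMDLINE]
# Hypothetical helper: succeeds (exit 0) if PARAM appears as a whole
# space-separated token in CMDLINE. CMDLINE defaults to the command line
# of the running kernel, read from /proc/cmdline.
has_kernel_param() {
  # Pad with spaces so the first and last tokens match the same pattern.
  cmdline=" ${2:-$(cat /proc/cmdline)} "
  case "$cmdline" in
    *" $1 "*) return 0 ;;  # the quoted "$1" is matched literally, not as a glob
    *) return 1 ;;
  esac
}
```

Usage: `has_kernel_param pcie_aspm=force && echo "pcie_aspm=force is active"`. Separately, `cat /sys/module/pcie_aspm/parameters/policy` shows the kernel's ASPM policy, with the active one in brackets.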

Why is ASPM disabled in Unraid, while it's enabled in Ubuntu?

ogi replied to mgutt's topic in General Support

Sorry to bring a thread back from the dead; I decided I wanted to spend some time minimizing idle power usage on my Supermicro server (X9DRi-LN4F+ motherboard). I followed most of the advice here, but ASPM is still disabled for a handful of devices, and I'm hoping for some suggestions on how to address them:

```
root@Tower:~# lspci -vv | awk '/ASPM/{print $0}' RS= | grep --color -P '(^[a-z0-9:.]+|ASPM )'
00:00.0 Host bridge: Intel Corporation Xeon E7 v2/Xeon E5 v2/Core i7 DMI2 (rev 04)
	LnkCap: Port #0, Speed 2.5GT/s, Width x4, ASPM L1, Exit Latency L1 <16us
	LnkCtl: ASPM Disabled; RCB 64 bytes, Disabled- CommClk-
00:01.0 PCI bridge: Intel Corporation Xeon E7 v2/Xeon E5 v2/Core i7 PCI Express Root Port 1a (rev 04) (prog-if 00 [Normal decode])
	LnkCap: Port #0, Speed 2.5GT/s, Width x4, ASPM L1, Exit Latency L1 <16us
	LnkCtl: ASPM Disabled; RCB 64 bytes, Disabled- CommClk-
00:01.1 PCI bridge: Intel Corporation Xeon E7 v2/Xeon E5 v2/Core i7 PCI Express Root Port 1b (rev 04) (prog-if 00 [Normal decode])
	LnkCap: Port #0, Speed 8GT/s, Width x4, ASPM L1, Exit Latency L1 <16us
	LnkCtl: ASPM Disabled; RCB 64 bytes, Disabled- CommClk+
00:02.0 PCI bridge: Intel Corporation Xeon E7 v2/Xeon E5 v2/Core i7 PCI Express Root Port 2a (rev 04) (prog-if 00 [Normal decode])
	LnkCap: Port #0, Speed 8GT/s, Width x16, ASPM L1, Exit Latency L1 <16us
	LnkCtl: ASPM L1 Enabled; RCB 64 bytes, Disabled- CommClk+
00:03.0 PCI bridge: Intel Corporation Xeon E7 v2/Xeon E5 v2/Core i7 PCI Express Root Port 3a (rev 04) (prog-if 00 [Normal decode])
	LnkCap: Port #0, Speed 8GT/s, Width x16, ASPM L1, Exit Latency L1 <16us
	LnkCtl: ASPM L1 Enabled; RCB 64 bytes, Disabled- CommClk+
00:11.0 PCI bridge: Intel Corporation C600/X79 series chipset PCI Express Virtual Root Port (rev 06) (prog-if 00 [Normal decode])
	LnkCap: Port #17, Speed 2.5GT/s, Width x1, ASPM L0s L1, Exit Latency L0s <64ns, L1 <1us
	LnkCtl: ASPM L0s L1 Enabled; RCB 64 bytes, Disabled- CommClk+
00:1c.0 PCI bridge: Intel Corporation C600/X79 series chipset PCI Express Root Port 1 (rev b6) (prog-if 00 [Normal decode])
	LnkCap: Port #1, Speed 5GT/s, Width x4, ASPM L1, Exit Latency L1 <4us
	LnkCtl: ASPM L1 Enabled; RCB 64 bytes, Disabled- CommClk+
pcilib: sysfs_read_vpd: read failed: No such device
02:00.0 Serial Attached SCSI controller: Broadcom / LSI SAS2308 PCI-Express Fusion-MPT SAS-2 (rev 05)
	LnkCap: Port #0, Speed 8GT/s, Width x8, ASPM L0s, Exit Latency L0s <64ns
	LnkCtl: ASPM Disabled; RCB 64 bytes, Disabled- CommClk+
03:00.0 VGA compatible controller: NVIDIA Corporation TU104GL [Quadro RTX 4000] (rev a1) (prog-if 00 [VGA controller])
	LnkCap: Port #0, Speed 8GT/s, Width x16, ASPM L0s L1, Exit Latency L0s <512ns, L1 <16us
	LnkCtl: ASPM L1 Enabled; RCB 64 bytes, Disabled- CommClk+
03:00.1 Audio device: NVIDIA Corporation TU104 HD Audio Controller (rev a1)
	LnkCap: Port #0, Speed 8GT/s, Width x16, ASPM L0s L1, Exit Latency L0s <512ns, L1 <4us
	LnkCtl: ASPM L0s L1 Enabled; RCB 64 bytes, Disabled- CommClk+
03:00.2 USB controller: NVIDIA Corporation TU104 USB 3.1 Host Controller (rev a1) (prog-if 30 [XHCI])
	LnkCap: Port #0, Speed 8GT/s, Width x16, ASPM L0s L1, Exit Latency L0s <512ns, L1 <16us
	LnkCtl: ASPM L1 Enabled; RCB 64 bytes, Disabled- CommClk+
03:00.3 Serial bus controller: NVIDIA Corporation TU104 USB Type-C UCSI Controller (rev a1)
	LnkCap: Port #0, Speed 8GT/s, Width x16, ASPM L0s L1, Exit Latency L0s <512ns, L1 <16us
	LnkCtl: ASPM L1 Enabled; RCB 64 bytes, Disabled- CommClk+
04:00.0 VGA compatible controller: NVIDIA Corporation GP106GL [Quadro P2000] (rev a1) (prog-if 00 [VGA controller])
	LnkCap: Port #0, Speed 8GT/s, Width x16, ASPM L0s L1, Exit Latency L0s <1us, L1 <4us
	LnkCtl: ASPM L1 Enabled; RCB 64 bytes, Disabled- CommClk+
04:00.1 Audio device: NVIDIA Corporation GP106 High Definition Audio Controller (rev a1)
	LnkCap: Port #0, Speed 8GT/s, Width x16, ASPM L0s L1, Exit Latency L0s <1us, L1 <4us
	LnkCtl: ASPM L1 Enabled; RCB 64 bytes, Disabled- CommClk+
05:00.0 Serial Attached SCSI controller: Intel Corporation C602 chipset 4-Port SATA Storage Control Unit (rev 06)
	LnkCap: Port #0, Speed 2.5GT/s, Width x1, ASPM L0s L1, Exit Latency L0s <64ns, L1 <1us
	LnkCtl: ASPM L0s L1 Enabled; RCB 64 bytes, Disabled- CommClk+
06:00.0 Ethernet controller: Intel Corporation I350 Gigabit Network Connection (rev 01)
	LnkCap: Port #0, Speed 5GT/s, Width x4, ASPM L0s L1, Exit Latency L0s <4us, L1 <32us
	LnkCtl: ASPM L1 Enabled; RCB 64 bytes, Disabled- CommClk+
06:00.1 Ethernet controller: Intel Corporation I350 Gigabit Network Connection (rev 01)
	LnkCap: Port #0, Speed 5GT/s, Width x4, ASPM L0s L1, Exit Latency L0s <4us, L1 <32us
	LnkCtl: ASPM L1 Enabled; RCB 64 bytes, Disabled- CommClk+
06:00.2 Ethernet controller: Intel Corporation I350 Gigabit Network Connection (rev 01)
	LnkCap: Port #0, Speed 5GT/s, Width x4, ASPM L0s L1, Exit Latency L0s <4us, L1 <32us
	LnkCtl: ASPM L1 Enabled; RCB 64 bytes, Disabled- CommClk+
06:00.3 Ethernet controller: Intel Corporation I350 Gigabit Network Connection (rev 01)
	LnkCap: Port #0, Speed 5GT/s, Width x4, ASPM L0s L1, Exit Latency L0s <4us, L1 <32us
	LnkCtl: ASPM L1 Enabled; RCB 64 bytes, Disabled- CommClk+
80:00.0 PCI bridge: Intel Corporation Xeon E7 v2/Xeon E5 v2/Core i7 PCI Express Root Port in DMI2 Mode (rev 04) (prog-if 00 [Normal decode])
	LnkCap: Port #8, Speed 2.5GT/s, Width x48, ASPM L1, Exit Latency L1 <16us
	LnkCtl: ASPM Disabled; RCB 64 bytes, Disabled- CommClk-
80:01.0 PCI bridge: Intel Corporation Xeon E7 v2/Xeon E5 v2/Core i7 PCI Express Root Port 1a (rev 04) (prog-if 00 [Normal decode])
	LnkCap: Port #0, Speed 8GT/s, Width x8, ASPM L1, Exit Latency L1 <16us
	LnkCtl: ASPM L1 Enabled; RCB 64 bytes, Disabled- CommClk+
80:02.0 PCI bridge: Intel Corporation Xeon E7 v2/Xeon E5 v2/Core i7 PCI Express Root Port 2a (rev 04) (prog-if 00 [Normal decode])
	LnkCap: Port #0, Speed 8GT/s, Width x16, ASPM L1, Exit Latency L1 <16us
	LnkCtl: ASPM Disabled; RCB 64 bytes, Disabled- CommClk-
80:03.0 PCI bridge: Intel Corporation Xeon E7 v2/Xeon E5 v2/Core i7 PCI Express Root Port 3a (rev 04) (prog-if 00 [Normal decode])
	LnkCap: Port #0, Speed 8GT/s, Width x16, ASPM L1, Exit Latency L1 <16us
	LnkCtl: ASPM L1 Enabled; RCB 64 bytes, Disabled- CommClk+
82:00.0 Non-Volatile memory controller: Intel Corporation SSD 660P Series (rev 03) (prog-if 02 [NVM Express])
	LnkCap: Port #0, Speed 8GT/s, Width x4, ASPM L1, Exit Latency L1 <8us
	LnkCtl: ASPM L1 Enabled; RCB 64 bytes, Disabled- CommClk+
84:00.0 VGA compatible controller: NVIDIA Corporation TU104GL [Quadro RTX 4000] (rev a1) (prog-if 00 [VGA controller])
	LnkCap: Port #0, Speed 8GT/s, Width x16, ASPM L0s L1, Exit Latency L0s <1us, L1 <4us
	LnkCtl: ASPM L1 Enabled; RCB 64 bytes, Disabled- CommClk+
84:00.1 Audio device: NVIDIA Corporation TU104 HD Audio Controller (rev a1)
	LnkCap: Port #0, Speed 8GT/s, Width x16, ASPM L0s L1, Exit Latency L0s <1us, L1 <4us
	LnkCtl: ASPM L1 Enabled; RCB 64 bytes, Disabled- CommClk+
84:00.2 USB controller: NVIDIA Corporation TU104 USB 3.1 Host Controller (rev a1) (prog-if 30 [XHCI])
	LnkCap: Port #0, Speed 8GT/s, Width x16, ASPM L0s L1, Exit Latency L0s <1us, L1 <4us
	LnkCtl: ASPM L1 Enabled; RCB 64 bytes, Disabled- CommClk+
84:00.3 Serial bus controller: NVIDIA Corporation TU104 USB Type-C UCSI Controller (rev a1)
	LnkCap: Port #0, Speed 8GT/s, Width x16, ASPM L0s L1, Exit Latency L0s <1us, L1 <4us
	LnkCtl: ASPM L1 Enabled; RCB 64 bytes, Disabled- CommClk+
```

Is this realistically the best that can be done with respect to enabling ASPM, or is there further room for improvement?
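To narrow a listing like the one above down to just the links that still have ASPM off, the sketch below reads `lspci -vv` text and prints each such device with the ASPM states its LnkCap line advertises. Reading LnkCap this way, the SAS2308 at 02:00.0 advertises only L0s, so L1 is not a state that device offers at all. Assumptions: POSIX awk, lspci run as root (otherwise the capability lines are hidden), and `aspm_disabled_devices` is a hypothetical helper name.

```sh
#!/bin/sh
# aspm_disabled_devices: read `lspci -vv` output on stdin and print every
# device whose LnkCtl reports "ASPM Disabled", along with the ASPM states
# its LnkCap line says the device supports.
aspm_disabled_devices() {
  awk '
    # A new device block starts with an unindented address such as
    # "02:00.0" (or "0000:02:00.0" when lspci is run with -D).
    /^([0-9a-f]+:)?[0-9a-f]+:[0-9a-f]+\.[0-9a-f]/ { dev = $1; cap = "" }
    # "LnkCap: ..., ASPM L0s L1, Exit Latency ..." keeps "L0s L1"
    /LnkCap:/ {
      if (match($0, /ASPM [^,]*/)) cap = substr($0, RSTART + 5, RLENGTH - 5)
    }
    /LnkCtl:/ && /ASPM Disabled/ { print dev " ASPM disabled (LnkCap: " cap ")" }
  '
}
```

Usage: `lspci -vv | aspm_disabled_devices`. The device address can then be looked up with `lspci -s <address>`.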

Ahh that makes more sense, I see what you're getting at. Will test this when I get some time later today.

-

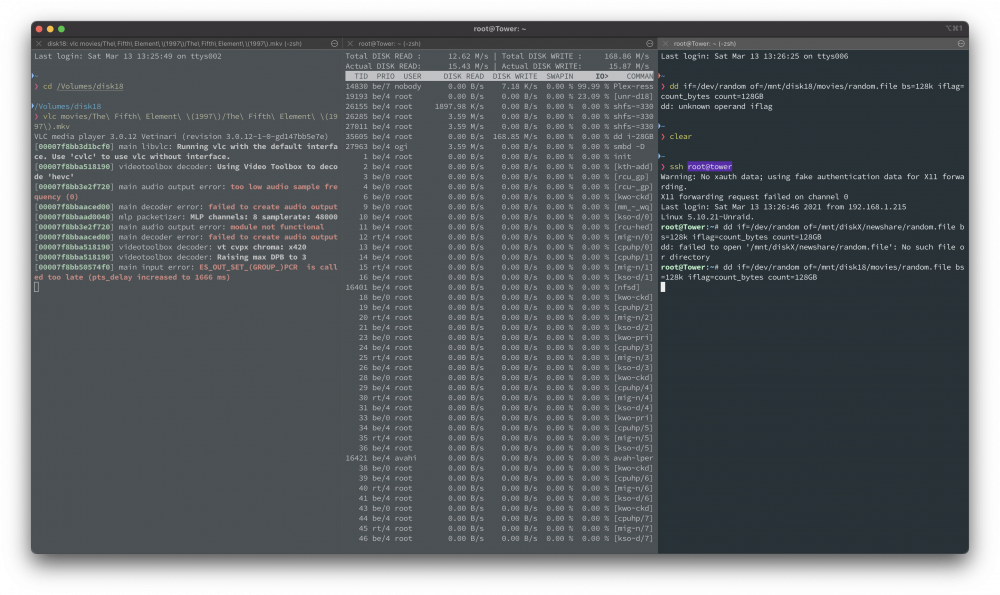

Sorry for the confusion; random.file was written to the same disk that a very high-bitrate movie was streaming from (disk 18). I'm not sure which disk random.file landed on when I pointed it at /mnt/user/movies/random.file (I haven't looked for it yet). I'm fairly certain the kids' movie is on another disk in the array. All operations went smoothly, so now I'm having trouble even replicating the issue I started with (the movie would freeze for 5-10 seconds, and no data would come in over the network during that time).
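On Unraid, a file written through a user share such as /mnt/user/movies/random.file physically lives on one array disk and shows up under that disk's own mount, e.g. /mnt/diskN/movies/random.file. A minimal sketch for finding it, assuming the default `/mnt/diskN` and `/mnt/cache` mount points (additional pools with other names are not checked); `find_share_disk` is a hypothetical helper name.

```sh
#!/bin/sh
# find_share_disk REL_PATH [MNT_ROOT]
# Hypothetical helper: print the name of every array disk (and the cache)
# that holds MNT_ROOT/<disk>/REL_PATH. Assumes the default Unraid layout,
# where /mnt/user/<share> is the union of /mnt/disk1..N/<share> and /mnt/cache/<share>.
find_share_disk() {
  rel=$1              # path relative to /mnt/user, e.g. movies/random.file
  root=${2:-/mnt}     # override only for testing
  for dir in "$root"/disk* "$root"/cache; do
    if [ -e "$dir/$rel" ]; then
      echo "${dir##*/}"   # print just the mount name, e.g. "disk18"
    fi
  done
}
```

Usage: `find_share_disk movies/random.file`.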

-

Thanks for chiming in, @mgutt. It's been running for ~10 minutes without issue; I have to go back to monitoring the kids... will edit this post later after the dd command returns.

EDIT: The kids now want to watch a movie too, so this will be a real stress test haha.

EDIT2: The dd command finished:

```
root@Tower:~# dd if=/dev/random of=/mnt/user/movies/random.file bs=128k iflag=count_bytes count=128GB
976562+1 records in
976562+1 records out
128000000000 bytes (128 GB, 119 GiB) copied, 1954.55 s, 65.5 MB/s
```

Everything seems to be working remarkably well... two movies playing (one on Plex via transcoding; BTW, thanks for your in-memory decoding guide). I guess whatever I had going on before is no longer an issue? Maybe I should test some CPU-intensive tasks too, but I can do that later.

EDIT 3: Just wanted to say thanks again for your debugging steps.
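For anyone reading the `dd` summary: the numbers follow from GNU `dd`'s size suffixes, where `GB` means $10^9$ bytes and the `k` in `bs=128k` means 1024 bytes (the `+1` partial record confirms the block size is 131072 bytes, not 128000). The same division with 2241.48 s gives the 57.1 MB/s of the disk18 run.

$$
\texttt{count} = 128\ \text{GB} = 128 \times 10^{9}\ \text{B}, \qquad \texttt{bs} = 128\text{k} = 128 \times 2^{10} = 131\,072\ \text{B}
$$

$$
\frac{128 \times 10^{9}}{131\,072} = 976\,562.5 \;\Rightarrow\; 976\,562 \text{ full records} + 1 \text{ partial record}
$$

$$
\frac{128 \times 10^{9}\ \text{B}}{2^{30}\ \text{B/GiB}} \approx 119.2\ \text{GiB}, \qquad \frac{128 \times 10^{9}\ \text{B}}{1954.55\ \text{s}} \approx 65.5 \times 10^{6}\ \text{B/s} = 65.5\ \text{MB/s}
$$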

-

It's running now; I'm getting occasional hangups in the stream. Here is a screenshot taken during one of those freezes... for the most part it's playing well. The `dd` command finished:

```
root@Tower:~# dd if=/dev/random of=/mnt/disk18/movies/random.file bs=128k iflag=count_bytes count=128GB
976562+1 records in
976562+1 records out
128000000000 bytes (128 GB, 119 GiB) copied, 2241.48 s, 57.1 MB/s
root@Tower:~#
```

Given the movie (mostly) played without interruption, I'm not sure what to make of it.

-

I have 2x E5-2680 v2 CPUs and use dual parity drives inside a Supermicro CSE-846 chassis. I don't think my situation is Plex-specific, and unfortunately I couldn't replicate it last night while watching a high-bitrate video (82615 kb/s), but I did just upgrade from Unraid 6.8.3 to 6.9.1. When streaming very high-bitrate video (in VLC, for example), the stream periodically stops outright for ~10 seconds and then resumes. I have assumed this was due to some other write operation to the array, but I cannot be certain. I should point out that things did get better over NFS vs. SMB, but I suspect that's just because the faster file transfer allowed for faster buffering (getting NFS mounts working on macOS took me a while to sort out; I should probably post a guide about that!). I'll try to monitor things in iotop and on the "System Stats" page of the webUI and see if I can get a better idea of what else is happening when this occurs. As your issue involved Plex transcoding, and mine seems to involve just general file reads, I'll create a new post if I'm able to reproduce it more consistently. Thanks for chiming in!
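Because the webUI graphs can be hard to line up with the exact moment of a freeze, one rough approach is to sample the server's transmit byte counter once per second and flag every second in which (almost) nothing went out, which is the "no data over the network" symptom of a stall. A sketch only: the helper names, the interface name (`br0` below; it may be `eth0` or `bond0` on your server) and the 100 kB threshold are assumptions to adjust.

```sh
#!/bin/sh
# sample_tx IFACE SECONDS
# Print the interface's cumulative transmit byte counter once per second.
sample_tx() {
  i=0
  while [ "$i" -lt "$2" ]; do
    cat "/sys/class/net/$1/statistics/tx_bytes"
    sleep 1
    i=$((i + 1))
  done
}

# stall_seconds MIN_BYTES
# Read one cumulative counter value per line (one sample per second) and
# print the number of each 1-second interval that moved fewer than MIN_BYTES.
stall_seconds() {
  awk -v min="$1" 'NR > 1 && ($1 - prev) < min { print NR - 1; fflush() } { prev = $1 }'
}
```

Usage: `sample_tx br0 600 | stall_seconds 100000` watches for ten minutes; the printed second numbers can then be compared against what iotop showed at the same time.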

-

Thanks for the suggestion, @primeval_god; I wasn't even sure where to begin looking for issues here.

-

Hi @mgutt, I'm curious whether you got some resolution here... I've noticed my video streams have to pause/buffer while another file is transferring to the array. I'm hoping to come up with some settings to prioritize Plex streams.

-

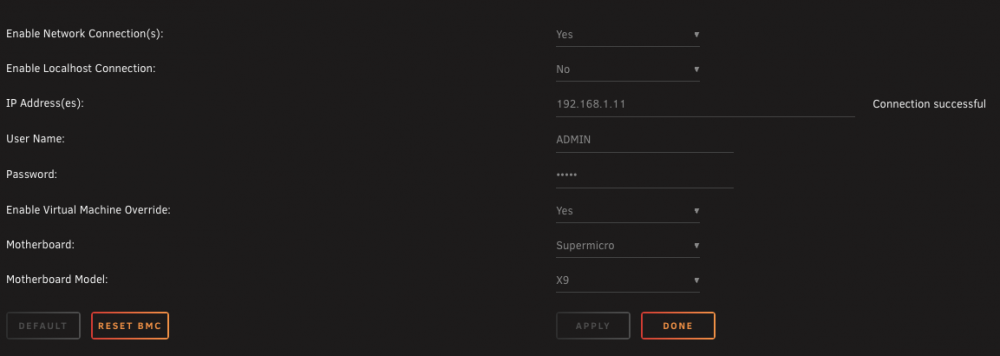

I have this same motherboard; it's curious that everything is grayed out... I had to specify a network connection and have it report "connection successful" before I could do anything else. Here's a screenshot of my settings.

-

You can definitely modify the thresholds from within the app; go to the config settings and select Sensors from the drop-down. Be careful removing a fan: you cannot get it back (unless you factory-reset the BMC). On my X9 board, I can only set thresholds in increments of 75. For example, I can set a threshold at 750 or lower it to 675, but I cannot set it to 700.
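Assuming the 75 RPM granularity described above means every threshold must be a whole multiple of 75 (consistent with 675 and 750 both being accepted), the values available around a target are simple integer arithmetic. `nearest_thresholds` is a hypothetical helper for that calculation, not part of the IPMI plugin.

```sh
#!/bin/sh
# nearest_thresholds RPM [STEP]
# Print the closest settable thresholds at or below and at or above RPM,
# assuming valid values are whole multiples of STEP (75 on this X9 board).
nearest_thresholds() {
  step=${2:-75}
  lower=$(( $1 / step * step ))   # integer division rounds down
  if [ $(( $1 % step )) -eq 0 ]; then
    upper=$lower                  # already a valid value
  else
    upper=$(( lower + step ))
  fi
  echo "$lower $upper"
}
```

Usage: `nearest_thresholds 700` prints `675 750`, matching the two values the board accepts around 700.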

-

Hi dlandon, thanks for maintaining this plugin; the functionality it provides is amazing! I didn't see whether there was already a feature request for this, but I was hoping you could offer different temperature thresholds per device? I have an SSD as an unassigned device, which runs hotter than my spinning disks, and I often get numerous warnings about excessive temperature. Thanks again for your work on this plugin; it's been an absolute lifesaver!

-

I did; those settings should be captured in fan.cfg, but they aren't easy to read, so here's a screenshot from my GUI. These settings have kept things pretty quiet for me, and the hard drives never get above 45C during a parity check. Next time I power off the machine, I'm going to install the Noctua low-noise adapters on my CPU fans and on the 2 fans connected to FAN5/6 (I haven't done that yet).

-

Attached are my fan.cfg and ipmi-sensors.config files, which reside in /boot/config/plugins/ipmi. Keep in mind the settings there are for the fans I'm using, in my configuration. I have Noctua iPPC 3000 fans connected to FAN1/2/3 and some Noctua 80mm fans connected to FAN5/6. The CPU fans (92mm, can't remember the model) are connected via a Y-splitter to FANA. One thing I noticed is that once you remove a fan setting from the config, there is no way to add it back until you restore the IPMI controller to factory settings, so be careful removing fan settings from the sensors config!

ipmi-sensors.config
fan.cfg

-

Unraid has kernel panic moments after passthrough GPU is utilized by VM

ogi replied to ogi's topic in VM Engine (KVM)

Thanks for chiming in, @jonp; I didn't expect you to answer support tickets on a weekend! I bought this GPU ages ago; to say I got my money's worth out of it would be an understatement, so I'm okay with retiring it. I suppose before I buy a new GPU, I should move the P2000 into one of those slots to make sure passthrough works as intended and that it is in fact this GPU causing the issue. I'll update this thread if I turn up anything else of interest.

Unraid has kernel panic moments after passthrough GPU is utilized by VM

ogi replied to ogi's topic in VM Engine (KVM)

Hi, I do indeed have 2 GPUs; the monitor output I described above was through the onboard VGA connector. Before I describe the PCIe layout, it's probably best to look at the photo of the motherboard here: https://www.supermicro.com/products/motherboard/Xeon/C600/X9DRi-LN4F_.cfm The first slot, closest to the CPUs, is an x4 slot in an x8 connector (occupied by an NVMe adapter for my cache drive). The slot furthest from the CPUs holds a Quadro P2000 GPU, which I use primarily for Plex transcoding (running in Docker). That slot is up against the chassis, so there is no way a double-width card can fit there. Adjacent to it is a true x8 slot, which my HBA is attached to. That leaves the three x16 slots, 2nd through 4th from the CPU. As the GTX 670 is a double-width card, I can only use two of those slots, and I've tried both at this point. Thanks for chiming in; I really do appreciate a second set of eyes on this issue!

Unraid has kernel panic moments after passthrough GPU is utilized by VM

ogi replied to ogi's topic in VM Engine (KVM)

Well, when the GPU goes invisible the way I described earlier, all I need to do is plug it into my desktop, power it up, then put it back into the server, and voilà, it's visible again. Anyway, I tried adding the following boot options, but still got the same result:

```
append isolcpus=16-19,36-39 pcie_acs_override=downstream,multifunction intel_iommu=on rd.driver.pre=vfio-pci video=vesafb:off,efifb:off initrd=/bzroot
```

This time I had `dmesg -wH` running; I don't think it gave me any more meaningful information, but I'll post the screenshot regardless. At this point, I'm starting to suspect that this GPU just plain won't work with passthrough. I'd certainly welcome other things to try if anyone has suggestions.
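Since `pcie_acs_override` only changes how devices are split into IOMMU groups, it can help to list the groups directly before and after adding it; for VFIO passthrough, the GPU and its audio function generally should not share a group with devices the host still needs. A sketch that walks the kernel's standard sysfs layout under /sys/kernel/iommu_groups; `list_iommu_groups` is a hypothetical helper name.

```sh
#!/bin/sh
# list_iommu_groups [BASE]
# Print "GROUP DEVICE" for every PCI device in every IOMMU group.
# BASE defaults to the kernel's sysfs directory; override only for testing.
list_iommu_groups() {
  base=${1:-/sys/kernel/iommu_groups}
  for dev in "$base"/*/devices/*; do
    [ -e "$dev" ] || continue          # the glob matched nothing
    group=${dev%/devices/*}            # .../iommu_groups/<N>
    echo "${group##*/} ${dev##*/}"     # <N> <domain:bus:slot.func>
  done
}
```

Usage: `list_iommu_groups | sort -n`; any address it prints can be identified with `lspci -nns <address>`.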

Unraid has kernel panic moments after passthrough GPU is utilized by VM

ogi replied to ogi's topic in VM Engine (KVM)

Another oddity I'm discovering is that sometimes the GTX 670 is not even listed in Tools -> System Devices, or visible in `lspci`:

```
root@Tower:/boot/config# lspci | grep 670
root@Tower:/boot/config#
```

On reboot the GPU is usually shown, but on a few occasions now I've had to reboot to get the device to appear in System Devices... I suppose I should try starting the VM again while the device isn't visible in Unraid... the server is being used somewhat heavily right now, so this will have to wait.

Unraid has kernel panic moments after passthrough GPU is utilized by VM

ogi replied to ogi's topic in VM Engine (KVM)

From my googling, it sounds like 600-series cards could be UEFI-compatible, but generally weren't initially. From the looks of things, manufacturers were distributing UEFI-capable vBIOSes on request through their forums. I confirmed in my desktop via GPU-Z that my Gigabyte GTX 670 did not have UEFI capability. I should note that before I flashed the BIOS, no matter what configuration I had in the VM setup, I would always get Error Code 43; it wasn't until I read on the wiki that OVMF devices must support UEFI booting that I found the cause. I tried a SeaBIOS config, but could never get the VM to start.

Unraid has kernel panic moments after passthrough GPU is utilized by VM

ogi replied to ogi's topic in VM Engine (KVM)

The card is not bricked; it works fine in the desktop (that's where I did the flashing, and where I verified the card had UEFI capability). The vBIOS I flashed is meant for my card model; what it has over the vBIOS originally on my card is the UEFI capability, which is needed. Here is the post from another user who discovered the same thing: