kaiguy

Members

Posts: 723

Gender: Undisclosed

Reputation: 28

Achievements: Enthusiast (6/14)

Intel Socket 1151 Motherboards with IPMI AND Support for iGPU

kaiguy replied to Hoopster's topic in Motherboards and CPUs

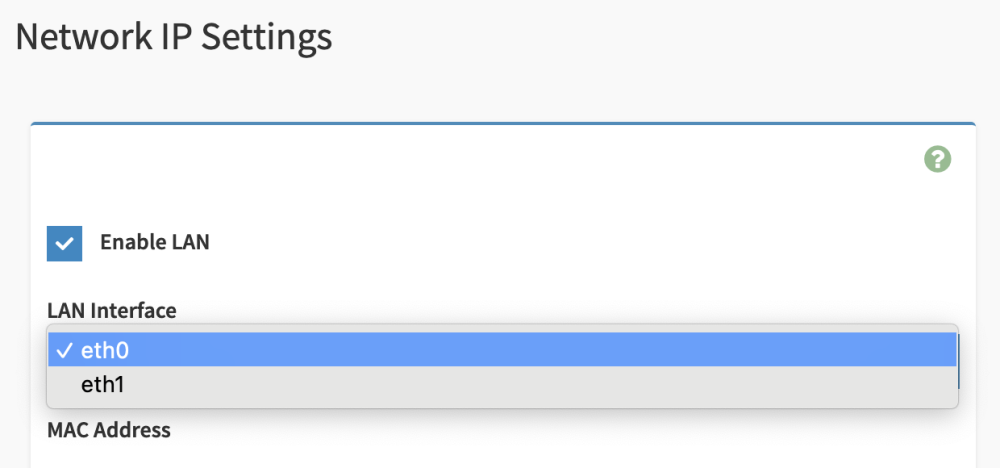

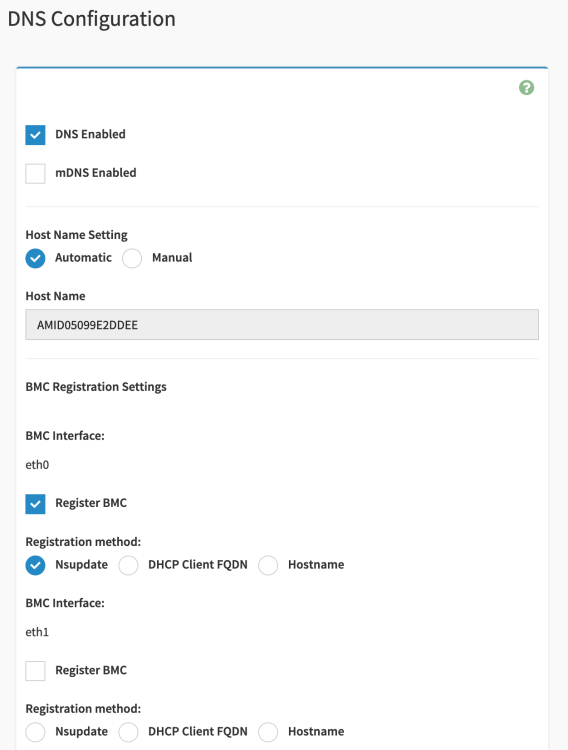

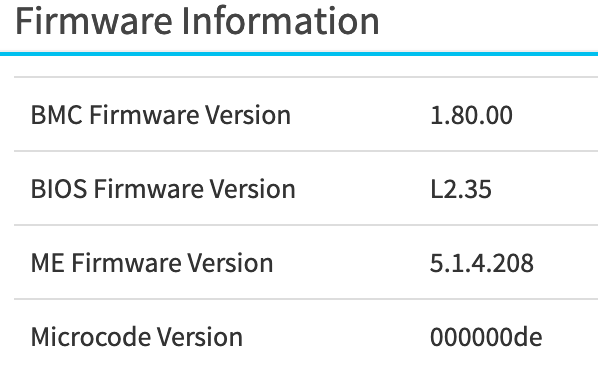

Hmm... this is a little odd. I was eventually able to run the command locally on my Unraid server (without specifying an IP address). I no longer see bond0 at all, but I still don't have the bond configuration option. It's almost like the command just killed the bond without exposing that option. If I force-refresh the network settings screen, the bond configuration option does appear before it logs me out. When I log back in, it's gone again. What I do have now is the ability to enable the LAN on eth0 or eth1 under Network IP settings, and to register eth0 and/or eth1 to the BMC. Maybe I just misunderstood, but I thought I would see three eth options (the two LAN ports and the IPMI LAN).

Edit: OK, I got the bonding configuration to show up. It required a BMC cold reset in the settings. Ultimately, though, something just isn't working for me. No matter what, I can't get the board to use the IPMI port. Even with bonding disabled, it always attaches the IPMI MAC address to either eth0 or eth1. I wonder if it's related to this bug, but I'm not confident or motivated enough to do anything about it. Oh well.

Intel Socket 1151 Motherboards with IPMI AND Support for iGPU

kaiguy replied to Hoopster's topic in Motherboards and CPUs

Thank you both, @Hoopster and @JimmyGerms. I tried the command locally on my server, but as Hoopster experienced, it didn't work for me. I don't have a secondary Unraid server, so I'm going to spin up a VM on a different machine, install ipmitool, and give it a shot. I'll edit this post with my results.

Intel Socket 1151 Motherboards with IPMI AND Support for iGPU

kaiguy replied to Hoopster's topic in Motherboards and CPUs

Thanks, @JimmyGerms. I'm on the same one as well. I wonder if I need to plug a cable into LAN2 for the option to show up? My next step will probably be pulling power from the server to soft-reset the BMC. Weird.

Intel Socket 1151 Motherboards with IPMI AND Support for iGPU

kaiguy replied to Hoopster's topic in Motherboards and CPUs

Rehashing the IPMI bonding question from earlier on this page... Although I have a network cable plugged into both the IPMI LAN and LAN1 (LAN2 is unused), I notice that my new UniFi switch is associating both MAC addresses with a single port. So I figured I'd just break the bonding, but I don't seem to have the "Network Bond Configuration" option in the GUI, and I didn't see anything in the BIOS either. Any thoughts?

I've been running the last few RCs without issue, and updated to stable tonight just fine. Thanks for all of the work that went into this huge release!

-

Working great so far. Thanks, @Taddeusz! Super happy I can now use ed25519 keys.

-

Intel Socket 1151 Motherboards with IPMI AND Support for iGPU

kaiguy replied to Hoopster's topic in Motherboards and CPUs

Thanks for the heads-up, @Hoopster. I've never run into that before, but I have had an intermittent issue where trying to log in to the HTML5 IPMI GUI would just keep returning me to the login screen. The only way to fix it was to shut down and pull power from the server, but I might try reflashing the BMC the next time I encounter it.

Intel Socket 1151 Motherboards with IPMI AND Support for iGPU

kaiguy replied to Hoopster's topic in Motherboards and CPUs

Closing the loop. Since disabling Turbo Boost (once again), I have not encountered another CPU_CATERR crash, even with some pretty intensive CPU workloads.

Thanks for sharing this. I'm using your script, with the minor edit that @ICDeadPpl highlighted (it was erroring out for me as well until I added the backslash). What do you recommend, @mgutt, for identifying what is making the /var/docker/overlay2 writes? I'm still getting pretty consistent writes even after incorporating the script.

-

For whatever reason, the QR code doesn't seem to work with my Bitwarden app (it just won't scan). I've never run into that problem before, and I just set up TOTP on another site and it worked fine. Odd.

-

1.5.0 has dropped.

-

Server showing offline -- logout/login on plugin fixes temporarily

kaiguy replied to kaiguy's topic in Connect Plugin Support

I use AdGuard Home, but my Unraid server specifically bypasses AdGuard for DNS. My firewall does use blocklists, but only for ingress. So the short answer is no.

Intel Socket 1151 Motherboards with IPMI AND Support for iGPU

kaiguy replied to Hoopster's topic in Motherboards and CPUs

It might be too early to fully confirm, but disabling Turbo Boost has helped my server survive a few more nights without a CPU_CATERR. I'll stick with you, @Hoopster, and keep Turbo Boost disabled from now on. Thanks for your input.

Intel Socket 1151 Motherboards with IPMI AND Support for iGPU

kaiguy replied to Hoopster's topic in Motherboards and CPUs

Welp, I started getting Unraid lockups due to CPU_CATERR again after over a year of stability. There have been no changes to my Unraid or server config aside from swapping in some larger disks over the past couple of weeks. It has now happened twice in the last 4 days, most recently during a data rebuild. The day before the first of these recent CPU_CATERRs, I enabled Plex's new credit detection, which I believe is fairly CPU intensive. Since the hangs have occurred while Plex is running its maintenance tasks (between midnight and 4am), I'm fairly certain this is the catalyst, and it makes me worry that my CPU cooling is just insufficient for max load. I'm going to keep the Plex container shut down until the rebuild is complete, and then I might try disabling Turbo Boost (as I recall that helped back in late 2021 when I was getting these fairly frequently, but it has been enabled for quite some time now). Bummer.

Server showing offline -- logout/login on plugin fixes temporarily

kaiguy replied to kaiguy's topic in Connect Plugin Support

Thanks, @ljm42. As I mentioned before, after unraid-api restart the server does show as connected for a bit, but API_KEY under unraid-api status continues to show as invalid (after 5 seconds, 30 seconds, 10 minutes, always). Is that expected behavior? Logging out and back in does not fix the API_KEY: invalid issue.