-

Posts

309 -

Joined

-

Last visited

Everything posted by johnsanc

-

I'm not sure if that setting did the trick or not, but either way it sounds like those defaults were not appropriate for my use case. I tried making the changes you listed and let it sit several hours. I started my Windows 10 VM and it hung at the boot screen... so no noticeable change. I have 128GB of RAM and I originally allocated 96GB to my Windows 11 VM (with memorybacking), and 8GB to a Windows 10 VM. I dropped my Win11 VM down to 64GB and now Win10 boots without any issues. So I guess even though I had plenty of memory to go around, something wasn't playing nice. Not sure if maybe I needed to reboot my Win11 VM after changing the two settings you mentioned for it to take effect. Maybe I'll try out a few other memory combinations tomorrow to see if I can reproduce the hanging behavior of my Win10 VM.

-

Wow. Nice find. That perfectly explains the behavior I was seeing as well with my VMs. My attempt to create a Windows 10 VM would just sit on a blank screen randomly most of the time. Lo and behold, memory backing on my other VM was the culprit. Considering I don't use virtiofs due to my unexplained performance issues, I'm just going to remove the memory backing config altogether. It seems to do more harm than good unless you need it.
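For anyone who would rather script the removal than edit the XML by hand, here is a rough sketch of the idea. It assumes the VM definition has a top-level `<memoryBacking>` element, as a typical virtiofs setup does. The function name and the trimmed-down example XML are made up for illustration, so compare against your own VM's XML view before relying on it.

```python
import xml.etree.ElementTree as ET


def remove_memory_backing(domain_xml: str) -> str:
    """Return the domain XML with any top-level <memoryBacking> element removed."""
    root = ET.fromstring(domain_xml)
    # findall() returns a list, so removing while iterating is safe here.
    for element in root.findall("memoryBacking"):
        root.remove(element)
    return ET.tostring(root, encoding="unicode")


# Hypothetical, heavily trimmed domain definition for illustration only.
example = """<domain type='kvm'>
  <name>Windows 11</name>
  <memory unit='KiB'>67108864</memory>
  <memoryBacking>
    <source type='memfd'/>
    <access mode='shared'/>
  </memoryBacking>
</domain>"""

stripped = remove_memory_backing(example)
print(stripped)
```

This only touches the memory backing block. Any virtiofs share entries in the same XML would need separate attention, and the VM has to be fully shut down and restarted for the change to apply.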

-

Thanks. I'll just let this disk rebuild and keep an eye out to see if it happens again. I wish there was an easy way to see what file is associated with that sector to diagnose what might have happened. Do you know if there is a good way to do this that I can check after the disk rebuilds?

-

Hmm interesting. That's what I thought as well. Shouldn't Unraid control this though and ensure all other services are stopped before unmounting? What is the best practice for an array shutdown? Do I need to manually stop all docker containers first? I cannot think of anything else that would have been running during unmount except maybe the file integrity plugin for hashing.

-

I had Samba run into an issue and lock a temp file with DENY_ALL. The only way to resolve that is to restart Samba, so I decided to just shut down my VM and stop the array to restart it. Upon restarting the array I noticed disk1 was in an error state. I plan to just rebuild the disk because it looks healthy otherwise. Anyone know why this occurred? The syslog seems to show a read/write error during unmounting of disks... or am I reading the log incorrectly? Diagnostics attached. Any insight is appreciated. tower-diagnostics-20240411-2248.zip

-

Thanks for trying to help, but yeah I do have cores isolated from host and pinned to VM. It's one of the first things I adjusted. I even tried different combinations of cores isolated and pinned with the same results. BIOS updates are another issue... I tried that weeks ago and any BIOS update past the one I'm currently on simply doesn't work correctly. Cannot access UEFI at all. Oddly enough the firmware update must have worked since I can boot into my Windows NVME just fine, I just cannot access UEFI to change anything to get Unraid to boot from USB... so that's a no-go. In case you were curious: https://forum.asrock.com/forum_posts.asp?TID=32460&PID=114573

-

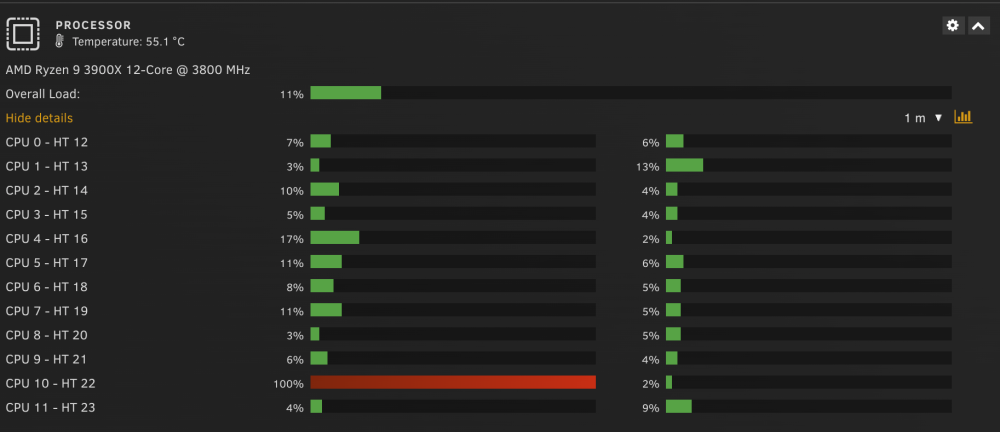

Upgraded to 6.12.9 - performance is even worse now with the same behavior of a single vcpu being used to 100% during a file transfer. Only about 45 MiB/s. Need more people to try this with AMD, so far I believe literally everyone else who has tried this is using Intel.

-

No idea. So far I've seen no one report any results using AMD though. So hopefully someone else can try. The CPU behavior is very weird. I followed every guide to the letter and everything else works perfectly fine.

-

Doh, you are right. I just removed the ^M characters at the end of each line and it worked. Speed is still terrible for me though. 60-70 MB/s max. A single vcpu gets utilized to 100% according to unraid dashboard.
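For anyone hitting the same thing: the ^M characters are carriage returns left over from Windows (CRLF) line endings, and they can stop a script from running on Linux. A minimal sketch of stripping them in Python, assuming the script is small enough to read into memory. The function name and sample content are made up for illustration.

```python
def strip_carriage_returns(data: bytes) -> bytes:
    """Convert Windows CRLF line endings to Unix LF line endings."""
    # An editor shows the carriage-return byte (0x0D) before each newline as ^M.
    return data.replace(b"\r\n", b"\n")


# Hypothetical script content saved with Windows line endings.
sample = b"#!/bin/bash\r\necho hello\r\n"
cleaned = strip_carriage_returns(sample)
print(cleaned)
```

To apply it to a real file, read the file in binary mode, pass the bytes through the function, and write the result back in binary mode so Python does not translate the line endings again.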

-

Just tried this method. I also get the same message: "virtiofsd died unexpectedly: No such process". The log says: "libvirt: error : libvirtd quit during handshake: Input/output error".

-

Thanks for the suggestion. Same issue though, ~40-60 MiB/s and a single cpu thread gets pegged to 100%. With emulated CPUs however the performance graph in Windows doesn't lock up. I think we just need more people trying this out with different hardware configurations. So far everyone I have asked uses Intel CPUs and it seems to work as expected.

-

Just for good measure, I spun up a completely new Windows 11 VM using a vdisk instead of NVME, same issue. Also it locks up user input on VNC while it's transferring.

-

Completely reinstalled Windows 11, exact same performance issues and weirdness with a single thread used at 100% and Windows performance graphs halting while a transfer is running. Yes I am using the Rust version. I will wait until other people with AMD CPUs test this out. I am completely out of ideas. Edit: Also confirmed that the regular non-Rust version has the exact same issue.

-

OK so I tried adjusting my CPU isolation and pinning and I got the speed up to 60 MiB/s consistently now by shifting all the CPUs for the VM to higher numbers and leaving lower numbers for Unraid. However, I also noticed some weird behavior. When I am copying to my VM's NVME from the virtiofs drive, all CPU graphs in Windows stop completely and then resume once the transfer is complete. I checked both task manager and process explorer. I also noticed that a single thread was at 100% whenever I am moving something. What is going on here? Is that normal?

-

Everything else with the VM appears to work just fine. I wiped out all old virtio-win drivers and guest tools and reinstalled. After a bit of googling I was finally able to completely uninstall everything without the installer error, so that's good. Also verified I have no old drivers and only the latest is used. Either way, still the same performance issue. I'm not completely reinstalling Windows just to mess with this. I'll just stick with Samba since it's way more performant in every scenario I've tested with the latest drivers.

-

I'm not familiar with driver store explorer... I'll look into that. I do have issues with installing new drivers. The installer always fails and has to rollback so I need to manually install each one.

-

Yes I do have a setup like yours: 16/24 are allocated to the VM. The Windows 10 VM I set up as a test only had 2/24 allocated and performed better (but still not great). I am out of ideas as to why my Windows 11 VM would perform so poorly... the only major difference is that it's running on a separate NVME instead of a vdisk on my cache pool. I also noticed worse performance of remote desktop (RDP) whenever VirtioFS is enabled and memorybacking configured. As soon as I disable these, RDP is much snappier.

-

New stable drivers seem fine. Speed is still terrible for me on Windows 11 at only 40 MiB/s or so. I tried to set up a clean Windows 10 VM and transfers are about 100 MiB/s for the exact same file. Samba on Windows 11 transfers this 2 GiB file at 650 MiB/s with explorer. No idea what could be causing the discrepancy, but glad it's at least stable even if I won't be using it.
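To put those numbers in perspective, here is a quick back-of-the-envelope calculation of how long the 2 GiB file takes at each rate. The throughput figures are the approximate ones quoted above, treated as constant for the whole transfer, which is a simplification.

```python
FILE_SIZE_MIB = 2 * 1024  # the 2 GiB test file, expressed in MiB

# Approximate throughput figures quoted in the post, in MiB/s.
rates = {
    "virtiofs, Windows 11": 40,
    "virtiofs, Windows 10": 100,
    "Samba, Windows 11": 650,
}

# Transfer time = size / rate, assuming the rate holds for the whole copy.
seconds = {name: FILE_SIZE_MIB / rate for name, rate in rates.items()}
for name, elapsed in seconds.items():
    print(f"{name}: {elapsed:.1f} s")
```

That works out to roughly 51 seconds over virtiofs on Windows 11 versus about 3 seconds over Samba for the same file.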

-

I do the same, Windows VM running on nvme with no vdisk. I also have 16 threads and 40 GB of RAM

-

So Yan confirmed the Windows drivers aren't optimized for performance... but that doesn't really explain the drastic difference between @mackid1993's results and mine. (400 MB/s vs my 60 MB/s)

-

@mackid1993 - I am using the latest WinFSP: 2.0.23075. It's possible I could have something wrong with my setup or misconfigured, but I'm not sure what that might be. I followed all the directions here and got it up and running properly. I am using your launcher script and that also works just fine, just lackluster performance is all. For my setup my SMB speeds are about what I would expect to/from my SSD cache.

-

@mackid1993 - Thanks for sharing. Your speeds are much better than mine, but still pretty poor compared to SMB. Were you transferring to/from spinning disks or from an SSD cache pool to your VM? I'm curious what speeds you get between a fast Unraid cache drive and your VM.

-

Yes I have - The rust version made no significant difference. Speeds are still 45-60 MB/s. On the plus side, the VM seems stable and hasn't locked up with the 100% cpu utilization issue. Note: I opened an issue here for more visibility beyond these forums: https://github.com/virtio-win/kvm-guest-drivers-windows/issues/1039

-

I'm trying out the new drivers to see if the VM freeze issue is resolved. However... is there a way to make the performance any better? Directory listings with tens of thousands of files are significantly faster than smb shares, but actual transfer speeds are terrible. I am getting about 45 MB/s with virtiofs vs 275 MB/s with samba shares. Do I have something misconfigured? I don't understand all the hype over virtiofs if this kind of performance is normal.

-

Windows VM doesn't start unless Force Stopped first

johnsanc replied to johnsanc's topic in VM Engine (KVM)

For anyone else running into this issue, I figured out how to fix it. In my case I was still using the old clover bootloader and my nvme controller was not bound at boot. So to fix I did:

1. Changed primary vDisk to none since I wanted to boot from the NVME
2. Went into the system devices profiler and bound my nvme controller at boot
3. Set the NVME to boot order of 1