
Posts by Jorgen


All this confusion and hair-pulling, and all I had to do was add the server IP to the "ignored addresses" value in the privoxy settings. And I'm sure this has been posted here already too! Time for a nap. I love this community.

Glad you worked it out!

It can be confusing with the two different methods of using the VPN tunnel, each one with its own quirks on how to set it up.

Sent from my iPhone using Tapatalk


-

Just poking the "wireguard" with a stick again. Also more than willing to contribute to some sort of "bounty" reward if it's a feature that's otherwise not seen as viable.

Wireguard is supported and has been for a while, but maybe I’m misunderstanding your question?


-

New to Jackett due to the tightening of iptables issue. Am I correct in assuming that if my indexer isn’t listed then this docker is of no use or could I still use it to route download clients thru? Using nzbplanet for nzb’s and rarbg for torrents.

On a side note, I was using Privoxy to route Sonarr, Radarr and Lidarr thru DelugeVPN. Adding the “Additional_Ports” variable for these dockers to DelugeVPN and ignoring my server’s IP address has restored the download client connection to DelugeVPN but not NZBgetVPN. Assuming I can’t make the same changes to NZBgetVPN because a docker update would be required to recognize the Additional Ports variable? Is there a fix to get NZBgetVPN working similar to DelugeVPN or should I bite the bullet and bind these containers to DelugeVPN?

I ended up adding all of the download apps in the delugeVPN network, including NzbGet (the non-VPN version from binhex). I am seeing slower download speeds for nzbget this way, but at least it’s working and all apps can talk to each other again.

Binhex is working on a secure solution to allow apps inside the VPN network to talk to apps outside it, so it might be possible to run NzbGet on the outside again in the future.

Your only other option is to configure sonarr/radarr to talk to NzbGet on its internal docker IP, e.g. 172.x.x.x, but beware that the actual IP is dynamic for each container and may change on restarts.

Edit: yeah, Jackett is of no use for NZBs; you need to set them up as separate indexers in radarr/sonarr. That shouldn't be a problem, since both apps should be able to talk to the internet freely over the VPN tunnel.

Edit2: sorry, ignore the first bit of my reply; you're using privoxy, not network binding. Sorry for the confusion.

-

Fair question - firewalld is my response. But I come from EL7/EL8 land, and firewalld replaced iptables there.

I see, learn something new every day. This container is based on Arch, which seems to support firewalld, but that's obviously up to binhex to decide. From all the effort he's put into iptables, I'd hazard a guess that he's not keen to change it anytime soon.

-

My Unraid server sits behind an edge firewall switch and a secondary firewall. Also - why is this container still using iptables?

Just out of curiosity, what do you think it should use instead of iptables? They seem well suited to the task at hand of stopping any data leaking outside the VPN tunnel?

-

15 hours ago, JimmyGerms said:

Second attempt at clarifying what I'm after haha.

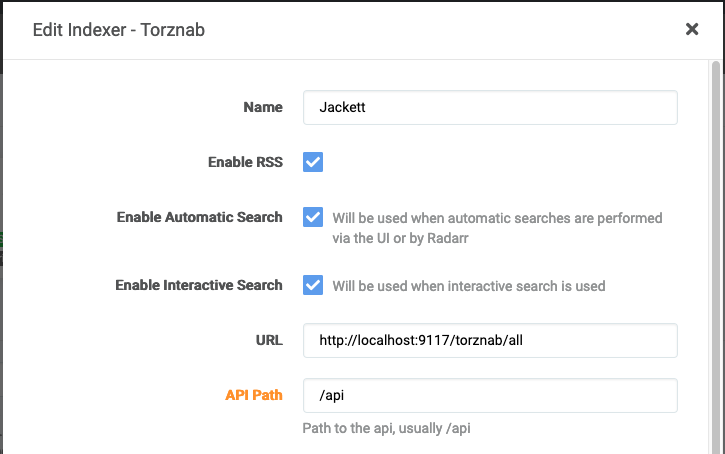

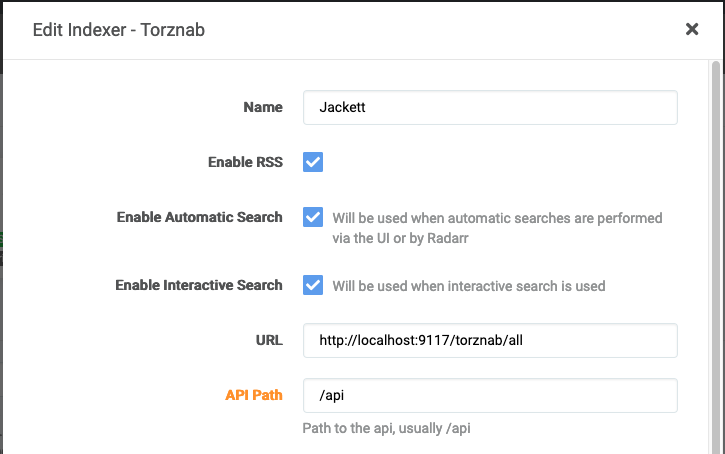

So, with the changes to the binhex VPN dockers I wanted to verify if I was utilizing Jackett properly. Sonarr and Radarr are currently configured to tunnel through the VPN and have been updated with the proper host setting: 'localhost'. Now, within Jackett these buttons are still using 192.168.1.x causing Sonarr and Radarr to error while grabbing indexers.

To fix this, within Sonarr/Radarr I edited what the "Copy Torznab Feed" gives me and replaced 192.168.1.x with 'localhost'. With this, both dockers pass while checking the indexers!

Is this the correct way to update these settings? Can I set something up within Jackett so I do not have to manually change the ip address when adding a new indexer?

Thanks again for your help and time. I'm learning quite a bit!

This doesn't answer your question directly, but in Sonarr/Radarr you can also set them up to use all your configured indexers in Jackett, see example from Radarr below. That way you only have to set up one indexer, once, in sonarr/radarr. If you add new indexers to Jackett, they will be automatically included by the other apps.

Doesn't work if you need different indexers for the different apps though...
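If you do stick with the manual approach from the quoted question, the edit amounts to swapping the host part of each copied feed URL for localhost, which only works because Sonarr/Radarr share the VPN container's network. A minimal Python sketch, assuming a feed URL shaped like Jackett's; the example address, port 9117 and indexer name are illustrative, not taken from this thread:

```python
from urllib.parse import urlsplit, urlunsplit


def to_localhost(feed_url: str) -> str:
    """Return feed_url with its host replaced by 'localhost', keeping scheme, port, path and query.

    Note: any user:password@ part of the original URL is dropped.
    """
    parts = urlsplit(feed_url)
    netloc = "localhost" if parts.port is None else f"localhost:{parts.port}"
    return urlunsplit((parts.scheme, netloc, parts.path, parts.query, parts.fragment))


# Example with a hypothetical URL as copied from "Copy Torznab Feed":
# to_localhost("http://192.168.1.10:9117/api/v2.0/indexers/example/results/torznab/")
```

The aggregate-indexer route described in the post avoids repeating this per indexer.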

-

On 2/27/2021 at 6:18 AM, abisai169 said:

Here is my dilemma. Up until the latest update I was running Radarr and Sonarr through DelugeVPN without issue. Both Radarr and Sonarr were able to communicate with my Plexserver (Host Network) and NZBGet (Bridge Network). After this recent update things broke.

I was able to fix things enough that I could communicate with the WebUI for both Radarr and Sonarr. However, I still can't communicate from Radarr/Sonarr to my Plexserver or NZBGet. I have zero desire to run either of these through the VPN. How can I maintain communication between all the containers running through deluge and the ones that don't?

I have the same problem: everything works as expected (after adding ADDITIONAL_PORTS and adjusting application settings to use localhost) except connecting to bridge containers from any of the containers with network binding to delugeVPN.

Jackett, Radarr and Sonarr are all bound to the DelugeVPN network.

Proxy/Privoxy is not used by any application.

NzbGet is using the normal bridge network.

I can access all application UIs and the VPN tunnel is up. Each application can communicate with the internet.

In Sonarr and Radarr:

- I can connect to all configured indexers, both Jackett (localhost) and nzbgeek directly (public dns name)

- I can connect to delugeVPN as a download client (using localhost)

- I CAN NOT connect to NzbGet as a download client using <unraidIP>:6790. Connection times out.

- I CAN connect to NzbGet using its docker bridge IP (172.x.x.x:6790)

It's my understanding that the docker bridge IP is dynamic and may change on container restart, so I don't really want to use that.

@binhex it seems like the new iptables tightening is preventing delugeVPN (and other containers sharing its network) from communicating with containers running on the bridge network on the same host?

Here's a curl output from the DelugeVPN console to the same NzbGet container, using the Unraid host IP (192.168.11.111) and the docker network IP (172.17.0.3):

```
sh-5.1# curl -v 192.168.11.111:6789
* Trying 192.168.11.111:6789...
* connect to 192.168.11.111 port 6789 failed: Connection timed out
* Failed to connect to 192.168.11.111 port 6789: Connection timed out
* Closing connection 0
curl: (28) Failed to connect to 192.168.11.111 port 6789: Connection timed out
sh-5.1# curl -v 172.17.0.3:6789
* Trying 172.17.0.3:6789...
* Connected to 172.17.0.3 (172.17.0.3) port 6789 (#0)
> GET / HTTP/1.1
> Host: 172.17.0.3:6789
> User-Agent: curl/7.75.0
> Accept: */*
>
* Mark bundle as not supporting multiuse
< HTTP/1.1 401 Unauthorized
< WWW-Authenticate: Basic realm="NZBGet"
< Connection: close
< Content-Type: text/plain
< Content-Length: 0
< Server: nzbget-21.0
<
* Closing connection 0
sh-5.1#
```

Edit: retested after resetting nzbget port numbers back to defaults. Raised issue on github: https://github.com/binhex/arch-delugevpn/issues/258
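The same reachability test can be scripted without curl. A minimal Python sketch, assuming a Python 3 interpreter is available wherever you run it (for example in the DelugeVPN container console); it only checks that a TCP connection opens, not that NzbGet answers HTTP:

```python
import socket


def can_connect(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port opens within `timeout` seconds."""
    try:
        # create_connection resolves the host and opens a TCP socket; closing it straight
        # away is enough, since we only care whether the handshake succeeds.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused connections, timeouts and unreachable networks all raise OSError subclasses.
        return False


# Example, using the addresses from the curl test above:
# can_connect("192.168.11.111", 6789)  # Unraid host IP
# can_connect("172.17.0.3", 6789)      # docker bridge IP
```

In the failing case described above, the host-IP check should come back False once the timeout expires, mirroring curl's error.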

-

4 hours ago, MammothJerk said:

Wait so to clarify:

for dockers to make use of the delugevpn privoxy (and even the download client for radarr/sonarr/lidarr) they must have "--net=container:Binhex-DelugeVPN" in their extra parameters?

No.

You can use privoxy from any other docker or computer on your network by simply configuring the proxy settings of the application/computer to point to the privoxy address:port. For example, you would do this under settings in the Radarr Web UI, or in the Firefox proxy settings on your normal PC.

But for dockers running on your Unraid server, like radarr/sonarr/lidarr, there is an alternative way. You can make the other docker use the same network as deluge by adding the net=container... into its extra parameters. This has the benefit that you are guaranteed that all of the docker application's traffic goes via the VPN. When using privoxy, only HTTP traffic is routed via the VPN, and only if the application itself has implemented the proxy function properly. But if you do it the net=container way, you shouldn't also use the proxy function in the application itself.

So one or the other, depending on your use case and needs, but not both.
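To illustrate why the proxy route depends on each application: a proxy is opt-in per client. A minimal Python sketch using the standard library's urllib, assuming Privoxy listens on its usual port 8118 at a hypothetical Unraid address 192.168.1.10:

```python
import urllib.request


def build_proxied_opener(proxy_host: str, proxy_port: int = 8118) -> urllib.request.OpenerDirector:
    """Build an opener that sends http:// and https:// requests through an HTTP proxy such as Privoxy."""
    proxy_url = f"http://{proxy_host}:{proxy_port}"
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


# Only requests made through this opener use the proxy; anything else in the same
# process or container still goes out directly. Hypothetical address:
# opener = build_proxied_opener("192.168.1.10")
# opener.open("http://example.com", timeout=10)
```

With net=container there is no such opt-in step: the app shares the VPN container's network stack, so all of its traffic takes the tunnel.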

-


I'm not familiar with PrivateVPN, so not sure how much help I can offer on this. Have you considered using PIA instead?

Thank you for your help,

As far as I understand, my main problem was not being able to port forward. So I searched and found PrivateVPN, which provides that option. I bought a 30-day trial just to try it with deluge, but again I'm out of luck. I changed the ovpn files and certificates, used your new name_servers and activated Strict_port_forward, but I'm still not able to download. There are probably more changes I should have made, but I'm not sure what to change without breaking the system completely.

OK, I tried to look into it more. As far as I understand, PrivateVPN offers "servers with OpenVPN TAP + UDP" for particular ovpn locations. When I try to use one of these ovpn files, these errors come up in the deluge log and prevent it from starting:

```
2021-01-04 17:20:34 WARNING: Compression for receiving enabled. Compression has been used in the past to break encryption. Sent packets are not compressed unless "allow-compression yes" is also set.
2021-01-04 17:21:39 --cipher is not set. Previous OpenVPN version defaulted to BF-CBC as fallback when cipher negotiation failed in this case. If you need this fallback please add '--data-ciphers-fallback BF-CBC' to your configuration and/or add BF-CBC to --data-ciphers.
2021-01-04 17:21:39 WARNING: file 'credentials.conf' is group or others accessible
2021-01-04 17:21:44 WARNING: --ns-cert-type is DEPRECATED. Use --remote-cert-tls instead.
```

I'm really not sure how to deal with these errors, or whether they are related at all.

If I choose an OpenVPN + TUN connection instead, all these errors go away, but again I can't increase my download speed.

Either way, I think we need to see more detailed logs at this point, can you follow this guide and post the results please? Remember to redact your user name and password from the logs before posting!

https://github.com/binhex/documentation/blob/master/docker/faq/help.md

-

3 hours ago, odyseus8 said:

1 - I don't know what to do in order to define the incoming port correctly. I didn't change any major settings. As far as I know Surfshark (the VPN service I use) doesn't support port forwarding, but when I change Strict_Port_Forward to "no", upload speeds are great but there are no download speeds. In supervisord.log it says it is listening on port 58846 (not sure if this is the incoming port or not).

This is almost certainly the main problem. If surfshark doesn't support port forwarding your speeds will be slow, sorry. Maybe someone else is using surfshark and can confirm if they have managed to get good speeds despite this?

Strict_port_forward is only used with port forwarding so you might as well leave it at "no"

3 hours ago, odyseus8 said:

4- I didn't install the itconfig plugin, but if it's a must I can do that.

It has definitely helped others, so it's worth a shot. But you really are fighting an uphill battle without port forwarding.

3 hours ago, odyseus8 said:

7- I'm using the default Name_Servers; as I mentioned, I'm not sure how to change or modify them. Should I just add ,8.8.8.8 at the end for Google DNS, or delete them all and only add one name?

...

P.s. I have noticed this error on deluge for most of the torrents, maybe its relevant. "Non-authoritative 'Host not found' (try again or check DNS setup)"

So this is worth pursuing for sure, given the error you're seeing. The defaults include PIA name servers, which I think have been deprecated by now. There are also other considerations, e.g. don't use Google; see Notes at the bottom of this page: https://github.com/binhex/arch-delugevpn

Try replacing the name servers with this:

84.200.69.80,37.235.1.174,1.1.1.1,37.235.1.177,84.200.70.40,1.0.0.1

If the error persists, it's likely something wrong with your DNS settings for unraid itself.
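Because the value is a single comma-separated list, a stray comma or a typo is easy to miss. A minimal Python sketch for checking a list before pasting it into the container's name server variable (assumed here to be NAME_SERVERS); it flags empty entries, non-IP entries and Google's public resolvers, per the advice above:

```python
import ipaddress
from typing import List

# Google Public DNS resolvers, which the post above advises against using.
GOOGLE_DNS = {"8.8.8.8", "8.8.4.4"}


def check_name_servers(value: str) -> List[str]:
    """Check a comma-separated name server list and return the problems found (empty if none)."""
    problems = []
    for entry in (part.strip() for part in value.split(",")):
        if not entry:
            problems.append("empty entry (doubled or trailing comma?)")
            continue
        try:
            ipaddress.ip_address(entry)  # raises ValueError for hostnames and typos
        except ValueError:
            problems.append(f"not an IP address: {entry!r}")
            continue
        if entry in GOOGLE_DNS:
            problems.append(f"Google DNS entry: {entry}")
    return problems


# Example: the suggested list above should produce no problems.
# check_name_servers("84.200.69.80,37.235.1.174,1.1.1.1,37.235.1.177,84.200.70.40,1.0.0.1")
```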

3 hours ago, odyseus8 said:

Also some of the torrents have Tracker Status: Error: "End of file" error

No idea about this one, sorry

-

2 hours ago, odyseus8 said:

First of all thanks binhex for such a good docker.

About my problem: I've started using a VPN (Surfshark to be exact) over the last couple of weeks. In the first week I didn't seem to have any problem with the connection, and especially not with the speed of the downloads. But for the last 2 weeks or so, whenever I enable the VPN my download speeds fall to 30-40 KiB/s, where they should be around 3 MiB/s. If I disable the VPN and restart the docker, the speed goes back to normal. If I then re-enable the VPN, speeds decrease once again. I also speedtested the same endpoints with the same VPN provider on my Windows computer and the speed results seem fine. I suppose there is something wrong in my preferences and/or config files. Can anyone offer any help? If any info is needed, please let me know. Thanks.

If you haven't done so already, work through all the suggestions under Q6 here: https://github.com/binhex/documentation/blob/master/docker/faq/vpn.md

-

2 hours ago, Nimrad said:

Mine looks similar:

```
[Interface]
PrivateKey = fffff
Address = 10.34.0.134/16
DNS = 10.35.53.1

[Peer]
PublicKey = fffff
AllowedIPs = 0.0.0.0/0,::/0
Endpoint = pvdata.host:3389
```

Ok, I'm really guessing here, @binhex will need to chime in with the real answer, but I think you need to:

1. Remove the IPv6 reference

2. Remove the DNS entry (maybe; it might already be ignored. Either way it would be better to move it to the DNS settings of the docker)

3. Add the wireguardup and down scripts

4. Ensure the endpoint address is correct. "pvdata.host" does not resolve to a public IP for me, and I'm pretty sure it needs to for wireguard to be able to connect to the endpoint and establish the tunnel.

5. Try removing the /16 suffix from the Address line

So apart from #4, try the below as wg0.conf. Although I'm pretty sure it will still fail because of #4.

```
[Interface]
PrivateKey = fffff
Address = 10.34.0.134
PostUp = '/root/wireguardup.sh'
PostDown = '/root/wireguarddown.sh'

[Peer]
PublicKey = fffff
AllowedIPs = 0.0.0.0/0
Endpoint = pvdata.host:3389
```
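The guessed edits are mechanical enough to script. A minimal Python sketch, assuming a plain wg0.conf with [Interface] and [Peer] sections: it keeps only IPv4 entries in Address and AllowedIPs, strips the prefix length from Address, drops the DNS line and adds the PostUp/PostDown hooks from the config above if none exist. It deliberately leaves Endpoint alone, since the right value has to come from the VPN provider, and these remain guesses rather than confirmed container requirements:

```python
def tidy_wg_conf(text: str) -> str:
    """Rewrite a wg0.conf body along the lines guessed above and return the new text."""
    hooks = ["PostUp = '/root/wireguardup.sh'", "PostDown = '/root/wireguarddown.sh'"]
    lines = [raw.strip() for raw in text.splitlines() if raw.strip()]
    added = any(line.lower().startswith("postup") for line in lines)
    out = []
    in_interface = False
    for line in lines:
        if line.startswith("["):
            # Leaving [Interface]: append the hooks at the end of that section.
            if in_interface and not added:
                out.extend(hooks)
                added = True
            in_interface = line.lower() == "[interface]"
            out.append(line)
            continue
        key, sep, value = line.partition("=")
        key = key.strip().lower()
        if sep and key == "dns":
            continue  # drop DNS here; set name servers on the container instead
        if sep and key in ("address", "allowedips"):
            # Keep IPv4 entries only: IPv6 entries such as ::/0 contain ':'.
            entries = [e.strip() for e in value.split(",") if ":" not in e]
            if key == "address":
                entries = [e.split("/")[0] for e in entries]  # drop e.g. the /16 suffix
            name = "Address" if key == "address" else "AllowedIPs"
            line = f"{name} = {','.join(entries)}"
        out.append(line)
    if in_interface and not added:
        out.extend(hooks)  # [Interface] was the last section in the file
    return "\n".join(out) + "\n"
```

Running it twice gives the same result, so it is safe to re-apply to an already edited file.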

-

On 1/2/2021 at 8:29 AM, Jorgen said:

Can you post your wg0.conf file (but redact any sensitive info)?

Which VPN provider are you using?

@Nimrad this is what the wg0.conf file looks like when using PIA. I assume it needs to have the same info when using other VPN providers as well.

The error in your log specifically states that the Endpoint line is missing from your .conf file, but I don't know what it needs to be set to for your provider/endpoint.

```
[Interface]
Address = 10.5.218.196
PrivateKey = <redacted>
PostUp = '/root/wireguardup.sh'
PostDown = '/root/wireguarddown.sh'

[Peer]
PublicKey = <redacted>
AllowedIPs = 0.0.0.0/0
Endpoint = au-sydney.privacy.network:1337
```
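Since the log complains about a missing Endpoint line, a quick check for absent keys can help before restarting the container. A minimal Python sketch; the required-key list is an assumption modelled on the PIA example, not the WireGuard specification (plain WireGuard treats Endpoint as optional on a peer, but a client needs it to know where to connect):

```python
from typing import Dict, List, Set

# Keys this sketch expects, modelled on the PIA example (an assumption, not the spec).
REQUIRED: Dict[str, List[str]] = {
    "interface": ["PrivateKey", "Address"],
    "peer": ["PublicKey", "AllowedIPs", "Endpoint"],
}


def missing_keys(text: str) -> List[str]:
    """Return 'Section.Key' names from REQUIRED that a wg0.conf body does not set."""
    found: Dict[str, Set[str]] = {}
    section = None
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        if line.startswith("[") and line.endswith("]"):
            section = line[1:-1].strip().lower()
            found.setdefault(section, set())
            continue
        key, sep, _ = line.partition("=")
        if sep and section is not None:
            found[section].add(key.strip().lower())
    missing = []
    for sec, keys in REQUIRED.items():
        for key in keys:
            if key.lower() not in found.get(sec, set()):
                missing.append(f"{sec.capitalize()}.{key}")
    return missing
```

An empty result means every expected key is present; it says nothing about whether the values themselves are right.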

-

Nobody knows what this last error means? Are there any other logs I could explore to troubleshoot this? As mentioned the config file works fine in windows.

Can you post your wg0.conf file (but redact any sensitive info)?

Which VPN provider are you using?

-

25 minutes ago, jay010101 said:

The fix above worked great for me. Thank you for the post. I'm not sure if I did something wrong in the setup of the container?

It looks like the Controller gave the wrong IP to the AP. It had my container IP 172.x.x.x, not the correct 192.168.x.x of the unRaid server.

Should the Unifi container be a Bridge or a Host under Network?

EDIT: I was poking around the Unifi software and saw the settings below for "override inform host". Is this related? (see below image)

It should be Bridge network, and you have already found the correct application setting to fix AP auto-adoption when running inside a Docker container (in Bridge mode).

Set Controller Hostname/IP to the IP of your Unraid server, then tick the box next to "Override inform host with controller hostname/IP".

See https://github.com/linuxserver/docker-unifi-controller/#application-setup for more details, especially this:

Application Setup

The webui is at https://ip:8443, setup with the first run wizard.

For Unifi to adopt other devices, e.g. an Access Point, it is required to change the inform ip address. Because Unifi runs inside Docker by default it uses an ip address not accessible by other devices. To change this go to Settings > Controller > Controller Settings and set the Controller Hostname/IP to an ip address accessible by other devices.

-


Hey,

I did the same thing earlier with the same result.

I tried deleting the Big Sur image files and changing it to method 2 which then grabbed the correct Big Sur image file from Apple.

So yeah, I'd say workaround-able bug to be fixed when there is time to.

Thanks for this, just confirming that method 2 pulls down the correct installer for me as well.

-

@SpaceInvaderOne I just pulled down your latest container, and when I try to install Big Sur it actually runs the Catalina installer.

I've deleted everything and started over a few times (with unraid reboot in between for good measure) with the same result, is there a bug in the latest image?

The installer is correctly named BigSur-install.img, but when you run it from the new VM it is definitely trying to install Catalina.

-

Would that mean these ports are likely blocked on the tracker end?

Possibly, not sure how you would find out though. Can you ask the tracker?

-

I don't know/understand enough about these ports to know what makes one work vs another. Any tips on how I could fix this in the settings so it's no longer like rolling dice?

Thanks!

I don’t think you can, sorry.

The port is randomly given out by PIA from its pool for the particular server you are connecting to.

The container uses a script that asks for a new (random) port on each reconnection to the VPN server.

-

with the caveat that you wouldn't be able to mount that photo library remotely to use on a physical Mac (though iCloud Photo Library would take care of this; you could also potentially create a share in the Mac VM to then open the library on another mac).

Using iCloud is the way to go in my experience. Using the same library from two different Macs is not safe. Apple recommends against it, and there are many reports of corruption from users doing it.

An alternative would be to share the library from within Photos on the VM. Other macs can then access it in read-only mode on the same network.

-

Couldn't you write a launchd script that runs every night at 2am, etc.? One that simply rsyncs your photo folder to a share on your Unraid NAS?

For backups, yes, if you have enough space on your Mac to store the full library in the first place (I don't). There's also a live database in the folder structure, so syncing that without corruption will need consideration.

Probably easier to use Time Machine instead of a custom script, as Apple have already worked it out for you.

-

Being able to edit the VM in the Unraid VM manager: add cores, RAM, GPU, USB controller etc. without having to edit the XML afterwards.

Should cut down on the support requests in this thread...

Just out of interest, how did you manage to do this? I thought this was a limitation in unraid’s VM implementation?

-

6 hours ago, nekromantik said:

how do you guys stop the MediaCovers dir in app config filling up with posters?

as this is not mapped to a share it takes up space on my docker.img file

this is on v3

My MediaCover directory is in appdata on my cache disk, not inside the docker.img. As far as I know I haven't done anything special to put it there, wasn't even aware of it until I saw your post.

It should be inside /config from the container perspective, which should be mapped to your appdata share.

-

I’m successfully doing exactly this, using a vdisk on a user share added to a Mac VM XML as a disk. Have been running for 1-2 years on two separate VMs without a single problem, one for me, one for the wife.

I use iCloud Photo Library and only sync the latest photos (or whatever the OS thinks I can afford to store locally) to the local storage on my phone, laptop and iPad. I can still access all photos, and if the high res original is not on the device it will sync it down from iCloud automatically when required.

The VM has a full copy of the library, you know just in case iCloud loses all my data. I only spin up the VM once a week to sync down the latest photos.

I also use time machine in the VM to backup the whole photo library to another array disk. I really don’t want to lose my photos. Or my wife’s, which would be much worse. I figure cloud + 2 local copies should be enough.

Edit: just realized it's actually not exactly the same. I don't mount the disk image from the share inside the VM; I mount it as an extra disk via the VM XML. I think I decided it was easier and more reliable to get the disk mounted this way, since I start and stop the VM automatically on a schedule.

-


[Support] binhex - DelugeVPN (in Docker Containers)

If you are using a proxy in the app settings of radarr/sonarr, you can't check from the container console whether they are using the VPN or not.

The console works on a OS level in the container, and the OS is unaware of the proxy that the apps are using.

I don’t know how to check that the app itself is actually using the VPN via the proxy, maybe someone else has a solution for that.
