Posts: 68


Everything posted by Mobius71

-

So I somehow fixed this, but I'm not 100% sure how. To document it for anyone else who runs into this: I changed a few things to try to pinpoint the issue by process of elimination.

I reduced the RAM allocation by a few GB. I also noticed I could change the machine type from i440fx-7.1 to i440fx-7.2. It had been set to i440fx-7.1 and had worked without issue, but I figured, eh, why not.

I also saw that the disk target bus for the VM image was set to virtio instead of SATA, so I changed it back to SATA. It had originally been SATA, but I switched it at some point while trying to get the VM to start.

Since the VM now works, I didn't want to muck about with it any more. So again, I'm not sure which change did it, but as of now all is well.
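Both settings changed above, the machine type and the disk target bus, live in the VM's libvirt domain XML (the XML posted in the thread). You can compare them before and after a change. Here is a minimal sketch, assuming you have copied that XML into a string. It uses Python's standard-library ElementTree to pull out both values. `summarize_domain` and `SAMPLE` are illustrative names, not part of Unraid or libvirt.

```python
import xml.etree.ElementTree as ET


def summarize_domain(xml_text):
    """Return the machine type and (device, target dev, bus) for each disk."""
    root = ET.fromstring(xml_text)
    os_type = root.find("./os/type")
    machine = os_type.get("machine") if os_type is not None else None
    disks = []
    for disk in root.findall("./devices/disk"):
        target = disk.find("target")
        if target is not None:
            disks.append((disk.get("device"), target.get("dev"), target.get("bus")))
    return {"machine": machine, "disks": disks}


# Trimmed excerpt of the Windows 10 domain XML posted in this thread.
SAMPLE = """<domain type='kvm'>
  <os><type arch='x86_64' machine='pc-i440fx-7.1'>hvm</type></os>
  <devices>
    <disk type='file' device='disk'><target dev='hdc' bus='virtio'/></disk>
    <disk type='file' device='cdrom'><target dev='hdb' bus='sata'/></disk>
    <disk type='block' device='disk'><target dev='hdd' bus='sata'/></disk>
  </devices>
</domain>"""

if __name__ == "__main__":
    info = summarize_domain(SAMPLE)
    print("machine:", info["machine"])
    for device, dev, bus in info["disks"]:
        print(f"{device} {dev}: bus={bus}")
```

On this excerpt it reports `pc-i440fx-7.1` and shows the vdisk (`hdc`) on `virtio`, which is the setting that was switched back to SATA.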

-

The VM can't run at all, so no processes are running in the VM. Nothing new was introduced into the VM before the system shutdown, and it was previously starting reliably every time. Also, as far as I can see, no backups are running that would affect the VM.

-

So I powered down and unplugged my server to do some light dusting and whatnot. When I powered it back on, I started getting the following when trying to start my Win 10 VM:

```
2024-02-26T15:14:34.876523Z qemu-system-x86_64: terminating on signal 15 from pid 15338 (/usr/sbin/libvirtd)
2024-02-26 15:14:36.404+0000: shutting down, reason=shutdown
```

Here's my XML:

```xml
<?xml version='1.0' encoding='UTF-8'?>
<domain type='kvm'>
  <name>Windows 10</name>
  <uuid>dc3ec2d9-0608-7d4d-0a9e-c92902f75358</uuid>
  <metadata>
    <vmtemplate xmlns="unraid" name="Windows 10" icon="windows.png" os="windows10"/>
  </metadata>
  <memory unit='KiB'>24641536</memory>
  <currentMemory unit='KiB'>24641536</currentMemory>
  <memoryBacking>
    <nosharepages/>
  </memoryBacking>
  <vcpu placement='static'>4</vcpu>
  <cputune>
    <vcpupin vcpu='0' cpuset='8'/>
    <vcpupin vcpu='1' cpuset='9'/>
    <vcpupin vcpu='2' cpuset='10'/>
    <vcpupin vcpu='3' cpuset='11'/>
  </cputune>
  <os>
    <type arch='x86_64' machine='pc-i440fx-7.1'>hvm</type>
    <loader readonly='yes' type='pflash'>/usr/share/qemu/ovmf-x64/OVMF_CODE-pure-efi.fd</loader>
    <nvram>/etc/libvirt/qemu/nvram/dc3ec2d9-0608-7d4d-0a9e-c92902f75358_VARS-pure-efi.fd</nvram>
    <smbios mode='host'/>
  </os>
  <features>
    <acpi/>
    <apic/>
  </features>
  <cpu mode='host-passthrough' check='none' migratable='on'>
    <topology sockets='1' dies='1' cores='2' threads='2'/>
    <cache mode='passthrough'/>
    <feature policy='require' name='topoext'/>
  </cpu>
  <clock offset='localtime'>
    <timer name='rtc' tickpolicy='catchup'/>
    <timer name='pit' tickpolicy='delay'/>
    <timer name='hpet' present='no'/>
  </clock>
  <on_poweroff>destroy</on_poweroff>
  <on_reboot>restart</on_reboot>
  <on_crash>restart</on_crash>
  <devices>
    <emulator>/usr/local/sbin/qemu</emulator>
    <disk type='file' device='disk'>
      <driver name='qemu' type='raw' cache='writeback'/>
      <source file='/mnt/disks/S1SMNSAFC04456N/Windows 10/vdisk1.img'/>
      <target dev='hdc' bus='virtio'/>
      <serial>vdisk1</serial>
      <boot order='1'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x05' function='0x0'/>
    </disk>
    <disk type='file' device='cdrom'>
      <driver name='qemu' type='raw'/>
      <source file='/mnt/user/isos/virtio-win-0.1.240-1.iso'/>
      <target dev='hdb' bus='sata'/>
      <readonly/>
      <address type='drive' controller='0' bus='0' target='0' unit='1'/>
    </disk>
    <disk type='block' device='disk'>
      <driver name='qemu' type='raw' cache='writeback'/>
      <source dev='/dev/disk/by-id/nvme-CT1000P3PSSD8_2324E6E3D215-part2'/>
      <target dev='hdd' bus='sata'/>
      <serial>vdisk2</serial>
      <address type='drive' controller='0' bus='0' target='0' unit='3'/>
    </disk>
    <controller type='sata' index='0'>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x03' function='0x0'/>
    </controller>
    <controller type='virtio-serial' index='0'>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x04' function='0x0'/>
    </controller>
    <controller type='pci' index='0' model='pci-root'/>
    <controller type='usb' index='0' model='qemu-xhci' ports='15'>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x07' function='0x0'/>
    </controller>
    <interface type='bridge'>
      <mac address='52:54:00:92:2f:36'/>
      <source bridge='br0'/>
      <model type='virtio-net'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x0'/>
    </interface>
    <serial type='pty'>
      <target type='isa-serial' port='0'>
        <model name='isa-serial'/>
      </target>
    </serial>
    <console type='pty'>
      <target type='serial' port='0'/>
    </console>
    <channel type='unix'>
      <target type='virtio' name='org.qemu.guest_agent.0'/>
      <address type='virtio-serial' controller='0' bus='0' port='1'/>
    </channel>
    <input type='mouse' bus='ps2'/>
    <input type='keyboard' bus='ps2'/>
    <audio id='1' type='none'/>
    <hostdev mode='subsystem' type='pci' managed='yes'>
      <driver name='vfio'/>
      <source>
        <address domain='0x0000' bus='0x09' slot='0x00' function='0x0'/>
      </source>
      <rom file='/mnt/disk1/isos/vbios/MSI_2070_Super_Ventus_OC_vbios.rom'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x06' function='0x0'/>
    </hostdev>
    <hostdev mode='subsystem' type='pci' managed='yes'>
      <driver name='vfio'/>
      <source>
        <address domain='0x0000' bus='0x09' slot='0x00' function='0x1'/>
      </source>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x08' function='0x0'/>
    </hostdev>
    <hostdev mode='subsystem' type='pci' managed='yes'>
      <driver name='vfio'/>
      <source>
        <address domain='0x0000' bus='0x09' slot='0x00' function='0x2'/>
      </source>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x09' function='0x0'/>
    </hostdev>
    <hostdev mode='subsystem' type='pci' managed='yes'>
      <driver name='vfio'/>
      <source>
        <address domain='0x0000' bus='0x09' slot='0x00' function='0x3'/>
      </source>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x0a' function='0x0'/>
    </hostdev>
    <memballoon model='none'/>
  </devices>
</domain>
```

It will look like it's starting up, but after about a minute it'll shut down. I've tried checking all the VM settings, the device groups for passthrough, and the libvirt log as well. The log is always blank, even after I turned debug logging on. Is my libvirt img jacked up maybe? I'm kinda at the end of what to do on this, and all the Google results have led me to dead ends. I've attached diagnostics. Any help would be appreciated. tower-diagnostics-20240226-1018.zip
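When a passthrough VM stops booting after hardware has been moved around, one thing worth ruling out is whether the host PCI addresses in the `<hostdev>` entries still exist. For example, the two XML dumps on this page place the GPU at host bus 0x09 and 0x08 respectively. Here is a minimal sketch, assuming the standard Linux sysfs layout where each PCI device appears as `/sys/bus/pci/devices/<domain:bus:slot.function>`. The helper names are hypothetical.

```python
import os
import xml.etree.ElementTree as ET


def hostdev_addresses(xml_text):
    """Return host PCI addresses of <hostdev type='pci'> entries as DDDD:BB:SS.F."""
    root = ET.fromstring(xml_text)
    addresses = []
    for hostdev in root.findall("./devices/hostdev[@type='pci']"):
        src = hostdev.find("./source/address")
        if src is None:
            continue
        # libvirt writes these as hex strings such as '0x09'; int(..., 16) accepts the 0x prefix.
        domain, bus, slot, func = (int(src.get(k), 16) for k in ("domain", "bus", "slot", "function"))
        addresses.append(f"{domain:04x}:{bus:02x}:{slot:02x}.{func:x}")
    return addresses


def missing_on_host(addresses, sysfs_dir="/sys/bus/pci/devices"):
    """Return the addresses that have no matching entry under sysfs_dir."""
    return [a for a in addresses if not os.path.exists(os.path.join(sysfs_dir, a))]


# Trimmed excerpt: the GPU's first two functions from the posted XML.
SAMPLE = """<domain type='kvm'><devices>
  <hostdev mode='subsystem' type='pci' managed='yes'>
    <source><address domain='0x0000' bus='0x09' slot='0x00' function='0x0'/></source>
  </hostdev>
  <hostdev mode='subsystem' type='pci' managed='yes'>
    <source><address domain='0x0000' bus='0x09' slot='0x00' function='0x1'/></source>
  </hostdev>
</devices></domain>"""

if __name__ == "__main__":
    wanted = hostdev_addresses(SAMPLE)
    print("hostdev addresses:", wanted)
    print("not present on this host:", missing_on_host(wanted))
```

An address that exists but now belongs to a different device would not be caught here. Comparing against `lspci` output is still worthwhile.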

-

At some point I noticed that Plex, Jellyfin, and some other Docker apps weren't working properly. I went through my troubleshooting routine and noticed that, somehow, all the folders in appdata had their owner changed to haldaemon. I was able to fix the issue by changing the owner and permissions back to nobody:users. I was wondering whether anyone has had this happen to them before, or knows why it would happen?
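To spot this kind of ownership drift before containers start misbehaving, you can walk the appdata folder and list everything not owned by the expected user and group. Here is a minimal sketch. It assumes appdata lives at `/mnt/user/appdata` and that the expected owner is `nobody:users`, as described above. `wrong_owner` is an illustrative name. The sketch only reports; fixing a path would mean calling `os.chown(path, uid, gid)` as root.

```python
import grp
import os
import pwd

APPDATA = "/mnt/user/appdata"  # assumed appdata location; adjust to your setup


def wrong_owner(root, uid, gid):
    """Return the paths under root (root included) whose owner or group differs from uid/gid."""
    bad = []
    for dirpath, dirnames, filenames in os.walk(root):
        # Real subdirectories are checked when os.walk yields them as dirpath;
        # symlinked ones appear only in dirnames, so check those here.
        links = [n for n in dirnames if os.path.islink(os.path.join(dirpath, n))]
        for path in [dirpath] + [os.path.join(dirpath, n) for n in filenames + links]:
            st = os.lstat(path)  # lstat: inspect symlinks themselves, not their targets
            if st.st_uid != uid or st.st_gid != gid:
                bad.append(path)
    return bad


if __name__ == "__main__" and os.path.isdir(APPDATA):
    uid = pwd.getpwnam("nobody").pw_uid
    gid = grp.getgrnam("users").gr_gid
    for path in wrong_owner(APPDATA, uid, gid):
        print(path)
```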

-

I recently upgraded my BIOS to get an NVMe drive working on my system. Apparently something changed with IOMMU, so a SATA controller is now getting passed to vfio, which messes with the array. I've tried starting in safe mode, but I cannot edit the VM config. As soon as I disable VMs in the VM Manager and reboot, everything else starts and runs as normal. I can't even set the VM to not autostart, as it won't let me save changes to the VM config. Is there any other way to edit the VM XML when VMs are disabled?
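Separately from the XML question, you can confirm from the host which devices vfio-pci has actually claimed after the BIOS update, and whether a SATA controller is among them. This only reads sysfs, so it works with the VM service disabled. Here is a minimal sketch, assuming the standard Linux sysfs layout. In that layout, each device bound to a driver appears as an entry named by its PCI address under `/sys/bus/pci/drivers/<driver>/`, and exposes its class code in a `class` file. PCI class `0x0106xx` is a SATA controller. The function names are illustrative.

```python
import os
import re

# PCI address such as 0000:03:00.0 (domain:bus:slot.function).
BDF = re.compile(r"^[0-9a-f]{4}:[0-9a-f]{2}:[0-9a-f]{2}\.[0-7]$")
SATA_CLASS = "0x0106"  # base class 0x01 (mass storage), subclass 0x06 (SATA)


def vfio_bound_devices(sysfs_root="/sys"):
    """Return {pci_address: class_code} for devices currently bound to vfio-pci."""
    driver_dir = os.path.join(sysfs_root, "bus", "pci", "drivers", "vfio-pci")
    if not os.path.isdir(driver_dir):
        return {}  # vfio-pci not loaded, or nothing bound yet
    devices = {}
    for name in sorted(os.listdir(driver_dir)):
        if BDF.match(name):  # skip control files such as bind, unbind, new_id
            with open(os.path.join(driver_dir, name, "class")) as fh:
                devices[name] = fh.read().strip()
    return devices


def sata_controllers(devices):
    """Pick out the SATA controllers from a {pci_address: class_code} mapping."""
    return [addr for addr, cls in devices.items() if cls.startswith(SATA_CLASS)]


if __name__ == "__main__":
    bound = vfio_bound_devices()
    for addr, cls in bound.items():
        print(addr, cls)
    print("SATA controllers bound to vfio-pci:", sata_controllers(bound) or "none")
```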

-

[ Solved ] Help - Wonky behavior after power off and reboot.

Mobius71 replied to Mobius71's topic in General Support

Yep, that fixed it! Thanks again, JorgeB! -

[ Solved ] Help - Wonky behavior after power off and reboot.

Mobius71 replied to Mobius71's topic in General Support

Oh man, I don't know how I didn't catch that. I'll sort it out when I can get back in front of everything later this afternoon and let you know how it goes. Thanks, JorgeB! -

[ Solved ] Help - Wonky behavior after power off and reboot.

Mobius71 replied to Mobius71's topic in General Support

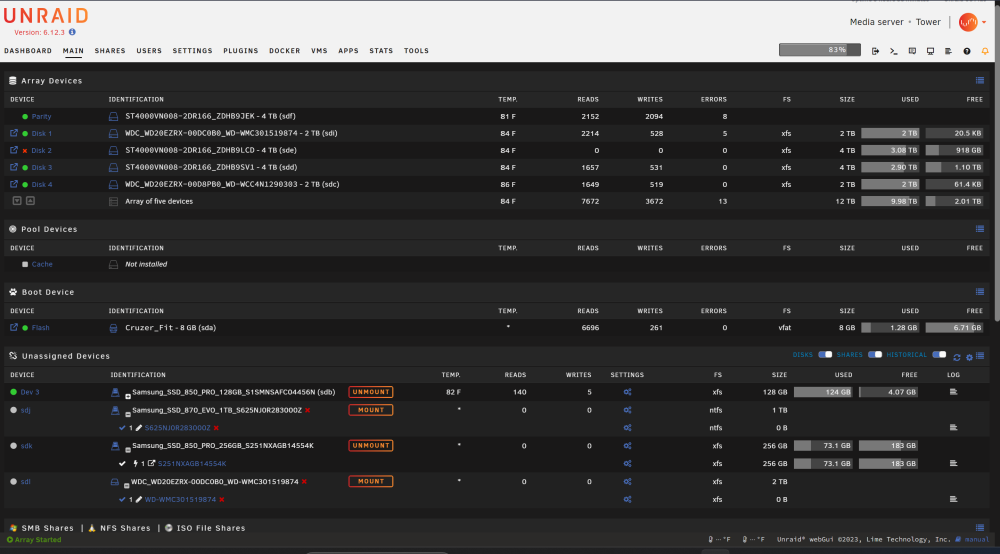

Had to reboot several times to get the VMs page to show up, but here it is:

```xml
<?xml version='1.0' encoding='UTF-8'?>
<domain type='kvm'>
  <name>Windows 10</name>
  <uuid>6fe4dea8-2e66-4620-f656-86e038845777</uuid>
  <metadata>
    <vmtemplate xmlns="unraid" name="Windows 10" icon="windows.png" os="windows10"/>
  </metadata>
  <memory unit='KiB'>25165824</memory>
  <currentMemory unit='KiB'>25165824</currentMemory>
  <memoryBacking>
    <nosharepages/>
  </memoryBacking>
  <vcpu placement='static'>6</vcpu>
  <cputune>
    <vcpupin vcpu='0' cpuset='9'/>
    <vcpupin vcpu='1' cpuset='21'/>
    <vcpupin vcpu='2' cpuset='10'/>
    <vcpupin vcpu='3' cpuset='22'/>
    <vcpupin vcpu='4' cpuset='11'/>
    <vcpupin vcpu='5' cpuset='23'/>
  </cputune>
  <os>
    <type arch='x86_64' machine='pc-i440fx-5.1'>hvm</type>
    <loader readonly='yes' type='pflash'>/usr/share/qemu/ovmf-x64/OVMF_CODE-pure-efi.fd</loader>
    <nvram>/etc/libvirt/qemu/nvram/6fe4dea8-2e66-4620-f656-86e038845777_VARS-pure-efi.fd</nvram>
    <smbios mode='host'/>
  </os>
  <features>
    <acpi/>
    <apic/>
  </features>
  <cpu mode='host-passthrough' check='none' migratable='on'>
    <topology sockets='1' dies='1' cores='3' threads='2'/>
    <cache mode='passthrough'/>
    <feature policy='require' name='topoext'/>
  </cpu>
  <clock offset='localtime'>
    <timer name='rtc' tickpolicy='catchup'/>
    <timer name='pit' tickpolicy='delay'/>
    <timer name='hpet' present='no'/>
  </clock>
  <on_poweroff>destroy</on_poweroff>
  <on_reboot>restart</on_reboot>
  <on_crash>restart</on_crash>
  <devices>
    <emulator>/usr/local/sbin/qemu</emulator>
    <disk type='file' device='disk'>
      <driver name='qemu' type='raw' cache='writeback'/>
      <source file='/mnt/disks/S1SMNSAFC04456N/Windows 10/vdisk1.img'/>
      <target dev='hdc' bus='virtio'/>
      <boot order='1'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x05' function='0x0'/>
    </disk>
    <disk type='file' device='cdrom'>
      <driver name='qemu' type='raw'/>
      <source file='/mnt/user/isos/virtio-win-0.1.190-1.iso'/>
      <target dev='hdb' bus='sata'/>
      <readonly/>
      <address type='drive' controller='0' bus='0' target='0' unit='1'/>
    </disk>
    <disk type='block' device='disk'>
      <driver name='qemu' type='raw' cache='writeback'/>
      <source dev='/dev/disk/by-id/ata-Samsung_SSD_870_EVO_1TB_S625NJ0R283000Z'/>
      <target dev='hdd' bus='sata'/>
      <address type='drive' controller='0' bus='0' target='0' unit='3'/>
    </disk>
    <controller type='usb' index='0' model='qemu-xhci' ports='15'>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x07' function='0x0'/>
    </controller>
    <controller type='pci' index='0' model='pci-root'/>
    <controller type='sata' index='0'>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x04' function='0x0'/>
    </controller>
    <controller type='virtio-serial' index='0'>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x03' function='0x0'/>
    </controller>
    <interface type='bridge'>
      <mac address='52:54:00:8a:e4:30'/>
      <source bridge='br0'/>
      <model type='virtio-net'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x0'/>
    </interface>
    <serial type='pty'>
      <target type='isa-serial' port='0'>
        <model name='isa-serial'/>
      </target>
    </serial>
    <console type='pty'>
      <target type='serial' port='0'/>
    </console>
    <channel type='unix'>
      <target type='virtio' name='org.qemu.guest_agent.0'/>
      <address type='virtio-serial' controller='0' bus='0' port='1'/>
    </channel>
    <input type='tablet' bus='usb'>
      <address type='usb' bus='0' port='1'/>
    </input>
    <input type='mouse' bus='ps2'/>
    <input type='keyboard' bus='ps2'/>
    <audio id='1' type='none'/>
    <hostdev mode='subsystem' type='pci' managed='yes'>
      <driver name='vfio'/>
      <source>
        <address domain='0x0000' bus='0x08' slot='0x00' function='0x0'/>
      </source>
      <rom file='/mnt/user/isos/vbios/MSI_2070_Super_Ventus_OC_vbios.rom'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x06' function='0x0'/>
    </hostdev>
    <hostdev mode='subsystem' type='pci' managed='yes'>
      <driver name='vfio'/>
      <source>
        <address domain='0x0000' bus='0x08' slot='0x00' function='0x1'/>
      </source>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x08' function='0x0'/>
    </hostdev>
    <hostdev mode='subsystem' type='pci' managed='yes'>
      <driver name='vfio'/>
      <source>
        <address domain='0x0000' bus='0x08' slot='0x00' function='0x2'/>
      </source>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x09' function='0x0'/>
    </hostdev>
    <hostdev mode='subsystem' type='pci' managed='yes'>
      <driver name='vfio'/>
      <source>
        <address domain='0x0000' bus='0x08' slot='0x00' function='0x3'/>
      </source>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x0a' function='0x0'/>
    </hostdev>
    <memballoon model='none'/>
  </devices>
</domain>
```

Also wanted to note that initially, after a restart, my shares show up. After a couple of minutes they all disappear and Unraid says I have zero shares. -

[ Solved ] Help - Wonky behavior after power off and reboot.

Mobius71 replied to Mobius71's topic in General Support

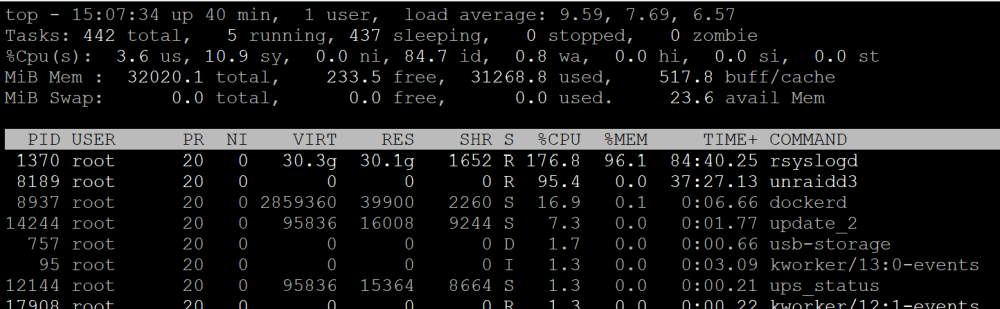

I couldn't access the VM config to turn off autostart until I killed rsyslogd. After that, the GUI began behaving much more normally. I turned off autostart for the VM and rebooted the server. New diagnostics file attached. I'm still having most or all of the same issues as before. The log immediately fills up with "Tower kernel: md: disk0 read error". tower-diagnostics-20230825-1801.zip -
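When the log is flooding like this, a quick tally of which array disks the `md: diskN read error` lines name can help narrow down where to look. Here is a minimal sketch, assuming the syslog is at `/var/log/syslog` and that the messages contain the text quoted above. `count_read_errors` is an illustrative name.

```python
import os
import re
from collections import Counter

SYSLOG = "/var/log/syslog"  # assumed syslog location
READ_ERROR = re.compile(r"md: (disk\d+) read error")


def count_read_errors(lines):
    """Count 'md: diskN read error' lines per disk."""
    counts = Counter()
    for line in lines:
        match = READ_ERROR.search(line)
        if match:
            counts[match.group(1)] += 1
    return counts


if __name__ == "__main__" and os.access(SYSLOG, os.R_OK):
    with open(SYSLOG, errors="replace") as fh:
        for disk, n in count_read_errors(fh).most_common():
            print(f"{disk}: {n} read errors")
```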

[ Solved ] Help - Wonky behavior after power off and reboot.

Mobius71 replied to Mobius71's topic in General Support

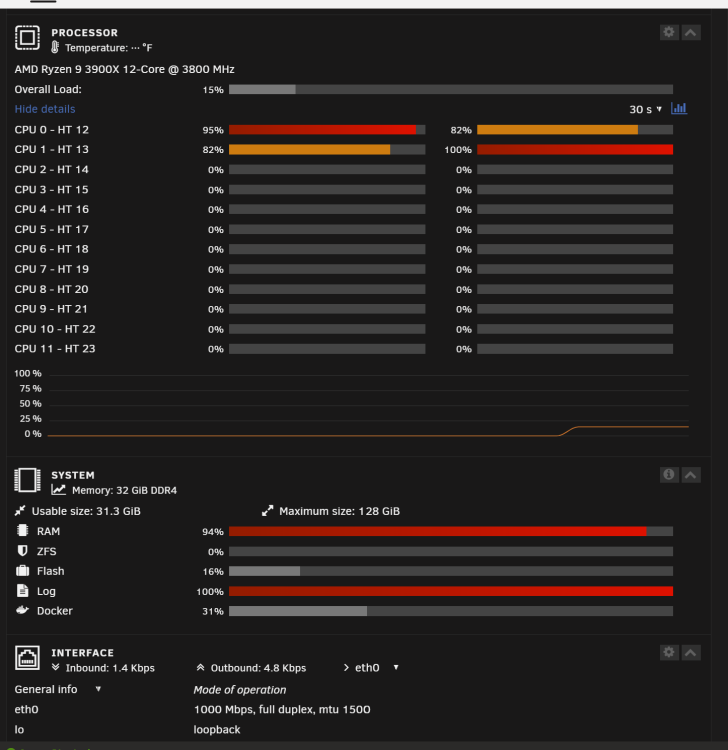

I'm also seeing high memory and CPU usage. I have those 4 cores locked to the OS so I can use the rest elsewhere. The log is also at 100%, so it looks like syslog is going crazy and eating up resources. Gonna shut things down for now. -
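To see how full the log filesystem is and which files are filling it, here is a sketch using only the standard library. It assumes the logs live under `/var/log`. `log_usage` and `largest_files` are illustrative names.

```python
import os
import shutil

LOG_DIR = "/var/log"  # assumed log location


def log_usage(path=LOG_DIR):
    """Percentage of the filesystem holding `path` that is in use."""
    usage = shutil.disk_usage(path)
    return 100.0 * usage.used / usage.total if usage.total else 0.0


def largest_files(path=LOG_DIR, count=5):
    """Return the `count` largest files under `path` as (size_bytes, path) pairs."""
    sizes = []
    for dirpath, _dirnames, filenames in os.walk(path):
        for name in filenames:
            full = os.path.join(dirpath, name)
            try:
                sizes.append((os.lstat(full).st_size, full))
            except OSError:
                continue  # file rotated or removed while walking
    return sorted(sizes, reverse=True)[:count]


if __name__ == "__main__" and os.path.isdir(LOG_DIR):
    print(f"{LOG_DIR} is {log_usage():.0f}% full")
    for size, path in largest_files():
        print(f"{size / 1024:10.0f} KiB  {path}")
```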

[ Solved ] Help - Wonky behavior after power off and reboot.

Mobius71 replied to Mobius71's topic in General Support

I'm passing a graphics card and, I think, maybe a USB controller to the VM. The VM is set to start automatically. If I reboot into headless Unraid, the boot freezes after "loading bzroot....ok" and does not progress further. If I reboot into Unraid GUI mode, it actually boots. New diag file attached. tower-diagnostics-20230825-1436.zip -

So recently I had to power down the server because something was hung to the point where the GUI and my SSH session were very slow to respond. After it came back up, several things were wrong:

- I'm seeing a disk read error in syslog.
- Most of my Docker containers can't start because of a 403 not found error, apparently because they can't see the disk. Two of them claim to be started for some reason ¯\_(ツ)_/¯.
- The VMs page is empty.
- Array disk 1 reports as fine, but it also shows up under Unassigned Devices. If I cd to /mnt/disk1 and run ls -lharts, I get this: "/bin/ls: cannot open directory '.': Input/output error".

I immediately got worried because array disk 2 was already being emulated and I'm waiting for a replacement to show up.

At that point, I restarted in safe mode and started the array in maintenance mode to run xfs_repair on the suspected problem disks, and apparently all went fine. I could manually mount disk1 to a mount point I created and was able to pull the data off just to be safe. I restarted the server and it's all still borked.

Would it be best to do a new config at this point? I don't want to lose data, but I do have all mission-critical things backed up. I still don't want to have to recreate all my media if there's any way around it. My diag file is attached. Thanks in advance for the help! tower-diagnostics-20230825-1119.zip
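The `Input/output error` from `ls` is the EIO errno surfacing. Here is a minimal sketch that tries to list each array mount point and reports which ones fail, and with which errno. It assumes the array disks are mounted at `/mnt/disk1`, `/mnt/disk2`, and so on. `unreadable_dirs` is an illustrative name.

```python
import errno
import glob
import os


def unreadable_dirs(paths):
    """Try to list each directory; return {path: errno_name} for the ones that fail."""
    problems = {}
    for path in paths:
        try:
            os.listdir(path)
        except OSError as exc:
            # EIO is what ls reports as "Input/output error".
            problems[path] = errno.errorcode.get(exc.errno, str(exc.errno))
    return problems


if __name__ == "__main__":
    # /mnt/disk[0-9]* matches disk1, disk2, ... but not /mnt/disks.
    array_disks = sorted(glob.glob("/mnt/disk[0-9]*"))
    for path, code in unreadable_dirs(array_disks).items():
        print(f"{path}: {code}")
```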

-

Can we get iperf packages added to this by chance?

-

Sharing GPU with multiple VMs at the same time possible ?

Mobius71 replied to batesman73's topic in VM Engine (KVM)

Well, I should've checked the previous post before posting, as the video Maor posted references the link I posted. Sorry for the redundant info, everyone. -

Sharing GPU with multiple VMs at the same time possible ?

Mobius71 replied to batesman73's topic in VM Engine (KVM)

Found a good video demonstrating one GPU shared between two VMs, but it's done with Hyper-V. There might be some parallels with KVM on Unraid, but I'm not well versed enough to know. Still thought it was interesting. -

I didn't have to edit syslinux.cfg at all. I had my GPU in slot one and made sure it was isolated in its own IOMMU group. I didn't bind it to vfio. I followed the SpaceInvader One video on dumping the vBIOS from within Unraid using a script he had written. I set the card and vBIOS in the VM template and Bob's your uncle. I've been gaming on it for weeks now with no issues. We can compare settings if you want. Beforehand, I did encounter the same issue of the logs filling up, but since I did the above, it hasn't happened.
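Checking IOMMU isolation amounts to seeing which other devices share the GPU's group. Here is a minimal sketch, assuming the standard Linux sysfs layout: each group is a numbered directory under `/sys/kernel/iommu_groups/`, with a `devices/` subdirectory listing PCI addresses. Other functions of the card itself (its HDMI audio, for example) typically share the group and get passed through together. For that reason the helper ignores devices on the same bus and slot. The names are illustrative.

```python
import os

IOMMU_ROOT = "/sys/kernel/iommu_groups"


def iommu_groups(root=IOMMU_ROOT):
    """Return {group_number: [pci_address, ...]} read from sysfs."""
    groups = {}
    if not os.path.isdir(root):
        return groups  # IOMMU disabled or not supported
    for group in os.listdir(root):
        devices_dir = os.path.join(root, group, "devices")
        if group.isdigit() and os.path.isdir(devices_dir):
            groups[int(group)] = sorted(os.listdir(devices_dir))
    return groups


def foreign_group_members(groups, address):
    """Devices sharing `address`'s group, excluding other functions of the same card."""
    card = address.rsplit(".", 1)[0]  # e.g. '0000:09:00' from '0000:09:00.0'
    for members in groups.values():
        if address in members:
            return [m for m in members if m.rsplit(".", 1)[0] != card]
    raise KeyError(f"{address} not found in any IOMMU group")


if __name__ == "__main__":
    for number, members in sorted(iommu_groups().items()):
        print(number, " ".join(members))
```

For example, if `foreign_group_members(iommu_groups(), "0000:09:00.0")` returns an empty list, the card at that (example) address is alone in its group apart from its own functions.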

-

I got my issue sorted yesterday. @DaNoob, let me know if you're still having an issue or if you were able to resolve it.

-

Following, as I'm running into a similar, if not the exact same, issue. I have the same mobo as you, with a 3900X CPU and an MSI 2070 Super Ventus OC. If I manage to get this working in the primary slot, I'll let you know.

-

No luck with the BIOS update. Looks like I'll be upgrading my rig soon, unless someone has any other ideas.

-

Thanks for the reply. I've pretty much done everything but that at this point. I've also verified that the GPU is good by testing it in my neighbor's gaming rig. Fingers crossed the BIOS update does the trick.

-

Bump

-

So I recently got my hands on a 2070 Super graphics card and thought to myself it's time to spin up a gaming VM. I prepped the VM and got to the part where I shut down the server to install the graphics card so I could boot up and pass it through. When I try to boot, I get a beep code, but it's stuck at the Gigabyte splash screen. I can't press F2 to enter the BIOS or F10 for the boot menu. I tried different PCIe slots and still no dice. I tried a different card in the same PCIe slot with power removed from the 2070 Super, and Unraid boots. If the graphics card has any power supplied to it at all, it hangs at the Gigabyte splash screen.

I have a 650 W power supply. The motherboard is a Gigabyte GA-7PESH3. Could this be a power supply issue? I don't really have another system to test on, as my desktop is an HP mini. I'm also not sure whether there is a conflict between the mobo and the card at all. This is basically the same socket as the 1150 chipset. Last I checked, that shouldn't be an issue with this graphics card. I'm kinda stuck at this point. Please help.

-

I'm aware of a couple of remote transcoding projects for Plex where one instance of Plex is the master and the others work as slaves. Why not just get a couple of boxes that are good at transcoding and run your favorite distro on them as slaves? I had planned on doing something similar with a few cheap dual-CPU 1U servers I had lying around, but the WAF pretty much killed that project. And in the end, I don't transcode enough to warrant that setup anyway. Now, if Unraid itself could be clustered for replication and HA, that would be interesting. Following.

-

Memtest has been running for 24 hours now with no errors. So should I go back to 6.3.5, try to fix things there, and then update to 6.5? Or should I just jump to 6.5 with a fresh install and try to set everything back up? If I go straight to 6.5, what other files do I need to copy over to save my settings and Docker configs?