Posts: 802

Everything posted by IamSpartacus

-

Precautions before encrypting array disks with LUKS

IamSpartacus replied to Opawesome's topic in Security

Yes, if the remote end of my VPN is down I'd have to start the server manually, but that's extremely rare and I'm OK with that.

-

Precautions before encrypting array disks with LUKS

IamSpartacus replied to Opawesome's topic in Security

Was this directed at me?

-

Precautions before encrypting array disks with LUKS

IamSpartacus replied to Opawesome's topic in Security

To piggyback off of this, you can also auto-start your encrypted array without having the key stored on your USB. I edited my go file to auto-start my array using a key stored on an offsite network device, which my network reaches over a site-to-site VPN connection. There are other options as well, such as what's shown in this video.

-

unRAID 6 NerdPack - CLI tools (iftop, iotop, screen, kbd, etc.)

IamSpartacus replied to jonp's topic in Plugin Support

"I updated fio. Try again and let me know."

This worked, thank you!

-

unRAID 6 NerdPack - CLI tools (iftop, iotop, screen, kbd, etc.)

IamSpartacus replied to jonp's topic in Plugin Support

Would it be possible to add mergerfs to the NerdPack?

-

SMB & ZFS speeds I don't understand ...

IamSpartacus replied to MatzeHali's topic in General Support

Thanks for the response. I'm sure my issue is because Unraid is running in a VM. I'm probably SoL.

-

SMB & ZFS speeds I don't understand ...

IamSpartacus replied to MatzeHali's topic in General Support

Were you running those fio tests on Unraid or another system? I ask because I'm testing a scenario running Unraid in a VM on Proxmox, with an all-NVMe ZFS pool added as a single "drive" as cache in Unraid. However, I'm unable to run fio even after it's installed via NerdPack. I just get "illegal instruction" no matter what switches I use.

-

unRAID 6 NerdPack - CLI tools (iftop, iotop, screen, kbd, etc.)

IamSpartacus replied to jonp's topic in Plugin Support

Has anyone gotten fio to run successfully? I just get "illegal instruction" even with --disable-native set. I've tried this in both 6.9 beta 25 and 6.8.3 stable.

-
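An "illegal instruction" error generally means the binary executed a CPU instruction the (virtual) processor does not support. Whether that is what happened here is an assumption; the thread does not confirm it. As a minimal sketch for checking, the helpers below parse the `flags` line of `/proc/cpuinfo` text and report which of a given set of flags are absent. The function names and the example flag list are hypothetical; which flags a particular fio build needs is not stated in the posts.

```python
def parse_cpu_flags(cpuinfo_text):
    """Return the set of flags from the first 'flags' line of /proc/cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            return set(value.split())
    return set()  # no 'flags' line found (e.g. non-x86 layout)


def missing_flags(cpuinfo_text, wanted):
    """Return the wanted flags that the CPU does not advertise, sorted by name."""
    return sorted(set(wanted) - parse_cpu_flags(cpuinfo_text))


# Example use inside the VM (flag list is illustrative only):
#   with open("/proc/cpuinfo") as fh:
#       print(missing_flags(fh.read(), ["sse4_2", "avx", "avx2"]))
```

Running the same check on the hypervisor host and inside the VM would show whether the virtual CPU model hides flags that the host exposes.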

I'm seeing major SMB issues in 6.9 beta 25. Simply browsing my shares often takes 20-30 seconds for each subfolder to load in Windows Explorer. I'm seeing the same behavior on the 4 different Windows 10 1909 machines on my network that I've tested with. I'm also seeing the following error messages in my syslog as soon as I first access a share from Windows Explorer:

Aug 20 15:51:07 SPARTA smbd[35954]: sys_path_to_bdev() failed for path [.]!
Aug 20 15:51:07 SPARTA smbd[35954]: sys_path_to_bdev() failed for path [.]!
Aug 20 15:51:07 SPARTA smbd[35954]: sys_path_to_bdev() failed for path [.]!
Aug 20 15:51:07 SPARTA smbd[35954]: sys_path_to_bdev() failed for path [.]!
Aug 20 15:51:07 SPARTA smbd[35954]: sys_path_to_bdev() failed for path [.]!
Aug 20 15:51:07 SPARTA smbd[35954]: sys_path_to_bdev() failed for path [.]!
Aug 20 15:51:07 SPARTA smbd[35954]: sys_path_to_bdev() failed for path [.]!
Aug 20 15:51:07 SPARTA smbd[35954]: sys_path_to_bdev() failed for path [.]!
Aug 20 15:51:27 SPARTA smbd[36254]: sys_path_to_bdev() failed for path [.]!
Aug 20 15:51:27 SPARTA smbd[36254]: sys_path_to_bdev() failed for path [.]!
Aug 20 15:51:27 SPARTA smbd[36254]: sys_path_to_bdev() failed for path [.]!
Aug 20 15:51:27 SPARTA smbd[36254]: sys_path_to_bdev() failed for path [.]!
Aug 20 15:51:27 SPARTA smbd[36254]: sys_path_to_bdev() failed for path [.]!
Aug 20 15:51:27 SPARTA smbd[36254]: sys_path_to_bdev() failed for path [.]!
Aug 20 15:51:27 SPARTA smbd[36254]: sys_path_to_bdev() failed for path [.]!
Aug 20 15:51:27 SPARTA smbd[36254]: sys_path_to_bdev() failed for path [.]!

Diagnostics attached.

sparta-diagnostics-20200820-2147.zip
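Bursts of identical smbd errors like these are easier to compare when collapsed by timestamp and process ID. The following is a minimal Python sketch, assuming syslog lines in the format quoted in this post; `LINE_RE`, `summarize`, and `SAMPLE` are illustrative names and not part of Unraid or Samba.

```python
import re
from collections import Counter

# Matches lines shaped like the syslog excerpt in the post, e.g.
# "Aug 20 15:51:07 SPARTA smbd[35954]: sys_path_to_bdev() failed for path [.]!"
LINE_RE = re.compile(
    r"^(?P<ts>\w{3}\s+\d+\s[\d:]{8})\s(?P<host>\S+)\s"
    r"(?P<proc>[\w.-]+)\[(?P<pid>\d+)\]:\s(?P<msg>.*)$"
)


def summarize(lines):
    """Count identical (timestamp, process, pid, message) tuples."""
    counts = Counter()
    for line in lines:
        m = LINE_RE.match(line.strip())
        if m:  # lines that do not fit the assumed format are skipped
            counts[(m["ts"], m["proc"], int(m["pid"]), m["msg"])] += 1
    return counts


# Sample data: the two distinct lines from the post, repeated eight times each here.
SAMPLE = (
    ["Aug 20 15:51:07 SPARTA smbd[35954]: sys_path_to_bdev() failed for path [.]!"] * 8
    + ["Aug 20 15:51:27 SPARTA smbd[36254]: sys_path_to_bdev() failed for path [.]!"] * 8
)

if __name__ == "__main__":
    for (ts, proc, pid, msg), n in summarize(SAMPLE).items():
        print(f"{n}x {ts} {proc}[{pid}]: {msg}")
```

Grouping this way shows each burst coming from a single smbd process within one second, which may help when correlating the errors with individual share accesses.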

-

Does anyone know if NFS v4 is/will be supported in 6.9?

-

Command to delete an unassigned BTRFS pool?

IamSpartacus replied to IamSpartacus's topic in General Support

Fixed this by simply doing an erase on the disk via the preclear plugin.

-

Having trouble finding the command to delete a (non-cache) btrfs pool that was created using this method. The pool currently has a single device in it but I can't for the life of me find the command needed to blow the pool away completely. Trying to delete the partition from UD fails as well. Any ideas?

-

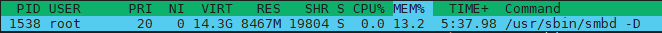

I'm consistently seeing the Samba service use a lot of memory and not release it. I have Samba shares from this server mounted on another Unraid server and do lots of file transfers (Radarr/Sonarr imports, tdarr conversions) between them. But even unmounting those shares does not release the memory used by Samba. Maybe someone can enlighten me on what could be causing this memory usage to remain even when no shares are in use.

athens-diagnostics-20200528-1444.zip

-

You probably have to set the "number of backups to keep" value to something other than 0 first.

-

Need to identify what is spinning up my parity disks

IamSpartacus replied to IamSpartacus's topic in General Support

Well then, there's my answer. I suspect Radarr is the culprit, as it's constantly upgrading movie qualities.

-

Need to identify what is spinning up my parity disks

IamSpartacus replied to IamSpartacus's topic in General Support

OK, so I see all the extra share cfg files. Most of those shares don't exist anymore, and I've confirmed none of those folders exist on any of my disks. The only shares I have left are the following:

Furthermore, while those shares were originally created with capital letters, they've all been converted to lower case. I guess the 'mv' command changes the directory case but does not change the share.cfg file; I'll have to fix that.

The only shares that are set to Use Cache = Yes (meaning they eventually write to the array) are 4k, media, and data. On every disk, those top-level folders are indeed lower case. I've also confirmed all my Docker templates reference the lower-case shares, and I've just done a test file transfer to each share: every transfer wound up on cache and not on any of the disks.

So if all the top-level folders on each disk match the current shares, I'm not sure what could be causing the disk spin-ups.

QUESTION: If, say, Radarr/Sonarr are upgrading the quality of a file that exists on the array, would that cause a parity write during the write to cache, since Sonarr/Radarr is technically deleting the previous file and replacing it with the new, better-quality version?

-
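The share check described in this thread (cfg files left over from deleted shares, or whose case no longer matches the renamed folder) can be sketched roughly as follows. This is only an illustration under stated assumptions: both function names are hypothetical, and the directory holding the per-share `.cfg` files and the directory holding the top-level share folders are passed in by the caller rather than assumed.

```python
from pathlib import Path


def classify_share_configs(cfg_names, dir_names):
    """Compare share config names against top-level folder names.

    Returns (case_mismatches, orphans):
      case_mismatches: (cfg_name, dir_name) pairs that differ only by case
      orphans: config names with no matching folder at all
    """
    exact = set(dir_names)
    by_lower = {d.lower(): d for d in dir_names}
    case_mismatches, orphans = [], []
    for name in sorted(cfg_names):
        if name in exact:
            continue  # config and folder agree exactly
        if name.lower() in by_lower:
            case_mismatches.append((name, by_lower[name.lower()]))
        else:
            orphans.append(name)
    return case_mismatches, orphans


def scan(cfg_dir, share_root):
    """Read names from disk; both paths are supplied by the caller."""
    cfg_names = [p.stem for p in Path(cfg_dir).glob("*.cfg")]
    dir_names = [p.name for p in Path(share_root).iterdir() if p.is_dir()]
    return classify_share_configs(cfg_names, dir_names)
```

For example, `classify_share_configs(["Media", "data", "OldShare"], ["media", "data", "4k"])` reports `Media` as a case mismatch with `media` and `OldShare` as having no folder.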

I'm looking for some help in identifying what is causing my parity drives to spin up repeatedly. Every single one of my shares uses the cache drive, and I only run the mover once per day. Yet my parity drives keep being spun up even though my disk spin-down is set for 1 hour of idle time. My data disks spin down and stay down unless the shares are being accessed, but I'm constantly seeing the parity drives up during the day. So my question is: what could be causing parity writes to the array when all my shares use cache and none write directly to the array?

unraid.zip

-

It does, I'm using it now and it works well.

-

Got it, sounds like that should work. How does one run the container with the headless option? And is there a shutdown option for once jobs are complete?

-

Unrelated issue: if I try to enable Real Time Synchronization and hit OK, the program freezes up and is unresponsive until I restart the container. There is nothing in the container logs when this happens.

-

Thank you. That seems to have fixed the issue. I don't know why the characters were an issue with the SMB option, but when the destination is set as a local mount point (via a UD SMB mount) it works without issue and identifies the files on both ends as identical, thus not creating any duplicates.

-

If I mount the destination SMB share via UD, how do I specify that in the Docker template? As local? Because if I still use remote, I'm not sure what's changing.

-

I'm doing a sync now. Will let you know what the result is. Destination is an SMB share, it's not mounted locally via UD.

-

Hmmmm. I guess I'll delete the files off destination and let them copy over with DirSyncPro and then see what happens upon the next analysis.

-

Try just creating a folder named Déjà Vu (2006) with a text file with the same name inside it. Or try this actual video file. And by the way, I'm using a local path for the source and SMB for the destination.