Duggie264

Members-

Posts

42 -

Joined

-

Last visited


Duggie264's Achievements

Rookie (2/14)

1

Reputation

-

[SOLVED] Parity Swap Procedure - Asking to Copy Again

Duggie264 replied to timethrow's topic in General Support

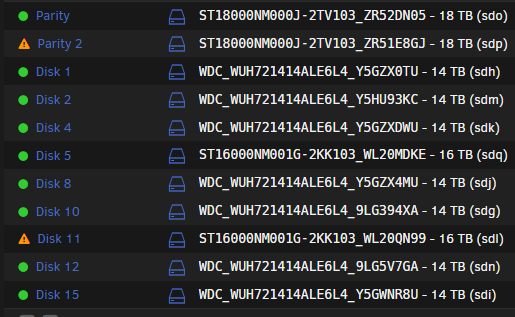

Thanks @JorgeB,

Just for completeness: I had a couple of disk failures that resulted in a dual parity swap procedure, which was successful. I then received some newer replacement HDDs that necessitated another dual parity swap procedure. The parity copy went smoothly but, prior to the disk rebuild, I accidentally clicked a drop-down on the main page. This meant that I would have to redo the parity copy (16-->18TB).

I then did a search and came across this thread (which should be linked from the Parity Swap procedure page!). Everything would have gone well, had I not missed the bit about dual parity and not using the "29" at the end of the cmds... so I now have this: and this

Disks 5 and 11 were the locations of the original missing/failed disks, which should have been rebuilt on the two old parity disks that you can see occupying their slots (MDKE and QN99).

However, as I incorrectly entered 29... I am guessing that:

Parity 1 - Valid
Parity 2 - Invalid (being rebuilt across disk set as you confirmed above)
Disk 5 (original Parity 1) - should be getting overwritten with original emulated Disk 5 data?
Disk 11 (original Parity 2) - should be getting overwritten with original emulated Disk 11 data?

So I suppose the real question is, what will the outcome of this be?

[SOLVED] Parity Swap Procedure - Asking to Copy Again

Duggie264 replied to timethrow's topic in General Support

So when starting the Array, would it try to rebuild P2 from the original P2 disk first, or would it just rebuild Parity 2 from across the disk set (thus resulting in the loss of data from the failed disk the old P2 is replacing, I assume)?

[SOLVED] Parity Swap Procedure - Asking to Copy Again

Duggie264 replied to timethrow's topic in General Support

@JorgeB, just out of curiosity, if you had dual parity, and left in the numbers "29" after each command, then proceeded to start the array, what is the likely outcome? Wish I was asking for a friend... 🤔🤨😒🙄😔

Awesome, thanks for the help and information! best get swapping 😎

-

Yeah I am positive - they were brand new drives and were clicking from the get go - one failed to even initialise, and by the time I realised both the other drives had mechanical issues, one had already successfully hounded the array, which subsequently died. Also happy with the parity swap procedure, just wasn’t sure how much more risk there would be swapping out a parity drive when there are already two failed data drives! cheers for your assistance bud, much appreciated!

-

Yeah two dead data drives.

-

Thanks @itimpi. So I already have dual parity; the issue now is I have dual (array) drive failures. I do have 2 16TB drives, but will I be able to swap out one of the parity drives when I already have two disks dead?

-

Duggie264 started following User shares missing - 6.9.2 and Disk Swap Query

-

BLUF: Can I replace a failed disk with a larger-than-parity disk (temporarily)?

Background: I purchased three disks; one failed on testing, the other two were installed, and subsequently one failed. The manufacturer, as part of the returns process, has refunded the entire purchase amount to PayPal for me, but I am now time limited and have to return all three disks. All my disks (array and dual parity) are 14TB. I have two brand new 16TB disks. My array now shows two disks missing (as expected) and is running "at risk".

Questions:

1. Will Unraid allow me to (temporarily) replace the missing 14TB drives with 16TB drives? (I appreciate how parity works, but do not know A) if the extra two TB on the array disks will cause a parity fail, or B) if they will just be unprotected, or C) if Unraid will even allow the process.) I have further 16TB drives on the way that will replace the parity drives in the event of B).

2. Am I correct in assuming that, as I am missing two disks, I will be unable to swap a 14TB parity drive out for a 16TB (i.e. the swap-disable process)?

-

Had this a couple of times; I was rebuilding so didn't really care, as I was due restarts anyway. Just had it whilst chasing what makes my Docker log fill up so quickly.

Specifically, I am running Microsoft Edge Version 97.0.1072.55 (Official build) (64-bit), and I opened the terminal from the dashboard. As soon as I entered the command

    co=$(docker inspect --format='{{.Name}}' $(docker ps -aq --no-trunc) | sed 's/^.\(.*\)/\1/' | sort); for c_name in $co; do c_size=$(docker inspect --format={{.ID}} $c_name | xargs -I @ sh -c 'ls -hl /var/lib/docker/containers/@/@-json.log' | awk '{print $5 }'); YE='\033[1;33m'; NC='\033[0m'; PI='\033[1;35m'; RE='\033[1;31m'; case "$c_size" in *"K"*) c_size=${YE}$c_size${NC};; *"M"*) p=${c_size%.*}; q=${p%M*}; r=${#q}; if [[ $r -lt 3 ]]; then c_size=${PI}$c_size${NC}; else c_size=${RE}$c_size${NC}; fi ;; esac; echo -e "$c_name $c_size"; done

I started getting the nginx hissy fit:

    Jan 10 10:18:44 TheNewdaleBeast nginx: 2022/01/10 10:18:44 [error] 3871#3871: nchan: Out of shared memory while allocating message of size 10016. Increase nchan_max_reserved_memory.
    Jan 10 10:18:44 TheNewdaleBeast nginx: 2022/01/10 10:18:44 [error] 3871#3871: *4554856 nchan: error publishing message (HTTP status code 500), client: unix:, server: , request: "POST /pub/update2?buffer_length=1 HTTP/1.1", host: "localhost"
    Jan 10 10:18:44 TheNewdaleBeast nginx: 2022/01/10 10:18:44 [error] 3871#3871: MEMSTORE:00: can't create shared message for channel /update2)

I have 256GB of memory and was at less than 11% utilisation, so that wasn't an obvious issue; the output from df -h shows no issues either.

    Filesystem      Size  Used Avail Use% Mounted on
    rootfs          126G  812M  126G   1% /
    tmpfs            32M  2.5M   30M   8% /run
    /dev/sda1        15G  617M   14G   5% /boot
    overlay         126G  812M  126G   1% /lib/firmware
    overlay         126G  812M  126G   1% /lib/modules
    devtmpfs        126G     0  126G   0% /dev
    tmpfs           126G     0  126G   0% /dev/shm
    cgroup_root     8.0M     0  8.0M   0% /sys/fs/cgroup
    tmpfs           128M   28M  101M  22% /var/log
    tmpfs           1.0M     0  1.0M   0% /mnt/disks
    tmpfs           1.0M     0  1.0M   0% /mnt/remotes
    /dev/md1         13T  6.4T  6.4T  51% /mnt/disk1
    /dev/md2         13T  9.5T  3.3T  75% /mnt/disk2
    /dev/md4         13T  767G   12T   6% /mnt/disk4
    /dev/md5        3.7T  3.0T  684G  82% /mnt/disk5
    /dev/md8         13T  9.6T  3.2T  76% /mnt/disk8
    /dev/md10        13T  9.1T  3.7T  72% /mnt/disk10
    /dev/md11        13T  8.9T  3.9T  70% /mnt/disk11
    /dev/md12        13T  7.0T  5.8T  55% /mnt/disk12
    /dev/md15        13T  5.7T  7.2T  45% /mnt/disk15
    /dev/sdq1       1.9T  542G  1.3T  30% /mnt/app-sys-cache
    /dev/sdh1       1.9T   17M  1.9T   1% /mnt/download-cache
    /dev/nvme0n1p1  932G  221G  711G  24% /mnt/vm-cache
    shfs            106T   60T   46T  57% /mnt/user0
    shfs            106T   60T   46T  57% /mnt/user
    /dev/loop2       35G   13G   21G  38% /var/lib/docker
    /dev/loop3      1.0G  4.2M  905M   1% /etc/libvirt
    tmpfs            26G     0   26G   0% /run/user/0

I then ran the following commands in order to restore connectivity (after the first restart I had no access):

    root@TheNewdaleBeast:~# /etc/rc.d/rc.nginx restart
    Checking configuration for correct syntax and then trying to open files referenced in configuration...
    nginx: the configuration file /etc/nginx/nginx.conf syntax is ok
    nginx: configuration file /etc/nginx/nginx.conf test is successful
    Shutdown Nginx gracefully...
    Found no running processes.
    Nginx is already running
    root@TheNewdaleBeast:~# /etc/rc.d/rc.nginx restart
    Nginx is not running
    root@TheNewdaleBeast:~# /etc/rc.d/rc.nginx start
    Starting Nginx server daemon...

and normal service was restored:

    Jan 10 10:22:40 TheNewdaleBeast root: Starting unraid-api v2.26.14
    Jan 10 10:22:40 TheNewdaleBeast root: Loading the "production" environment.
    Jan 10 10:22:41 TheNewdaleBeast root: Daemonizing process.
    Jan 10 10:22:41 TheNewdaleBeast root: Daemonized successfully!
    Jan 10 10:22:42 TheNewdaleBeast unraid-api[13364]: ✔️ UNRAID API started successfully!

Not sure if this is helpful, but to me it appeared that the issue was caused by (or coincidental with) use of the web terminal.
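For anyone trying to read the one-liner quoted in this post, here is a rough, more readable sketch of what it appears to do: list each container's JSON log file size and grade it by size. The function names (classify_log_size, report_container_logs) and the low/medium/high labels are made up for illustration and stand in for the yellow/pink/red colours of the original. It also assumes the default json-file logging driver and the /var/lib/docker/containers path used in the original command.

```shell
# Grade a human-readable size as printed by `ls -lh`, mirroring the one-liner:
# K => low; M with fewer than three integer digits => medium; M with three or
# more => high; anything else (bytes, G, T) is left ungraded, as in the original.
classify_log_size() {
    size=$1
    case "$size" in
        *K) echo low ;;
        *M)
            whole=${size%M}       # drop the unit, e.g. 1.5M -> 1.5
            whole=${whole%.*}     # drop any fraction, e.g. 1.5 -> 1
            if [ "${#whole}" -lt 3 ]; then echo medium; else echo high; fi
            ;;
        *) echo other ;;
    esac
}

# Print name, log size and grade for every container (running or stopped).
# Assumption: logs live at /var/lib/docker/containers/<id>/<id>-json.log.
report_container_logs() {
    for id in $(docker ps -aq --no-trunc); do
        name=$(docker inspect --format='{{.Name}}' "$id")
        name=${name#/}            # docker prefixes names with a slash
        log=/var/lib/docker/containers/$id/$id-json.log
        [ -f "$log" ] || continue
        size=$(ls -hl "$log" | awk '{print $5}')
        printf '%-40s %-8s %s\n' "$name" "$size" "$(classify_log_size "$size")"
    done
}
```

Running `report_container_logs | sort` from a root shell should give roughly the same listing as the one-liner, without the colour escape codes.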

-

Good news (Phew) rebooted and restarted Array and all is looking good so far!

-

Yeah I couldn't either - just wanted to ask first this time, rather than waiting until I am deeply mired in a rebooted server with no Array, no Diagnostics and all I have is a sheepish grin!

-

@rxnelson - Good point, and most likely why it didn't work for me! I had just moved my 1080 GTX from an i7 6700K based gaming rig to my Dual Xeon E5-2660 Unraid rig, and completely forgot that the Xeons don't have integrated graphics 🙄 However, I would be curious to know if it will work with an integrated or non-nvidia GPU? Oh, and apologies for the late reply - I am not getting notifications for some reason!

-

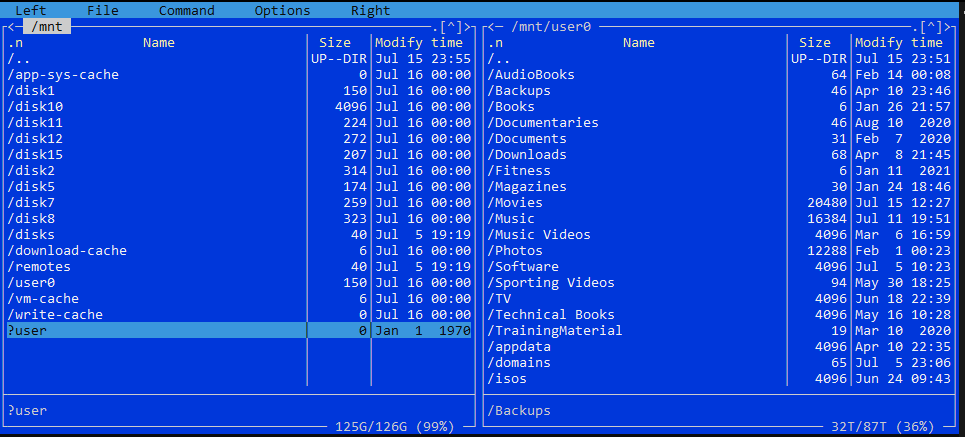

BLUF: User Shares Missing. In MC, mnt/user is missing, mnt/user0 is there, and there is a ?user in red. Server has not yet been restarted (only array stop/started).

Good evening,

I had 13x256GB, 1x1TB SSD and 1x1TB NVME in a giant cache pool. With multiple cache pools now a thing, I wanted to break down my cache and sort out my shares as follows:

Get Rid of old cache pool:
Disabled Docker and VM.
Set all my shares to Cache: Yes.
Called in Mover to move everything to the array.* **
Shut down the array.
Removed all drives from the cache pool, then deleted it.

* When mover finished there were still some appdata files in Cache and Disk 2, so I spun up MC via ssh and did the following:
1. mnt/disk2/appdata ---> mnt/disk10/
2. mnt/cache/appdata ---> mnt/disk10/

** When I exited MC and returned to the GUI window I noticed that all my user shares had disappeared (Ahhh F%$£!). I immediately downloaded diagnostics - the one ending 2305. I then spent a bit of time looking for similar errors - wasn't massively concerned as all data is still there.

Create New Cache Pools:
Created the 4 new cache pools.
Set the FS to XFS for the single drives and Auto for the actual pools.
Started the array back up and formatted everything to mount them again.

Still no User shares - immediately downloaded diagnostics again - the one ending 2355. On looking in MC I see this: no User on the left, but User0 on the right shows the Shares structure still there.

I fully accept that I may have done something wrong/stupid etc, but have three questions:
1. Can someone help? and if so
2. How do I recover my Shares and
3. What did I do that actually screwed it up (as I do like to learn, albeit painfully)

Many thanks in anticipation of your assistance!

thenewdalebeast-diagnostics-20210715-2305.zip
thenewdalebeast-diagnostics-20210715-2355.zip

-

Allegedly, from 465.xx onwards, nvidia drivers now support passthrough (GPU Passthrough), as passthrough was previously software-hobbled in the drivers to "force" upgrades/purchase of Tesla/Quadro. However, I am unable to pass through an EVGA 1080 GTX SC - 8GB successfully to a Win 10 VM - I can see it in Device Manager, but it is a code 43 regardless. Will attempt this script this week at some point if I get time.

-

Just came across this, nice work! If you are still maintaining/developing it, it would be great if there was some way to display the mags that reside in a folder structure like:

Mag Title/Year/IssueTitle.pdf

Thanks