zer0zer0

Posts: 38


Posts posted by zer0zer0

-


So, it won't rebuild from parity?

That data is only recoverable using testdisk or similar?

-

9 hours ago, JorgeB said:

That start sector is wrong; it should be 64. Something messed with your partition/disk. You can try running testdisk to see if it finds the old partition.

Hmm, all of the other disks also start at 8?

| Device | Start | End | Sectors | Size | Type |
|---|---|---|---|---|---|
| /dev/sdb1 | 8 | 2441609210 | 2441609203 | 9.1T | Linux filesystem |
| /dev/sdc1 | 8 | 2441609210 | 2441609203 | 9.1T | Linux filesystem |
| /dev/sdd1 | 8 | 2441609210 | 2441609203 | 9.1T | Linux filesystem |
| /dev/sde1 | 8 | 2441609210 | 2441609203 | 9.1T | Linux filesystem |
| /dev/sdf1 | 8 | 2441609210 | 2441609203 | 9.1T | Linux filesystem |

-

5 hours ago, JorgeB said:

Are you sure that this was ever formatted? It's kind of strange that the filesystem is set to auto. Assuming it's still sde, post the output of:

fdisk -l /dev/sde

It was definitely formatted with XFS, and then all of a sudden it just threw that error.

root@DARKSTOR:~# fdisk -l /dev/sde

Disk /dev/sde: 9.1 TiB, 10000831348736 bytes, 2441609216 sectors

Disk model: HUH721010AL4204

Units: sectors of 1 * 4096 = 4096 bytes

Sector size (logical/physical): 4096 bytes / 4096 bytes

I/O size (minimum/optimal): 4096 bytes / 4096 bytes

Disklabel type: gpt

Disk identifier: 14CEF3CF-1F72-48D2-8C97-83C61932AE02

| Device | Start | End | Sectors | Size | Type |
|---|---|---|---|---|---|
| /dev/sde1 | 8 | 2441609210 | 2441609203 | 9.1T | Linux filesystem |
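A quick sanity check on the "should be 64" point: fdisk reports the start in logical sectors, and this disk uses 4096-byte logical sectors. Assuming the expected value of 64 refers to 512-byte sectors (the common case), both work out to the same 32 KiB byte offset, which may be why the other disks listed above also start at 8. The helper name is just for illustration:

```shell
# Partition byte offset = start sector x logical sector size (in bytes).
start_offset_bytes() {
  echo $(( $1 * $2 ))
}

start_offset_bytes 8 4096   # this disk: 4096-byte logical sectors
start_offset_bytes 64 512   # a disk with 512-byte logical sectors
```

Both lines print 32768, i.e. 32 KiB.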

-

Same result, unfortunately:

Sorry, could not find valid secondary super block

-

12 hours ago, JorgeB said:

That won't help. Start the array in maintenance mode and post the output of:

xfs_repair -v /dev/md4p1

It’s going to take a while 😃

Why /dev/md4p1 and not /dev/sde?
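On the /dev/md4p1 question: roughly speaking, the md devices are Unraid's parity-protected view of each array disk, so repairing through /dev/md4p1 keeps parity in sync, while writing to /dev/sde directly would bypass parity and leave it out of date. A small sketch with hypothetical helper names, assuming the /dev/mdNp1 naming from the command quoted above (older Unraid releases used /dev/mdN). It only prints a read-only check and runs nothing:

```shell
# Hypothetical helpers: map an Unraid array disk number to its md partition
# device and print (not run) a read-only XFS check for it.
# Assumes the /dev/mdNp1 naming used above; older releases used /dev/mdN.
md_device() {
  printf '/dev/md%sp1\n' "$1"
}

dry_run_cmd() {
  # -n is xfs_repair's no-modify mode: it reports problems but changes nothing.
  printf 'xfs_repair -n %s\n' "$(md_device "$1")"
}

dry_run_cmd 4
```

For disk 4 this prints `xfs_repair -n /dev/md4p1`, which would then be run with the array started in maintenance mode.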

-

All of a sudden, one of my disks gave me the dreaded "Unmountable: no supported file system" error.

Disk 4 - HUH721010AL4204_7PH0NGHC (sde)

The Check XFS filesystem option is totally missing from the GUI for this disk, but it's there for all the other array disks. Any idea why?

Running a check from the CLI comes back with "could not find a valid secondary superblock".

Where do I go from here apart from just replacing the drive?

Diagnostics zip attached

-


As far as I can tell all of the drives are operating as expected and all connections are fine.

It might start out at ~28 MB/s, and then it will drop under 10 MB/s 🙃

I do get a weird result from a SMART test on the parity drive: "Background short Failed in segment --> 3"

But it reports as healthy at the same time?

Diagnostics file attached.

DiskSpeed tests are also good when benchmarking the drives.

But, as you can see here, the parity drive is only being written to really slowly.
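For a sense of scale, total sync time is roughly the drive size divided by the write speed. A rough sketch with a hypothetical helper name, assuming a 10 TB parity drive (the size is not stated in this post) and decimal megabytes:

```shell
# Rough parity-sync duration in whole hours:
# bytes / (MB/s * 1,000,000 bytes per MB) / 3600 seconds per hour.
# Shell integer division truncates, so results are rounded down.
sync_hours() {
  bytes=$1
  mb_per_s=$2
  echo $(( bytes / (mb_per_s * 1000000) / 3600 ))
}

sync_hours 10000000000000 28   # at the starting speed
sync_hours 10000000000000 10   # at the speed it drops to
```

At 28 MB/s that comes to about 99 hours, and at 10 MB/s about 277 hours, which is more than 11 days.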

-

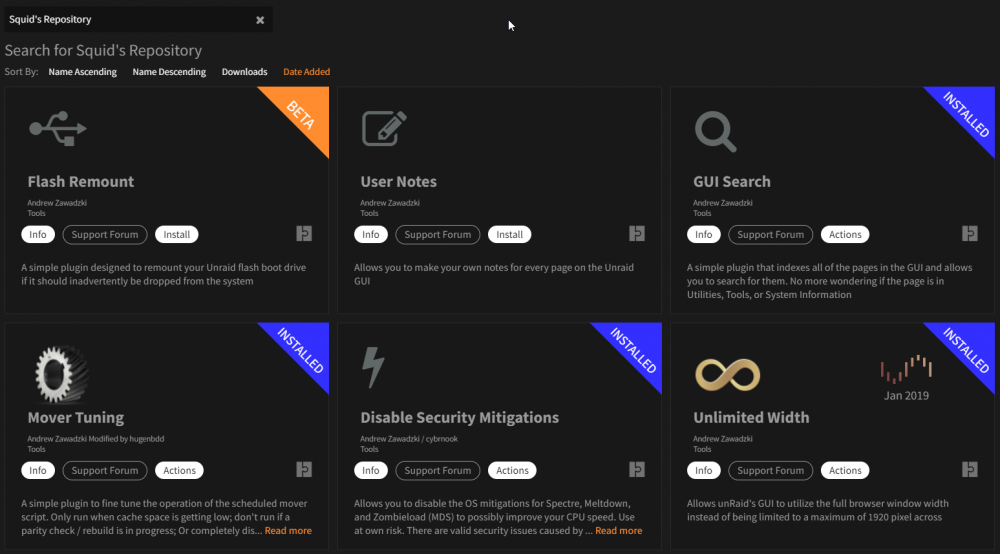

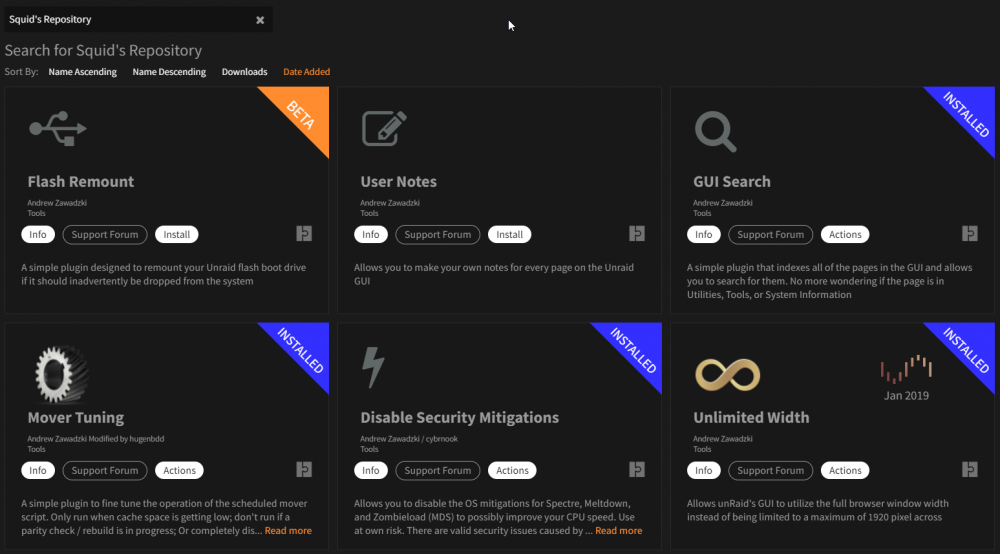

5 hours ago, Squid said:

It's in CA. The original won't show because it's now marked as incompatible, and the fork shows (GuildNet/Squid as author) when running 6.10+

I'm not sure what's up, but I'm on 6.10.0-rc8 and I'm not seeing it no matter what I search for in Community Applications (version 2022.05.15).

Searching for "docker folder" comes up with totally irrelevant results, and searching for your repo only shows your other awesome work!!

-

On 9/18/2021 at 4:14 PM, sergio.calheno said:

Hey,

First of all, thanks for the great docker!

However, I'm trying to add this whitelist, but for that I need python3 inside the docker. Any chance I can get a walkthrough on how to do it? I've tried searching for it, but I don't get a concrete answer on how to install python3 inside a docker container (or if it's even possible). I tried entering "sudo apt-get python3" in the console, but to no avail...

Any help would be greatly appreciated.

Thanks!

Hmm, apt installing python3 and then running those whitelist scripts works perfectly for me...

sudo apt update

sudo apt install python3
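Note that the quoted attempt, `sudo apt-get python3`, is missing the `install` subcommand. A minimal sketch of a guarded install with hypothetical function names, assuming a Debian/Ubuntu-based image with apt-get and that you are root inside the container (many images do not ship sudo at all):

```shell
# Succeeds (exit 0) when the named command is NOT found on PATH.
needs_install() {
  ! command -v "$1" >/dev/null 2>&1
}

# Install python3 only if it is missing.
# Assumes apt-get is available and the shell is running as root.
ensure_python3() {
  if needs_install python3; then
    apt-get update && apt-get install -y python3
  fi
}
```

This would be run from a shell inside the container, for example one opened with `docker exec -it <container> bash`, where `<container>` is your container's name.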

-


1 hour ago, ich777 said:

What is failing exactly?

Is the file in the download folder and Sonarr doesn't move it to the destination?

I made things as simple as possible and I'm just using /torrents as my path mapping, so I don't think that's the issue.

Yes, the file gets downloaded, I can see it sitting in the /torrents directory, and it starts seeding.

I've also run DockerSafeNewPerms a few times, but that hasn't changed anything.

I can get around it by using nzbToMedia scripts for the time being, but it's weird that I can't get it working natively.

-

Anyone else having issues getting torrents to complete with Sonarr?

I have paths mapped properly, and have tried qBittorrent, rTorrent, Deluge, etc., without any luck.

Sonarr sends torrents perfectly, and the torrent clients download them perfectly.

Then the completion just doesn't happen, and there are no logs.

-


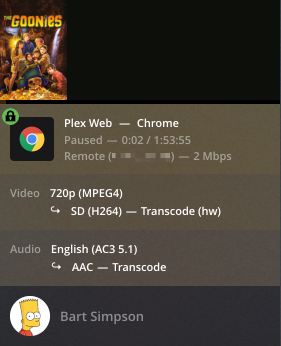

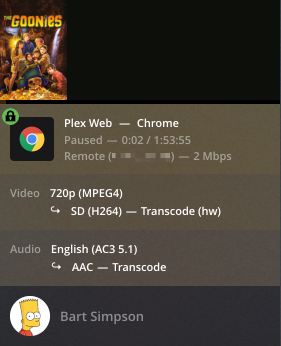

17 minutes ago, Spucoly2 said:

I've seen times when nvidia-smi doesn't show anything but it is actually transcoding.

You should be sure you are forcing a transcode and then check in your Plex dashboard for the (hw) text like this...

-

3 hours ago, ich777 said:

This was discussed a few times here in the thread; the solution was actually to switch to the plexinc container or the linuxserver container.

You can also hook @binhex with a short message or a post in his thread. I think he runs his container just fine with the plugin.

binhex plexpass container is working well for me

-

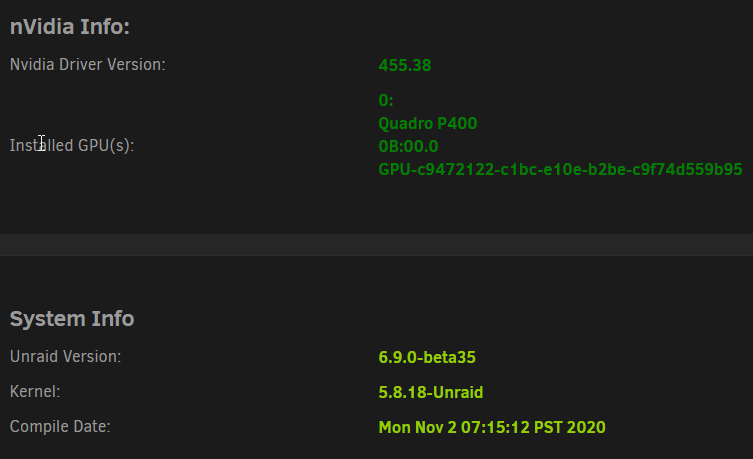

Hmm, it seems it might even be a Supermicro X10 and/or Xeon E5 v3-specific issue.

No issues on my E3-1265L v3 running on an ASRock Rack E3C224D2I.

@StevenD can run 6.9rc2 with hypervisor.cpuid.v0 = FALSE on his setup with ESXi 7, a Supermicro X9, and E5-2680 v2 CPUs.

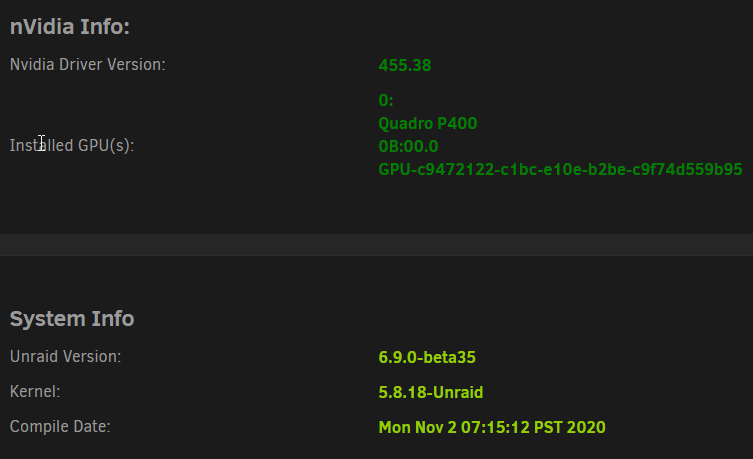

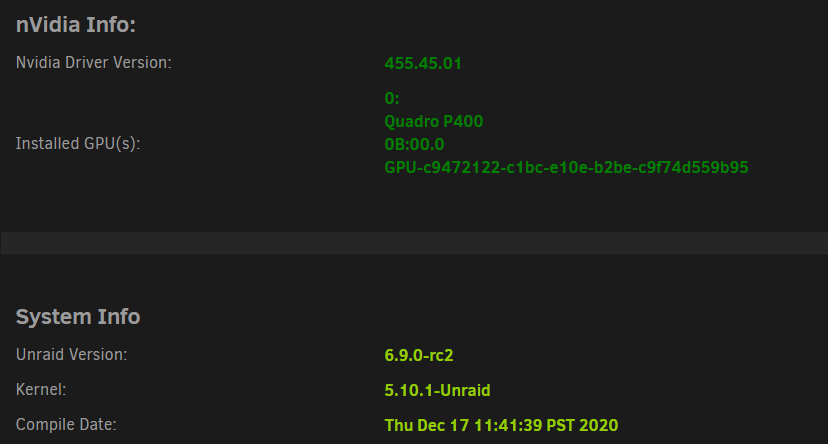

Thanks to @ich777, I can confirm that you can run the latest 6.9rc2 with a modified bzroot that has the 6.9.0-beta35 microcode

-

5 hours ago, ich777 said:

Sorry, I really want to help, but I don't know much about ESXi. Maybe @StevenD can help. Isn't there also a subforum here about virtualizing Unraid?

I talked to @StevenD and dropped back to UnRAID version 6.9.0-beta35 like he's running, and this issue is resolved

-


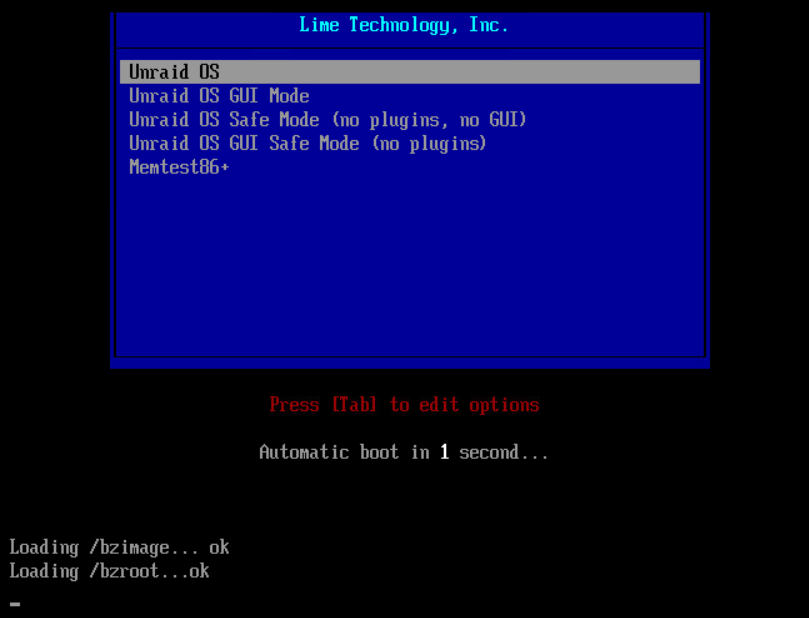

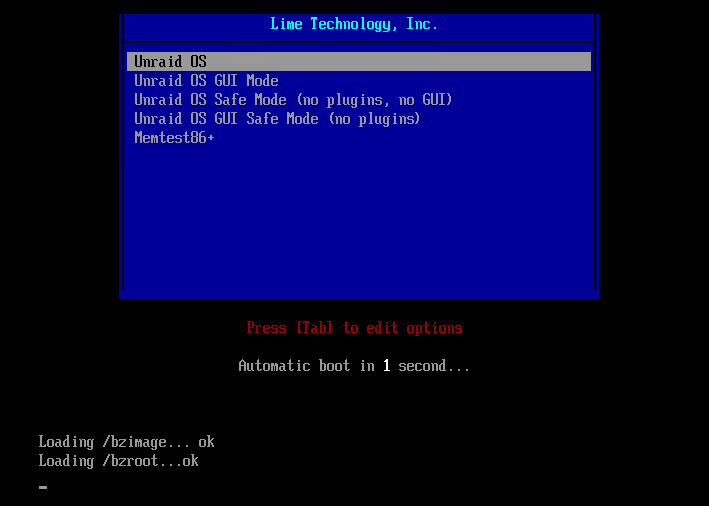

To follow up on my issues: I can boot if I choose just one CPU, so it's probably not an issue with this plugin specifically.

It is more likely to be UnRAID itself.

If I set one CPU and also set hypervisor.cpuid.v0 = FALSE in the vmx file, I can get it to boot, and the plugin appears to be working as expected.

@ich777 - if you can think of any logs etc. that would help get this resolved, let me know.
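Since it's easy to lose track of which advanced settings actually ended up in a VM's configuration, here is a small sketch for reading a key back out of a .vmx file. It assumes the usual `key = "value"` line format; the helper name and whatever file path you pass it are hypothetical, and it can be run anywhere you have a copy of the .vmx file:

```shell
# Print the value of a key from a VMware .vmx file, without the quotes.
# Key names are compared case-insensitively; if a key appears more than
# once, the last occurrence is printed. Prints nothing if the key is absent.
vmx_get() {
  awk -F '=' -v k="$2" '
    {
      name = $1
      gsub(/^[ \t]+|[ \t]+$/, "", name)
    }
    tolower(name) == tolower(k) {
      val = substr($0, index($0, "=") + 1)
      gsub(/^[ \t]+|[ \t]+$/, "", val)
      gsub(/"/, "", val)
      last = val
      found = 1
    }
    END { if (found) print last }
  ' "$1"
}
```

For example, `vmx_get unraid.vmx hypervisor.cpuid.v0` should print FALSE if that setting is present in the file.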

-

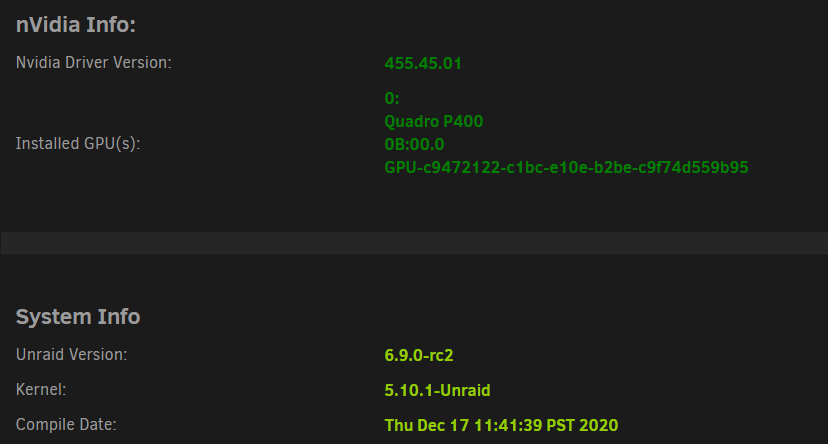

I have pretty much the exact same issue!

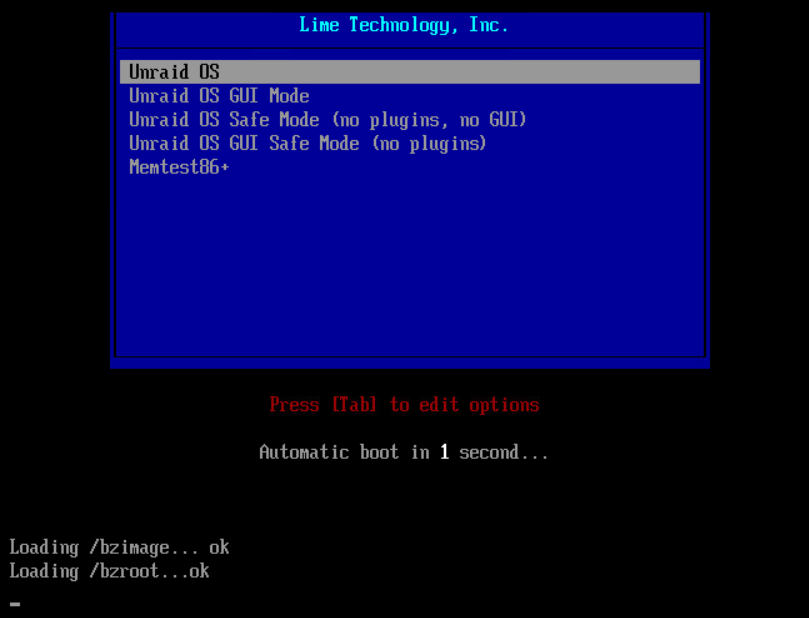

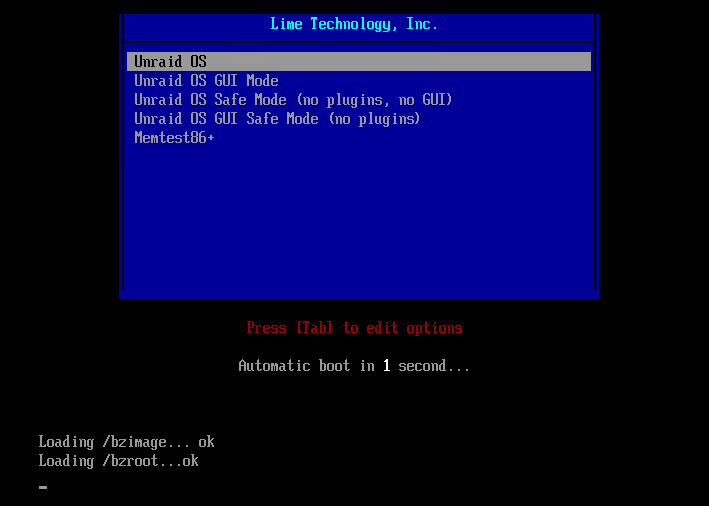

If I add hypervisor.cpuid.v0 = FALSE, booting freezes right after loading bzroot. If I set just one CPU, I can boot just fine.

I'm not sure it's Nvidia specific as I still get the error even without my Nvidia card passed through to the virtual machine.

And it won't boot even without any hardware at all passed through

- ESXi 6.7 with the latest patches
- UnRAID 6.9.0-rc2
- Nvidia Quadro P400

A plain Ubuntu 20.10 instance with hypervisor.cpuid.v0 = FALSE set works as expected. No problems at all.

Mine was also working great with linuxserver.io nvidia builds.

So it’s definitely not a hardware or esxi issue.

-

On 1/6/2021 at 6:17 AM, StevenD said:

I'm running unRAID virtualized under ESXi 7 with an RTX 4000, using @ich777's plugin. I don't recall doing anything special to get it to work. I do have these settings:

pciHole.dynStart 3072

hypervisor.cpuid.v0 FALSE

but I don't recall specifically setting them.

It's weird that yours is working and mine isn't.

I also just tested that it works as expected with a plain Ubuntu 20.10 instance with hypervisor.cpuid.v0 = FALSE set. No problems at all.

Mine was working great with linuxserver.io nvidia builds.

So it’s definitely not a hardware or esxi issue.

I have the following setup:

- ESXi 6.7 with the latest patches
- UnRAID 6.9.0-rc2
- Nvidia Quadro P400

Passing through both the nvidia card and audio device.

Other passed-through devices, like a SAS HBA and NVMe drives, work perfectly.

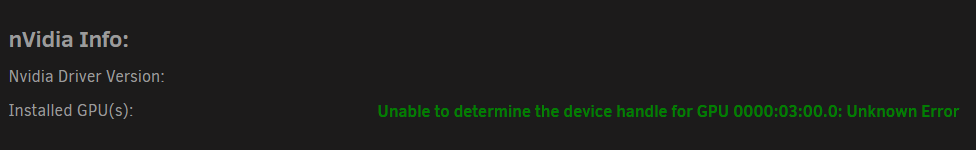

Without any flags in the vmx file, I can boot the UnRAID virtual machine and can see the Nvidia card:

root@XXXX:~# lspci | grep NV

03:00.0 VGA compatible controller: NVIDIA Corporation GP107GL [Quadro P400] (rev a1)

03:00.1 Audio device: NVIDIA Corporation GP107GL High Definition Audio Controller (rev a1)

But anything else has errors like:

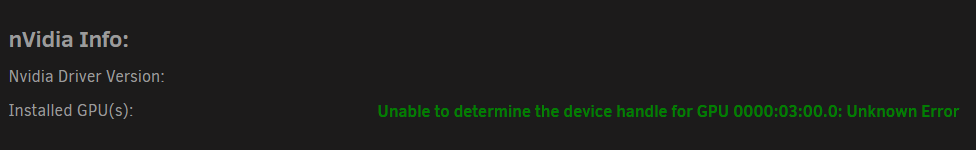

root@XXXX:~# nvidia-smi

Unable to determine the device handle for GPU 0000:03:00.0: Unknown Error

The Nvidia Driver Settings page gives me this error.

If I add the hypervisor.cpuid.v0 = FALSE variable to the vmx file it freezes after bzroot and won't boot

-

Just wanted to let you know that the ESXi logo is a bit messed up

-

14 hours ago, ich777 said:

Are you using 6.8.3 or 6.9.0RC2?

I know very little about ESXi 7, so I really can't help here.

Maybe this can help: Click

I tried all of the different advanced variables without any luck, so I gave up and went with a bare-metal UnRAID with a nested ESXi instead.

-


Anyone have any luck getting this working with an UnRAID host running on ESXi 7 with passthrough?

Mine either freezes at boot time, or, if I change some advanced variables around, I can get it to boot but get a message on the plugin page saying "unable to determine the device handle".

I have tried the normal advanced settings you need for ESXi and Nvidia passthrough, like setting hypervisor.cpuid.v0 = "FALSE" and pciHole.start = "2048", but I'm not having much luck so far.

-

[Support] devzwf - Proxmox Backup Server Dockerfiles (in Docker Containers)

I'm excited to see this container, but it doesn't want to come up on my server

It just gets stuck at:

12/11/2023 11:31:59 AM API: Starting...

12/11/2023 11:32:00 AM PROXY: Starting...