guitarlp

Members

Posts: 301


Everything posted by guitarlp

-

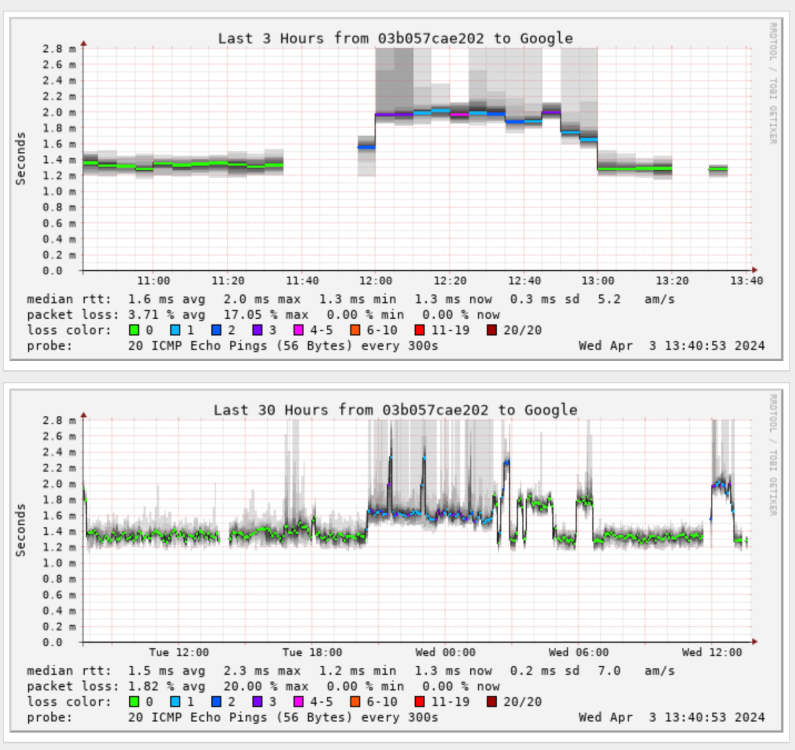

I moved my smokeping Docker container from the bridge network to my custom br0 network. However, once I did that, all of my targets reached over IPv6 started getting packet loss. Here's an example (anything that isn't green is packet loss while the container was on the br0 network).

When I run ping from within that container, I always get packet loss when forcing IPv6. I don't get any packet loss on IPv4:

    80c35650254d:/config# ping -4 -c 5 google.com
    PING google.com (142.251.40.46): 56 data bytes
    64 bytes from 142.251.40.46: seq=0 ttl=118 time=1.429 ms
    64 bytes from 142.251.40.46: seq=1 ttl=118 time=1.337 ms
    64 bytes from 142.251.40.46: seq=2 ttl=118 time=1.262 ms
    64 bytes from 142.251.40.46: seq=3 ttl=118 time=1.401 ms
    64 bytes from 142.251.40.46: seq=4 ttl=118 time=1.400 ms
    --- google.com ping statistics ---
    5 packets transmitted, 5 packets received, 0% packet loss
    round-trip min/avg/max = 1.262/1.365/1.429 ms
    80c35650254d:/config# ping -6 -c 5 google.com
    PING google.com (2607:f8b0:4007:819::200e): 56 data bytes
    64 bytes from 2607:f8b0:4007:819::200e: seq=0 ttl=117 time=1.918 ms
    64 bytes from 2607:f8b0:4007:819::200e: seq=2 ttl=117 time=1.812 ms
    64 bytes from 2607:f8b0:4007:819::200e: seq=3 ttl=117 time=1.952 ms
    64 bytes from 2607:f8b0:4007:819::200e: seq=4 ttl=117 time=1.884 ms
    --- google.com ping statistics ---
    5 packets transmitted, 4 packets received, 20% packet loss
    round-trip min/avg/max = 1.812/1.891/1.952 ms

I monitor sites like google.com using smokeping, but I also monitor IP addresses like Quad9's DNS servers. With IP addresses, I'm not seeing any packet loss when testing the IPv4 address 9.9.9.9, but I am seeing packet loss when I test Quad9's IPv6 DNS servers.

So my issue is that every outbound connection to an IPv6 address gets some packet loss while the container is on my custom br0 network. Any ideas on what I should look into? I'm on the latest unRAID 6.12.9. I'm using pfSense as my gateway, and IPv6 is handled via SLAAC.
Here are my interface settings: Here is my routing table: Here are my docker settings:
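To compare the `-4` and `-6` runs more systematically than by eye (for example, over many repeated runs inside the container), the BusyBox-style output above can be summarized with a small parser. This is a minimal sketch, assuming the output format shown in the post (a summary line `N packets transmitted, M packets received` and `seq=` numbers starting at 0); `loss_report` is a hypothetical helper name, and it only measures the loss, it does not explain it.

```python
import re

# BusyBox-style summary line, e.g.
# "5 packets transmitted, 4 packets received, 20% packet loss"
SUMMARY_RE = re.compile(r"(\d+) packets transmitted, (\d+) packets received")
# Per-reply lines, e.g. "64 bytes from 2607:...: seq=3 ttl=117 time=1.952 ms"
SEQ_RE = re.compile(r"\bseq=(\d+)")


def loss_report(ping_output: str) -> tuple[float, list[int]]:
    """Return (loss percentage, sequence numbers that never got a reply).

    Assumes sequence numbers start at 0, as in the BusyBox output above.
    """
    match = SUMMARY_RE.search(ping_output)
    if match is None:
        raise ValueError("no ping summary line found")
    sent, received = int(match.group(1)), int(match.group(2))
    loss = 100.0 * (sent - received) / sent if sent else 0.0
    # Collect every seq number that produced a reply line.
    answered = {int(seq) for seq in SEQ_RE.findall(ping_output)}
    missing = [seq for seq in range(sent) if seq not in answered]
    return loss, missing


if __name__ == "__main__":
    sample_v6 = """\
64 bytes from 2607:f8b0:4007:819::200e: seq=0 ttl=117 time=1.918 ms
64 bytes from 2607:f8b0:4007:819::200e: seq=2 ttl=117 time=1.812 ms
64 bytes from 2607:f8b0:4007:819::200e: seq=3 ttl=117 time=1.952 ms
64 bytes from 2607:f8b0:4007:819::200e: seq=4 ttl=117 time=1.884 ms
5 packets transmitted, 4 packets received, 20% packet loss
"""
    print(loss_report(sample_v6))  # (20.0, [1])
```

Listing the missing sequence numbers (here `seq=1`) makes it easier to see whether IPv6 drops are scattered or clustered when the same check is repeated many times.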

-

Why do some containers using a custom br0 network show port mappings, and others do not? I understand that when you use a custom network, the port mappings no longer matter. But I'm confused about why some containers in the unRAID GUI show port mappings and others do not, on the same br0 network. For the containers that don't list them, is there a way to get them to show up so that things are consistent in the GUI? See the attached screenshot. The `swag` and `Plex-Media-Server` Docker containers use my br0 network and have port mappings defined, but `homeasistant` has no port mappings showing.

-

Large copy/write on btrfs cache pool locking up server temporarily

guitarlp replied to aptalca's topic in General Support

This post for example. -


Thank you for the response. I understand that part, though. I default to encrypting everything, but I'm wondering whether an encrypted XFS drive will fix this, or whether I ultimately need an unencrypted XFS disk. Normally encryption doesn't impact perceived performance... but normally raid1 doesn't cause huge iowaits that freeze Docker containers :). -


This is an old thread (apologies), but this still doesn't appear to be fixed on the latest `6.11.5`. When I switch from btrfs raid1 to a non-RAID XFS disk, can I do that encrypted, or does it have to be an unencrypted XFS disk? I read something in this thread about encryption possibly contributing to this issue. -

Is the Dynamix SSD Trim plugin still required on pools that use BTRFS with encryption? The plugin mentions: But I have read that the `discard=async` BTRFS option included as of unRAID 6.9 doesn't work on encrypted drives.

-

Would it be possible to exclude pending Docker updates from creating warnings? I run the CA Auto Update Applications app to update Docker apps every day. However, if Fix Common Problems runs before an update is installed, it creates warnings, which give me a small heart attack when I receive them via Pushover notifications. I can ignore each notification, but that's per Docker app (and only after a warning fires). I have 27 Docker containers right now, and today I was able to ignore 4 of them, but that leaves 23 apps that may cause warnings in the future, and every new Docker container I add is another chance for a warning to appear.

-

Is this container still up to date and okay to use? It failed to install a couple of times, but now that it has installed, unRAID is showing the version as "not available".

-

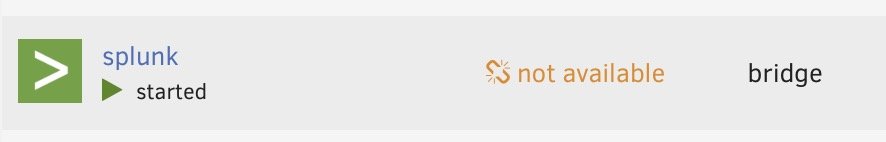

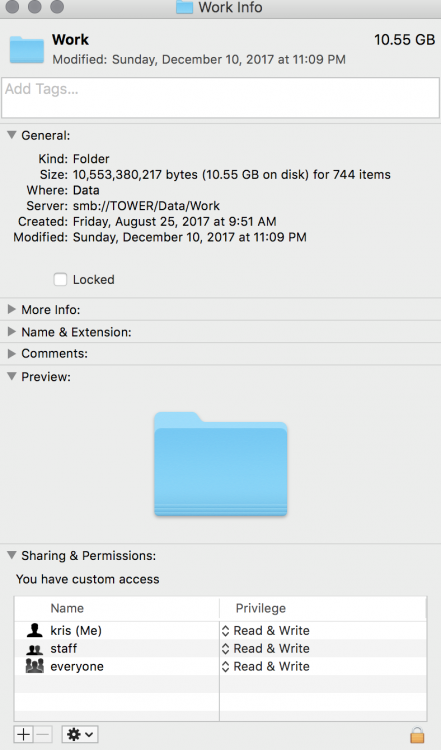

I'm running unRAID 6.4.0, but this also happened on 6.3.5. I'm on macOS 10.13.2. My Samba shares are exported as private, so only certain users can read and write to them. I've run the New Permissions script on all of my shares.

When I connect to an unRAID share over Samba (smb://TOWER/share_name), I can connect fine. I can see all my shares and connect to each of them. However, once I'm connected to a share, all of the folders within that share have the red permission icon. If I try to open a folder, I'm presented with the following message:

If, within unRAID, I enable `Enhanced OS X Interoperability` for that share, the issue is fixed, but I don't want to enable this on all my shares. The reason is that when this feature is enabled, macOS writes non-standard permissions to the shares. Instead of a new folder getting 777 permissions, as it does when writing from one of my FreeBSD machines, the permissions may be 755. Files end up as 644. That's a problem for my workflow, because other users on my network then can't rename or delete these files since they don't have permission to do so. I'd be okay enabling that feature if I could force 777 and 666 permissions on my shares, but even though I've played around with the `Samba extra configuration` settings, I haven't been able to force the permissions to specific values.

So the reason for this post: why is it that, when I don't have `Enhanced OS X Interoperability` enabled for a share, my Mac can see the share, but I cannot open any folders within it? The screenshots above show what I see on my Mac for a share without the Enhanced OS X Interoperability feature enabled, and what I see when that feature is enabled. Both folders, on unRAID, have 777 permissions with nobody:users as the owners.
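For the permission-forcing part, the smb.conf documentation describes a simple rule for new files: the computed mode is ANDed with `create mask` and then ORed with `force create mode` (directories use `directory mask` and `force directory mode`). The sketch below only works through that arithmetic for the 644/755 modes mentioned above; the mask and force values are hypothetical examples, and the result may not match what actually lands on disk if ACL handling or other VFS modules (such as the ones Enhanced OS X Interoperability presumably adds) adjust permissions afterward.

```python
# Samba's documented mask/force rule for new files and directories:
#   new_mode = (computed_mode & mask) | force_mode
# where mask/force are "create mask"/"force create mode" for files and
# "directory mask"/"force directory mode" for directories.


def effective_mode(requested: int, mask: int, force: int) -> int:
    """Return the mode produced by the mask/force rule above."""
    return (requested & mask) | force


if __name__ == "__main__":
    # Modes the Mac was observed to create (from the post), with hypothetical settings.
    cases = [
        ("file, no force", 0o644, 0o777, 0o000),
        ("file, force create mode = 0666", 0o644, 0o777, 0o666),
        ("dir, force directory mode = 0777", 0o755, 0o777, 0o777),
    ]
    for label, requested, mask, force in cases:
        print(f"{label}: {oct(effective_mode(requested, mask, force))}")
```

Because the force value is ORed in last, a `force create mode` of `0666` would, under this rule alone, turn the Mac's 644 files into 666 regardless of what the client asked for; note that a restrictive mask (for example `0744`) would strip the group/other write bits first.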

-

No problem. I had the same issue and banged my head against it for about an hour. Then I re-read the instructions (carefully this time), and once I removed that folder, things worked for me as well.

-

Remove the new syslinux folder from 5.0.4. You should only be copying over the bzimage and bzroot files (along with the readme if you want).

-

I'd back up the flash drive, re-format it, and flash the latest rc16c back onto the drive. If that doesn't work, then it doesn't sound like an unRAID issue (it would be a hardware issue). If it does work, you can try copying all your settings back to the flash drive and see if it still works at that point.

-

Yep, I did this yesterday to test if the ESXi layer was causing issues with my unRAID shares. Booted into the BIOS and changed the flash boot device from ESXi to unRAID, booted, and everything was up and running like normal. Once finished, went back to the BIOS, switched back to the ESXi flash device, and booted back into ESXi. unRAID came back online like normal again. That's one of the big pluses for ESXi. If you have a problem with it, you can always boot bare metal into unRAID to access that data.

-

I can't figure it out. So far I have:

- Repaired permissions
- Cleared Spotlight data and forced a refresh
- Cleared Keychain of all logins related to this server via AFP
- Deleted Finder preferences
- Uninstalled TotalFinder
- Uninstalled Alfred
- Uninstalled Pacifist
- Uninstalled OSXfuse
- Uninstalled BlueHarvest
- Uninstalled NFSManager
- Rebooted and tested after every change to try and find the culprit

Unless anyone else has any ideas, I'm just going to turn off AFP for now since it's a headache (and always has been). I would have ideally liked to have unRAID manage my TM backups, but I'll just let napp-it manage that for now. For some reason my machine has something on it that forces a complete scan of the unRAID server when it tries to mount a share (only when mounting with AFP), and I'm out of ideas on what it could be at this point.

-

Did a bunch more testing, including booting into unRAID directly instead of through ESXi, and I've narrowed the issue down to my MacBook. If I connect to the share from another MacBook, only the parity disk and disk1 spin up. On my Mac, however, everything spins up. I tried disabling TotalFinder, as well as telling Spotlight not to search that network location, but I'm still running into this issue. I guess it's time to uninstall each potential problem program one by one until I find the culprit.

-

That would tell me what files/folders are being accessed or modified to help me troubleshoot? I don't have unMenu installed because I like to keep unRAID stock and use separate Linux machines for all the app/front end stuff. I can install it to help troubleshoot, but I'm wondering how inotify would help me out.

-

I'm at a loss as to why my machine seems to be spinning up all the disks during each TM backup. I've tried rebooting unRAID, as well as my MacBook, but it didn't make a difference. I have TM pointing at a single disk (a disk share, not a user share), and I'm only using AFP on that disk. Yet every time I run a Time Machine backup, all the disks spin up one by one, and Time Machine doesn't start the backup until all of the disks have spun up.

I'm running rc 16c, and it's completely stock. I was running 4.7 previously, but I wiped my flash drive and reconfigured all the settings from scratch on rc 16c so that I didn't have any leftover configuration or garbage from my old unRAID. I checked the syslog, and all I see is this:

    Jul 28 01:06:24 NAS emhttp: Spinning down all drives...
    Jul 28 01:06:24 NAS kernel: mdcmd (94): spindown 0
    Jul 28 01:06:25 NAS kernel: mdcmd (95): spindown 1
    Jul 28 01:06:25 NAS kernel: mdcmd (96): spindown 2
    Jul 28 01:06:26 NAS kernel: mdcmd (97): spindown 3
    Jul 28 01:06:26 NAS kernel: mdcmd (98): spindown 4
    Jul 28 01:06:26 NAS kernel: mdcmd (99): spindown 5
    Jul 28 01:06:27 NAS kernel: mdcmd (100): spindown 6
    Jul 28 01:06:27 NAS kernel: mdcmd (101): spindown 7
    Jul 28 01:06:28 NAS kernel: mdcmd (102): spindown 8
    Jul 28 01:06:28 NAS kernel: mdcmd (103): spindown 9
    Jul 28 01:06:28 NAS kernel: mdcmd (104): spindown 10
    Jul 28 01:06:29 NAS kernel: mdcmd (105): spindown 11
    Jul 28 01:06:29 NAS kernel: mdcmd (106): spindown 12
    Jul 28 01:06:30 NAS emhttp: shcmd (112): /usr/sbin/hdparm -y /dev/sde &> /dev/null

That's me manually spinning down all the drives. Once that completes, I tell Time Machine to make a backup and all the disks start to spin up, but nothing posts to the log after that point. Any other thoughts on what could be the problem?
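To check exactly which array slots received a spindown command, and when, the syslog excerpt above can be parsed instead of read by eye. This is a minimal sketch, assuming the `mdcmd (N): <command> <slot>` kernel line format shown in the post; the command word is captured generically, so other commands are matched only if they use the same shape (an assumption). Since the post notes that nothing is logged when the disks spin back up, this can only confirm the starting state before a Time Machine run. `mdcmd_events` and `slots_for` are hypothetical helper names.

```python
import re

# Matches kernel lines like "Jul 28 01:06:25 NAS kernel: mdcmd (95): spindown 1".
# Works on one-entry-per-line logs and on logs flattened onto a single line.
MDCMD_RE = re.compile(
    r"(\w{3} +\d{1,2} \d{2}:\d{2}:\d{2}) \S+ kernel: mdcmd \(\d+\): (\w+) (\d+)"
)


def mdcmd_events(log_text: str) -> list[tuple[str, str, int]]:
    """Return (timestamp, command, slot) for each mdcmd kernel line."""
    return [(ts, cmd, int(slot)) for ts, cmd, slot in MDCMD_RE.findall(log_text)]


def slots_for(log_text: str, command: str) -> list[int]:
    """Return the sorted, de-duplicated slots that received `command`."""
    return sorted({slot for _, cmd, slot in mdcmd_events(log_text) if cmd == command})


if __name__ == "__main__":
    log = """\
Jul 28 01:06:24 NAS emhttp: Spinning down all drives...
Jul 28 01:06:24 NAS kernel: mdcmd (94): spindown 0
Jul 28 01:06:25 NAS kernel: mdcmd (95): spindown 1
Jul 28 01:06:30 NAS emhttp: shcmd (112): /usr/sbin/hdparm -y /dev/sde &> /dev/null
"""
    down = slots_for(log, "spindown")
    print(down)  # [0, 1]
    # Slots expected to be spun down but not seen in this excerpt (0-12 in the post).
    print(sorted(set(range(13)) - set(down)))
```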

-

Spin up disks on a user share at a certain day/time via cron?

guitarlp replied to guitarlp's topic in User Customizations

Thanks for throwing out that idea. I did some testing, and if I disable the cache disk, the disks do spin up when NFS shares are accessed from a remote mount. SMB lets me use the cache drive and still have the disks spin up, but it looks like unRAID requires NFS to go directly to disk and skip the cache drive to properly spin up the drives. -


I didn't think about that. I do use a cache drive and the share in question was set to use the cache. I'll do some testing today without the cache drive to see if that gets things spinning up properly. -


It doesn't. At least, it doesn't spin up the disks when applications on my Ubuntu server try to access a mounted NFS share, or when ESXi tries to copy data to an NFS share. If those shares are on unRAID and the disks for those shares are spun down, nothing gets copied. -

I'm wondering if someone has created a script that lets me spin up the disks for a specific user share at certain days/times. The reason is that I'd like to use unRAID as a backup location for my VMs in ESXi (using the ghettoVCB script). ESXi supports NFS shares, but NFS shares don't wake up unRAID disks. My ESXi backups run at a set time on certain days of the week via cron, so I figure I can spin up the unRAID disks for that NFS share a few minutes before ESXi attempts to write to it. This way I can use unRAID for all my ESXi VM backups while still letting all my disks stay spun down as much as possible.
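The timing half of this idea (wake the disks a few minutes before the ESXi backup writes) boils down to shifting a cron schedule earlier, which gets fiddly when the shift crosses midnight. This is a minimal sketch, assuming the backup job uses fixed minute/hour values, `*` for day of month and month, and a day-of-week list in cron's 0-6 numbering (0 = Sunday), with the ESXi host and unRAID clocks in the same time zone. `spinup_schedule` is a hypothetical helper, and the command that actually wakes the disks is deliberately left out.

```python
def spinup_schedule(minute: int, hour: int, weekdays: list[int], lead_minutes: int) -> str:
    """Return cron time fields that fire `lead_minutes` before a backup job.

    `weekdays` uses cron's 0-6 numbering (0 = Sunday); day of month and month
    are assumed to be "*" in the backup job.
    """
    if lead_minutes < 0:
        raise ValueError("lead_minutes must be non-negative")
    # Minutes since midnight on the backup day, shifted earlier by the lead time.
    total = hour * 60 + minute - lead_minutes
    # Python's divmod floors, so crossing midnight yields day_shift = -1
    # (or lower for leads longer than a day) and a non-negative remainder.
    day_shift, total = divmod(total, 24 * 60)
    new_hour, new_minute = divmod(total, 60)
    new_days = sorted({(day + day_shift) % 7 for day in weekdays})
    return f"{new_minute} {new_hour} * * {','.join(str(d) for d in new_days)}"


if __name__ == "__main__":
    print(spinup_schedule(30, 2, [1, 3, 5], 5))  # 25 2 * * 1,3,5
    print(spinup_schedule(3, 0, [1], 5))         # 58 23 * * 0
```

For example, a backup scheduled at `30 2 * * 1,3,5` with a 5-minute lead gives `25 2 * * 1,3,5`, while a backup at `3 0 * * 1` gives `58 23 * * 0`, i.e. the spin-up job has to run on Sunday evening rather than Monday.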

-

Interesting thought (that unRAID may spin up each disk until it finds the Time Machine share). I decided to test it by swapping the positions of disk14 and disk2. However, after doing so and spinning down all of the disks, unRAID spun up all disks, 1-14, in sequential order when I told Time Machine to create a backup.

Joe L. wrote:

> If it is pointing to a specific disk share, and not using a "user share" then the mac is scanning those disks for some reason.

How so? If AFP is disabled on all user shares and only enabled on one disk share, how could my Mac be accessing those disks and causing them to spin up? My Mac would query the server, see the one disk share, and then connect to that share, right? My Mac sees no other disk or user shares over AFP, so it wouldn't even know there are other disks to spin up.

Edit: I may be wrong here, but it seems like my Mac is asking unRAID for all of the shares available over AFP, and unRAID is then spinning up each disk to see if there's anything being shared on them. I'm not running any add-ons, just bone-stock unRAID. Maybe those of you who don't have this issue are running the cache_dirs script, so unRAID already knows what's on each disk and doesn't need to spin the disks up?
