bluepr0




bluepr0 started following Unraid OS version 6.8.0-rc6 available

-

There seems to be some kind of bug where your VM list isn't shown; I've got one VM and it doesn't show up there.

-

bluepr0 started following unRAID 6.7.2 becomes unresponsive and then crashes and Docker Containers - always update ready

-

Glad it's a bug; it was driving me crazy, as it started happening without me changing anything.

-

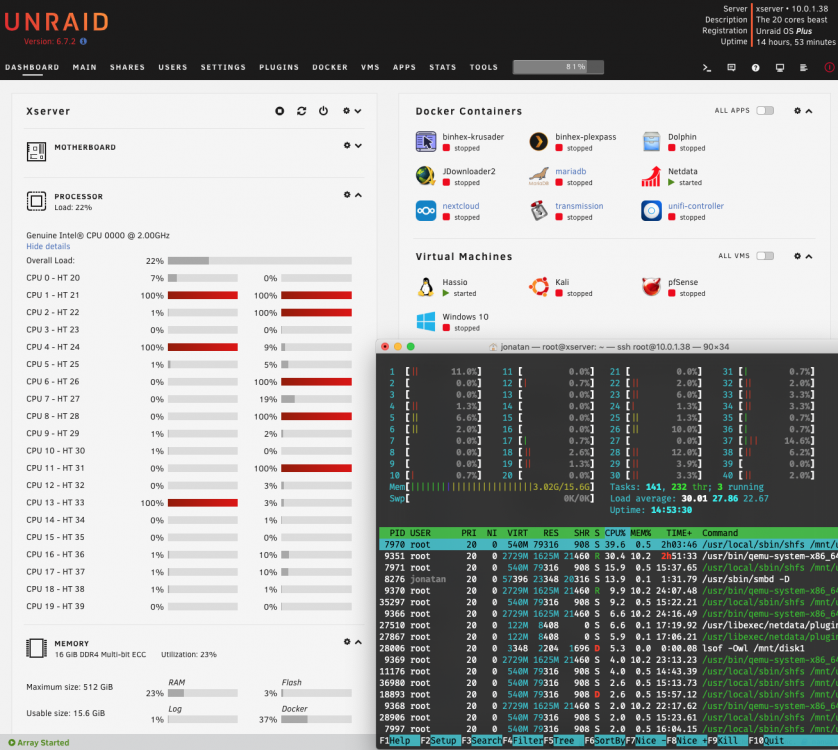

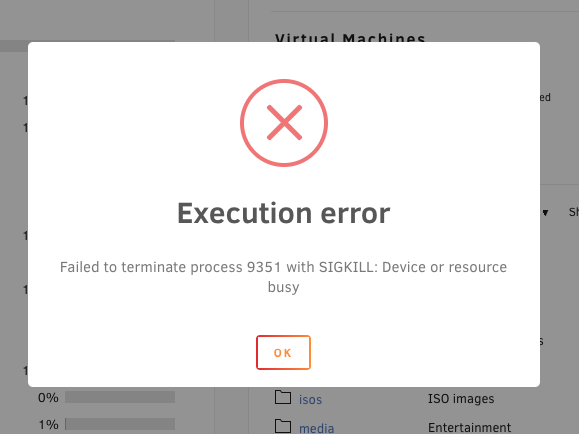

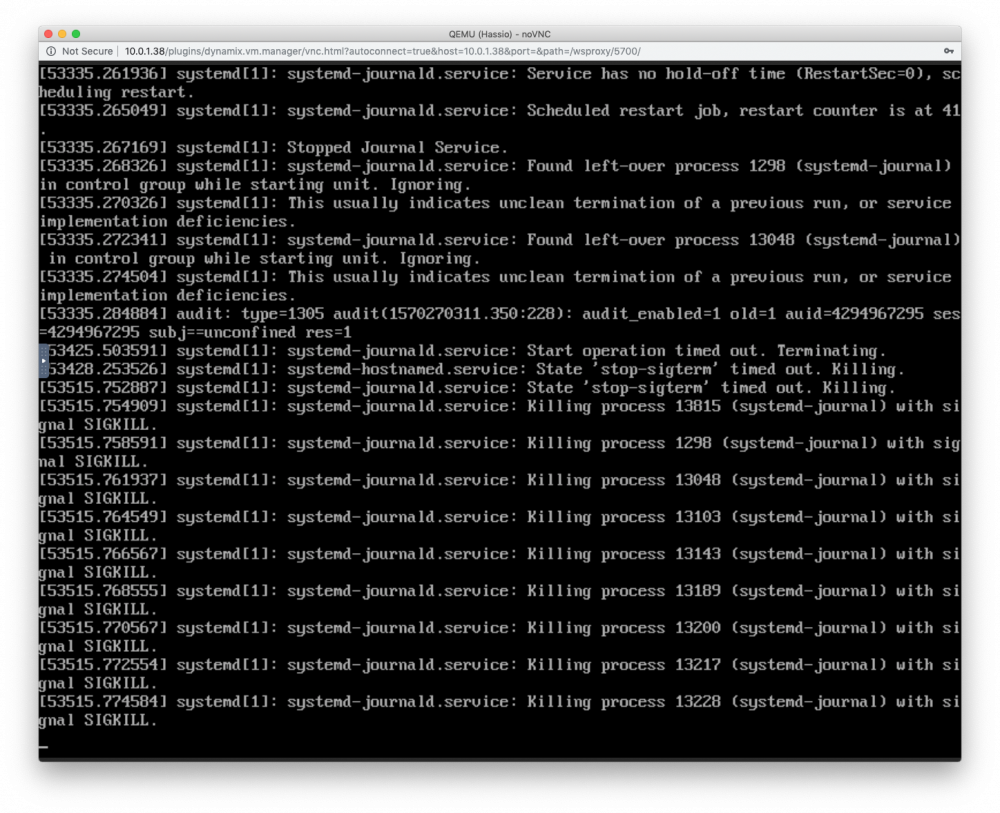

Hello! I've been running flawlessly unRAID 6.7.2 since it came out. However since a couple of days the server is hanging completely and I'm unable to bring it back unless I force restart. Wake up this morning and it was looking like this As you can see on the web interface, some of the cores are blocked at 100% (however that doesn't seem to be the case if you get into terminal > ssh > htop. I was only running a VM, because this happened to me a few times already so I was trying to troubleshoot what's the problem. I'm also unable to stop or force shutdown the VM, I will get the message below And this is what the VM itself shows in the video output By the time I try to force shutdown the VM I'm not even able to get into the web interface, but I still get SSH access. I have been reading the Help post here https://forums.unraid.net/topic/37579-need-help-read-me-first/ and followed the instructions, first I run diagnostics command to create the ZIP, however the powerdown command is not working. It won't shutdown In the logs > libvirt.txt I'm seeing the same message I attached before in the screenshot. However I'm not sure how to troubleshoot or what's really the culprit of these hangs. Any help would be greatly appreciated so I can move forward and find a solution. Thanks! xserver-diagnostics-20191005-1253.zip

-

bluepr0 started following Unraid OS version 6.7.0-rc6 available and 6.7.0 Time Machine drive

-

Hello, I'm currently using Time Machine just fine on my current iMac. However, I recently bought a new one, and when installing or restoring the system I'm not getting the Time Machine volume. I'm not entirely sure how to debug this: on one machine I can see it and use it perfectly, and on the other it's not even showing up. Are there any steps I can take to see what's going on? Both machines are on Ethernet and connected to the network (I can see both). Thanks!

-

Hello! Thanks so much for putting this docker together. Looks like it's working like a charm... I'm probably not renewing my Dropbox subscription next year. Anyway! I just wanted to ask whether the Nextcloud docker could be preventing the disk that holds the nextcloud share from going into standby mode. Even though it hasn't synced anything since yesterday, I'm still seeing my disk up. If so, is there a way I can avoid this and let my disk go to sleep? Thanks!

-

Shameless bump in case someone can shed some light.

-

Updated successfully from rc5.

-

bluepr0 started following [Support] Linuxserver.io - Nextcloud

-

It seems to be working... yaaay! Thanks a lot! I will do a check for sure; something weird definitely happened.

-

Alright, I removed it and added it back, and now when I run VMDelay it actually turns on the VMs (so it's triggering the other script). I will try restarting just to confirm it's finally working. Thanks!

-

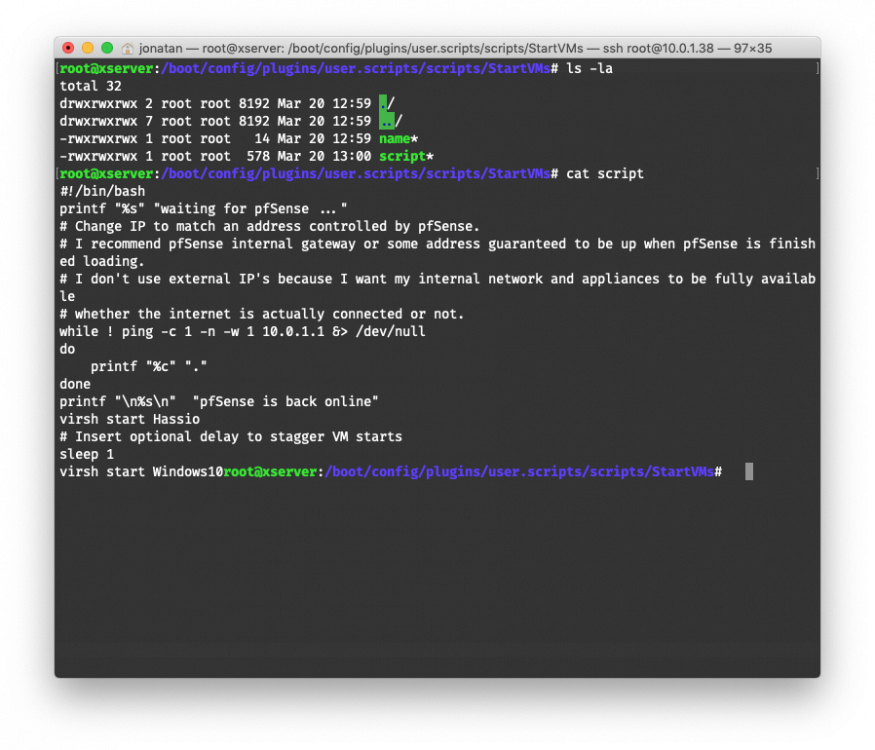

Also, if I do

    root@xserver:~# cd /boot/config/plugins/user.scripts/scripts/StartVMs
    -bash: cd: /boot/config/plugins/user.scripts/scripts/StartVMs: No such file or directory
    root@xserver:~#

I can get into the scripts folder

    root@xserver:~# cd /boot/config/plugins/user.scripts/scripts/
    root@xserver:/boot/config/plugins/user.scripts/scripts# ls -la
    total 56
    drwxrwxrwx 7 root root 8192 Mar 20 12:59 ./
    drwxrwxrwx 3 root root 8192 Mar 19 21:19 ../
    drwxrwxrwx 2 root root 8192 Mar 20 12:59 StartVMs/
    drwxrwxrwx 2 root root 8192 Mar 20 12:59 VMDelay/
    drwxrwxrwx 2 root root 8192 Mar 19 21:14 delete.ds_store/
    drwxrwxrwx 2 root root 8192 Mar 19 21:14 delete_dangling_images/
    drwxrwxrwx 2 root root 8192 Mar 19 21:14 viewDockerLogSize/
    root@xserver:/boot/config/plugins/user.scripts/scripts#

But then I can't O_O

    root@xserver:/boot/config/plugins/user.scripts/scripts# cd StartVMs
    -bash: cd: StartVMs: No such file or directory
    root@xserver:/boot/config/plugins/user.scripts/scripts#

I can get inside VMDelay

    root@xserver:/boot/config/plugins/user.scripts/scripts# ls
    StartVMs/  VMDelay/  delete.ds_store/  delete_dangling_images/  viewDockerLogSize/
    root@xserver:/boot/config/plugins/user.scripts/scripts# cd VMDelay/
    root@xserver:/boot/config/plugins/user.scripts/scripts/VMDelay# ls -la
    total 32
    drwxrwxrwx 2 root root 8192 Mar 20 12:59 ./
    drwxrwxrwx 7 root root 8192 Mar 20 12:59 ../
    -rwxrwxrwx 1 root root    7 Mar 20 12:59 name*
    -rwxrwxrwx 1 root root   85 Mar 20 12:59 script*
    root@xserver:/boot/config/plugins/user.scripts/scripts/VMDelay#

I will try to delete and add the script again.
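A directory that shows up in `ls` but cannot be entered by typing its name is sometimes a sign that the stored name contains something invisible, such as a trailing space or a control character. Nothing in this thread confirms that this was the cause here, so treat it purely as an assumption worth ruling out. The helper below (`show_names` is a made-up name for illustration) prints each entry with shell quoting, so any odd character becomes visible:

```shell
#!/bin/bash
# show_names (hypothetical helper): print every entry of a directory using
# bash's %q quoting, so trailing spaces or control characters in a name
# show up as escapes instead of being invisible.
show_names() {
    local dir=${1:-.} entry
    for entry in "$dir"/*; do
        # Skip the unexpanded pattern when the directory is empty.
        [ -e "$entry" ] || continue
        # Strip the leading path and print only the quoted name.
        printf '%q\n' "${entry##*/}"
    done
}
```

For example, `show_names /boot/config/plugins/user.scripts/scripts` would print a plain `StartVMs` for a clean name, while a name with a trailing space would appear as `StartVMs\ `.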

-

This

    root@xserver:~# /boot/config/plugins/user.scripts/scripts/StartVMs/script
    -bash: /boot/config/plugins/user.scripts/scripts/StartVMs/script: No such file or directory
    root@xserver:~#

VERY WEIRD O_O

-

Nope, I get this output but the VMs stay off

    root@xserver:~# echo "/boot/config/plugins/user.scripts/scripts/StartVMs/script" | at now
    warning: commands will be executed using /bin/sh
    job 8 at Wed Mar 20 15:43:00 2019
    root@xserver:~#
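Note that `at` accepts the job even when the path it is given does not exist; the failure only happens later, when the job runs, and it is not shown in the terminal. A minimal sketch of a guard, assuming the path contains no spaces (`queue_script` is a hypothetical name, not part of the User Scripts plugin), could check the file before queueing it:

```shell
#!/bin/bash
# queue_script (hypothetical helper): hand a script path to at(1) only if
# the file actually exists, so a bad path fails loudly instead of silently.
queue_script() {
    local script=$1
    if [ ! -f "$script" ]; then
        # %q makes stray spaces or control characters in the path visible.
        printf 'script not found: %q\n' "$script" >&2
        return 1
    fi
    # Same mechanism as the original one-liner: at reads the command from stdin.
    printf '%s\n' "$script" | at now
}
```

Called as `queue_script /boot/config/plugins/user.scripts/scripts/StartVMs/script`, it would print an error and return non-zero in the situation shown above, rather than reporting a queued job.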

-

Nope, if I run the VMDelay script it runs fine, but it doesn't seem to fire the other script; at least the VMs are not getting started. If I run StartVMs manually they do get started, so I would guess it's not a problem with the script itself.

I copied the script into Atom to modify it and then pasted it into the script file on unRAID. Here's the text as well:

    #!/bin/bash
    printf "%s" "waiting for pfSense ..."
    # Change IP to match an address controlled by pfSense.
    # I recommend pfSense internal gateway or some address guaranteed to be up when pfSense is finished loading.
    # I don't use external IP's because I want my internal network and appliances to be fully available
    # whether the internet is actually connected or not.
    while ! ping -c 1 -n -w 1 10.0.1.1 &> /dev/null
    do
        printf "%c" "."
    done
    printf "\n%s\n" "pfSense is back online"
    virsh start Hassio
    # Insert optional delay to stagger VM starts
    sleep 1
    virsh start Windows10

And the other one:

    #!/bin/bash
    echo "/boot/config/plugins/user.scripts/scripts/StartVMs/script" | at now

Thanks so much for your help ❤️
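The wait loop in the first script runs forever if pfSense never comes up. As a hedged variation rather than a fix for the problem described here, the same idea can be written with an attempt limit. `wait_for` is a hypothetical helper name, and the limit and delay values in the usage note are arbitrary assumptions:

```shell
#!/bin/bash
# wait_for (hypothetical helper): run a probe command repeatedly until it
# succeeds, giving up after a fixed number of failed attempts.
# Usage: wait_for MAX_TRIES DELAY_SECONDS COMMAND [ARGS...]
wait_for() {
    local max_tries=$1 delay=$2 tries=0
    shift 2
    until "$@" &> /dev/null; do
        tries=$((tries + 1))
        # Stop once the attempt budget is used up.
        if [ "$tries" -ge "$max_tries" ]; then
            return 1
        fi
        # Progress dot, as in the original script.
        printf '.'
        sleep "$delay"
    done
    return 0
}
```

In the StartVMs script this could be used as `if wait_for 300 1 ping -c 1 -n -w 1 10.0.1.1; then virsh start Hassio; sleep 1; virsh start Windows10; fi`, so the VMs are only started once the ping succeeds and the script exits on its own otherwise.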

-

-