-

Posts

61 -

Joined

-

Last visited

Converted

-

Gender

Undisclosed

Recent Profile Visitors

1799 profile views

aterfax's Achievements

Rookie (2/14)

10

Reputation

-

Was this an issue for other users? Did this get resolved in the container?

-

Likewise, reverting now. If the docker image has been updated / rebased on Debian a cursory Google suggests that this may be related: https://github.com/matrix-org/synapse/issues/4431 Edit: Reverting to the previous image works fine. Filed an issue on Github for this.

-

By default the apt mirroring seems to be missing the cnf directory. This caused my ubuntu machines to throw the 'Failed to fetch' error from my original post. I added the cnf.sh linked from above to the config directory, edited it to support "jammy" then ran it from inside the running container to download the missing files. Script as follows: #!/bin/bash cd /ubuntu-mirror/data/mirror/ for p in "${1:-jammy}"{,-{security,updates}}\ /{main,restricted,universe,multiverse};do >&2 echo "${p}" wget -q -c -r -np -R "index.html*"\ "http://archive.ubuntu.com/ubuntu/dists/${p}/cnf/Commands-amd64.xz" wget -q -c -r -np -R "index.html*"\ "http://archive.ubuntu.com/ubuntu/dists/${p}/cnf/Commands-i386.xz" done This resolved the 'Failed to fetch' problem. This could be added to the container in one way or another I suspect, but it does not appear to be something that needs to be ran regularly according to the link above.

-

Is the ich777/ubuntu-mirror docker working correctly? Seeing errors: E: Failed to fetch https://ubuntu.mirrors.domain.com/ubuntu/dists/jammy/main/cnf/Commands-amd64 404 Not Found [IP: 192.168.1.3 443] Which may relate to: https://www.linuxtechi.com/setup-local-apt-repository-server-ubuntu/ Note : If we don’t sync cnf directory then on client machines we will get following errors, so to resolve these errors we have to create and execute above script. Edit: Following the instructions above to grab the cnf directory seems to have resolved the issue.

-

General warning from me, I seem to be getting horrible memory leaks with Readarr. After a few hours it consumes all RAM / CPU and grinds my Gen 8 microserver to a halt.

-

To be explicit with my volume mounts for SSL working: /data/ssl/server.crt → /mnt/user/appdata/letsencrypt/etc/letsencrypt/live/mailonlycert.DOMAIN.com/cert.pem /data/ssl/ca.crt → /mnt/user/appdata/letsencrypt/etc/letsencrypt/live/mailonlycert.DOMAIN.com/chain.pem /data/ssl/server.key → /mnt/user/appdata/letsencrypt/etc/letsencrypt/live/mailonlycert.DOMAIN.com/privkey.pem I do not recall the exact details of why the above is optimal but I suspect that Poste is handling making it's own full chain cert which results in some cert mangling if you do give it your fullchain cert rather than each separately (various internal services inside the docker need different formats) - I believe that without the mounts as above the administration portal will be unable to log you in. @brucejobs You might want to check if this is working for you / Poste may have fixed the above. ---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- To move back to the Swag docker itself. My own nginx reverse proxy config for the Swag docker looks like: # mail server { listen 443 ssl http2; server_name mailonlycert.DOMAIN.com; ssl_certificate /etc/letsencrypt/live/mailonlycert.DOMAIN.com/fullchain.pem; ssl_certificate_key /etc/letsencrypt/live/mailonlycert.DOMAIN.com/privkey.pem; ssl_dhparam /config/nginx/dhparams.pem; ssl_ciphers 'ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-AES256-GCM-SHA384:DHE-RSA-AES128-GCM-SHA256:DHE-DSS-AES128-GCM-SHA256:kEDH+AESGCM:ECDHE-RSA-AES128-SHA256:ECDHE-ECDSA-AES128-SHA256:ECDHE-RSA-AES128-SHA:ECDHE-ECDSA-AES128-SHA:ECDHE-RSA-AES256-SHA384:ECDHE-ECDSA-AES256-SHA384:ECDHE-RSA-AES256-SHA:ECDHE-ECDSA-AES256-SHA:DHE-RSA-AES128-SHA256:DHE-RSA-AES128-SHA:DHE-DSS-AES128-SHA256:DHE-RSA-AES256-SHA256:DHE-DSS-AES256-SHA:DHE-RSA-AES256-SHA:AES128-GCM-SHA256:AES256-GCM-SHA384:AES128-SHA256:AES256-SHA256:AES128-SHA:AES256-SHA:AES:CAMELLIA:DES-CBC3-SHA:!aNULL:!eNULL:!EXPORT:!DES:!RC4:!MD5:!PSK:!aECDH:!EDH-DSS-DES-CBC3-SHA:!EDH-RSA-DES-CBC3-SHA:!KRB5-DES-CBC3-SHA'; ssl_prefer_server_ciphers on; location / { proxy_set_header Host $host; proxy_set_header X-Real-IP $remote_addr; proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for; proxy_set_header X-Forwarded-Proto $scheme; proxy_pass https://10.0.0.1:444; proxy_read_timeout 90; proxy_redirect https://10.0.0.1:444 https://mailonlycert.DOMAIN.com; } } Some adjusting if you have multiple SSL certs would be needed and you should take care if using specific domain certs ala documentation here: https://certbot.eff.org/docs/using.html#where-are-my-certificates The SSL configuration is effectively duplicated from: /config/nginx/ssl.conf thus could be simplified if you are only using one certificate file with: include /config/nginx/ssl.conf Likewise for the proxy configuration you can simplify if content with the options in /config/nginx/proxy.conf with: include /config/nginx/proxy.conf; ---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- When using includes just be sure that the included file has what you need e.g. The option: proxy_http_version 1.1; Is particularly important if you are using websockets on the internal service. In some cases (Jellyfin perhaps) you may also want additional statements like the following for connection rate limiting: Outside your server block: limit_conn_zone $binary_remote_addr zone=barfoo:10m; Inside your server block: location ~ Items\/.*\/Download.* { proxy_buffering on; limit_rate_after 5M; limit_rate 1050k; limit_conn barfoo 1; limit_conn_status 429; } ---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- Cheers, (hope there's no typos!)

-

The SWAG docker uses certbot........ https://github.com/linuxserver/docker-swag/blob/master/Dockerfile /mnt/usr/appdata/swag/KEYS/letsencrypt/ is a symlink - due to the way docker mounts things you are better avoiding trying to mount anything symlinked as the appropriate file pathing must exist in the container. If you mount the symlink it will point at things that do not exist within a given container which is why the method 1 below required you to mount the entire config folder - a bad practice. Mounting a symlink of a cert directly will work, but mounting directly from /mnt/usr/appdata/swag/KEYS/letsencrypt/ will almost certainly break the minute you attempt to get multiple certs for more than one domain. You appear to be talking about generating a single certificate with multiple subdomains.... this is going to generate only one certificate and one folder it sits in. Feel free to make an issue on their Github if you are so convinced that you know their container better than they do: https://github.com/linuxserver/docker-swag/issues You can argue the toss about how you should correctly mount things - but you really should be doing method 2. https://hub.docker.com/r/linuxserver/swag I am not using the letsencrypt docker, I am using swag which is a meaningless distinction since they are the same project with a different name due to copyright issues. You do not really appear to be reading anything linked properly nor understanding anything fully. I'm not continuing with this dialogue.

-

You are familiar with the concept of the past I assume? Edit: Here are the docs you need, your certificate files are not in the keys folder: https://certbot.eff.org/docs/using.html#where-are-my-certificates

-

1 - Yes these are paths. 2 - I added mail.domain.com - naturally you need to have setup your CNAME / MX records and forward ports in your router/network gateway to the Poste.io server. If you wish for the web GUI of poste.io also to be accessible externally you will also need to setup the correct reverse proxy with SWAG. I don't know what you mean by .config 3 - I blanked the folder in the screen shot as I do not want to share my domain name, but yes the subfolder with your domain name / subdomain name is where the PEM files should be if you have setup SWAG correctly to get SSL certificates. If you are lacking certificates you have probably got it setup incorrectly.

-

EDIT: UPDATED MAY 2021! I ended up mounting the default certificate files in the docker directly to the certificates from my letsencrypt docker: To be explicit with my volume mounts for SSL working: /data/ssl/server.crt → /mnt/user/appdata/letsencrypt/etc/letsencrypt/live/mailonlycert.DOMAIN.com/cert.pem /data/ssl/ca.crt → /mnt/user/appdata/letsencrypt/etc/letsencrypt/live/mailonlycert.DOMAIN.com/chain.pem /data/ssl/server.key → /mnt/user/appdata/letsencrypt/etc/letsencrypt/live/mailonlycert.DOMAIN.com/privkey.pem I do not recall the exact details of why the above is optimal but I suspect that Poste is handling making it's own full chain cert which results in some cert mangling if you do give it your fullchain cert rather than each separately (various internal services inside the docker need different formats) - I believe that without the mounts as above the administration portal will be unable to log you in. As I mentioned further up in this thread you can alternatively mount .well-known folders between your Poste IO and letsencrypt docker - this will not work if your domain has HSTS turned on with redirects to HTTPS (or this was the case with the version of letsencypt in the docker a while ago as it was reported here: https://bitbucket.org/analogic/mailserver/issues/749/lets-encrypt-errors-with-caprover )

-

You have advanced view turned on, you can toggle this at the top right.

-

I too would like these to be added however I have solved the problem elsewhere before: https://forums.unraid.net/topic/71259-solved-docker-swarm-not-working/?do=findComment&comment=760934

-

Many thanks for the explanation - I was not aware that certain things would have issues with the virtual paths as it were. The script for L4D you sent me works great and starts everything in a screen which is super. In terms of screen / tmux support for gameservers - I have gotten quite used to using https://linuxgsm.com/ which keeps each server console in its own tmux and has a robust logging setup and a lot of support for many game servers. The access to the console via SSH or if in a docker potentially via the Unraid or portainer console is certainly my preference to RCON given RCON's shortcomings. The developer (Dan Gibbs) has actually been looking for someone able to maintain / make a docker from his project for some time - so you might find working with his project easier to maintain / supports more game server types? His solution as it stands is excellent supporting automatic install of plugins etc... I'd love to see a collab!

-

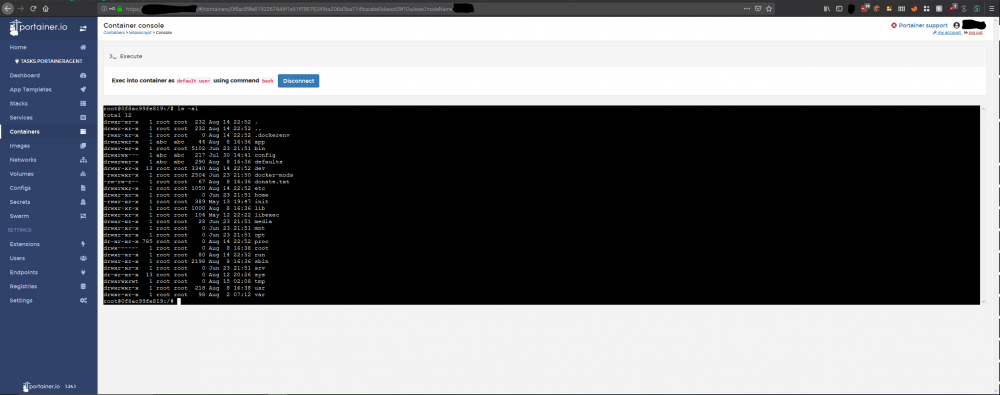

I do mean over SSL - you can access your docker consoles from the docker page inside Unraid after all or in setups like mine I have access to all running dockers via a portainer instance being hosted with SSL protection. I was using /mnt/user/appdata/l4d2 before getting it working on the cache drive- is there a reason why you cannot run this from an appdata dir like all my other dockers? I really have no idea what could be causing a segfault in /mnt/user/appdata/l4d2 unless the file system is doing something whacky in the background with the cache. E.g. below:

-

Very odd - I now seem to have it working ok but only when on the cache drive.... Edit - the ask for access via screen for server console is kinda agnostic of the server type - some things will not support rcon and rcon itself is an insecure protocol - I'd rather just login over SSL and deal with things via portainer or via Unraid docker console.