Posts posted by Kaizac

-

2 minutes ago, HpNoTiQ said:

Forgot to say, I've already tried Bolagnaise's script and Docker Safe New Perms.

I already have this in my script:

# Add extra commands or filters

Command1="--rc"

Command2="--uid=99 --gid=100 --umask=002"

Did you run Docker Safe New Perms while your rclone mounts were not mounted?

And I think the default script already sets umask, so it seems like you are passing that flag twice now?

-

11 hours ago, Logopeden said:

HI all

so i use the drive api i think (i don't use team folders)

I tried to use an SA account but i think i messed that up (ended up losing files/making duplicates etc.)

But back to my question.

Today i have:

User 1 using a crypt with a folder in it for everything (let's call this the standard setup)

can i add a second user (user 2) to help with the upload to get 2x 700gb a day?

Feel free to PM if you feel you can help.

You can only use service accounts with team drives. And I don't think multiple actual accounts work for your own drive. You would have to share the folder with the other account. But I think it will then fail on making the rclone mount cause rclone can't see the shared folder.

If you have the option, team drives are the easiest option for everything. Including being consistent with the storage limits of Google Workspace.

7 hours ago, HpNoTiQ said:

Hi,

Root user is driving me nuts!

Since I upgraded Unraid to 6.10, I can't manage to keep my dockers working.

Every docker is using -e 'PUID'='99' -e 'PGID'='100' (I've 2 sonarrs (1080-4k) and 2 radarrs, 2 syncars + 1 qbittorrent + Cloudplow).

My folders are :

Movies/HD and Movies/UHD.

Every once in a while, my folders HD and UHD are switching owner to root and then Sonarr/Radarr can't import anymore!

My script is updated and I think now that it's mergerfs running as root (as my scripts).

What should I try to keep it working?

Thank you,

Read a few posts up; the responses to Bjur from me and Bolagnaise should solve your issue.

On 9/16/2022 at 11:30 AM, Digital Shamans said:

Thank you for the reply.

No, I used Space Invader's scripts from a 5yrs old yt video.

Just ran the mount script from the GitHub linked in the 1st post in this topic.

https://github.com/BinsonBuzz/unraid_rclone_mount

The script ran successfully (so it says) and did tonnes of something (many lines were produced).

At the end, it changed nothing, except nesting another gdrive folder within the gdrive folder.

None of the files are visible in Krusader (they are in Unraid).

When trying to copy a file into a secure or gdrive folder, I get a prompt about no access.

Why use Krusader at all?

Shouldn't rclone itself be sufficient?

I don't want to be rude. But you have no idea what you are doing. And I don't think we can understand what you are trying to do. I don't see why you bring in Krusader which is just a file browser which can also browse to mounted files. I suggest you first read up on rclone and then what the scripts in this topic do before you continue. Or be precise in what you are trying to accomplish.

-

Got a 500 internal error using this just now. And after that my plex database got malformed and is broken now. So be careful and make sure you backup your database.

-

7 minutes ago, Bjur said:

Thanks for the help guys.

I've stopped all my rclone mounts, and ran the permission tool on my disk. I didn't include cache, since that was not advised, so I have included dockers.

Should I also run it on cache with dockers? It seems they are correct folder-wise.

I added the UID, PID, Umask to all my user scripts just in case.

I will try and test now.

Don't overthink it. You have the Tools > New Permissions functionality which you can use to fix permissions on a folder level. For you that would be (I suppose) mount_rclone and mount_mergerfs and your localdata folder if you have it and if the permissions are not correct there. You don't need to run these permissions on your appdata/dockers.

I don't know if you saw the edit of Bolagnaise above? Read it, cause your mount script won't work like this. Add \ to every addition you made. So I would put it like this:

# create rclone mount

rclone mount \

--allow-other \

--buffer-size 256M \

--dir-cache-time 720h \

--drive-chunk-size 512M \

--log-level INFO \

--vfs-read-chunk-size 128M \

--vfs-read-chunk-size-limit off \

--vfs-cache-mode writes \

--bind=$RCloneMountIP \

--uid 99 \

--gid 100 \

--umask 002 \

$RcloneRemoteName: $RcloneMountLocation &

You have to finish with that "&".
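To illustrate why each added flag needs the trailing backslash, here is a minimal sketch. `count_args` is a hypothetical stand-in for `rclone mount` that only reports how many arguments it received; it is not part of the mount script.

```shell
#!/bin/bash
# count_args is a hypothetical stand-in for "rclone mount": it only reports
# how many arguments it was given.
count_args() { echo "$#"; }

# Every line except the last ends in "\", so the shell reads ONE command
# and all flags reach it.
with_backslashes=$(count_args --allow-other \
  --uid 99 \
  --gid 100 \
  --umask 002)

# Without a continuation, the command ends at the newline. A following line
# such as "--uid 99" would then be run as its own (nonexistent) command
# instead of being passed as a flag.
without_backslashes=$(count_args --allow-other)

echo "with backslashes: $with_backslashes arguments"
echo "without backslashes: $without_backslashes argument"
```

The same reasoning applies to flags placed after the closing "&": that character already ends the command, so anything on later lines never reaches rclone.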

-

44 minutes ago, Bjur said:

@Kaizac I tried using the UID/PID/UMASK in userscripts mount and added it to the section in the mountscript:

# create rclone mount

rclone mount \

--allow-other \

--buffer-size 256M \

--dir-cache-time 720h \

--drive-chunk-size 512M \

--log-level INFO \

--vfs-read-chunk-size 128M \

--vfs-read-chunk-size-limit off \

--vfs-cache-mode writes \

--bind=$RCloneMountIP \

$RcloneRemoteName: $RcloneMountLocation &

--uid 99

--gid 100

--umask 002

Sonarr still won't get import access from the complete local folder where it's at.

My rclone mount folders are still showing root:

@Bolagnaise If I try the permission docker tool, I would risk breaking Plex transcoder, which I don't want.

Also if I run the tool.

Would I only have to run it once or each time I reboot?

@DZMM In regards to the Rclone share missing, it has happened a few times even when watching a movie, where I need to reboot to get that specific share working again while the other ones still working.

Why would it break your Plex transcoder?

You can try running the tool when you don't have your rclone mounts mounted. So reboot the server without the mount script and then run the tool on mount_mergerfs (and subdirectories) and mount_rclone. Maybe it will be enough for Sonarr.

-

On 8/25/2022 at 9:09 PM, Concave5872 said:

I'm unable to get my webui working with vpn enabled. Things work fine with vpn turned off.

I did some searching, and in all the similar cases I found, there was a mismatch in the WEBUI_PORT and container port forwarding (usually, the WEBUI_PORT was set to something like 8123 but the forwarded container ports were still set to 8080). I think my ports are being set correctly.

My VPN appears to be working correctly, and I can successfully ping google.com from the container when the VPN is up, I just can't load the webui.

Does anyone have ideas of what else I could be missing? I've included my supervisord.log file below. Thanks!

I'm having the same issue. Sometimes it's working for a few days and then it's not reachable again. So I'm just moving back to Deluge. Was a lot more stable and actually moved my files to the right folders.

-

26 minutes ago, DZMM said:

Sounds dangerous and a bad idea sharing metadata and I think they'd need to share the same database, which would be an even worse idea.

I'd try and solve the root cause and see what's driving the API ban as I've had like 2 in 5 years or so e.g. could you maybe schedule Bazarr to only run during certain hours? Or, only give it 1 CPU so it runs slowly?

I don't use Bazarr but I think I'm going to start as sometimes in shows I can't make out the dialogue or e.g. there's a bit in another language and I need the subtitles, but I've no idea how Plex sees the files. I might send you a few DMs if I get stuck

Yeah, I was afraid it would be a bad idea, and so it is. I'll have to find a way to trigger the script automatically when it gets API banned.

API bans didn't happen before, but I had to rebuild all my libraries last week after some hardware changes and bad upgrades from Plex.

Regarding Bazarr, you can hit me up in my DM's yes. I have everything you need and also how to get it to work with Autoscan so Plex sees the subtitles.

-


12 minutes ago, DZMM said:

IMO you can't go wrong with using /user and /disks - at least for your dockers that have to talk to each other. I think the background to Dockers is they were setup as a good way to ringfence access and data. However, we want certain dockers to talk to each other easily!

Life gets so much easier settings paths within docker WEBGUIs when your media mappings look like my Sonarr mappings below:

Correct, like that. I would only use /disks though when you actually use unassigned devices, because then you also have to think about the Read/Write - Slave access mode. If you just use cache and array folders, /user alone is sufficient. But I think @maxse is mostly "worried" about setting the right paths/mappings inside the docker itself. And that just requires going through the settings, checking which mappings are used and altering them where needed.

-

@DZMM can you help me with your brainpower?

I'm using separate rclone mounts for the same Team Drives but with different service accounts (e.g. each Bazarr instance on its own mount, Radarr and Sonarr, Plex, Emby, and 1 for normal usage). Sometimes one of those gets API banned. Mostly Plex lately, so I have a script to mount a backup mount, swap that one into the mergerfs with the Plex local folder and restart Plex. It then works again.

However, I'm wondering if you can run 2 Plex instances: 1 to do all the API stuff, like scanning and metadata refreshing, and 1 for the actual streaming. You can point the 2 Plex instances to their own mergerfs folders, which contain the same data. And then I'm thinking you can share the Library. But I don't think this will work, right? Because the streaming Plex won't get real-time updates from the Library data changed by the other Plex instance, right? And you would have to be 100% sure you disable all the jobs and tasks on the streaming Plex instance.

What do you think, possible or not?

-

On 9/4/2022 at 3:10 AM, maxse said:

In terms of setting up plex itself. Am I right that it would be set not to scan library periodically but to only scan when a change is detected, and partial scan of that changed directory? Will plex be able to detect the change?

Also, does this mean that I won't be able to have plex add thumbnails? Because it will scan the entire library that would be in the cloud and would get API banned for scanning on all of the files? I currently like to have the thumbnails, and detecting intros etc...

Also, how would I check what you mentioned? I'm basically a newbie when it comes to dockers. I understand how path mappings work but I just followed spaceinvaderone's videos when I originally set them up. I use binhex for the Arrs... do you happen to know if it will work by removing the /data folder like you mentioned? I'm assuming just mapping /data to whatever mergerfs mount I need isn't the same, correct?

Again thank you soo much for all this. I appreciate you! I'm actually thinking of building an itx build just for this with 2 18tb drives mirrored to store irreplaceable files and just consolidate my server! Just want to make sure I understand everything and will be able to do it before I start to spend the $ on new hardware, etc...

For Plex you can use the only partial scan indeed. And then from Sonarr and Radarr I would use the connect option to send Plex a notification. It will then trigger a scan. Later on when you are more experienced you can set up Autoscan which will send the triggers to Plex.

Thumbnails will be difficult, but if they are really important for you, you can decide to keep your media files on your local storage for a long while so Plex can do everything it needs to do. Generally it's advised to just disable thumbnails because it requires a lot of CPU power and time, especially if you're going for a low power build. It all depends on your library size as well of course.

I've also disabled all the other planned tasks in the maintenance settings like creating chapters and such and especially media analysis. What I do use is the Intro Detection for Series, I can't do without that. And generally series are easier to grab good quality right away and require less upgrades than Movies, so upgrades are less of a problem.

Regarding the paths you can remove or edit the /data paths and add /user in the docker template. Within the docker itself you need to check the settings of that docker and change the paths accordingly. I know binhex uses /data so if you use mostly binhex it will often work ok. But because you use different path/mapping names Unraid will not see it as the same drive and thus will see it as a move from one drive to another, instead of within the same drive. And moving server side is pretty much instant, but if you use the wrong paths it will go through your server back to the cloud storage.

So again, check your docker templates for the mappings/paths that point to media files and such. Delete those and use only 1 high-level path, like /mnt/user or /mnt/user/mount_unionfs/Gdrive. Then go into the docker and change the paths used inside. Once you know what you are doing and what to look for, it's very simple and quick. Just do it docker by docker.

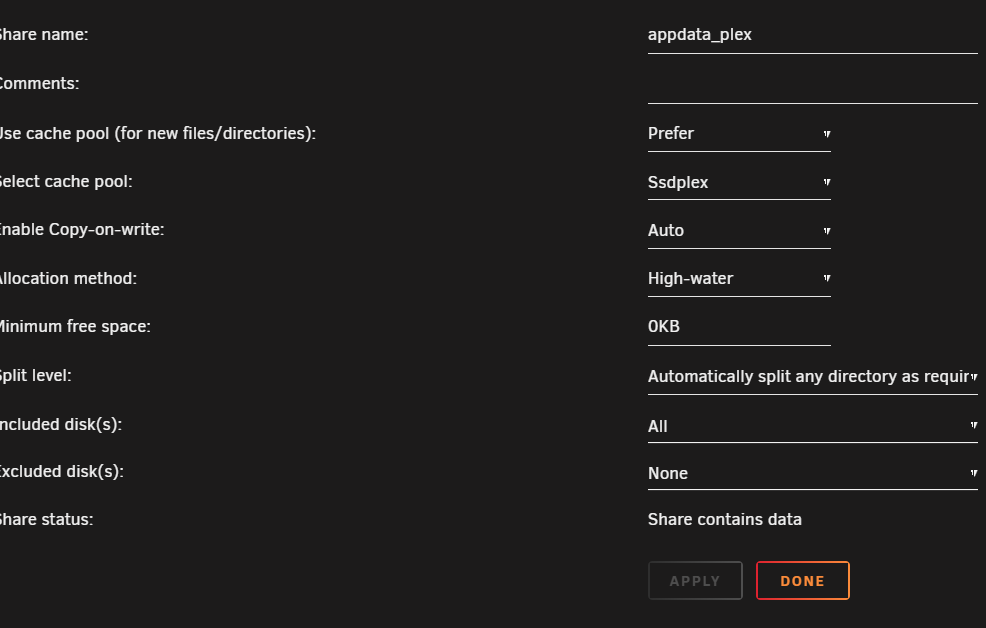

Regarding your ITX build, if you have the money I would recommend getting at least 1 cache SSD, and better still 2 SSDs (1 TB per SSD or more) for cache, put in a pool with BTRFS. It's good for your appdata, but you can also run your downloads/uploads and Plex library from it. Especially the downloading/uploading will be better from the SSD, because it does not have to switch between reading and writing like an HDD. Using 1 SSD for cache is fine as well, just be careful what other data you let go through your cache SSD, because if your SSD dies (and it will often die instantly, unlike an HDD) your data will be lost. And make backups of course. Just general good server housekeeping ;).

EDIT: If you are unsure if you are doing the mappings right, just show screenshots of before and after from the template and inside the docker if you want and we can check it for you. Don't feel bothered doing that, I think many struggle with the same in the beginning.

-

2 hours ago, Bjur said:

Thanks for the answer @DZMM. I will strongly consider if it's worth the effort because it is working fine that part.

But can you answer whether uid, gid and umask should be in the script above?

Also I've seen a couple of times that my movies share disappears suddenly without any reason; all other scripts don't have this. Am I the only one who has seen this?

For the GID and UID you can try the script Bolagnaise put above this post. Make sure you alter the directory to your folder structure. For me this wasn't the solution, cause it was often stuck on my cloud folders. But just try it, maybe it works and you don't need to bother further.

If that doesn't solve your issue, then you need to put the GID and UID commands where you suggested yes.

We discussed your local folder structure earlier. The whole point of using mergerfs and the merged folder structure (/mnt/user/mount_unionfs/gdrive instead of /mnt/user/local/gdrive) is that it doesn't matter to your server and dockers if the files are actually local or in your cloud storage, it treats it the same.

If you then used the upload script correctly, it will only upload the files that are actually stored locally, because the upload script does look at the local files, not the merged files/folders.

The disappearing of your folders is not something I've noticed myself. But are you looking at the local folder or the merged folder when you see that happening? If you are looking at the local folders, that would explain it, because the upload script deletes source folders once they are uploaded and empty. If it happens when you look at your merged folder, then it seems strange to me.

-

9 minutes ago, Bjur said:

Thanks for the answer.

I'm not sure I follow. What's wrong with my mappings.

When I start to download it puts it in local folder before uploading it.

The dockers are mounted path in media folder in mount_merger folders.

All is what I was adviced in here and have worked before.

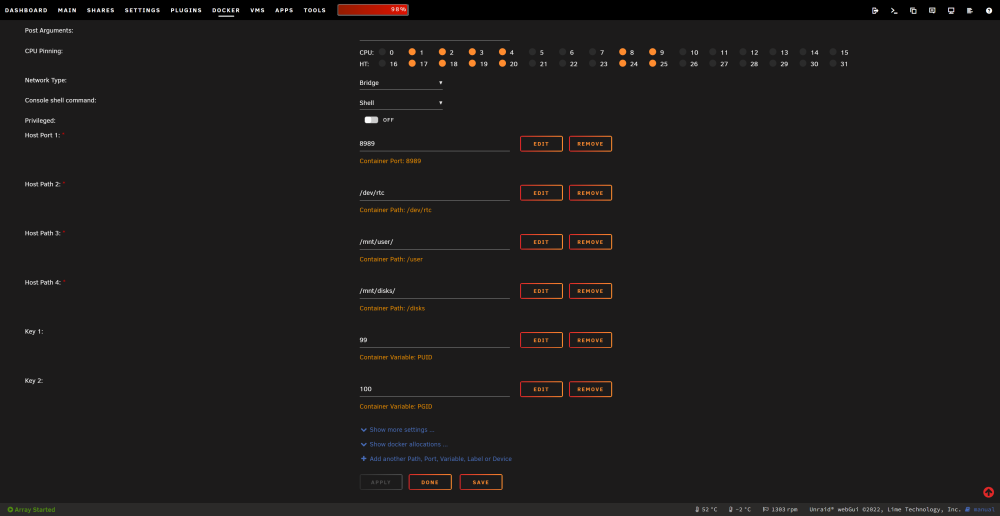

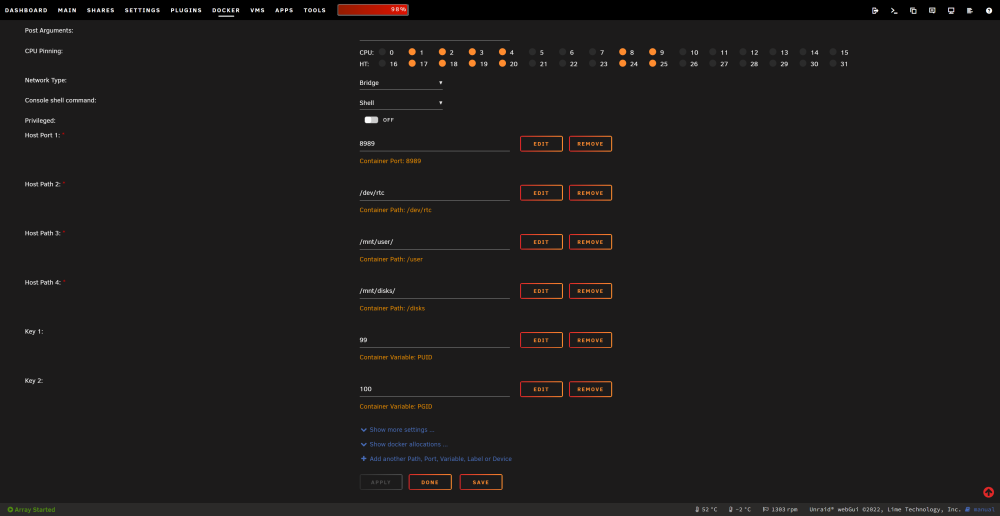

The /dev/rtc is only shown once in template, but that's default.

The other screenshots from ssh is the direct paths as asked for.

I'm not an expert, so please share advice on what I should map differently.

With the mappings what I'm saying is that you can remove the paths for /incomplete /downloads for Sab and for Sonarr /downloads and /series and just replace those with 1 path to /mnt/user/. Then inside the dockers for example Sab you will just point your incomplete folder to /mnt/user/downloads/incomplete instead of /incomplete. That way you keep the paths uniform and dockers will look at the same file through the same route (this is often a reason for file errors).

What I find confusing when looking at your screenshots again, is that you point to the local folders. Why are you not using the /mnt/unionfs/ or mnt/mergerfs/ folders?

9 minutes ago, Bjur said:

PS: in regards to the mount scripts.

Is it the mount script to mount the shares in user scripts, else I don't know where it's located.

I don't even know where the merger mount is.

I'm talking about the user script, but within (depending on what your script looks like) you have a part that is the mount and after the mount you merge the mount (cloud) with a local folder. So I was talking about the 2/3 flags that you need to add to your mount part of your user script. If you use the full template of DZMM then you can just add those 2 flags (--uid 99 --gid 100) in the list with all the other flags.

Hope this makes more sense to you?

-

16 minutes ago, Bjur said:

Well I'm not a big fan of your mappings. I don't really see direct conflicts there, but I personally just removed all the specific paths like /incomplete and such. I'm talking about the media paths here, not paths like the /dev/rtc one.

And only use /user (or in your case /mnt/user). And then within the docker use that as start point to get to the right folder. Much easier to prevent any path issues, but that's up to you.

I also had the permissions issues so what I did is adding these lines to my mount scripts (not the merger, but the actual mount script for your Rclone mount). Those root/root folders are the issue, since sonarr is not running as root.

--uid 99 --gid 100

And in case you didn't have it already (I didn't): --umask 002

Add these and reboot, see if that solves the importing issue.
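A quick way to check whether the flags took effect after the reboot is to compare the numeric owner of the mount folders with 99:100. This is only a sketch: `owner_matches` is a hypothetical helper, the two paths are assumptions based on the folder names used in this topic, and `stat -c` is the GNU form of the command.

```shell
#!/bin/bash
# owner_matches DIR UID GID: succeeds when DIR is owned by the given numeric
# user and group. Uses GNU stat (-c), as found on Linux.
owner_matches() {
  local dir=$1 want_uid=$2 want_gid=$3
  [ "$(stat -c '%u:%g' "$dir")" = "${want_uid}:${want_gid}" ]
}

# Example paths are assumptions; replace them with your own mount locations.
for dir in /mnt/user/mount_rclone /mnt/user/mount_mergerfs; do
  [ -d "$dir" ] || continue
  if owner_matches "$dir" 99 100; then
    echo "$dir: owned by 99:100"
  else
    echo "$dir: owner is $(stat -c '%u:%g' "$dir"), expected 99:100"
  fi
done
```

If a folder still reports 0:0 (root) while the mount is active, the flags most likely did not reach the rclone mount command.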

-

1 hour ago, Bjur said:

Hi @Bolagnaise: I have update Unraid to 6.10 stable in order to avoid any problems, but I have problems with Sonarr not moving files because of permissions.

My folders is showing this.

Which of your fixes should I use.

Should I just add

--uid 98 \

--gid 99 \

to my scripts or do I need to do some extra work.

You don't show the owners of your media folders?

But I think it's an issue with the docker paths. You need to show your docker templates for Sab and Sonarr.

-

10 hours ago, maxse said:

Thank you!

So I don't plan to do team drives or those SA, I just want to keep things as simple as possible. Dont want to spend time learning about them as that's when I see people post issues, it's with the service accounts (not even sure what they are so don't want to get into it). I'm okay with the 750GB/day and things just taking longer..

Edu account is unlimited still for a while. I just don't know if I can make a client ID since I'm not the admin for it?

I would not be using my personal gdrive for this project...

I think I'm just going to use unraid for this. I need 2 3.5" drives though to store family videos, and photos. The SFF Dell is too small for that. Reason for 2 drives is to have them RAID mirror just in case...

# Bandwidth limits: specify the desired bandwidth in kBytes/s, or use a suffix b|k|M|G. Or 'off' or '0' for unlimited. The script uses --drive-stop-on-upload-limit which stops the script if the 750GB/day limit is achieved, so you no longer have to slow 'trickle' your files all day if you don't want to e.g. could just do an unlimited job overnight.

BWLimit1Time="01:00"

BWLimit1="off"

BWLimit2Time="08:00"

BWLimit2="15M"

BWLimit3Time="16:00"

BWLimit3="12M"

This is the part I didn't understand. Do I just erase what I dont need? So if I want to upload at 200Mbps until the 750GB limit is reached. How would I change the above?

Do I just erase what I don't need?

BWLimit1=20M --drive-stop-on-upload-limit

and erase all the other lines?

Also still confused about the paths. So for plex I erase the /tv and /movies

and add a path /user and point to /mnt/user/mount_unionfs/gdrive and then just select the individual movies or shows subfolder within the plex program itself when I add libraries correct?

Now for say Radarr. The /data folder (where downloads go) and the /media where they get moved to. Do I just have /data point to /mnt/user/mount_unionfs/gdrive and /media point to /mnt/user/mount_unionfs/gdrive/Movies?

like that?

Thank you again sooo much!

You won't be able to make a Client ID indeed, which will mean a drop in performance. You should still be able to configure your rclone mount to test things. When you decide this is the way for you, I would honestly think about just getting an enterprise Google Workspace account. It's 17 euros per month and you won't run the risk of getting into trouble with the owner of your EDU account. But I can't see the depths of your wallet ;).

For BWlimit you would just put everything on 20M, don't erase and don't add any flags there. It's already done further below in the list of flags used for the upload.

BWLimit1Time="01:00"

BWLimit1="20M"

BWLimit2Time="08:00"

BWLimit2="20M"

BWLimit3Time="16:00"

BWLimit3="20M"

For Plex, yes you just use your subfolders for libraries. So /user/Movies and /user/TV-Shows.

The same goes for all dockers in your workflow, like Radarr and Sonarr, Sab/NZBget, maybe Bazarr. You remove their own /data or whatever mount points they use and add the /user path as well. So Radarr should also look into /user, but then probably /user/downloads/movies. And your Sab will download to /user/downloads/movies so Radarr can import from there. So don't use the /media and /data paths, because then you won't have the speed advantage of mergerfs.

Just be aware that when you remove these paths and put in /user, you also have to check inside the docker (software) that the /user path is also used. If it's programmed to use /data then you have to change that to /user as well.

-

17 hours ago, slimshizn said:

Yeah, we have noticed a jump in electricity here also since a couple years ago. I'm doing some serious monitoring with grafana of my setup to see what I can do to reduce that overall.

Look into Powertop if you haven't already. Can make quite a difference depending on your build(s). I don't think moving to a seedbox for electricity costs will be worth it. You'll still have to run dual systems like DZMM does. Or you would have to use just one server of Hetzner for example and do everything there. But you're easily looking at 40-50 euros per month already and then you still won't have a comparable system (in my case at least).

15 hours ago, maxse said:

wow, my mind is still blown with all this lol.

I think I'm just going to stick to unraid, it's too confusing to learn the paths on synology, and I don't want to spend all that time learning and then have no place to troubleshoot..

Quick question, will this work with an education account? I currently use it for backup with rclone, but I havent seen anyone use it with streaming like this. Will this work, or do some more features need to be enabled on an enterprise gdrive that I can't use on an edu account?

And lastly, if I name my folders the same way it's basically just a copy/paste of the scripts, correct?

Can someone please post a screen shot of the paths to set in *.arr apps and sab? I remember having an issue years ago, and followed spaceinvader one's guide, but now since the paths are going to be different, I want to make sure the apps all know where to look...

*edit*

Also, can someone explain the BW limits, and the time, how that works? I don't understand it exactly. Like if I don't want to have it time based, but just upload at 20MB/s until 750GB is reached starting at say 2AM. How would I set the parameters?

You removed your earlier posts I think regarding the 8500T. Just to be clear, your Synology is only good for serving data, it will never be able to transcode anything efficiently. Maybe a 1080p stream, but not multiple and definitely not 4K's. I personally would only consider a Synology as an offsite backup solution if you actually consider running dockers and serving transcodes especially.

Regarding the education account: I suspect you are not the admin/owner of it? As far as I know, edu accounts are unlimited as well. But I don't think you can create Team Drives there as a non-admin. I don't know what the storage limits for your personal drive are then. With the change from G Suite to Google Workspace it seems like the personal Gdrive became 1 TB and the Team Drives became unlimited. So you would have to test whether you can store over 1 TB on your personal drive if you don't have access to Team Drives. You also don't have access to Service Accounts, so you will only have your personal account with access to your personal Gdrive, for which you have to be careful with API hits/quotas. Should be fine if you just build up slowly.

If you name drives/folders the same it is indeed a copy paste, aside of the parameters you have to choose (backup yes/no, etc.). Just always do test runs first, rule 1 of starting with this stuff.

Regarding the paths, since you will probably only have 1 mount, you just have to remove all the custom directories/names of the docker. So Plex often has /tv and /movies and such in its docker template. Remove those, and for every docker in your workflow replace them with /user, pointing to /mnt/user/mount_unionfs/gdrive or whatever the name of your mount will be. This is important for mergerfs, since it will then be able to hardlink, which makes moving data a lot faster (like importing from Sab to Sonarr).
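The hardlink point can be tried out in isolation. The sketch below works in a throwaway temp directory with a dummy file, so the folder and file names are purely illustrative; it shows that a hard link within one filesystem is just a second name for the same inode, which is why such an import is near-instant. When a container sees /data and /media as two separate mounts, a hard link between them is not possible and the application has to fall back to copying.

```shell
#!/bin/bash
# Demonstration in a throwaway temp directory; nothing here touches real media.
workdir=$(mktemp -d)
mkdir -p "$workdir/downloads" "$workdir/Movies"
echo "dummy payload" > "$workdir/downloads/movie.mkv"

# A hard link is a second name for the same data, so no bytes are copied.
ln "$workdir/downloads/movie.mkv" "$workdir/Movies/movie.mkv"

# Both names should report the same inode, with a link count of 2.
src_inode=$(stat -c '%i' "$workdir/downloads/movie.mkv")
dst_inode=$(stat -c '%i' "$workdir/Movies/movie.mkv")
link_count=$(stat -c '%h' "$workdir/Movies/movie.mkv")

echo "source inode: $src_inode, imported inode: $dst_inode, links: $link_count"
rm -rf "$workdir"
```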

BW-limits cap the speed at which you upload. Look at the upload speed of your WAN connection and then decide what you want to do. For Google Drive, rclone now has a flag (--drive-stop-on-upload-limit) that stops the upload once Google reports that the daily upload limit has been reached. The situation you described is not really possible, I think. You would just set the bw-limit to 20MB/s and leave it running. If it hits the quota, it will stop because of the above flag. But cancelling an upload job while it's running is not really possible or safe to do without risking data loss. So you either blast through your 750GB and let the upload stop, or you set a bw-limit that it can run on continuously.
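As a rough worked example of the "blast through" option, this sketch estimates how long a constant limit takes to reach the daily quota. It assumes the quota is 750 decimal gigabytes and that a limit of "20M" means 20 MiB/s; `upload_seconds` is a hypothetical helper, not part of the upload script.

```shell
#!/bin/bash
# upload_seconds LIMIT_GB RATE_MIB: whole seconds needed to upload LIMIT_GB
# (decimal gigabytes) at RATE_MIB mebibytes per second.
upload_seconds() {
  local limit_gb=$1 rate_mib=$2
  echo $(( limit_gb * 1000 * 1000 * 1000 / (rate_mib * 1024 * 1024) ))
}

secs=$(upload_seconds 750 20)
printf '750 GB at 20 MiB/s: about %dh %02dm\n' $(( secs / 3600 )) $(( secs % 3600 / 60 ))
```

Under those assumptions a 20M limit reaches the quota in roughly ten hours, so a job started overnight would be stopped by the flag well before the next day.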

But I would first advise to check the edu account limits and then configure the mount itself with encryption and then see if you can actually mount it and get mergerfs running. After that you can start finetuning all the limits and such.

-


4 minutes ago, maxse said:

Yes, of course I searched a few days. I saw the backups but could not find anything on how to actually set it up for streaming and radarr/sonarr how you guys did here.

is it really the same script once I install rclone on synology? I can just copy/paste the scripts as long as I use the same share names? Because that would be amazing.

I know that synology uses different ways to point to shares and the syntax it uses in the path is different. So I'm worried that I may not be able to figure it out and wasn't sure if you guys would be able to help me on the synology since it's not Unraid anymore.

All the rclone mount mentions are the same, they are not system based. However the paths and use of mergerfs can differ.

I found this for mergerfs. https://github.com/trapexit/mergerfs/wiki/Installing-Mergerfs-on-a-Synology-NAS.

If you have that working you just need to get the right paths in the scripts.

But in the beginning just use simple versions of the script. Run the commands and then see if it's working. The scripts of DZMM are quite complex if you want to translate them to your system.

-

On 8/21/2022 at 7:48 AM, maxse said:

Hi folks,

Does anyone know how this exact thing can be done on a Synology NAS?

Don't mean to be off-topic but I love unraid and the community (had it since 2014 or so).

I can't seem to find any guides, just some random posts on reddit of people with synology saying they got it working, but don't provide any instructions how or how they even did it.

I just got a Synology and it would be awesome if I could set it up this way.

Really appreciate any help or pointing me in the right direction.

Did you really search? First thing when I google is this:

https://anto.online/guides/backup-data-using-rclone-synology-nas-cron-job/

Once you have rclone installed, and I assume you know your way around the terminal, you can follow any rclone guide to configure your mount through "rclone config". If there are specific steps you are stuck at, then we need more information to help you.

On 8/21/2022 at 7:34 PM, francrouge said:

do you have any documentation on service account ? i found some but not up to date also for teamdrive thx

Probably the same as what you found. If you follow the guide from DZMM and use the AutoRclone generator you should have a group of 100 service accounts who have access to your teamdrives. Then just put them in a folder and while configuring your mounts remove all the client id info and such and just point to the service account. For example: "/mnt/user/appdata/rclone/service_accounts/sa_tdrive_plex.json". This way I have multiple mounts for the same teamdrive, but based on different service account.

This way, when you hit an API quota, you can swap your mergerfs folder, for example from "/mnt/user/mount_rclone/Tdrive_Plex" to "/mnt/user/mount_rclone/Tdrive_Plex_Backup". The dockers won't notice and your API quota is reset again.
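As a sketch of what two such remotes could look like in rclone.conf, the helper below prints one stanza per service account. `print_remote`, the remote names, the backup file name and `TEAM_DRIVE_ID` are all placeholders for illustration; `type`, `scope`, `service_account_file` and `team_drive` are options of rclone's Google Drive backend. An encrypted setup would normally add a crypt remote on top of each of these, which is left out here.

```shell
#!/bin/bash
# print_remote NAME SA_FILE TEAM_DRIVE_ID: emit an rclone.conf stanza for a
# Google Drive remote that authenticates with a service account file.
print_remote() {
  local name=$1 sa_file=$2 team_drive_id=$3
  cat <<EOF
[$name]
type = drive
scope = drive
service_account_file = $sa_file
team_drive = $team_drive_id

EOF
}

sa_dir=/mnt/user/appdata/rclone/service_accounts
# TEAM_DRIVE_ID is a placeholder; the backup file name is hypothetical.
print_remote tdrive_plex        "$sa_dir/sa_tdrive_plex.json"        TEAM_DRIVE_ID
print_remote tdrive_plex_backup "$sa_dir/sa_tdrive_plex_backup.json" TEAM_DRIVE_ID
```

Both remotes point at the same Team Drive, so only the credentials differ between the normal and the backup mount.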

-

3 hours ago, francrouge said:

Hi guys i need help with Sqlite3: Sleeping for 200ms to retry busy DB

I currently got an ssd just for the appdata.

The folder app data seem to be creating a share with the folder.

Any idea how to fix this

I already did a optimse library etc.

Sqlite3: Sleeping for 200ms to retry busy DB. [line repeated many times]

Sqlite3: Sleeping for 200ms to retry Critical: libusb_init failed

Man, I had the same and spent a week on it, but found the solution for my situation yesterday.

Turns out it will do this when you hit the api limit/download quota on your Google drive mount which you use for your Plex.

I was rebuilding libraries, so it can happen, but this was too often for me.

Turned out Plex Server had the task under Settings > Libraries for chapter previews enabled on both when adding files and during maintenance. This will make Plex go through every video and create chapter thumbnails.

Once I disabled that the problem was solved and Plex became responsive again.
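
If you want to see how often the error shows up before and after changing that setting, a small sketch like this can count the lines in a log file. The function name is made up and the log path depends on your own Plex docker, so treat both as assumptions.

```shell
#!/bin/bash
# Hypothetical helper: count log lines that contain the busy-DB message.
# grep -c counts matching lines (not individual occurrences) and prints 0
# when nothing matches; "|| true" hides grep's non-zero exit in that case.
count_busy_db() {
  local log_file="$1"
  grep -c "Sleeping for 200ms to retry busy DB" "$log_file" || true
}

# Usage (the path is an assumption, adjust it to your own appdata):
# count_busy_db "/path/to/Plex Media Server.log"
```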

-

On 7/25/2022 at 10:24 AM, DZMM said:

Is anyone who is using rotating service accounts getting slow upload speeds? Mine have dropped to around 100 KiB/s even if I rotate accounts....

Still having issues? Because for me it's working fine using your upload script. I have 80-100 rotating SAs though.
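
For anyone wondering what the rotation comes down to, here is a minimal sketch. It is not the actual upload script: the function name, the directory and the `sa_N.json` naming are assumptions for illustration. `--drive-service-account-file` is the rclone flag that selects the service account.

```shell
#!/bin/bash
# Hypothetical helper: return the next service account number,
# wrapping back to 1 after the last one.
next_sa() {
  local current="$1" total="$2"
  echo $(( current % total + 1 ))
}

# Assumed layout: sa_1.json ... sa_100.json in a made-up directory.
sa_dir="/path/to/service_accounts"
counter=100
counter="$(next_sa "$counter" 100)"
echo "next upload would use: --drive-service-account-file=${sa_dir}/sa_${counter}.json"
```

In a real script the counter would be stored in a file between runs so each upload picks up where the last one stopped.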

On 8/14/2022 at 11:00 AM, Sildenafil said:

I can't stop the array because of the script, end up in a loop trying to unmount /mnt/user.

I use the mount script without mergerfs.

Some help on how to set the script to execute at the stop of the array? The one on github creates this problem for me.

I've never been able to stop the array once I started using rclone mounts. I think the constant connections are preventing it. You could try shutting down your dockers first and make sure there are no file transfers going on. But I just reboot if I need the array down.
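
If you want to try it anyway, a sketch like the one below lists what is still mounted by rclone so you can see what might be blocking the stop. It assumes rclone mounts show up with the filesystem type `fuse.rclone` in /proc/mounts, and it only prints the lazy unmount commands instead of running them. The function name is made up.

```shell
#!/bin/bash
# Hypothetical helper: print the mount points of all rclone FUSE mounts
# found in a mounts table (defaults to /proc/mounts).
# Assumption: rclone mounts are listed with filesystem type "fuse.rclone".
list_rclone_mounts() {
  local mounts_file="${1:-/proc/mounts}"
  awk '$3 == "fuse.rclone" { print $2 }' "$mounts_file"
}

# Only print what would be unmounted; run the commands yourself once the
# dockers are stopped and no transfers are going on.
if [ -r /proc/mounts ]; then
  while read -r mount_point; do
    echo "fusermount -uz ${mount_point}"
  done < <(list_rclone_mounts)
fi
```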

On 8/17/2022 at 11:43 AM, francrouge said:

Hi guys

I was wondering if any of you map their download docker to be directly on gdrive and seed from it

Question:

#1 Do you crypt the files?

#2 Do you use hardlinks?

Any tutorial or additional info, maybe on how to use it?

thx

I do use direct mounts for certain processes, like my Nextcloud photo backups, which go straight into the Team Drive (I would not recommend using the personal Google Drive anymore, only Team Drives). I always use encrypted mounts, but depending on what you are storing you might not mind that it's unencrypted.

I use the normal mounting commands, although I currently don't use the caching ability that Rclone offers.

But for downloading dockers and such I think you need to check whether the download client is downloading in increments and uploading those or first storing them locally and then sending the whole file to the Gdrive/Team Drive. If it's storing in small increments directly on your mount I suspect it could be a problem for API hits. And I don't like the risk of corruption of files this could potentially offer.

Seeding directly from your Google Drive/Tdrive is for sure going to cause problems with your API hits. Too many small downloads will burn through your API quota. If you want to experiment with that, I suggest you use a separate service account and create a mount specifically for that docker/purpose to test. I have separate rclone mounts for some dockers or combinations of dockers that can create a lot of API hits, and keep them apart from my Plex mount so they don't interfere with each other.
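
As a sketch of what I mean by separate mounts: one rclone mount per purpose, each with its own service account file. The remote names, mount points and file paths below are made up, and the function only prints the commands so you can check them before running anything.

```shell
#!/bin/bash
# Hypothetical helper: build (print) an rclone mount command that uses a
# dedicated service account, so its API hits are counted separately.
build_mount_cmd() {
  local remote="$1" mount_point="$2" sa_file="$3"
  printf 'rclone mount %s: %s --allow-other --drive-service-account-file=%s --daemon\n' \
    "$remote" "$mount_point" "$sa_file"
}

# Assumed names: one mount for Plex, one test mount for the seeding docker.
build_mount_cmd "tdrive_plex" "/mnt/user/mount_rclone/Tdrive_Plex" "/path/to/sa_plex.json"
build_mount_cmd "tdrive_seed" "/mnt/user/mount_rclone/Tdrive_Seed" "/path/to/sa_seed.json"
```

If the seeding test runs into a ban, only that mount is affected and Plex keeps working on its own mount.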

-

On 6/21/2022 at 4:53 AM, Bolagnaise said:

I’ve been experiencing permission issues since upgrading to 6.10 as well and I think I finally fixed all the issues.

RCLONE PERMISSION ISSUES:

Fix 1: prior to mounting the rclone folder using user scripts, run ‘docker safe new permissions’ from settings for all your folders. Then mount the rclone folders using the script.

Fix 2: if that doesn't fix your issues, in the mount script add the following BOLDED sections to the create rclone mount section of the script, or add them to the extra parameters section. This will mount rclone folders as user ROOT with a UMASK of 000.

Alternatively you could mount it as USER:NOBODY with the uid:99 gid:100

DOCKER CONTAINER PERMISSIONS ISSUES FIX (SONARR/RADARR/PLEX)

Fix 1: Change PUID and PGID to user ROOT 0:0 and add an environment variable for UMASK of 000 (NUCLEAR STRIKE OPTION)

Fix 2: Maintain PUID and PGID to 99:100 as USER:NOBODY and using the user scripts plugin, update the permissions of the docker containers permissions using the following script, change the /mnt/ path directory to reflect your Docker path setup. Rerun for each containers path after changing it.

#!/bin/bash
# Fix ownership and permissions for one container's appdata path
for dir in "/mnt/cache/appdata/Sonarr/"
do
  echo "$dir"
  chmod -R ug+rw,ug+X,o-rwx "$dir"
  chown -R nobody:users "$dir"
done

Thanks for this. I have been experimenting with adding the 99/100 lines to my mount scripts. That didn't seem to work. I removed them and added the umask. That alone was not enough either. So I think the safe permissions run did the trick. I have now added both the umask and 99/100 to my mount scripts and it seems to work. Hopefully it stays like this.

I wonder if using 0/0 (so you mount as root) would actually cause problems, because you might not have access to those files and folders when you are not using a root account.

Anyways, I'm glad it seems to be solved now.
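
To check whether the folders are flipping back to root, a sketch like this lists everything under a path that is not owned by the expected user and group. The function name is made up; 99 and 100 are the nobody/users IDs mentioned above, and the example path is just the one from the quoted script.

```shell
#!/bin/bash
# Hypothetical helper: print every file or folder under a directory whose
# owner or group differs from the expected one. find's -user and -group
# tests accept numeric IDs as well as names.
find_wrong_owner() {
  local dir="$1" uid="$2" gid="$3"
  find "$dir" \( ! -user "$uid" -o ! -group "$gid" \) -print
}

# Usage (no output means everything is owned by 99:100):
# find_wrong_owner "/mnt/cache/appdata/Sonarr/" 99 100
```

Running it right after mounting and again after an import fails should show which process is creating the root-owned folders.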

-

4 hours ago, Bolagnaise said:

Adding the below to your mount script should resolve the permissions issue if you're updating to 6.10 RC7. I will test in approx 12 hours if this fixes the permissions issue with docker containers and plex.

What is the reason for you to use uid 98 and gid 99 instead of the uid 99 and gid 99?

-

3 hours ago, endiz said:

Thanks squid. I do have a display that supports HDR & DV, and it looks great. But if i'm watching on a non-hdr screen remotely (e.g. cell phone), it says it's transcoding but the tone mapping is off with the strange green tint mentioned above.

Anyways, i think it's a plex issue so i posted in their forums. I'll post back if i come across a solution.

I think it's two separate issues, because I have both issues.

The green color you've shown I also had. When testing the file directly on my laptop it also showed the green color. So not a Plex issue.

And Plex does indeed have confirmed issues with HDR tone mapping, especially on latest-gen Intel GPUs, which have not been solved yet. If your GPU is not the issue, make sure that hardware transcoding is actually working by checking your Plex dashboard while watching a transcoded video.

-

After updating to 6.10.0-rc1 my 11th gen (11500) HW transcoding is not working anymore. Removed the modprobe file, also didn't work. The driver does seem to be loaded fine without modprobe file. Maybe it has to do with the Intel TOP plugin that needs an update, I have no idea. But just be aware that HW transcoding for me is currently fully broken.

Before it would HW transcode even in HDR on plenty of movies (not all as we discussed earlier).

Guide: How To Use Rclone To Mount Cloud Drives And Play Files

in Plugins and Apps

Posted

What team drive limitations are you talking about? If your seedbox can use rclone, it can mount directly to Google Drive. But a seedbox also indicates torrent seeding, and that's generally a problem with Google Drive because of the API hits and the resulting temporary bans.

If you just want to use your seedbox to download and move the files to your Google drive that's entirely possible and is what DZMM seems to be doing.