-

@thenexus Yes, you can use rtl_tcp to expose your RTL-SDR device on a port that any other rtl_tcp client can then consume/control. gr-osmosdr, the underlying library ShinySDR uses for controlling receivers, supports RTL-TCP. To add an RTL-TCP device to the ShinySDR config you would add a device like this:

    config.devices.add(u'osmo', OsmoSDRDevice('rtl_tcp=HOST_IP_AND_PORT'))

I have a docker-compose project that uses rtl_tcp and ShinySDR that you can use as a reference for setting this up, assuming you want to dockerize the rtl_tcp device as well: https://github.com/FoxxMD/rtl-sdr-icecast-docker

Instead of using the compose file, you would set up the rtl_tcp container on the machine you have in the "main room" and then configure ShinySDR on unraid to use the above config (or the reference config from the project).
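If it helps, here is a rough sketch of how the HOST_IP_AND_PORT part of that device string can be put together. The helper name and the example address are made up for illustration; 1234 is the port rtl_tcp listens on by default, so change it if your container publishes a different one.

```python
def rtl_tcp_device_args(host, port=1234):
    """Build a gr-osmosdr device string for a remote rtl_tcp server.

    Hypothetical helper, not part of ShinySDR or gr-osmosdr.
    1234 is rtl_tcp's default listen port.
    """
    if not host:
        raise ValueError("host must not be empty")
    if not 0 < port < 65536:
        raise ValueError("port must be between 1 and 65535")
    return "rtl_tcp={}:{}".format(host, port)


# 192.168.1.50 is a placeholder for the "main room" machine running rtl_tcp.
device_args = rtl_tcp_device_args("192.168.1.50")
print(device_args)
```

The resulting string is what would go inside OsmoSDRDevice(...) in the ShinySDR config.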

-

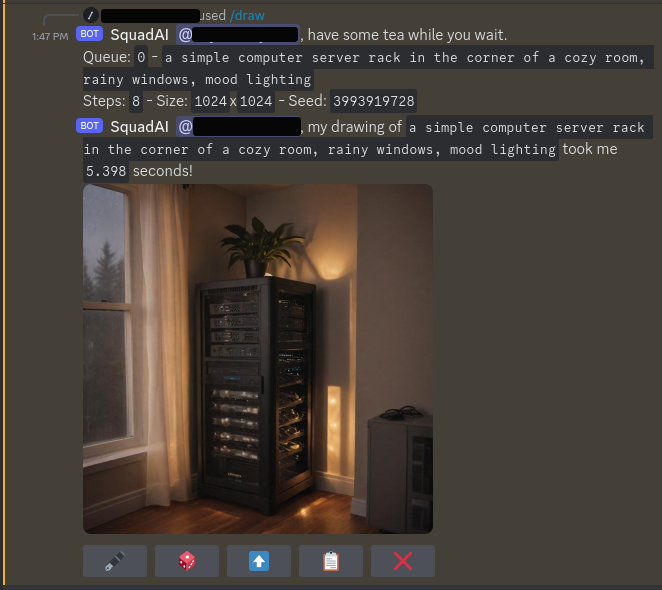

Application Name: aiyabot
Application Site: https://github.com/Kilvoctu/aiyabot
Github Repo: https://github.com/Kilvoctu/aiyabot
Docker Hub: https://github.com/Kilvoctu/aiyabot/pkgs/container/aiyabot
Template Repo: https://github.com/FoxxMD/unraid-docker-templates

Overview

aiyabot is a self-hosted discord bot for interacting with a Stable Diffusion API to create AI generated images. It is compatible with AUTOMATIC1111's Stable Diffusion web UI or SD.Next APIs and provides these features through Discord commands:

* text2image
* image2image
* prompt from image
* generate prompts from text
* upscale image
* image identification (metadata query)

Generation settings are also configurable globally or per channel:

* Model to generate with
* Width/Height
* Extra nets (lora, hypernet)
* Prompt/Negative prompt
* Batch generation settings
* highres fix/face restore/VAE strength/guidance(cfg)/clip skip/styles
* mark as spoiler

Be aware that aiyabot will help you craft valid generation commands but it does not magically "make good images" -- the bot will only generate with the settings you specifically configure. You will need a basic understanding of Stable Diffusion in order to achieve good results.

Requirements

* Discord Bot - You need to create a Discord bot in a Server you have appropriate permissions in and have a token ready to use with Aiyabot. This is covered in Setting up a Discord bot in the project wiki.
* Compatible Stable Diffusion instance - The bot needs access to an SD API. I recommend using stable-diffusion from CA Apps with (04 SD.Next) or (02 Automatic1111).

Usage

Map the SD container's API port:

* Container Port 7860 -> Host Port 7860

Fill in the CA app template with your discord bot token and the URL to the SD API, then start the app to get the bot running. All commands and configuration are done through discord slash commands in the server the bot is running on.

Generating Prompts

Prompt generation (using the /generate command) is disabled by default because it requires downloading and installing an ML model into the container, which can be a large download and take a long time to process. If you wish to use this command then change the CA template variable USE_GENERATE (Download and install optional prompt generation command) from false to true.

-

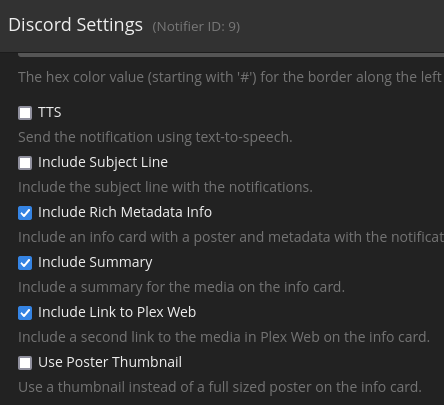

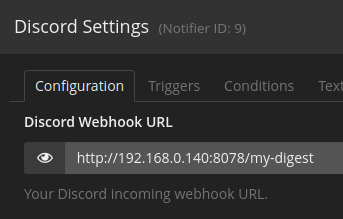

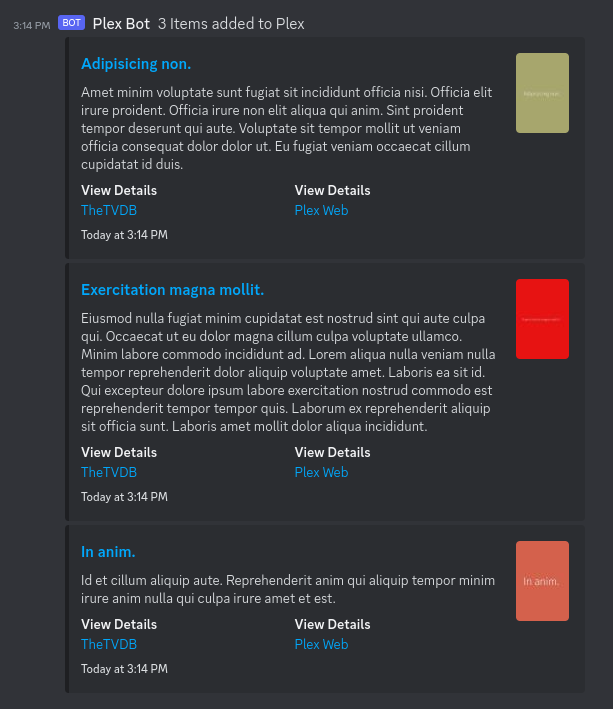

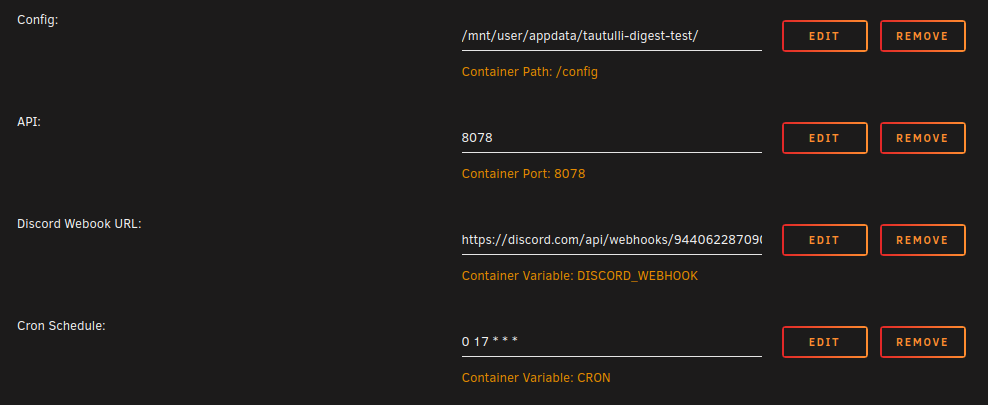

Application Name: tautulli-notification-digest
Application Site: https://github.com/FoxxMD/tautulli-notification-digest
Github Repo: https://github.com/FoxxMD/tautulli-notification-digest
Docker Hub: https://hub.docker.com/r/foxxmd/tautulli-notification-digest
Template Repo: https://github.com/FoxxMD/unraid-docker-templates

Overview

tautulli-notification-digest (TND) creates a "digest" (timed summary) of notifications of "Recently Added" events for discord using Tautulli's Discord notification agent.

Tautulli already provides an email "newsletter" that compiles triggered events (media added) from Plex and then sends it as one email at a set time. This same functionality does not exist for notifications. It is often requested for discord and there are even some existing guides, but they are quite involved. This app provides a drop-in solution for timed notifications that compiles all of your "Recently Added" Tautulli events into one notification.

Usage

These instructions are mostly the same as the Setup section in the project readme but are tailored for unraid.

Create a Discord Webhook

If you don't already have a webhook then create one for the channel you want to post digests to. If you have an existing one then we will reuse it later.

Create/Modify a Tautulli Discord Notification Agent

Create a notification agent for Tautulli for use with Discord. If you already have one you can modify it. Settings for the agent must be set like this:

Configuration

* Discord Webhook URL: https://YOUR_UNRAID_IP:8078/my-digest (this assumes you use the unraid app template default settings)
* ✅ Include Rich Metadata Info
* ✅ Include Summary
* ✅ Include Link to Plex Web (optional)
* ❎ Use Poster Thumbnail

Triggers

* ✅ Recently Added

Update the TND unraid App Template

* DISCORD_WEBHOOK - This is the actual Discord URL you created earlier. TND will post the digest to this URL at the specified time.
* CRON - A cron expression that determines when TND posts the digest. Use a site like crontab.guru if you need help setting this up.

You're Done

That's it! Your Recently Added media will now be compiled by TND into a digest that will be posted once a day at 5pm. Check the docker logs for activity and notification status.

Running Pending Notifications Any Time

Pending notifications can be run before the scheduled time by sending a POST request to http://SERVER_IP:8078/api/my-digest

To do this with a browser use https://www.yourjs.com/bookmarklet

* In the Bookmarklet Javascript box enter: fetch('http://MY_IP:8078/api/my-digest', {method: 'POST'}).then();
* Convert to Data URL
* Drag and drop the converted data url into your bookmarks

Then the bookmark can be clicked to trigger pending notifications to run.

Further Configuration

The unraid default template and the instructions above assume ENV config, which generates a default URL slug (my-digest) for you. TND can handle multiple notification agents using different slugs (which creates multiple digests). Digests can be further configured using a yaml config to customize embed formats and collapse thresholds.
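If you would rather trigger pending notifications from a script than a bookmarklet, something like this should work. It is only a sketch: the function names are made up, the address is a placeholder, and it assumes the default port (8078) and slug (my-digest) from the unraid template. I have not documented what TND sends back, so it only returns the HTTP status.

```python
import urllib.request


def build_trigger_request(server_ip, port=8078, slug="my-digest"):
    """Build the POST request for TND's pending-notifications endpoint.

    Hypothetical helper; port and slug default to the unraid template values.
    """
    url = "http://{}:{}/api/{}".format(server_ip, port, slug)
    # method="POST" is set explicitly; the body is intentionally empty.
    return urllib.request.Request(url, data=b"", method="POST")


def trigger_pending(server_ip, port=8078, slug="my-digest", timeout=10.0):
    """Send the request and return the HTTP status code."""
    request = build_trigger_request(server_ip, port, slug)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status


# 192.168.1.10 is a placeholder for SERVER_IP. Nothing is sent here;
# call trigger_pending("192.168.1.10") to actually run the digest.
print(build_trigger_request("192.168.1.10").full_url)
```

Running trigger_pending from cron or a user script would give you a second, independent schedule on top of the CRON template variable.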

-

Can you try modifying this line in 05.sh to include curl?

    conda install -c conda-forge git curl python=3.11 pip gxx libcurand --solver=libmamba -y

-

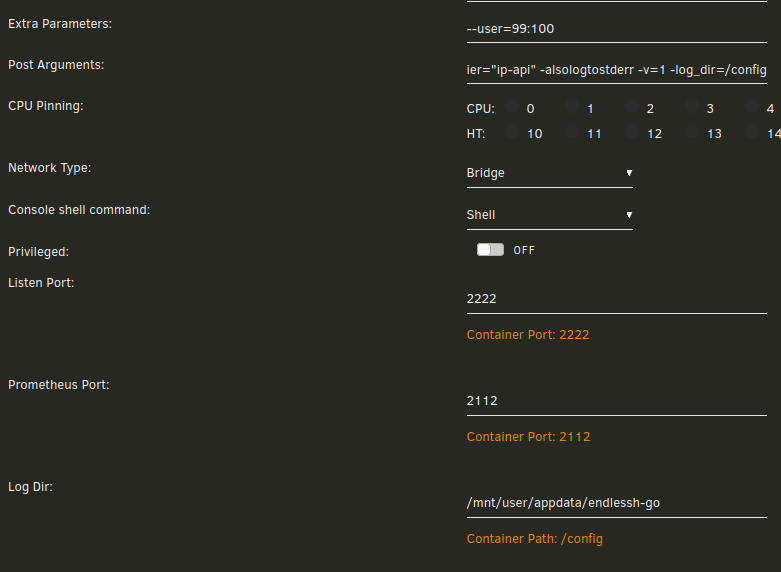

Application Name: endlessh-go
Application Site: https://github.com/shizunge/endlessh-go
Github Repo: https://github.com/shizunge/endlessh-go
Docker Hub: https://hub.docker.com/r/shizunge/endlessh-go
Template Repo: https://github.com/FoxxMD/unraid-docker-templates

Overview

Endlessh is an SSH tarpit that very slowly sends an endless, random SSH banner. It keeps SSH clients locked up for hours or even days at a time. The purpose is to put your real SSH server on another port and then let the script kiddies get stuck in this tarpit instead of bothering a real server.

Linuxserver.io provides the original endlessh on CA -- this is not that. This is a re-implementation of the original endlessh in golang with additional features, namely, translating IP addresses to Geohash and exporting Prometheus metrics which can be visualized with a Grafana dashboard.

Usage

The app requires no setup outside of what the template already provides. You do not need to use the exported metrics in order for the app to work for its primary function (SSH tarpit).

!!!!!!!! Be extremely careful when configuring port forwarding for this !!!!!!!!

* DO NOT forward port 22 directly to unraid. Instead, forward external port 22 to unraid on port 2222 (or whatever you configure for the container)
* Double check your unraid SSH settings under Management Access
* If you do not need SSH, make sure "Use SSH" is set to "No"
* If you do need it, make sure it is NOT the same port you are forwarding to unraid for Endlessh

Setting up Metrics

In order to use and visualize the exported metrics you will need to set up a Prometheus container and a Grafana container.

Prometheus

* Find in CA under "Prometheus" and install
* In /mnt/user/appdata/prometheus/etc create or edit prometheus.yml to include this text block:

    scrape_configs:
      - job_name: 'endlessh'
        scrape_interval: 60s
        static_configs:
          - targets: ['HOST_IP:2112']

* Replace HOST_IP with the IP of your unraid host machine.
* Restart the Prometheus container to start collecting metrics.

Grafana

* Find in CA under "Grafana" and install
* After you have gone through initial setup and logged in to Grafana:
* Open hamburger menu (top left) -> Connections -> Add new connection -> Prometheus
* Under Connection (Prometheus server URL) use your unraid host IP and the port Prometheus was configured with: http://UNRAID_IP:9090
* Save & Test
* Open hamburger menu -> Dashboards
* New -> Import
* Use ID 15156 -> Load
* Select a Prometheus data source -> use the prometheus data source you just created
* Import

You should now have a saved dashboard that visualizes your endlessh-go metrics. It may take some time for anything to populate as you need to wait for attackers to find your honeypot.

Logging

The container logs all output to the docker container logs by default. If you wish to also log to file, modify your container like so:

* In Post Arguments replace -logtostderr with -alsologtostderr
* In Post Arguments append this to the end: -log_dir=/config
* In Extra Parameters add this: --user=99:100
* Add a new Path variable: Container Path: /config, Host Path: /mnt/user/appdata/endlessh-go

Your settings will look like this after all modifications are done:

-

@Pentacore and you're running this on unraid? What version? Do you have the container in a custom network or anything?

Can you try this while using Automatic1111:

1. Shell into the container (Console from the unraid docker page on the container)
2. Run apt install ca-certificates
3. Run update-ca-certificates
4. Run nano entry.sh
4a. Above the line starting with case "$WEBUI_VERSION" add this: export SSL_CERT_FILE=/etc/ssl/certs/ca-certificates.crt
4b. Hit Control + o, then Enter to save the file
5. Restart the container

See if this fixes anything for you.

EDIT: Many other people say that if you are using a network-wide monitoring appliance, like zscaler or maybe something on your router, its certificate may not be present in the container.

https://stackoverflow.com/questions/71798420/why-i-get-ssl-errors-while-installing-packages-on-dockeron-mac
https://stackoverflow.com/a/76954389
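To check whether the change took effect, you could run something like this with the Python inside the container. It is just a diagnostic sketch (the function name is made up) that reports what SSL_CERT_FILE is set to and which CA file Python resolves; it does not prove a particular proxy certificate is in the bundle.

```python
import os
import ssl


def describe_ca_setup(environ=None):
    """Report the SSL_CERT_FILE override and the CA paths Python resolves.

    Hypothetical diagnostic helper; pass a dict to inspect a fake environment.
    """
    env = os.environ if environ is None else environ
    override = env.get("SSL_CERT_FILE")
    # cafile/capath are None when the resolved path does not exist on disk.
    paths = ssl.get_default_verify_paths()
    return {
        "ssl_cert_file_env": override,
        "override_exists": bool(override) and os.path.isfile(override),
        "resolved_cafile": paths.cafile,
        "resolved_capath": paths.capath,
    }


for key, value in describe_ca_setup().items():
    print("{}: {}".format(key, value))
```

If ssl_cert_file_env is empty after restarting the container, the export line in entry.sh is probably not being reached.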

-

Yes it is. You can change this by updating the version in 06.sh line 15:

    conda install -c conda-forge git python=3.10 pip --solver=libmamba -y

-

This one is squarely on Fooocus. It looks like they updated requirements_versions.txt two days ago and added onnxruntime==1.16.3, which conflicts with the existing numpy==1.23.5 in the same file. I would open an issue on their repo, or you can try manually editing requirements_versions.txt (in the 06-Fooocus folder inside your persisted folder) to specify the correct numpy version and then restart the container.
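If you would rather script the edit than do it by hand, a sketch like this rewrites the pin in the file's text. The function name is made up, and X.Y.Z is a placeholder: I am not naming a version here, so use whichever numpy version pip reports as compatible with onnxruntime.

```python
def pin_requirement(requirements_text, package, version):
    """Return requirements text with `package` pinned to `version`.

    Hypothetical helper. Only handles simple "name==version" lines,
    which is the format the two entries mentioned above use.
    """
    prefix = package.lower() + "=="
    pinned = "{}=={}".format(package, version)
    lines = requirements_text.splitlines()
    replaced = False
    for index, line in enumerate(lines):
        if line.strip().lower().startswith(prefix):
            lines[index] = pinned
            replaced = True
    if not replaced:
        # Package was not listed at all, so add the pin at the end.
        lines.append(pinned)
    return "\n".join(lines) + "\n"


# Sample text using the two pins mentioned above; X.Y.Z is a placeholder.
sample = "numpy==1.23.5\nonnxruntime==1.16.3\n"
print(pin_requirement(sample, "numpy", "X.Y.Z"))
```

To apply it for real you would read requirements_versions.txt, pass its contents through pin_requirement, and write the result back before restarting the container.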

-

@BigD ah yes, that happens because s6 is the init process for the container and using init: true injects tini, so you end up with the container init'ing tini init'ing s6! This is likely happening because some process started by Fooocus is not respecting shutdown signals or is frozen (or it may be the entry process for the container by holaf!). You can adjust how long s6 waits for processes to finish gracefully by setting an ENV that customizes s6 behavior. I would look at S6_KILL_FINISH_MAXTIME and S6_KILL_GRACETIME.

-

What is your WEBUI_VERSION?

-

You don't want to run it on CPU, and integrated GPUs are much too weak to use. Stable Diffusion requires a fairly recent dedicated graphics card with a decent amount of VRAM (8GB+ recommended) to run at reasonable speeds. It can run on CPU but you'll be waiting like an hour to generate one image.

-

@wtfreely should be fixed now

-

@Joly0 @BigD I forked holaf's repository, available at foxxmd/stable-diffusion, and have been improving on it instead of using the repo from my last post. There are individual pull requests in for all my improvements on his repository, BUT the main branch on my repo has everything combined as well and is where I'll be working on things until/if holaf merges my PRs.

My combined main branch is also available as a docker image at foxxmd/stable-diffusion:latest on dockerhub and ghcr.io/foxxmd/stable-diffusion:latest

I have tested with SD.Next only but everything else should also work.

To migrate from holaf's image to mine on unraid, edit (or create) the stable-diffusion template:

* Repository => foxxmd/stable-diffusion:latest
* Edit Stable-Diffusion UI Path: Container Path => /config
* Remove Outputs (these will still be generated at /mnt/user/appdata/stable-diffusion/outputs)
* Add Variable: Name/Key => PUID, Value => 99
* Add Variable: Name/Key => PGID, Value => 100

_______

Changes (as of this post):

* Switched to Linuxserver.io ubuntu base image
* Installed missing git dependency
* Fixed SD.Next memory leak
* For SD.Next and automatic1111:
  * Packages (venv) are only re-installed if your container is out-of-date with the upstream git repository -- this reduces startup time after first install by like 90%
  * Packages can be forced to be reinstalled by setting the environmental variable CLEAN_ENV=true on your docker container (Variable in unraid template)

______

If you have issues you must post your problem with the WEBUI_VERSION you are using.

-

Yes I'll make PRs. The memory leak fix and lsio rework are not dependent on each other so they'll be separate.

-

@Joly0 @BigD I got tired of waiting for the code so I just reverse engineered it 😄 https://github.com/FoxxMD/stable-diffusion-multi

The master branch is, AFAIK, the same as the current latest tag for holaf's image. I have not published an image on dockerhub for this but you can use it to build your own locally.

The lsio branch is my rework of holaf's code to work on Linuxserver.io's ubuntu base image. This is published on dockerhub at foxxmd/stable-diffusion:lsio. It includes fixes for the SD.Next memory leak and I plan on making more improvements next week.

If anyone wants to migrate from holaf's to this one, make sure you check the migration steps as the folder structure is slightly different. Also, I haven't thoroughly checked that everything actually works, just 04-SD-Next on my dev machine (no gpu for use here yet). I will better test both master/lsio next week when I get around to the improvements as well.

EDIT: see this post below for updated image, repository, and migration steps

___

I'm also happy to add @Holaf as an owner on my github repository if that makes it easier for them to contribute code. Or happy to fold my changes into their repository, when/if they make it available. I don't want to fragment the community but I desperately needed to make these changes and it's been almost a month waiting for code at this point.