BrianAz


Everything posted by BrianAz

-

Same. Any ideas? I'm not sure why it happens, but it does not seem to impact my Plex server (that I've noticed)

-

I am interested in running two dual-parity protected arrays in a single chassis w/ Unraid on bare metal. I have a smaller array for testing and data that would be a minor headache to replace (6 data drives + 2 parity). I also have my "Production" Unraid array that houses my primary data that would be a huge issue if I lost (18 data drives and growing + 2 parity & full/daily offsite backup). My chassis is 36 bays and I currently run Unraid on top of ESXi, passing through the USB keys along with HBAs and NVMe cache drives to get as close to bare metal as I can. Edit: After ZFS lands, surely multiple Unraid parity arrays will be next…?

-

I'm having this issue as well... I have two Ubuntu 16.04 servers and they both will throw an error after a while on my most used folders. I have the mover enabled (FYI), but haven't tied any of this to it yet. This had not been a problem until recently. I guess I'll try to use 1.0 and see if it fixes it. Very frustrating.

-

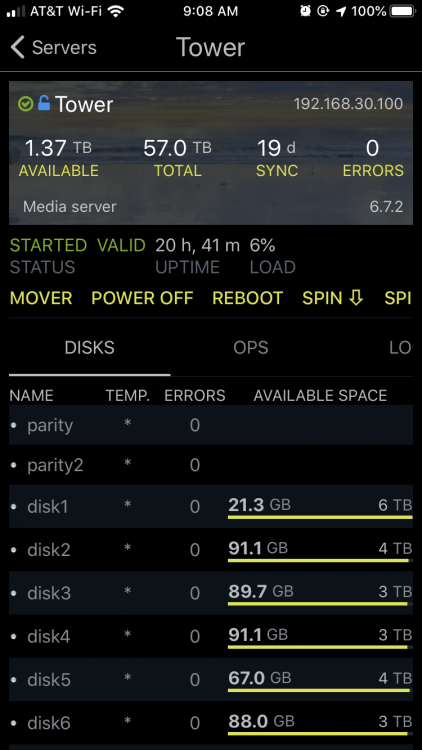

Thanks for the app. I am trying to get my NUT stats to display. Am I missing them? My setup: iOS 13, ControlR v4.10.0, NUT plug-in 02-03-19, unRaid 6.7.2. Do I need the ControlR plugin for NUT stats to work? Thanks

-

unRAID OS version 6.3.5 Stable Release Available

BrianAz replied to limetech's topic in Announcements

Just a heads up in case anyone else encounters this... I upgraded to 6.3.5 today and all of a sudden my unRAID CPU (Celeron G1610) usage shot up to 100% and the system load skyrocketed as well. Upon investigating, I saw that my smbd process was consuming all the CPU. Thankfully, I experienced a very similar issue recently on my FreeNAS box. It seems that Ubuntu 16.04 VMs (mine are on ESXi) default to SMB v1.0 when mounting SMB shares, which has problems connecting to the FreeNAS/unRAID smbd and causes high CPU/load on the NAS. As with the FreeNAS issue, I specified version 2.1 in my /etc/fstab mounts and as soon as I re-mounted, my CPU/load on unRAID dropped to normal levels. Hope this helps someone. I have not seen any negative results from specifying version 2.1, but welcome any discussion as to why this is happening. Thanks.
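For anyone who wants to check their own clients, here is a rough Python sketch that scans fstab-style text for cifs entries that do not pin an SMB version with the `vers=` mount option. The share names, mount points and credentials path in the sample are made up for illustration, and the function name is my own; treat it as a starting point, not a tested tool.

```python
def cifs_mounts_without_version(fstab_text):
    """Return (share, mountpoint) for each cifs entry lacking a vers= option."""
    missing = []
    for line in fstab_text.splitlines():
        line = line.strip()
        # Skip blank lines and comments.
        if not line or line.startswith("#"):
            continue
        fields = line.split()
        # An fstab entry needs at least: device, mount point, type, options.
        if len(fields) < 4:
            continue
        share, mountpoint, fstype, options = fields[:4]
        if fstype != "cifs":
            continue
        if not any(opt.startswith("vers=") for opt in options.split(",")):
            missing.append((share, mountpoint))
    return missing


# Hypothetical sample entries; replace with the contents of your own /etc/fstab.
SAMPLE = """\
# SMB shares mounted from the NAS
//tower/media   /mnt/media   cifs  credentials=/root/.smbcred,vers=2.1  0  0
//tower/backup  /mnt/backup  cifs  credentials=/root/.smbcred           0  0
"""

if __name__ == "__main__":
    for share, mountpoint in cifs_mounts_without_version(SAMPLE):
        print(f"{share} on {mountpoint}: no vers= option set")
```

To run it against a real client, read `/etc/fstab` into a string and pass that in instead of `SAMPLE`. Any entry it reports is relying on the client's default SMB version, which is what bit me here.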

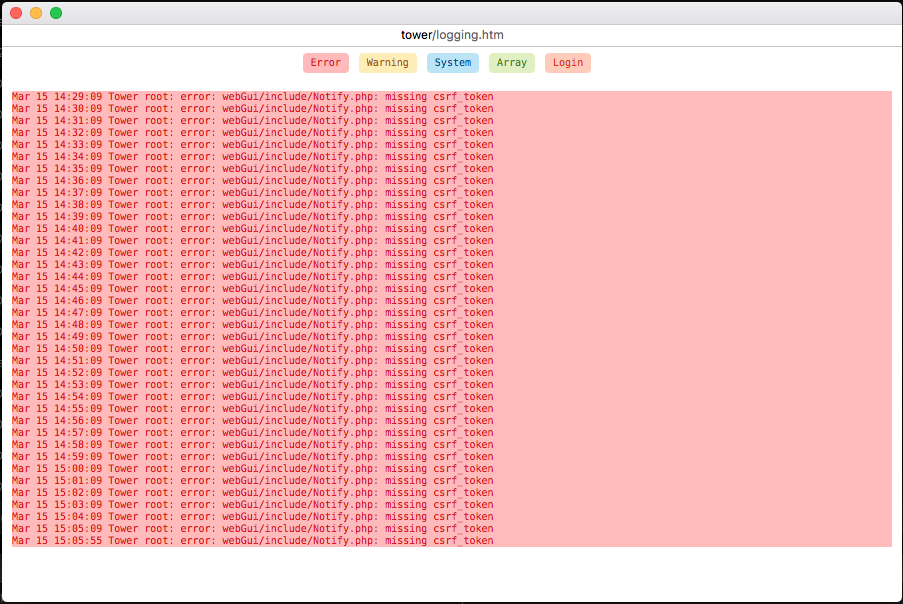

Hi - when you do the update, could you also look into this error? Since upgrading (I think it was to 6.3) we are seeing these errors in our logs every minute (MacOS Margarita) or sporadically (MargaritaToGo). I'm not sure if it's something you can fix or if it's on the unRAID side. Related thread: Reply to Error in log every minute. Thanks

-

Looks like the issue I experienced due to Margarita... Are you running that on your Mac or phone? See this other thread:

-

Love the new forum software! Looking forward to the dark theme. Thx

-

Thanks everyone! Been trying to find the cause of these messages for a bit now but could not figure out what the heck was running every so often that would cause these errors. Anyway, while I did not have Margarita installed on my Mac, I DID have MargaritaToGo on my iPhone. As soon as I opened it, errors immediately appeared in the log (bottom of screenshot). Appreciate the help tracking this down, will track this thread to see what the resolution is.

-

unRAID won't clear a new parity drive. There is no need for it to be clear since it will be completely overwritten by the parity sync. So if you want to test your new parity drive you may want to preclear it anyway.

I meant I would be preclearing the OLD parity drive to be used as a new data drive in the array (like you discuss below). Thanks for this, I'll be sure to keep a link to it for the future.

-

FYI, since v6.2 clear is done with the array online, but disk is not tested like when using preclear.

Thanks for that info too. I'll take advantage of that when I upgrade my parity drives again (and look to use them as data drives in the array). As a follow up to what you noted above, I proceeded with starting the array and unRAID picked up the preclear signature and prompted for only a quick format. Everything as you indicated. thx

-

That's normal, unRAID only checks for the preclear signature after starting the array.

Many thanks Johnnie! Been quite a while since I expanded my array. Wanted to make sure I wasn't giving the go-ahead for 9+ hours of downtime.

-

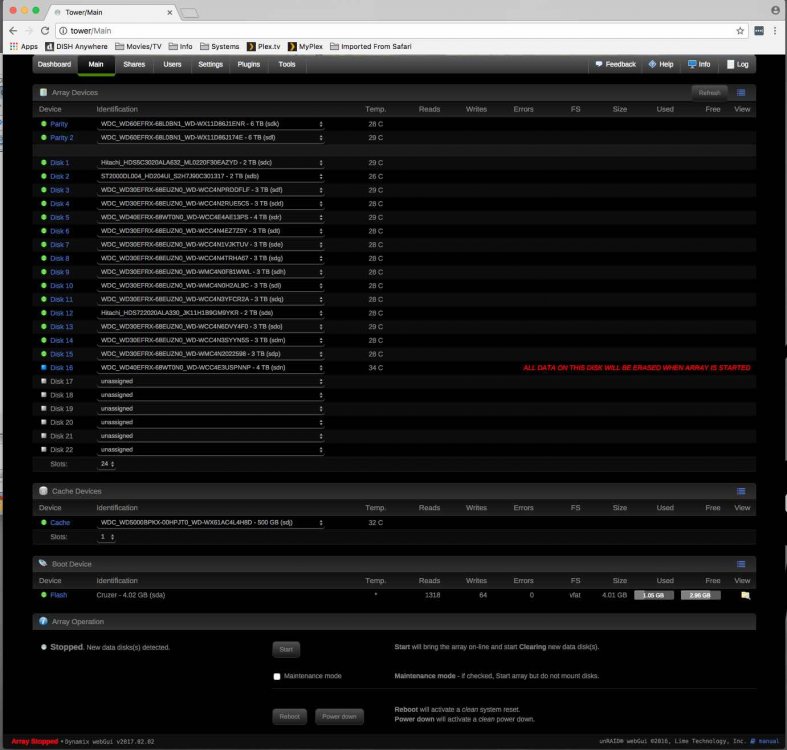

Hello - I'm attempting to preclear a 4TB drive to expand my array but after each successful preclear, the array appears to want to Clear it again before adding it. I've attached diagnostics, preclear reports and a screenshot of the "Start will bring the array on-line and start Clearing new data disks" message I'm concerned about. Please let me know what else might be helpful to troubleshoot. I'm hoping to avoid multiple hours of downtime by preclearing, and the language I'm seeing seems to tell me that's what unRAID intends to do once I click start. Am I misunderstanding the meaning or is something not right?

Thanks for your help, Brian

Attachments: tower-diagnostics-20170215-1213.zip, TOWER-preclear.disk-20170215-1211.zip

-

In Unassigned Devices, you can see an X icon next to the disk serial which can be used to clear the old session. In Tools > Preclear Disk, that icon exists too with the same functionality.

FYI for Mac users - I was experiencing this issue as well. I have a slot dedicated to preclearing disks for this and other NAS I have. In Safari, clicking the red X on the Preclear plugin page did nothing. Switched to Chrome and it acted just as you said it should. Not sure why it behaves differently in Safari. Side question: Can the plugin be modified to read the serial and, if it doesn't match the last preclear on record, behave as if no preclear has been done and present users with "Start Preclear"? Thanks for this awesome tool!

I'm a macOS user too, and I can't replicate the issue. Every time a user complains about it, I open Safari and it works straightforwardly. Can't patch what I can't replicate, I'm afraid. If you can invite me to a remote TeamViewer session, then I can investigate it using your setup. Thanks.

I just purchased two 6TB drives to replace my current parity drives which will then be moved into my array as data drives. I expect I'll be running at least 2-3 additional preclears during this process. Assuming I encounter the issue moving forward, I'll PM you to find a good time for TeamViewer. Appreciate the offer.

-

I first mentioned this twelve pages ago.

Whoops, sorry about that. I did read back several pages in the thread, but admittedly could have searched for "Safari". Thx for pointing out this has already been identified.

-

In Unassigned Devices, you can see an X icon next to the disk serial which can be used to clear the old session. In Tools > Preclear Disk, that icon exists too with the same functionality.

FYI for Mac users - I was experiencing this issue as well. I have a slot dedicated to preclearing disks for this and other NAS I have. In Safari, clicking the red X on the Preclear plugin page did nothing. Switched to Chrome and it acted just as you said it should. Not sure why it behaves differently in Safari. Side question: Can the plugin be modified to read the serial and, if it doesn't match the last preclear on record, behave as if no preclear has been done and present users with "Start Preclear"? Thanks for this awesome tool!

-

Do you mean that you physically move the drive without shutting down the server?

I stop the array, pull the drive, relocate the drive, restart the array. So when I hover over the red X, the tooltip says "Stop Preclear" (obviously there isn't one ongoing) and clicking it refreshes the page, but doesn't actually change anything that I can see - I've still no option to start a preclear. Pretty sure by now that I'm missing something obvious, but not sure what it is!

Is it mounted by Unassigned Devices?

I just got some help from gfjardim via PM - my problem was that I was missing the Unassigned Devices plugin - with it I was able to kick off my preclear as I wanted. I'd just like mostly to give thanks to gfjardim for his help here - there wasn't a bug, rather a misunderstanding on my part, and the support I got was the kind of top notch you'd not dare hope for even if you'd parted with a great deal of money. Really above and beyond the call of duty and I am very thankful. Outstanding

As a follow up to this, I've established that it does count as a very minor bug - the problem is that the 'stop pre clear' Red X button doesn't clear down the status of a device if you click it in Safari. It works just as intended in Chrome. Just submitting for clarity!

I can confirm this bug in Safari. I have a drive that has had some problems in another storage system recently so I put it in unRAID to run preclear on it. After one preclear, clicking the red X in Safari (I wanted to start another) did nothing. I finally saw this post and installed Chrome. Clicked the red X and I'm ready to run a preclear again on this drive. Thanks!

-

Click on Plugins -> Installed Plugins, then click the Preclear plugin icon. I don't have one going at the moment or I'd share a screenshot, but you should be able to click on the eyeball on the right to see progress.

-

Absolutely LOVE MargaritaToGo on my iPhone! Is Mover control/info a possible feature for the next release? In addition to being able to start the mover manually, it would be nice to get status showing any of the following: last run time; total GB moved during last run; next run time; avg move speed during last run; user shares with data on cache drive currently; list of files/folders moved during last run and/or currently on cache drive ahead of next move. Thanks for this app. Great addition to unRAID.

-

Curious... how long have you been on v6? Is this a recent upgrade? When I had my issues with RFS disks, I had zero issues running v5 but as soon as I booted v6 and the mover ran once or twice, it would lock up. So I reverted to v5 and everything was OK again for months. Upgraded to v6 and same issue. Eventually converting RFS -> XFS fixed it for me. If it's not a recent upgrade, I wonder why this started all of a sudden. Perhaps you crossed some unknown threshold on the RFS disks or something.. maybe they have filled up and that's triggering it? Also, maybe give this a try? http://lime-technology.com/forum/index.php?topic=48763.msg468269#msg468269

-

Not to mention, why? The server is going to be shut down anyway, so does it really matter if it's on the cache disk when it's shut down or on the array disk when it's shut down?

I wonder if OP thinks an SSD is like RAM and the info will disappear when electricity is gone.

Possibly worrying about lack of parity protection for data on cache drive? That's not a significant risk imo. I configure things to get the server down cleanly as quickly as possible once I've determined the power is likely to be out for a while. I think I give it a few minutes' delay to see if it's a momentary power blip and then begin shutdown w/o worrying if mover has run yet. I figure my data was sitting on that cache drive w/o parity protection for X hours before anyways, so what additional risk am I taking by shutting things off cleanly?