
Everything posted by Living Legend

-

[Support] Eurotimmy - RomM (ROM Manager) by zurdi15

Living Legend replied to Eurotimmy's topic in Docker Containers

I'll have to look into this. Outside of this and the "cool factor" of a front-end visual of what you have on your NAS, what are people using this to achieve?

[Support] Eurotimmy - RomM (ROM Manager) by zurdi15

Living Legend replied to Eurotimmy's topic in Docker Containers

This seems like a cool project. I loaded it up and ran a few scenarios without issue. I'm posting here to try to understand general use cases for this docker. It appears to be a sweet-looking front end for a group of files. Besides that, is there some general use or functionality I'm missing? Can this replace a scraper one might use when populating ROMs for something like RetroPie? Is there any connectivity to something like EmulatorJS that would actually let you play these in a web browser? Regardless of the use cases, it's a sweet-looking project. Well done. Curious to see how people intend to leverage this.

Brief summary: I am a fool and run my entire home network through a pfSense VM on unRAID. It has worked for years... until now. Lately, the unassigned device that houses the vdisk seems to unmount every few days. After a reset, the device comes back online and mounted. I looked through the syslog and found this at the end, related to that device:

Jan 9 06:55:19 unraid unassigned.devices: Mount drive command: /sbin/mount -t xfs -o rw,noatime,nodiratime,discard '/dev/sdk1' '/mnt/disks/diskUnassignedKingston480GB'
Jan 9 06:55:19 unraid kernel: XFS (sdk1): mounting with "discard" option, but the device does not support discard
Jan 9 06:55:19 unraid kernel: XFS (sdk1): Filesystem has duplicate UUID cc2bfbe6-93f9-49d1-ae40-1741ec6d5d72 - can't mount
Jan 9 06:55:19 unraid unassigned.devices: Mount of '/dev/sdk1' failed. Error message: mount: /mnt/disks/diskUnassignedKingston480GB: wrong fs type, bad option, bad superblock on /dev/sdk1, missing codepage or helper program, or other error.
Jan 9 06:55:19 unraid unassigned.devices: Partition 'diskUnassign' cannot be mounted.
Jan 9 06:55:19 unraid unassigned.devices: Don't spin down device '/dev/sdk'.

Is something triggering this, or do I have faulty hardware somewhere? I'm using a SuperMicro 2U server unit with hot-swappable drives. I certainly hope this is not an issue with the backplane and is instead something simple like the SATA cables.

unraid-diagnostics-20210109-0859.zip

-

unRAID Sporadically Freezes Or VMs Stopped

Living Legend replied to Living Legend's topic in General Support

If the computer completely freezes, does running a diagnostic after the reboot recover any useful information, or must the diagnostic be run before the freeze happens? I thought I had found the culprit in Shinobi, my PVR docker. I saw it spike to 50+ GB of RAM once, which caused my pfSense VM to lock up. But I've had it turned off for the past week, and it happened again today.

unRAID Sporadically Freezes Or VMs Stopped

Living Legend replied to Living Legend's topic in General Support

Geez, what is causing that in the middle of the night when nothing is in use? I have 64GB of RAM with just some Dockers and 1-2 VMs. Something must be happening to cause this unreasonable spike.

This has been happening once or twice a week for the past couple of weeks, and it takes one of two forms. In the first, I wake up in the morning, hear the server screaming, and find that my VMs have been shut down. I run pfSense (probably a bad idea) in a virtual machine, so this throws off my entire home network. I'm forced to connect a monitor to the server, and when I view the dashboard it shows CPU and memory maxed out. I have no choice but to reboot to bring pfSense back online. Occasionally, the server itself will just freeze, and I'll have to do the dreaded hard reboot. Here are two diagnostic files. I believe the more recent one was from a VM shutdown, where I connected a monitor and was able to save a diagnostic before rebooting: unraid-diagnostics-20200529-0805.zip and unraid-diagnostics-20200526-1745.zip. Any ideas?

-

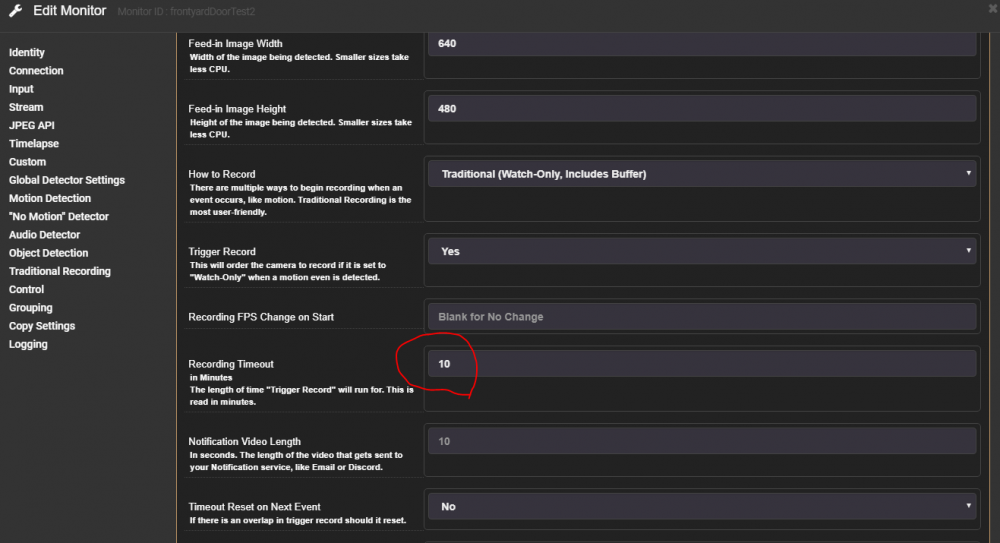

[Support] spaceinvaderone - Shinobi Pro

Living Legend replied to SpaceInvaderOne's topic in Docker Containers

Has anyone else had trouble setting up motion detection? I thought I had it figured out; maybe something to do with timestamps meant frames weren't being compared properly? It was a complete guess, but when I removed the timezone Docker mapping, my watch-only monitor with trigger-record finally triggered. Then I decided to shorten the 10-minute recording to 30 seconds, and now motion no longer triggers it. I went as drastic as I could: I set a specific zone by my front door, used only that zone, set Indifference to 1, and then wildly swung the door. It would not trigger the event. I've spent too many hours on this, so I'll have to shelve it for a while. I may try running the program natively rather than in Docker. As usual, I'm sure this is user error, but there's typically less potential for user error outside the Docker realm. EDIT: The setting in the attached image seems to be the culprit. If I don't set it to 10, it won't trigger; when I set it to 1, it doesn't trigger. Any ideas?

[Support] spaceinvaderone - Shinobi Pro

Living Legend replied to SpaceInvaderOne's topic in Docker Containers

Figured it out; that was silly. I had the "temp streams" folder mapped to the same location as the permanent recordings. I assume the temp streams folder gets cleared on reset, which was ultimately clearing my recordings folder. Now on to getting recording on motion working.

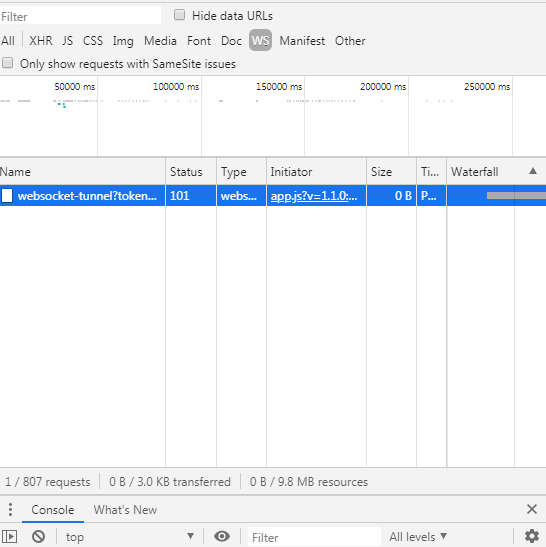

[Support] jasonbean - Apache Guacamole

Living Legend replied to Taddeusz's topic in Docker Containers

I have all settings in my Guacamole RDP connection blank except my IP address, port 3389, and authentication set to "any". Maybe I'm missing something.

[Support] jasonbean - Apache Guacamole

Living Legend replied to Taddeusz's topic in Docker Containers

I think I can rule out NGINX. I'm home now and just tested Guacamole locally without passing through NGINX. VNC yields very good results: not as good as the Windows RDP client, but very good, especially locally. RDP is still incredibly laggy; the initial screen takes multiple seconds to cascade in from top to bottom. Could it be a connection setting somewhere within Guacamole?

[Support] jasonbean - Apache Guacamole

Living Legend replied to Taddeusz's topic in Docker Containers

-

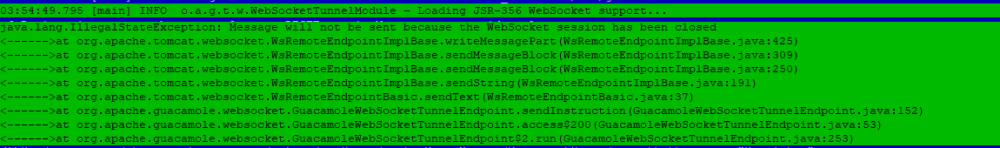

[Support] jasonbean - Apache Guacamole

Living Legend replied to Taddeusz's topic in Docker Containers

I am using Chrome. Here is a screenshot of what I can see in that log file. Sorry, I'm remote now and can only seem to access these files through the terminal, so I took a screenshot. That first message appears numerous times throughout the log; the messages below it appear only once.

[Support] jasonbean - Apache Guacamole

Living Legend replied to Taddeusz's topic in Docker Containers

Both of these are already set as advised. I was reading through the Guacamole docs and noticed this excerpt: "Apache will not automatically proxy WebSocket connections, but you can proxy them separately with Apache 2.4.5 and later using mod_proxy_wstunnel. After enabling mod_proxy_wstunnel a secondary Location section can be added which explicitly proxies the Guacamole WebSocket tunnel, located at /guacamole/websocket-tunnel:" Is this enabled for this docker?

[Support] jasonbean - Apache Guacamole

Living Legend replied to Taddeusz's topic in Docker Containers

I did a little searching but don't see this being a common issue for others. I have a Windows 10 VM up and running successfully, and I can access it locally through the Windows RDP client without an issue. In a daring moment, I opened port 3389 and forwarded it to the VM to test externally. This worked flawlessly too; it ran as cleanly and quickly as if it were the local OS. I've had the Guacamole docker up and running for a few years now, with a VNC connection and an RDP connection set up. VNC is okay, but not as good as the native Windows RDP client. RDP through Guacamole, however, is incredibly inconsistent: it always connects, but at times it is incredibly laggy. I've tried every configuration under the sun through the Guacamole GUI, to no avail. I've also tinkered with my NGINX settings to see if there was something I was missing, but nothing there seems to make a difference either. Any thoughts as to why RDP through Guacamole, connecting a Windows machine to a Windows machine, can be so unstable?

I have. And I wouldn't call it an issue. Just a question for people that use this docker to see if they have any experience with the filter feature.

-

Just wanted to bump my post, which may have gotten lost in the shuffle earlier this week. @dlandon, any suggestions on the most resource-efficient way to keep MP4 files for 24 hours, then delete them, while keeping the few daily snapshots in perpetuity? Is there a way to do it with one stream, using a filter on the events that can distinguish between MP4 and JPG files, or do I need two independent streams: one to clear MP4s daily and one to keep JPGs?

-

I have a question about the optimal way to handle saving and filtering two cameras' JPG and MP4 outputs. I'm currently running two cameras. In my ideal scenario, the cameras would record 24/7 using H264 camera passthrough. These recordings would be kept for 24-48 hours and then deleted. Additionally, I would like one image saved per hour per camera, and these would never be deleted. What would be the optimal way to do this while minimizing server resources? I was able to set up JPGs and MP4s to save from the same camera feed to the same event folder. The problem was that I could not figure out how to separate images from videos in the event folder via a filter. It was an all-or-nothing proposition: if I wanted to delete beyond 24 hours, I lost the pictures too; if I wanted to keep beyond 24 hours, I was forced to keep all the videos. The next option seems to be setting up two feeds per camera, one responsible for images and one for video. The video feed would use the filter to delete event folders more than 24 hours old, while the image feed would remain untouched. I hesitated to do this because I assumed it would be more resource intensive. Any suggestions on the best way to accomplish this?
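The single-feed option can also be handled by a retention job that filters on file type rather than on whole event folders. The following is a minimal sketch under stated assumptions, not a feature of the recorder or its filters. It assumes the MP4s and hourly JPGs land as ordinary files somewhere under one events directory (the path in the example is hypothetical). It deletes only `.mp4` files older than 24 hours and leaves every `.jpg` in place. Because it runs outside the recorder's own filter system, the recorder may still list events whose video has been removed.

```python
import time
from pathlib import Path

# Assumption: keep video for 24 hours (the post allows 24-48).
MAX_VIDEO_AGE_SECONDS = 24 * 60 * 60


def prune_videos(events_root, max_age=MAX_VIDEO_AGE_SECONDS, now=None, dry_run=False):
    """Delete .mp4 files older than max_age seconds anywhere under events_root.

    JPG snapshots (and any other file types) are never touched.
    Returns the paths that were deleted (or, with dry_run=True, would be).
    """
    now = time.time() if now is None else now
    # Collect the file list first so nothing is deleted while the tree walk is in progress.
    candidates = [p for p in Path(events_root).rglob("*") if p.is_file()]
    deleted = []
    for path in candidates:
        if path.suffix.lower() != ".mp4":
            continue  # snapshots and everything else are kept indefinitely
        age = now - path.stat().st_mtime  # age based on last-modified time
        if age > max_age:
            if not dry_run:
                path.unlink()
            deleted.append(path)
    return deleted


if __name__ == "__main__":
    # Hypothetical path: point this at wherever the event folders actually live.
    for p in prune_videos("/mnt/user/recordings/events", dry_run=True):
        print("would delete", p)
```

Running it first with `dry_run=True` shows what would be removed. Event folders emptied of video are left in place. The two-feed approach described above avoids that bookkeeping, because the recorder's own filter deletes whole video-only events.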

-

Thanks for the breakdown. I'm going to keep playing around with Emby and see where it takes me. I'm running a dual E5-2670 CPU setup with 64GB of RAM, so fortunately backend transcoding works pretty well. Before the Fire TV Stick 4Ks, I was most recently running a couple of LibreELEC VMs on this server for my client devices, using a basic graphics card to output the video. I've used everything from the original RPi to the RPi 3 and a Chromebox. The reason I've been on the sticks recently is the native Netflix app, since Netflix has always been a bit of a hassle to get (and keep) working on Kodi.

-

TL;DR: I've been a Kodi (local, synchronized databases) and Plex (remote) user for my media for almost a decade. Recently, most of my devices have become Fire TV Sticks (4K) because Netflix gets heavy usage, and for some reason Plex has been used for local media on the sticks. I finally loaded Kodi onto a stick and then read about Emby maintaining the library, rather than using the Kodi-Headless docker or running a Kodi VM again. I didn't know anything about Emby until reading about it today. I'm curious why people use Emby, what it has replaced, and how it integrates into their media setup.

******************************************************************

I have always seen Emby in the app section but never looked into its uses until very recently. I was a Kodi user for close to a decade. Until recently, my setup was all Kodi devices throughout the house with a synchronized database. One of the clients was a VM that was on 24/7, so library updates were pushed to that device. I had issues in the past with the Kodi Headless docker not updating movies because of a scraping issue, so directing updates to the VM was a fine solution.

In the past year, with the increase in Netflix usage and the accumulation of a few Fire TV (4K) sticks, 95% of media is being consumed through Netflix and Plex. I cut the cord with FiOS TV completely and sold my HDHR. No longer recording through MythTV was another reason Kodi was no longer a high priority.

With that said, I had some time today, so I looked into sideloading Kodi onto the Fire Stick. I was toying around with the headless Kodi docker again, since I no longer use the Kodi VM, and read a post about how the Linuxserver.io guys were mostly on Emby these days as it relates to Kodi. I wasn't sure what that meant, so I dug a bit further, and it appears people use Emby as the backend server that gets updated with media. This data can be pulled with the Emby/Kodi add-on and used natively on Kodi clients. Seems useful.

So now I'm juggling an Emby backend that I'm only using to monitor my library, Kodi front ends to display my media library, and Plex for people outside the household to connect to the library. Mostly I'm curious: what are the primary purposes people use Emby for as it relates to Kodi and Plex? I know this is certainly use-case dependent, but are there any prevailing opinions on the most efficient ways of handling media libraries served to many clients locally and remotely?

-

Interesting, because while I did ultimately buy another quad-port NIC cheaply on eBay, I'm now fairly certain I could have managed without one. When I installed the additional NIC, I had 8 ports, all with the same device ID, so my problem still existed. I followed this thread to resolve it: This method allows the user to select specific PCI addresses to pass through rather than device IDs. These specific PCI addresses can then be used in the VM by manually editing the VM XML file.

-

A Single unRAID Share Lost File Data?

Living Legend replied to Living Legend's topic in General Support

Ah, I see. I have had the issue in the past where it continues to grow, which is why I ballooned it to 50G. I cleared out a few old, unused dockers and reset a few mappings to try to resolve the issue. It looks like I may have at least partially resolved it, since I'm currently sitting under 20G.

A Single unRAID Share Lost File Data?

Living Legend replied to Living Legend's topic in General Support

Yes, you're definitely right. I have many, many dockers, and there must be at least one or two storing files inside the container and making it grow. I haven't found a simple way to solve this one yet. This shows it should be around 20GB. Not sure why ZoneMinder and HA get so big. The Home Assistant database gets large, but that's on the array. ZoneMinder recordings have their own share. DelugeVPN downloads to different folders within a share. I've always been perplexed by this one.

A Single unRAID Share Lost File Data?

Living Legend replied to Living Legend's topic in General Support

Yes, I have made this mistake. I could not figure out why some of my movies that initially downloaded to cache were not moving out of cache. I'd try to move them manually and then poof, they'd disappear. I wish I could attribute this loss of data to the user error mentioned above, but unfortunately that's not the case here. If the error were on me, it'd be easy to move on and learn from my mistake, but I haven't manually moved files into a disk share in probably over a year. I only add or delete files through my user shares. So I have absolutely no idea how this one random share, which I haven't accessed in about a month, has all the file names but no data. To me, this shows the importance of backing up important data outside the array: even though unRAID is meant to protect your data in the event of a drive failure, every once in a while it may throw out some quirk and gobble up a few files.

A Single unRAID Share Lost File Data?

Living Legend replied to Living Legend's topic in General Support

Out of curiosity, and to try to prevent this in the future, what can cause something like this? Is this a file corruption issue or a potential disk failure issue? If the former, I understand that an actual backup would be the only way to recover. But if the latter, shouldn't I have received some sort of drive failure notification so that I could attempt a rebuild before I hit the point of no return?

A Single unRAID Share Lost File Data?

Living Legend replied to Living Legend's topic in General Support

Yeah, that's what I assumed. I take it there's no basic resolution to this type of issue?