
Everything posted by ken-ji

-

Sorry guys, real life's been busy. I'll look into this over the weekend.

-

Post your Docker path mappings here, and check them for a folder named Dropbox; that sounds like the source of the issue.
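One way to check the mappings is to look at the `Mounts` array that `docker inspect` prints for each container (for example after saving it with `docker inspect <container> > inspect.json`). The sketch below is only an illustration: `find_mounts` is a hypothetical helper, and it simply reports every mount whose host or container path contains "dropbox".

```python
import json


def find_mounts(inspect_json: str, needle: str = "dropbox") -> list[tuple[str, str, str]]:
    """Return (container, source, destination) for each mount whose host or
    container path contains `needle` (case-insensitive)."""
    hits = []
    for container in json.loads(inspect_json):  # docker inspect prints a JSON array
        name = container.get("Name", "").lstrip("/")
        for mount in container.get("Mounts", []):
            src = mount.get("Source", "")
            dst = mount.get("Destination", "")
            if needle in src.lower() or needle in dst.lower():
                hits.append((name, src, dst))
    return hits
```

Any hit that maps a Dropbox folder somewhere unexpected is worth a second look.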

-

I think that when the thread was marked solved, we didn't have the host access setting yet, hence my comment. I suspect the host access failure is a race condition that only shows up in certain cases (containers starting up before networking is ready?); @bonienl would have a better idea. I do think enabling ipvlan on the latest versions would solve the problem of access over the same network interface. As for bug reports, just file one with the server diagnostics from both cases (one where it works after startup and one where it doesn't) so they can be compared.

-

How to get IPv6 address for unraid docker application

ken-ji replied to Yvan's topic in General Support

The command to see IPv6 routes is `ip -6 route`. What's odd is that your router can't ping the container. Have you tried running a traceroute from your router? Maybe the router's route settings are not correct, or you have firewall rules in the way. I'm not really familiar with OPNsense.
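When reading `ip -6 route` output, remember that the kernel picks the most specific (longest) matching prefix for a destination. The sketch below models only that rule and ignores metrics and policy routing; `pick_route` is a hypothetical name, the `default` route is written as `::/0`, and the route table in the test is made up for illustration.

```python
import ipaddress
from typing import Optional


def pick_route(routes: list[tuple[str, str]], destination: str) -> Optional[tuple[str, str]]:
    """Return the (prefix, device) with the longest prefix containing destination.

    Simplified longest-prefix match: metrics and policy rules are ignored."""
    dest = ipaddress.IPv6Address(destination)
    best = None
    for prefix, dev in routes:
        net = ipaddress.IPv6Network(prefix)
        if dest in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, dev)
    return None if best is None else (str(best[0]), best[1])
```

If the route that wins for the container's address points at the wrong interface or gateway, that would explain the failed pings.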

-

How to get IPv6 address for unraid docker application

ken-ji replied to Yvan's topic in General Support

It's not immediately clear what's wrong. If you do not set a static IPv6 address, Docker should assign one, so for testing, do that and see if the container is able to ping the router and vice versa. I assume other devices in the VLAN have IPv6 working properly?

-

How to get IPv6 address for unraid docker application

ken-ji replied to Yvan's topic in General Support

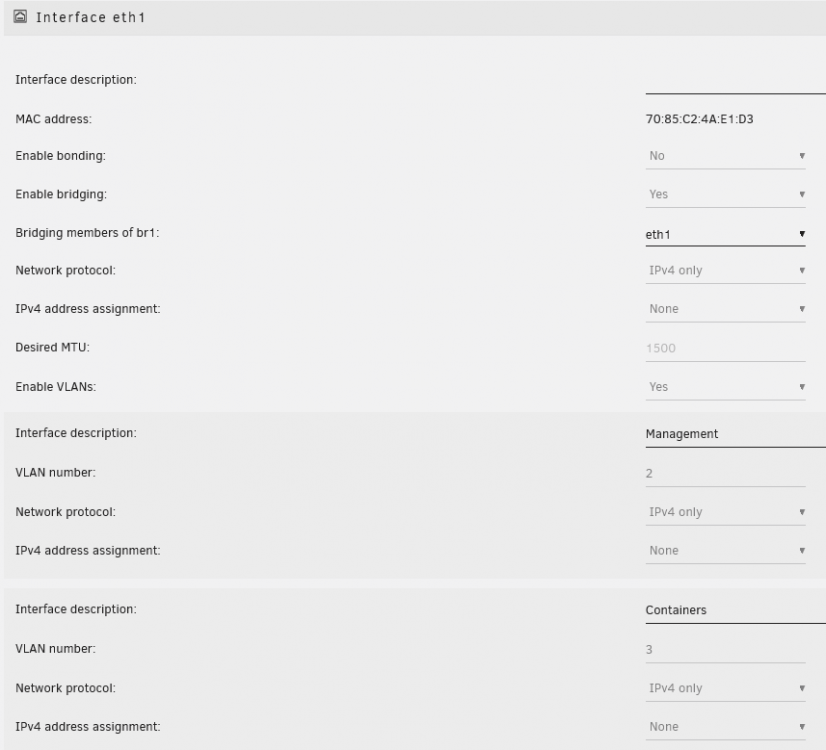

What are you using to reach the container over IPv6? Unraid cannot reach anything on br0, because in 6.9.2 Docker uses macvlan, which expressly prohibits communication between the host and its containers over the "shared" interface. Also, does your LAN's IPv6 have the same /64 prefix as the container? In my case I don't use static assignments, just SLAAC, which gives me a fairly static IPv6 address, since the container's MAC address is defined by the static IPv4 address assigned to it.

root@MediaStore:~# ip addr s dev br0
18: br0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000
    link/ether 70:85:c2:4a:e1:d1 brd ff:ff:ff:ff:ff:ff
    inet 192.168.2.5/24 scope global br0
       valid_lft forever preferred_lft forever
    inet6 xxxx:xxxx:xxxx:xxxx:7285:c2ff:fe4a:e1d1/64 scope global dynamic mngtmpaddr noprefixroute
       valid_lft 3584sec preferred_lft 1784sec
    inet6 fd6f:3908:ee39:4001:7285:c2ff:fe4a:e1d1/64 scope global dynamic mngtmpaddr noprefixroute
       valid_lft 3584sec preferred_lft 1784sec
    inet6 fe80::7285:c2ff:fe4a:e1d1/64 scope link
       valid_lft forever preferred_lft forever
root@MediaStore:~# docker exec nginx ip addr
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: tunl0@NONE: <NOARP> mtu 1480 qdisc noop state DOWN qlen 1000
    link/ipip 0.0.0.0 brd 0.0.0.0
3: gre0@NONE: <NOARP> mtu 1476 qdisc noop state DOWN qlen 1000
    link/gre 0.0.0.0 brd 0.0.0.0
4: gretap0@NONE: <BROADCAST,MULTICAST> mtu 1476 qdisc noop state DOWN qlen 1000
    link/ether 00:00:00:00:00:00 brd ff:ff:ff:ff:ff:ff
5: erspan0@NONE: <BROADCAST,MULTICAST> mtu 1464 qdisc noop state DOWN qlen 1000
    link/ether 00:00:00:00:00:00 brd ff:ff:ff:ff:ff:ff
6: ip_vti0@NONE: <NOARP> mtu 1480 qdisc noop state DOWN qlen 1000
    link/ipip 0.0.0.0 brd 0.0.0.0
7: sit0@NONE: <NOARP> mtu 1480 qdisc noop state DOWN qlen 1000
    link/sit 0.0.0.0 brd 0.0.0.0
215: eth0@if33: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue state UP
    link/ether 02:42:c0:a8:5f:0a brd ff:ff:ff:ff:ff:ff
    inet 192.168.95.10/24 brd 192.168.95.255 scope global eth0
       valid_lft forever preferred_lft forever
    inet6 fd6f:3908:ee39:4002:42:c0ff:fea8:5f0a/64 scope global dynamic flags 100
       valid_lft 3435sec preferred_lft 1635sec
    inet6 xxxx:xxxx:xxxx:xxxx:42:c0ff:fea8:5f0a/64 scope global dynamic flags 100
       valid_lft 3435sec preferred_lft 1635sec
    inet6 fe80::42:c0ff:fea8:5f0a/64 scope link
       valid_lft forever preferred_lft forever

My containers are connected to a different VLAN, hence the /64 is not quite the same. But I also have a local-only IPv6 prefix on my LAN for intersite VPN traffic, and because my ISP hands out a dynamic IPv6 prefix, it is hard to run Docker with the network settings defined statically, which makes SLAAC the more practical choice for me. It doesn't help that my router is a MikroTik, which has other issues with IPv6 networks (lack of proper DHCPv6).
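The addresses in the output follow the modified EUI-64 scheme SLAAC uses: flip the universal/local bit (0x02) of the MAC's first octet, insert ff:fe in the middle, and append the result to the /64 prefix. The sketch below reproduces the interface IDs shown above and checks whether two addresses share a /64. The function names are hypothetical, and `docker_mac_from_ipv4` assumes the 02:42 + IPv4-bytes MAC pattern visible on `eth0` here; other Docker versions or setups may assign MACs differently.

```python
import ipaddress


def docker_mac_from_ipv4(ipv4: str) -> str:
    """MAC of the form 02:42:<IPv4 bytes>, as seen on eth0 above (assumed pattern)."""
    octets = ipaddress.IPv4Address(ipv4).packed
    return ":".join(f"{b:02x}" for b in (0x02, 0x42, *octets))


def eui64_interface_id(mac: str) -> bytes:
    """Modified EUI-64: flip the U/L bit of the first octet and insert ff:fe."""
    raw = bytes(int(part, 16) for part in mac.split(":"))
    return bytes([raw[0] ^ 0x02]) + raw[1:3] + b"\xff\xfe" + raw[3:6]


def slaac_address(prefix: str, mac: str) -> ipaddress.IPv6Address:
    """Combine a /64 prefix with the EUI-64 interface ID derived from mac."""
    net = ipaddress.IPv6Network(prefix)
    if net.prefixlen != 64:
        raise ValueError("SLAAC expects a /64 prefix")
    return ipaddress.IPv6Address(net.network_address.packed[:8] + eui64_interface_id(mac))


def same_64(a: str, b: str) -> bool:
    """True if both addresses share the same first 64 bits (the /64 prefix)."""
    return ipaddress.IPv6Address(a).packed[:8] == ipaddress.IPv6Address(b).packed[:8]
```

For example, `slaac_address("fd6f:3908:ee39:4002::/64", docker_mac_from_ipv4("192.168.95.10"))` gives the container's `fd6f:3908:ee39:4002:42:c0ff:fea8:5f0a`, and `same_64` on the br0 and eth0 ULA addresses returns False because one is in `...:4001::/64` and the other in `...:4002::/64`.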

-

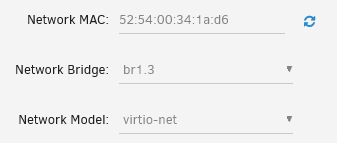

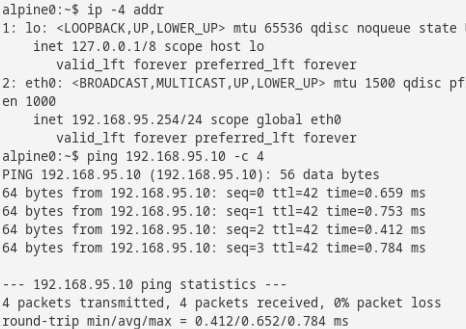

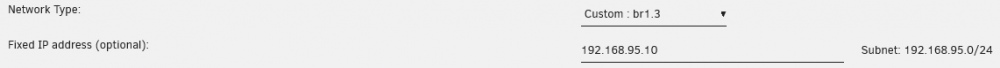

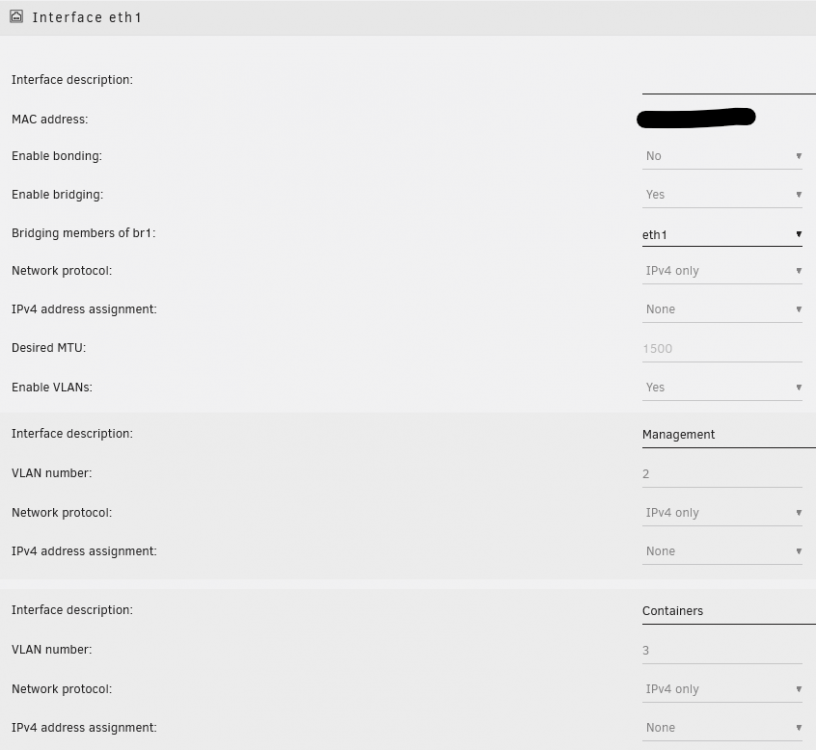

1. This is possible. As long as the spare interfaces are configured without IP addresses, network isolation on those interfaces is enforced, though only at the IP level.
2. I rarely do it, since my config puts my Docker containers on br1.3 and my VMs on br0.3, but nothing prevents a VM and a Docker container from sharing the same bridge interface.

My Docker container:
My VM:
From the VM:

-

The key type and bits depend on your needs, but the simplest command is `ssh-keygen -C "comment so you know which key this is" -f path/to/private_key -m pem -N ""`. This creates the key pair path/to/private_key and path/to/private_key.pub (for example /tmp/mykey and /tmp/mykey.pub with `-f /tmp/mykey`) using the default key type: RSA on the OpenSSH releases of that era, with a default size of 3072 bits since OpenSSH 8.0 (not 1024); OpenSSH 9.5 and later default to ed25519.
* `-C "comment"` adds a comment to the end of the public key so you can tell later which key it is.
* `-N ""` specifies an empty passphrase. The passphrase is used to encrypt/decrypt the private key (this could be used to "safely" store the private key in certain places).
* `-m pem` writes the private key in PEM format for interoperability with older SSH implementations (if it works without it for you, you can skip it).

You can specify `-t ecdsa` or `-t ed25519` to use those key types if needed.
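If you script key generation, the flags above map directly onto an argument list. This is a sketch with hypothetical helper names (`keygen_args`, `generate_keypair`); it passes only the options explained above and leaves the key type to the ssh-keygen default unless you set `key_type`.

```python
import subprocess
from typing import Optional


def keygen_args(path: str, comment: str, key_type: Optional[str] = None,
                pem: bool = True, passphrase: str = "") -> list[str]:
    """Build the ssh-keygen command line described above."""
    args = ["ssh-keygen", "-C", comment, "-f", path]
    if pem:
        args += ["-m", "pem"]  # PEM private key for older SSH implementations
    args += ["-N", passphrase]  # "" means no passphrase on the private key
    if key_type:
        args += ["-t", key_type]  # e.g. "ecdsa" or "ed25519"
    return args


def generate_keypair(path: str, comment: str, **kwargs) -> None:
    """Run ssh-keygen; writes `path` (private key) and `path`.pub (public key).

    ssh-keygen asks before overwriting an existing key file."""
    subprocess.run(keygen_args(path, comment, **kwargs), check=True)
```

For instance, `generate_keypair("/tmp/mykey", "backup key", key_type="ed25519", pem=False)` would produce /tmp/mykey and /tmp/mykey.pub.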

-

My mistake: I'm not using any of the unraid.net services, and I missed the authorized_keys files. Are you able to generate logs or diagnostics from your client? I simply use the built-in ssh client in Windows, macOS and Linux all the time, so I usually just run it with `ssh -v` to see why the client is falling back to password authentication.

-

Ah. You need to rename the public key unraidbackup_id_ed25519.pub to authorized_keys; then you can delete the private key from Unraid. I thought you were trying to ssh to other servers from Unraid. My setup does both, hence the authorized_keys and id_rsa files.

In a nutshell:
* Generate the private and public keys.
* On the server you are going to access, append the public key to the authorized_keys file, creating it if it doesn't exist. The typical location is /root/.ssh/authorized_keys.
* Make sure the permissions are -rw------- (600).
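The append-and-chmod steps above could be scripted roughly like this. It is a sketch, not a replacement for tools like `ssh-copy-id`: `append_authorized_key` is a hypothetical helper, it skips keys that are already present, and it always leaves the file at mode 600.

```python
import os
from pathlib import Path


def append_authorized_key(pubkey_path: str, authorized_keys: str) -> bool:
    """Append a public key to authorized_keys, creating the file if needed.

    Returns True if the key was added, False if it was already present.
    The file is always left with mode 600 (-rw-------)."""
    key = Path(pubkey_path).read_text().strip()
    target = Path(authorized_keys)
    target.parent.mkdir(mode=0o700, parents=True, exist_ok=True)
    text = target.read_text() if target.exists() else ""
    already = key in (line.strip() for line in text.splitlines())
    if not already:
        # Start on a fresh line if the existing file lacks a trailing newline.
        prefix = "" if text == "" or text.endswith("\n") else "\n"
        with target.open("a") as fh:
            fh.write(prefix + key + "\n")
    os.chmod(target, 0o600)
    return not already
```

On the server you would call it as `append_authorized_key("unraidbackup_id_ed25519.pub", "/root/.ssh/authorized_keys")`.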

-

Hmm, is your config file referring to unraidbackup_id_ed25519 as the private key? By default, ssh will try id_rsa, id_dsa, id_ecdsa, id_ed25519 and maybe a few others as the private key file. Running `ssh -v` will give you an idea of what's happening.
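As a quick check of which default identity files a client would find on its own, the sketch below lists the ones present in a directory. The name list is an assumption (the defaults mentioned above plus the security-key variants in recent OpenSSH); `man ssh_config` under `IdentityFile` has the authoritative list for your version. `present_identities` is a hypothetical name.

```python
from pathlib import Path

# Assumed default identity file names; the exact list varies by OpenSSH version.
DEFAULT_IDENTITIES = ["id_rsa", "id_dsa", "id_ecdsa", "id_ecdsa_sk", "id_ed25519", "id_ed25519_sk"]


def present_identities(ssh_dir: str = "~/.ssh") -> list[str]:
    """Return the default private key names (in the order listed above) that exist in ssh_dir."""
    base = Path(ssh_dir).expanduser()
    return [name for name in DEFAULT_IDENTITIES if (base / name).is_file()]
```

A key named unraidbackup_id_ed25519 is not on that list, so ssh only uses it if an `IdentityFile` line in the config or the `-i` option points at it.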

-

As of 6.9.2, the only thing you really need to do is generate your ssh keys and put them in the correct place, either /root/.ssh or /boot/config/ssh/root (/root/.ssh is a symlink to the latter).

root@MediaStore:~# ls -al /root/
total 32
drwx--x--- 6 root root 260 Jan 18 11:20 ./
drwxr-xr-x 20 root root 440 Jan 17 06:58 ../
-rw------- 1 root root 13686 Jan 17 11:29 .bash_history
-rwxr-xr-x 1 root root 316 Apr 8 2021 .bash_profile*
drwxr-xr-x 4 root root 80 Oct 29 10:21 .cache/
drwx------ 5 root root 100 Nov 30 06:02 .config/
lrwxrwxrwx 1 root root 30 Apr 8 2021 .docker -> /boot/config/plugins/dockerMan/
-rw------- 1 root root 149 Jan 18 11:20 .lesshst
drwx------ 3 root root 60 Oct 29 10:21 .local/
-rw------- 1 root root 1024 Nov 13 00:36 .rnd
lrwxrwxrwx 1 root root 21 Apr 8 2021 .ssh -> /boot/config/ssh/root/
drwxr-xr-x 5 root root 280 Jan 16 10:23 .vscode-server/
-rw-r--r-- 1 root root 351 Jan 18 00:00 .wget-hsts
root@MediaStore:~# ls -l /root/.ssh/
total 32
-rw------- 1 root root 418 Mar 9 2021 authorized_keys
-rw------- 1 root root 883 Mar 9 2021 id_rsa
-rw------- 1 root root 209 Apr 19 2021 id_rsa.pub
-rw------- 1 root root 3869 Jul 24 08:59 known_hosts
root@MediaStore:~# ls -l /boot/config/ssh/root/
total 32
-rw------- 1 root root 418 Mar 9 2021 authorized_keys
-rw------- 1 root root 883 Mar 9 2021 id_rsa
-rw------- 1 root root 209 Apr 19 2021 id_rsa.pub
-rw------- 1 root root 3869 Jul 24 08:59 known_hosts
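Because /root/.ssh is a symlink, a small audit can confirm where the keys really live and whether their permissions match the -rw------- shown in the listing. This is a sketch with a hypothetical name (`audit_ssh_dir`). It flags every regular file whose mode is not 600, which is stricter than ssh requires for public keys and known_hosts, so treat the result as a hint rather than a list of errors.

```python
import os
import stat
from pathlib import Path


def audit_ssh_dir(ssh_dir: str) -> tuple[str, dict[str, str]]:
    """Return the resolved directory and {file name: octal mode} for files not at 600."""
    real = os.path.realpath(ssh_dir)  # follows e.g. /root/.ssh -> /boot/config/ssh/root
    loose = {}
    for entry in sorted(Path(real).iterdir()):
        if entry.is_file():
            mode = stat.S_IMODE(entry.stat().st_mode)
            if mode != 0o600:
                loose[entry.name] = oct(mode)
    return real, loose
```

On the server above, `audit_ssh_dir("/root/.ssh")` should resolve to /boot/config/ssh/root and report no loose files.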

-

The SSL port is a bit different, since Unraid is configured to redirect almost all access to the canonical URL (https://XXXXXXXXXXXXXXXXXXXXXX.unraid.net or https://yourdomain.tld), depending on how the SSL certs are provisioned. Only https://unraid_lan_ip_address is not rewritten (so you still have access while the internet is down).

As for your SSH issue, I'm not sure, since I haven't tried doing such a thing, but something to look at is the version of ssh used by your external host (OpenSSH 7.6p1): there might be interoperability issues with OpenSSH 8.8. Your connection log indicates that the NAT worked (the client was able to read the server's version string), but the connection was then broken. Maybe `sshd -ddd` will give you a better idea of why the client disconnected.

-

You really shouldn't be doing it this way. And since you've happily published your WAN IP and ssh port, you'll have bad actors knocking on it soon, if not already.
* Remove the port forward.
* See what your options are for deploying your own VPN server (WireGuard on Unraid), or maybe something on the router (I'm not familiar with the ASUS line).
* Use that VPN to connect and log in to Unraid over SSH (or the web UI).

Also, Unraid only allows root to log in by default, so that might also explain the connection being closed/reset.

-

I've pushed a new version of my dropbox image containing the current version of Dropbox. I had to work out how to automate the builds, now that Docker Hub autobuilds are a paid option, and I didn't want to move off the docker.io registry for the time being.

-

I don't think the UI supports custom virtual NICs. You'll have to configure it yourself, AFAIK.

-

Hmm, I'm stumped. I'll check whether there's anything in the Dropbox headless client; they really don't give it as much support as they should.

-

Hmm. It should be instant, but running `dropbox.py status` should return `Syncing...`, not `Starting...`. `Starting...` means the daemon is not running correctly. Can you try restarting the container, then running `ps xafu` as the root user inside the container? Mine returns this:

bash-4.3# ps xafu
USER PID %CPU %MEM VSZ RSS TTY STAT START TIME COMMAND
root 243 0.2 0.0 18012 3064 pts/0 Ss 05:49 0:00 bash
root 251 0.0 0.0 17684 2216 pts/0 R+ 05:49 0:00  \_ ps xafu
root 1 0.0 0.0 17880 2840 ? Ss 05:43 0:00 /bin/bash /usr/local/bin/dockerinit.sh
nobody 20 5.5 1.3 4839120 444748 ? Sl 05:43 0:19 /dropbox/.dropbox-dist/dropbox-lnx.x86_64-135.3.4177/dropbox
root 21 0.0 0.0 4376 664 ? S 05:43 0:00 sleep 86400
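To compare your output with mine, the key line is the `dropbox` binary under `.dropbox-dist`. The sketch below checks a saved `ps xafu` listing for it; `dropbox_daemon_running` is a hypothetical helper, and it assumes the binary path looks like the one in my listing.

```python
def dropbox_daemon_running(ps_output: str) -> bool:
    """Return True if a `ps xafu` listing contains the Dropbox daemon binary.

    Assumes the daemon path contains .dropbox-dist/ and ends in /dropbox."""
    lines = ps_output.strip().splitlines()
    if not lines:
        return False
    cmd_col = lines[0].split().index("COMMAND")
    for line in lines[1:]:
        # COMMAND is the last column and may itself contain spaces.
        fields = line.split(None, cmd_col)
        if len(fields) <= cmd_col:
            continue
        parts = fields[cmd_col].lstrip("\\_ ").split()  # drop the forest-view "\_" prefix
        if parts and ".dropbox-dist/" in parts[0] and parts[0].endswith("/dropbox"):
            return True
    return False
```

If it returns False while `dropbox.py status` says `Starting...`, the daemon never came up, and the container log is the next place to look.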

-

That's the wrong command. I'm not sure what the values are in 6.10rc2, but in 6.9.2 it's:

root@MediaStore:~# sshd -T | grep pubkeyaccepted
pubkeyacceptedkeytypes …@openssh.com,…@openssh.com,…@openssh.com,…@openssh.com,…@openssh.com,…@openssh.com,…@openssh.com,…@openssh.com,…@openssh.com,ecdsa-sha2-nistp256,ecdsa-sha2-nistp384,ecdsa-sha2-nistp521,…@openssh.com,ssh-ed25519,…@openssh.com,rsa-sha2-512,rsa-sha2-256,ssh-rsa

ssh-rsa is at the very end.
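If you want to check this from a script, the list is a single comma-separated line in `sshd -T` output. The sketch below extracts it; `accepted_key_types` is a hypothetical name. OpenSSH 8.5 renamed the option to PubkeyAcceptedAlgorithms (the old name remains an alias), so the sketch also accepts the lowercase `pubkeyacceptedalgorithms` keyword, which is an assumption about how newer versions print it.

```python
def accepted_key_types(sshd_t_output: str) -> list[str]:
    """Return the accepted public key types from `sshd -T` output, or [] if absent."""
    for line in sshd_t_output.splitlines():
        parts = line.split(None, 1)
        if len(parts) == 2 and parts[0] in ("pubkeyacceptedkeytypes", "pubkeyacceptedalgorithms"):
            return parts[1].strip().split(",")
    return []
```

Usage: `"ssh-rsa" in accepted_key_types(output)` tells you whether plain ssh-rsa keys are still accepted.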

-

Please do realize that the thread is marked "solved" because this is by Docker's design. Docker will not allow the host to talk to containers that are bridged using macvlan (the default). I think 6.10rc1 allows ipvlan, which might work, so you should see if it solves the issue. As for the Docker host access shim failing, that should be discussed in a different thread, as this one was just asking about access to containers on br0.

-

So I tried to mount it remotely via Terminal. This is the export for my public share:

/mnt/user/isos <world>(ro,async,wdelay,hide,no_subtree_check,fsid=121,anonuid=99,anongid=100,sec=sys,insecure,root_squash,all_squash)

(I can't mount my private shares, as they're open only on the LAN and I'm currently away.) I'm not sure if I actually have read access to some of the files, since access is squashed to nobody, but I think that's an issue that can be resolved. My only MacBook is my employer's, and this is the first time I've tried to access Unraid from it. Here's what happened (Ishikawa is my MacBook, and Unraid is 192.168.2.5 over VPN):

Ishikawa:~ kenneth$ mkdir RemoteTest
Ishikawa:~ kenneth$ mount 192.168.2.5:/mnt/user/isos RemoteTest
Ishikawa:~ kenneth$ mount
/dev/disk1s1s1 on / (apfs, sealed, local, read-only, journaled)
devfs on /dev (devfs, local, nobrowse)
/dev/disk1s5 on /System/Volumes/VM (apfs, local, noexec, journaled, noatime, nobrowse)
/dev/disk1s3 on /System/Volumes/Preboot (apfs, local, journaled, nobrowse)
/dev/disk1s6 on /System/Volumes/Update (apfs, local, journaled, nobrowse)
/dev/disk1s2 on /System/Volumes/Data (apfs, local, journaled, nobrowse)
map auto_home on /System/Volumes/Data/home (autofs, automounted, nobrowse)
192.168.2.5:/mnt/user/isos on /Users/kenneth/RemoteTest (nfs, nodev, nosuid, mounted by kenneth)
Ishikawa:~ kenneth$ cd RemoteTest/
/Users/kenneth/RemoteTest
Ishikawa:RemoteTest kenneth$ ls -l
total 51486120
-rwxrwxrwx 1 root wheel 2147483648 Jul 17 21:30 BigSur-install.img
-rwxrwxrwx 1 root wheel 157286400 Jul 17 21:24 BigSur-opencore.img
-rw-rw---- 1 kenneth _lpoperator 417333248 Dec 9 2013 CentOS-6.5-x86_64-minimal.iso
-rw-r--r-- 1 kenneth _lpoperator 950009856 Jun 20 2018 CentOS-7-x86_64-Minimal-1804.iso
-rw-r--r-- 1 kenneth _lpoperator 519045120 Jun 20 2018 CentOS-7-x86_64-NetInstall-1804.iso
-rw-rw---- 1 kenneth _lpoperator 7554990080 Mar 28 2020 CentOS-8.1.1911-x86_64-dvd1.iso
-rw-r--r-- 1 kenneth _lpoperator 4692365312 Apr 12 2018 Win10_1803_English_x64.iso
-rw-r--r-- 1 root wheel 2368405504 Mar 6 2020 Zorin-OS-15.2-Lite-64-bit.iso
-rw-r--r-- 1 root _lpoperator 139460608 Jan 29 2021 alpine-standard-3.13.1-x86_64.iso
-rw-r--r-- 1 root _lpoperator 36700160 Jun 20 2019 alpine-virt-3.10.0-x86_64.iso
-rw-r--r-- 1 root _lpoperator 42991616 Feb 17 2021 alpine-virt-3.13.2-x86_64.iso
-rw-rw---- 1 kenneth _lpoperator 3901456384 Sep 13 2019 debian-10.1.0-amd64-DVD-1.iso
-rw-r--r-- 1 root _lpoperator 351272960 Feb 8 2020 debian-10.3.0-amd64-netinst.iso
-rw-r--r-- 1 kenneth _lpoperator 657457152 Jun 30 2018 debian-9.4.0-amd64-i386-netinst.iso
-rw-r--r-- 1 kenneth _lpoperator 305135616 Jun 30 2018 debian-9.4.0-amd64-netinst.iso
-rw-r--r-- 1 kenneth _lpoperator 116391936 Dec 29 2019 slackware64-current-mini-install.iso
-rw-r--r-- 1 kenneth _lpoperator 322842624 Aug 16 2018 virtio-win-0.1.160-1.iso
-rw-r--r-- 1 kenneth _lpoperator 371732480 May 21 2019 virtio-win-0.1.171.iso
-rw-rw-rw- 1 kenneth _lpoperator 394303488 Jan 19 2020 virtio-win-0.1.173-2.iso
-rw-r--r-- 1 root wheel 412479488 Jul 21 2020 virtio-win-0.1.185.iso
drwxrws--- 1 kenneth _lpoperator 4096 Mar 6 2021 virtio-win-0.1.190-1
-rw-rw-rw- 1 root _lpoperator 501745664 Nov 24 2020 virtio-win-0.1.190-1.iso
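To see what "squashed to nobody" means for a given client user, the export options can be modelled in a few lines. This is a simplified sketch of Linux NFS squashing under sec=sys: the helper names are hypothetical, 65534 is assumed as the fallback anonymous uid/gid when anonuid/anongid are not set, and group-list handling is ignored.

```python
def parse_options(opts: str) -> dict[str, object]:
    """Turn 'ro,anonuid=99,all_squash' into {'ro': True, 'anonuid': '99', 'all_squash': True}."""
    result: dict[str, object] = {}
    for item in opts.split(","):
        key, sep, value = item.partition("=")
        result[key] = value if sep else True
    return result


def effective_ids(opts: str, client_uid: int, client_gid: int) -> tuple[int, int]:
    """Simplified model of which uid/gid the server uses for a client request."""
    o = parse_options(opts)
    anon = (int(o.get("anonuid", 65534)), int(o.get("anongid", 65534)))
    if "all_squash" in o:
        return anon  # every client user is mapped to anonuid/anongid
    if client_uid == 0 and "no_root_squash" not in o:
        return anon  # root_squash is the default
    return client_uid, client_gid
```

With the export above, any Mac user (for example a hypothetical uid 501) ends up as uid 99 / gid 100, so files that are not readable by nobody/users stay unreadable.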

-

I haven't used NFS in a while, since I no longer have NFS clients local to the Unraid server, but I think it should look like this:

10.0.0.0/24(sec=sys,rw,async,insecure,no_subtree_check,crossmnt)

A few things depend on your setup:
* Who is the admin user for you in Unraid, i.e. the user that's allowed write access to the shares? Then you'll add something like anonuid=99,anongid=100,all_squash. This "squashes" all access to uid 99, which is the nobody user in Unraid, and gid 100, which is the users group. You can change the uid to match the "admin" user.
* Will the clients be accessing the files as root? Then add no_root_squash to let root continue to access them as root.
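The choices in that list just append options to the base rule. The sketch below assembles the rule string from them; `export_rule` is a hypothetical helper and only knows the options mentioned above.

```python
from typing import Optional

BASE_OPTIONS = ["sec=sys", "rw", "async", "insecure", "no_subtree_check", "crossmnt"]


def export_rule(client: str, anonuid: Optional[int] = None, anongid: Optional[int] = None,
                all_squash: bool = False, no_root_squash: bool = False) -> str:
    """Build an exports-style client rule such as 10.0.0.0/24(sec=sys,rw,...)."""
    opts = list(BASE_OPTIONS)
    if anonuid is not None:
        opts.append(f"anonuid={anonuid}")  # uid for squashed requests (99 = nobody on Unraid)
    if anongid is not None:
        opts.append(f"anongid={anongid}")  # gid for squashed requests (100 = users on Unraid)
    if all_squash:
        opts.append("all_squash")  # map every client user to anonuid/anongid
    if no_root_squash:
        opts.append("no_root_squash")  # let client root keep root access
    return f"{client}({','.join(opts)})"
```

For example, `export_rule("10.0.0.0/24", anonuid=99, anongid=100, all_squash=True)` yields `10.0.0.0/24(sec=sys,rw,async,insecure,no_subtree_check,crossmnt,anonuid=99,anongid=100,all_squash)`.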

-

%u is expanded to the username, so this tells sshd to look for the authorized public keys in /etc/ssh/root.pubkeys (for the root user).
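Assuming the line in question is an `AuthorizedKeysFile` setting such as `AuthorizedKeysFile /etc/ssh/%u.pubkeys`, the expansion works roughly as sketched below. It handles only the tokens sshd_config documents for that option (%%, %h, %U, %u) and, like sshd, treats a path that is still relative after expansion as relative to the user's home directory. `expand_authorized_keys_path` is a hypothetical name.

```python
import posixpath


def expand_authorized_keys_path(pattern: str, user: str, home: str, uid: int) -> str:
    """Expand %%, %h, %U and %u, then resolve relative paths against home."""
    tokens = {"%": "%", "h": home, "U": str(uid), "u": user}
    out = []
    i = 0
    while i < len(pattern):
        if pattern[i] == "%":
            if i + 1 >= len(pattern) or pattern[i + 1] not in tokens:
                raise ValueError(f"unsupported or dangling token in {pattern!r}")
            out.append(tokens[pattern[i + 1]])
            i += 2
        else:
            out.append(pattern[i])
            i += 1
    path = "".join(out)
    return path if path.startswith("/") else posixpath.join(home, path)
```

So `/etc/ssh/%u.pubkeys` becomes /etc/ssh/root.pubkeys for root, while the usual `.ssh/authorized_keys` becomes /root/.ssh/authorized_keys.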

-

I think the VNC protocol is limited to 8-character passwords.
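The limit comes from classic VNC Authentication in the RFB protocol (RFC 6143), where the password is used as a DES key, and a DES key is 8 bytes. The sketch below shows the key preparation VNC implementations commonly do: keep the first 8 bytes and pad shorter passwords with NUL bytes. `vnc_des_key` is a hypothetical name, it assumes a Latin-1 password, and it leaves out the per-byte bit reversal many implementations also apply.

```python
def vnc_des_key(password: str) -> bytes:
    """Return the 8-byte DES key derived from a VNC password.

    Characters beyond the 8th are dropped; shorter passwords are NUL-padded."""
    raw = password.encode("latin-1")
    return raw[:8].ljust(8, b"\x00")
```

As a result, `password123` and `password` produce the same key, so anything past the 8th character adds no security.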