Kosti

Members

Posts: 87

Everything posted by Kosti

-

OK, thanks guys. I am going to attempt the downgrade and see what happens, as either way I am locked out because I set a passphrase in the first place. Should've stuck with dumbo 123!

The only encryption is on the cache drive, no other drive, and I had changed the cache drive from XFS to ZFS. It will be a good test. When I get a chance to turn the server on I am going to give this a try, as potentially only the cache drive will need reformatting.

-

Sorry, I've been stuck with work and had no time to play with the server; it's been shut down since I can't bring up the array. So, one more question before I close this out as resolved.

Can I revert to my previous Unraid version, 6.9, and will this resolve my passphrase issue? Is there a way I can restore it from a backup of the USB? I went from 6.9.2-->6.14, but I am pretty sure I backed up the USB and most of the Unraid server before I went to the newer version.

I know it's a long shot, but just maybe I can trick the system. The only issue I can see is that the cache drive went from XFS to ZFS with encryption. Damn it, I just don't want to reinstall everything.

Now looking at Bitwarden or similar to manage my passwords, since my grey matter is failing me. I'm such an idiot!

-

Spewing, I really didn't want to rebuild it. Damn, I'm such an idiot.

-

Thanks, how many times can I keep trying to guess it?

-

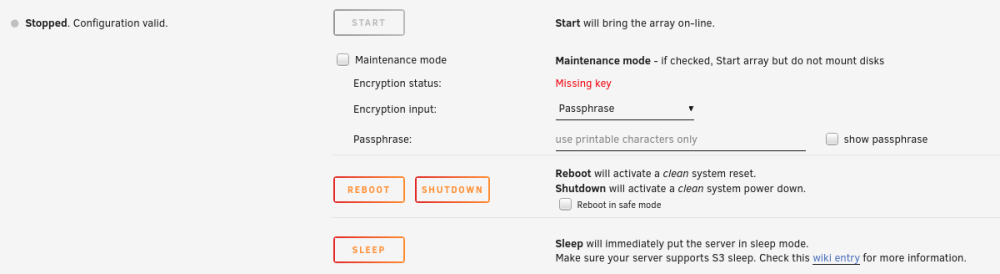

So I had a power outage and the server rebooted, only to find the array stopped due to a missing key. I do not recall the passphrase I set some time back, when I converted my cache drive to ZFS after 6.12.4 was released.

Am I shit out of luck to reset the key? Since the cache drive is the only ZFS-formatted file system, is there a simple recovery method?

The "permit reformat" option: will that destroy all the content of the 4 x 12TB and parity drives? I do have a backup on an external 20TB drive, mainly of the folders that I wanted to back up; I'm not sure whether it included dockers or the USB drive.

If I remove the cache from the array and format it, will it bring me back to the same place with a missing key, or is the encryption across the whole array? Only the cache drive is formatted as ZFS and for some reason I'd chosen encryption. I'm just not sure whether it's only on this cache drive and whether I can get the array started again.

What options are available for stupidity?

-

Quick update, as mover just finished. However, only a few folders "moved" and some stayed on the disks, for example:

Domain
Isos
System
|->Docker
|->Libvirt

The folders above are empty, but they were not created on the cache NVMe either, with the exception of the System folders. Mover also did not delete the folders when it moved docker and libvirt. Does mover not create empty directories and remove them from the current drive? Should I move Domains and Isos manually to the cache drive and delete the System folder from the disk?

Also, the appdata folder on the disk shows 20 folders but mover only moved 17, and all folders remained on the disk as well. Should I manually move them?

I have not started the VMs or Docker at present. I'm just not sure whether I need to move them and clean up the disks manually, or whether I've broken something.

I also see my ZFS memory is at 99% full.

-

Thanks for the tips!

Even though there were no duplicates, it wouldn't migrate files with the move function, so I manually moved these folders from cache to disk (x), then formatted the NVMe as ZFS encrypted, set the move function to array-->cache and ran mover. Now the files are being moved off the array and onto the cache via the move function.

However, I think I did break something, as I deleted an empty folder that was used for my backups. I have a modified script to back up to an external USB drive using rsync, but this now fails:

rsync: [sender] change_dir "/mnt/user/backups#342#200#235#012/mnt/user" failed: No such file or directory (2)
rsync error: some files/attrs were not transferred (see previous errors) (code 23) at main.c(1336) [sender=3.2.7]
true

Not sure how to fix this up. Does it matter who executes the script, either root or a user?
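The `#342#200#235#012` run in the failing path looks like rsync's octal escaping of bytes it cannot print. rsync normally writes these as `\#ooo`; that the backslashes were lost when the error was pasted is an assumption. A minimal Python sketch that decodes such a path, where `decode_rsync_escapes` is a hypothetical helper name and not part of rsync:

```python
import re

def decode_rsync_escapes(text: str) -> str:
    """Replace '#ooo' (optionally '\\#ooo') octal escapes with the bytes they encode."""
    out = bytearray()
    pos = 0
    # Three octal digits, first digit 0-3 so the value fits in one byte.
    for match in re.finditer(r"\\?#([0-3][0-7]{2})", text):
        out += text[pos:match.start()].encode("utf-8")
        out.append(int(match.group(1), 8))
        pos = match.end()
    out += text[pos:].encode("utf-8")
    return out.decode("utf-8", errors="replace")

# Path copied from the rsync error message in the post.
path = "/mnt/user/backups#342#200#235#012/mnt/user"
decoded = decode_rsync_escapes(path)
print(repr(decoded))
```

Under that reading, the bytes are a curly closing quote (U+201D) followed by a line break, so the source path in the script would end in a "smart" quote rather than a plain `"`. That usually comes from pasting a script through a web page or word processor, so retyping the quotes in the script is the first thing worth checking.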

-

Quick question, sorry if it doesn't belong here, but I'm wanting to convert my cache NVMe drive to ZFS. I tried to move everything off the cache to the array, but mover doesn't fire up. I have cache-->array set for the following:

appdata
isos
domains
system

I've even stopped Docker and the VMs (libvirt etc.) and it still doesn't move. I can only assume mover doesn't overwrite existing identical files or copies of the same folder, so they are either duplicates or I stuffed up somewhere.

Is it safe to delete these if they exist on the array and move them later, after I reformat the cache drive to ZFS, or should I just leave it as btrfs?

-

Hey All

Just want to thank the UNRAID team and LT family, as I finally made the leap of faith from 6.9.2-->6.12.4 with no real issues detected in the logs.

I performed the usual steps: backed up the flash drive, backed up my folders to an external 20TB HDD, updated all apps and plugins, stopped Docker, the VMs and the array, then proceeded with the update. Once rebooted, the array restarted and I enabled Docker and the VMs again.

Love the GUI and ZFS, but I do not know if I will convert my XFS drives to ZFS, even though all my drive sizes are the same. Since my Unraid server is just a basic NAS with some docker stuff, it all went smoothly. While snapshots are good, my external Rclone backup seems to do the trick for now.

I did lose my Docker Folders, so I see I need to remove the old plugin and grab the new folder view plugin. I assume it will not retain my old structure, but that gives me something to do while I play around. My previous 6.9.2 install was up for about 70 days without any issues since my last reboot, which was due to a power failure. I really need to get new batteries for my UPS.

Thank you again, and I do hope those having issues get them solved quickly.

PEACE
Kosti

-

[Guide] InvokeAI: A Stable Diffusion Toolkit - Docker

Kosti replied to mickr777's topic in Docker Containers

Great, thanks. Yep, seems it's detected:

[2023-07-26 21:18:04,801]::[InvokeAI]::INFO --> InvokeAI version 3.0.0
[2023-07-26 21:18:04,801]::[InvokeAI]::INFO --> Root directory = /home/invokeuser/userfiles
[2023-07-26 21:18:04,801]::[InvokeAI]::DEBUG --> Internet connectivity is True
[2023-07-26 21:18:05,101]::[InvokeAI]::DEBUG --> Config file=/home/invokeuser/userfiles/configs/models.yaml
[2023-07-26 21:18:05,101]::[InvokeAI]::INFO --> GPU device = cuda NVIDIA GeForce RTX 2070
[2023-07-26 21:18:05,101]::[InvokeAI]::DEBUG --> Maximum RAM cache size: 6.0 GiB
[2023-07-26 21:18:05,104]::[InvokeAI]::INFO --> Scanning /home/invokeuser/userfiles/models for new models
[2023-07-26 21:18:05,303]::[InvokeAI]::INFO --> Scanned 0 files and directories, imported 0 models
[2023-07-26 21:18:05,303]::[InvokeAI]::INFO --> Model manager service initialized
INFO: Application startup complete.

-
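The startup log line `GPU device = cuda NVIDIA GeForce RTX 2070` is the one that shows which device the container picked. A minimal Python sketch for pulling that line out of a saved container log; the line format is taken from the log posted in this thread, and `gpu_device` is a hypothetical helper name, not an InvokeAI function:

```python
import re

# Two lines copied from the posted startup log.
LOG = """\
[2023-07-26 21:18:05,101]::[InvokeAI]::INFO --> GPU device = cuda NVIDIA GeForce RTX 2070
[2023-07-26 21:18:05,101]::[InvokeAI]::DEBUG --> Maximum RAM cache size: 6.0 GiB
"""

def gpu_device(log_text):
    """Return (backend, device_name) from the 'GPU device =' line, or None if absent."""
    match = re.search(r"GPU device = (\S+)(?: (.*))?", log_text)
    if match is None:
        return None
    name = match.group(2) or ""
    # Drop a trailing colour-reset code if the log was captured with ANSI colours.
    name = re.sub(r"(\x1b)?\[0m$", "", name).strip()
    return match.group(1), name

print(gpu_device(LOG))
```

A backend of `cuda` with the card's name means the GPU was detected at startup; it does not by itself prove a given generation ran on it.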

[Guide] InvokeAI: A Stable Diffusion Toolkit - Docker

Kosti replied to mickr777's topic in Docker Containers

Hey Buddy

Just wanted to say thank you for creating this! I have just installed it as per your guide. For a while I couldn't figure out why my docker was filling up, but after a few spaceinvader docker videos (this guy is a rock star) I was able to complete the install and launch the container. Woot woot!

I haven't generated anything yet. Just wondering how to confirm the GPU is being used, as I have an Nvidia GeForce RTX 2070.

Also, are there any good guides for using prompts, including negative prompts, and for which model to use? I am a total noob, so still finding my way around.

Again, thank you for this!

PEACE
Kosti

-

Hey All

Sorry, this is an old thread, but I was setting up the backup script with email notifications and couldn't get Gmail working, as "Less secure app access" is no longer supported by Google and I don't want to use 2FA on the email account at the moment.

Is there an alternative for getting email notifications via SMTP? I tried Outlook mail, but this also failed for me.

TIA

PEACE
Kosti

-

Automated Backup on external HDD when device is connected via USB?

Kosti replied to Tracker's topic in General Support

Hey All,

I know this is an old thread, however I want to back up the entire share content of my Unraid server to a USB disk. Can I copy and paste this script by @Tracker and just modify the directory and folder names? And do I need to name my unassigned drive HDDBackup Backup to match this line in the above script?

logger Unmounting Ext. HDDBackup Backup -t $PROG_NAME

Let me know

PEACE
Kosti

-

In prep for the upgrade, I am about to back up the Unraid server content, and I have just run the upgrade assist app (awesome app). Here are the results:

Disclaimer: This script is NOT definitive. There may be other issues with your server that will affect compatibility.

Current unRaid Version: 6.9.2 Upgrade unRaid Version: 6.12.0-rc5

Checking for plugin updates
OK: All plugins up to date

Checking for plugin compatibility
Issue Found: ca.cfg.editor.plg is deprecated with 6.12.0-rc5. It is recommended to uninstall this plugin.
Issue Found: docker.folder.plg is not compatible with 6.12.0-rc5. It is HIGHLY recommended to uninstall this plugin. A fork attempting to keep this plugin running on 6.10.0 is now available. See also the support thread for more details
Issue Found: NerdPack.plg is not compatible with 6.12.0-rc5. It is HIGHLY recommended to uninstall this plugin.
OK: All plugins are compatible

Checking for extra parameters on emhttp
OK: emhttp command in /boot/config/go contains no extra parameters

Checking for disabled disks
OK: No disks are disabled

Checking installed RAM
OK: You have 4+ Gig of memory

Checking flash drive
OK: Flash drive is read/write

Checking for valid NETBIOS name
OK: NETBIOS server name is compliant.

Checking for ancient version of dynamix.plg
OK: Dynamix plugin not found

Checking for VM MediaDir / DomainDir set to be /mnt
OK: VM domain directory and ISO directory not set to be /mnt

Checking for mover logging enabled
OK: Mover logging not enabled

Checking for reserved name being used as a user share
OK: No user shares using reserved names were found

Checking for extra.cfg
OK: /boot/config/extra.cfg does not exist

Issues have been found with your server that may hinder the OS upgrade. You should rectify those problems before upgrading

So my transition shouldn't be that bad once I sort out the plugins that have potential issues. Nice work, LT team.

QQ: Is it OK to go through RC releases before a stable release is offered? Any concerns? I know some do this on non-prod servers, but I only have one 😛

PEACE
Kosti

-

Hey All

Quick questions, apologies in advance if this is not the correct place to ask.

I am on 6.9.2 and never needed to make changes until I recently added larger storage capacity and discovered I'm miles behind. Wow, ZFS, look at you Unraid! Well done LT.

Right now I use the unRAID array with an optional "Cache"; the array drives are all XFS and the cache drives are btrfs.

Apart from backing up the USB flash drive, are there any other gotchas before I dive into 6.12 stable when it's released? My plan is to go straight to 6.12?

PEACE
Kosti

-

I will need to confirm. Again, thanks for the heads up buddy!

Phew, checked the FS: all XFS on my data drives, with the cache drives using btrfs.

OK, I kicked off a new preclear. It's about 50% in with no major concerns so far, only a few temp alerts reaching 46 deg C. Fingers crossed.

-

OK, so perhaps I should uninstall this plugin and use Unassigned Devices Preclear as mentioned at the top of the page. Facepalm to self.

Is the error related to the plugin, and is the drive OK? I will remove the old one, run the new plugin and come back in about 20+ hrs.

PEACE
Kosti

-

Damn, it's been ages since I touched anything in Unraid; I initially set it up and added the preclear under Tools - Disk Utilities.

Apologies for the noobness. I assume I am out of date with the tool; perhaps this is the cause, and maybe I'm using deprecated apps? How can I confirm?

EDIT - I believe it's from gfjardim

Also have a warning or attention stating

Checked my Unraid version: 6.9.2 2021-04-07

Hope this helps

PEACE
Kosti

-

Wow, it's been a while. So I ran a preclear on a 20TB drive just delivered from Amazon, but it hit some error. I'm not sure if it's the drive or something within my setup, as I also had a parity check running at the same time I kicked off the preclear.

Here is the log from the preclear icon. As I only ran 1 pass, how do I dig deeper to find out the cause?

May 02 19:30:38 preclear_disk_ZVT8CP1P_23673: Pre-Read: dd output: 9534201+0 records out
May 02 19:30:38 preclear_disk_ZVT8CP1P_23673: Pre-Read: dd output: 19994668695552 bytes (20 TB, 18 TiB) copied, 97922.6 s, 204 MB/s
May 02 19:30:38 preclear_disk_ZVT8CP1P_23673: Pre-Read: dd output: 9534916+0 records in
May 02 19:30:38 preclear_disk_ZVT8CP1P_23673: Pre-Read: dd output: 9534915+0 records out
May 02 19:30:38 preclear_disk_ZVT8CP1P_23673: Pre-Read: dd output: 19996166062080 bytes (20 TB, 18 TiB) copied, 97935 s, 204 MB/s
May 02 19:30:38 preclear_disk_ZVT8CP1P_23673: Pre-Read: dd output: 9535610+0 records in
May 02 19:30:38 preclear_disk_ZVT8CP1P_23673: Pre-Read: dd output: 9535609+0 records out
May 02 19:30:38 preclear_disk_ZVT8CP1P_23673: Pre-Read: dd output: 19997621485568 bytes (20 TB, 18 TiB) copied, 97947.3 s, 204 MB/s
May 02 19:30:38 preclear_disk_ZVT8CP1P_23673: Pre-Read: dd output: 9536316+0 records in
May 02 19:30:38 preclear_disk_ZVT8CP1P_23673: Pre-Read: dd output: 9536315+0 records out
May 02 19:30:38 preclear_disk_ZVT8CP1P_23673: Pre-Read: dd output: 19999102074880 bytes (20 TB, 18 TiB) copied, 97959.6 s, 204 MB/s
May 02 19:30:38 preclear_disk_ZVT8CP1P_23673: Pre-Read: dd output: 9537012+0 records in
May 02 19:30:38 preclear_disk_ZVT8CP1P_23673: Pre-Read: dd output: 9537011+0 records out
May 02 19:30:38 preclear_disk_ZVT8CP1P_23673: Pre-Read: dd output: 20000561692672 bytes (20 TB, 18 TiB) copied, 97971.9 s, 204 MB/s
May 02 19:30:38 preclear_disk_ZVT8CP1P_23673: Pre-Read: dd output: 9537023+1 records in
May 02 19:30:38 preclear_disk_ZVT8CP1P_23673: Pre-Read: dd output: 9537023+1 records out
May 02 19:30:38 preclear_disk_ZVT8CP1P_23673: Pre-Read: dd output: 20000588955136 bytes (20 TB, 18 TiB) copied, 97972.1 s, 204 MB/s
May 02 19:30:38 preclear_disk_ZVT8CP1P_23673: Pre-read: pre-read verification failed!
May 02 19:30:38 preclear_disk_ZVT8CP1P_23673: S.M.A.R.T.: Error:
May 02 19:30:38 preclear_disk_ZVT8CP1P_23673: S.M.A.R.T.:
May 02 19:30:38 preclear_disk_ZVT8CP1P_23673: S.M.A.R.T.: ATTRIBUTE INITIAL NOW STATUS
May 02 19:30:38 preclear_disk_ZVT8CP1P_23673: S.M.A.R.T.: Reallocated_Sector_Ct 0 0 -
May 02 19:30:38 preclear_disk_ZVT8CP1P_23673: S.M.A.R.T.: Power_On_Hours 0 27 Up 27
May 02 19:30:38 preclear_disk_ZVT8CP1P_23673: S.M.A.R.T.: Reported_Uncorrect 0 0 -
May 02 19:30:38 preclear_disk_ZVT8CP1P_23673: S.M.A.R.T.: Airflow_Temperature_Cel 26 51 Up 25
May 02 19:30:38 preclear_disk_ZVT8CP1P_23673: S.M.A.R.T.: Current_Pending_Sector 0 0 -
May 02 19:30:38 preclear_disk_ZVT8CP1P_23673: S.M.A.R.T.: Offline_Uncorrectable 0 0 -
May 02 19:30:38 preclear_disk_ZVT8CP1P_23673: S.M.A.R.T.: UDMA_CRC_Error_Count 0 0 -
May 02 19:30:38 preclear_disk_ZVT8CP1P_23673: S.M.A.R.T.: SMART overall-health self-assessment test result: PASSED
May 02 19:30:38 preclear_disk_ZVT8CP1P_23673: error encountered, exiting...

Not sure what other info is needed. Please let me know if there is something I need to worry about, or whether I should re-run the preclear now that the parity check has finished.

PEACE
Kosti
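As a quick sanity check on the log, the final dd figures can be recomputed by hand. This is only arithmetic on the numbers dd printed (dd's MB is 10^6 bytes); it says nothing about why the verification step failed:

```python
# Figures copied from the last "dd output" line in the preclear log.
bytes_read = 20_000_588_955_136
seconds = 97_972.1

mb_per_s = bytes_read / seconds / 1e6   # dd reports decimal megabytes
hours = seconds / 3600

print(f"{mb_per_s:.0f} MB/s over {hours:.1f} h")
```

The result agrees with the 204 MB/s that dd reported, and the roughly 27 hours of reading lines up with the Power_On_Hours value of 27 in the S.M.A.R.T. table, so the pre-read appears to have covered the whole disk at a steady rate before the failure was flagged.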

-

OK, success!

Perhaps my issue was with the file name. I had copied the file using Krusader in a docker, but when I looked at it with MC (Midnight Commander) it was named ._au-syd.prod.surfshark_openvpn_udp.ovpn

So after I edited the file, I created a new one named au-syd_surfshark_openvpn_udp.ovpn and moved the "remote" line, and qbittorrent is now working. Yeah!

However, there are some errors in the log file that I was hoping to confirm how to resolve, in particular the last line, the Options error:

2022-01-24 08:23:29,552 DEBG 'start-script' stdout output:
[info] Starting OpenVPN (non daemonised)...
2022-01-24 08:23:29,738 DEBG 'start-script' stdout output:
2022-01-24 08:23:29 DEPRECATED OPTION: --cipher set to 'AES-256-CBC' but missing in --data-ciphers (AES-256-GCM:AES-128-GCM). Future OpenVPN version will ignore --cipher for cipher negotiations. Add 'AES-256-CBC' to --data-ciphers or change --cipher 'AES-256-CBC' to --data-ciphers-fallback 'AES-256-CBC' to silence this warning.
2022-01-24 08:23:29,738 DEBG 'start-script' stdout output:
2022-01-24 08:23:29 WARNING: file 'credentials.conf' is group or others accessible
2022-01-24 08:23:29,738 DEBG 'start-script' stdout output:
2022-01-24 08:23:29 WARNING: --ping should normally be used with --ping-restart or --ping-exit
2022-01-24 08:23:29,818 DEBG 'start-script' stdout output:
2022-01-24 08:23:29 WARNING: 'link-mtu' is used inconsistently, local='link-mtu 1633', remote='link-mtu 1581'
2022-01-24 08:23:29 WARNING: 'auth' is used inconsistently, local='auth SHA512', remote='auth [null-digest]'
2022-01-24 08:23:30 Options error: Unrecognized option or missing or extra parameter(s) in [PUSH-OPTIONS]:7: block-outside-dns (2.5.5)

Also one more basic question: do I need to open port 6881 on my home ISP router? Previously I've not needed to do this, but I assume I do. However, I do not need 8118 and 8585 open, right?

Cheers
Kosti
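Files whose names start with `._` are typically AppleDouble metadata sidecars written by macOS, not real configs; whether that stray file was what tripped the container here is an assumption. A minimal Python sketch that lists the `.ovpn` files in a folder which are not `._` sidecars and do contain a `remote` line. `usable_ovpn_files` is a hypothetical helper, not part of the binhex container, and the example folder path is taken from the container log:

```python
from pathlib import Path

def usable_ovpn_files(config_dir):
    """Return names of .ovpn files that are not '._' sidecars and have a 'remote' line."""
    good = []
    for path in sorted(Path(config_dir).glob("*.ovpn")):
        if path.name.startswith("._"):
            continue  # metadata sidecar, not an OpenVPN config
        lines = path.read_text(errors="replace").splitlines()
        # Match the 'remote' directive itself, not 'remote-random' or 'remote-cert-tls'.
        if any(line.split()[:1] == ["remote"] for line in lines):
            good.append(path.name)
    return good

if __name__ == "__main__":
    # Folder name as it appears inside the container; adjust to the host path.
    print(usable_ovpn_files("/config/openvpn"))
```

If the folder does not exist the function simply returns an empty list, so it is safe to run against a guess at the path.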

-

Thanks @wgstarks Let me try and test Cheers Kosti

-

Anyone able to decipher this error? I am trying to use qbittorrent with VPN but no luck. The log file shows:

https://hub.docker.com/u/binhex/
2022-01-23 11:18:04.075528 [info] Host is running unRAID
2022-01-23 11:18:04.088876 [info] System information Linux Unraid #1 SMP Wed Apr 7 08:23:18 PDT 2021 x86_64 GNU/Linux
2022-01-23 11:18:04.105725 [info] OS_ARCH defined as 'x86-64'
2022-01-23 11:18:04.120779 [info] PUID defined as '99'
2022-01-23 11:18:04.136815 [info] PGID defined as '100'
2022-01-23 11:18:04.451208 [info] UMASK defined as '000'
2022-01-23 11:18:04.465584 [info] Permissions already set for '/config'
2022-01-23 11:18:04.480955 [info] Deleting files in /tmp (non recursive)...
2022-01-23 11:18:04.499044 [info] VPN_ENABLED defined as 'yes'
2022-01-23 11:18:04.514234 [info] VPN_CLIENT defined as 'openvpn'
2022-01-23 11:18:04.528900 [info] VPN_PROV defined as 'custom'
2022-01-23 11:18:04.546205 [info] OpenVPN config file (ovpn extension) is located at /config/openvpn/au-syd.prod.surfshark_openvpn_udp.ovpn
2022-01-23 11:18:04.567833 [crit] VPN configuration file /config/openvpn/au-syd.prod.surfshark_openvpn_udp.ovpn does not contain 'remote' line, showing contents of file before exit...

Content of the .ovpn file:

client
dev tun
proto udp
remote au-syd.prod.surfshark.com 1194
resolv-retry infinite
remote-random
nobind
tun-mtu 1500
tun-mtu-extra 32
mssfix 1450
persist-key
persist-tun
ping 15
ping-restart 0
ping-timer-rem
reneg-sec 0
remote-cert-tls server
auth-user-pass
#comp-lzo
verb 3
pull
fast-io
cipher AES-256-CBC
auth SHA512

-

duplicate post

-

sorry wrong thread

-

Bluetooth and USB Disconnect Error in Log - Guidance Required

Kosti replied to Kosti's topic in General Support

Update - Preclear shows as completed successfully, I think:

Dec 27 09:26:12 Medusa preclear_disk_3551483156325046[20654]: Post-Read: progress - 90% verified @ 119 MB/s
Dec 27 12:33:38 Medusa preclear_disk_3551483156325046[20654]: Post-Read: dd - read 12000105070592 of 12000105070592 (0).
Dec 27 12:33:38 Medusa preclear_disk_3551483156325046[20654]: Post-Read: elapsed time - 21:16:26
Dec 27 12:33:38 Medusa preclear_disk_3551483156325046[20654]: Post-Read: dd exit code - 0
Dec 27 12:33:38 Medusa preclear_disk_3551483156325046[20654]: Post-Read: post-read verification completed!
Dec 27 12:33:39 Medusa preclear_disk_3551483156325046[20654]: S.M.A.R.T.: Cycle 1
Dec 27 12:33:39 Medusa preclear_disk_3551483156325046[20654]: S.M.A.R.T.:
Dec 27 12:33:39 Medusa preclear_disk_3551483156325046[20654]: S.M.A.R.T.: ATTRIBUTE INITIAL NOW STATUS
Dec 27 12:33:39 Medusa preclear_disk_3551483156325046[20654]: S.M.A.R.T.: Reallocated_Sector_Ct 0 0 -
Dec 27 12:33:39 Medusa preclear_disk_3551483156325046[20654]: S.M.A.R.T.: Power_On_Hours 84 125 Up 41
Dec 27 12:33:39 Medusa preclear_disk_3551483156325046[20654]: S.M.A.R.T.: Temperature_Celsius 38 38 -
Dec 27 12:33:39 Medusa preclear_disk_3551483156325046[20654]: S.M.A.R.T.: Reallocated_Event_Count 0 0 -
Dec 27 12:33:39 Medusa preclear_disk_3551483156325046[20654]: S.M.A.R.T.: Current_Pending_Sector 0 0 -
Dec 27 12:33:39 Medusa preclear_disk_3551483156325046[20654]: S.M.A.R.T.: Offline_Uncorrectable 0 0 -
Dec 27 12:33:39 Medusa preclear_disk_3551483156325046[20654]: S.M.A.R.T.: UDMA_CRC_Error_Count 0 0 -
Dec 27 12:33:39 Medusa preclear_disk_3551483156325046[20654]: S.M.A.R.T.: SMART overall-health self-assessment test result: PASSED
Dec 27 12:33:39 Medusa preclear_disk_3551483156325046[20654]: Cycle: elapsed time: 41:08:31
Dec 27 12:33:39 Medusa preclear_disk_3551483156325046[20654]: Preclear: total elapsed time: 41:08:32

BUT the log entries below worry me a fair bit. Can anyone please tell me why this is occurring and whether it is normal?

Dec 27 05:35:57 Medusa kernel: usb 1-14: USB disconnect, device number 10
Dec 27 05:35:57 Medusa kernel: usb 1-14: new full-speed USB device number 11 using xhci_hcd
Dec 27 05:35:57 Medusa kernel: input: MSI MYSTIC LIGHT as /devices/pci0000:00/0000:00:14.0/usb1/1-14/1-14:1.0/0003:1462:7D17.0006/input/input9
Dec 27 05:35:57 Medusa kernel: hid-generic 0003:1462:7D17.0006: input,hiddev96,hidraw2: USB HID v1.10 Device [MSI MYSTIC LIGHT ] on usb-0000:00:14.0-14/input0

Any idea, chaps? I really want to start using this new Unraid build but fear it's not stable enough.

PEACE
Kosti