-

Posts

226 -

Everything posted by moose

-

plugin to measure kilowatt-hours (kWh) that a unRAID server consumes

moose replied to moose's topic in Plugin System

Thank you @Random.Name, I will check this out.

-

Does anyone know if a plugin (or docker container) exists that can measure the kilowatt-hours (kWh) that an unRAID server consumes? This would be helpful to measure the average cost to operate over various periods (hour, day, week, month, year, custom). In unRAID "Settings/UPS Settings" I see a LOADPCT variable that is dynamically measured and updated roughly every few seconds. I think if this were recorded with respect to time and calibrated against my known UPS capacity, kilowatt-hours could be calculated for user-defined time intervals. Users could input their cost per kWh and get a good estimate of what it costs them to operate their unRAID server. I think you can buy a third-party device for this, but why not make it free for all in unRAID? If I knew how to develop this I would try. Does anyone know if something already exists, or how someone could create this?
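To make the idea concrete, here is a rough Python sketch of the calculation, not an existing plugin. It assumes you have already logged (timestamp, LOADPCT) pairs somewhere and that you know the UPS's rated output in watts; the names `energy_kwh`, `operating_cost` and `ups_rated_watts` are made up for illustration. Note that LOADPCT reflects everything plugged into the UPS, so the result only approximates the server's own draw if the server is the only load.

```python
from datetime import datetime, timedelta


def energy_kwh(samples, ups_rated_watts):
    """Estimate energy in kWh from (datetime, load_percent) samples.

    samples must be sorted by time. ups_rated_watts is the UPS's rated
    output power (an assumption the user supplies, not read from unRAID).
    Uses the trapezoidal rule: average load between two readings
    multiplied by the time between them.
    """
    total_wh = 0.0
    for (t0, pct0), (t1, pct1) in zip(samples, samples[1:]):
        hours = (t1 - t0).total_seconds() / 3600.0
        avg_watts = ups_rated_watts * (pct0 + pct1) / 2.0 / 100.0
        total_wh += avg_watts * hours
    return total_wh / 1000.0


def operating_cost(kwh, price_per_kwh):
    """Cost for the given energy at a user-supplied price per kWh."""
    return kwh * price_per_kwh


if __name__ == "__main__":
    # Made-up data: a constant 20% load on a 900 W UPS, sampled hourly for 10 hours.
    start = datetime(2022, 1, 1)
    samples = [(start + timedelta(hours=h), 20.0) for h in range(11)]
    kwh = energy_kwh(samples, ups_rated_watts=900)
    print(f"{kwh:.3f} kWh, cost {operating_cost(kwh, 0.15):.2f}")
```

Shorter sampling intervals track load swings more closely; the trapezoidal rule simply assumes the load changes linearly between two readings.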

-

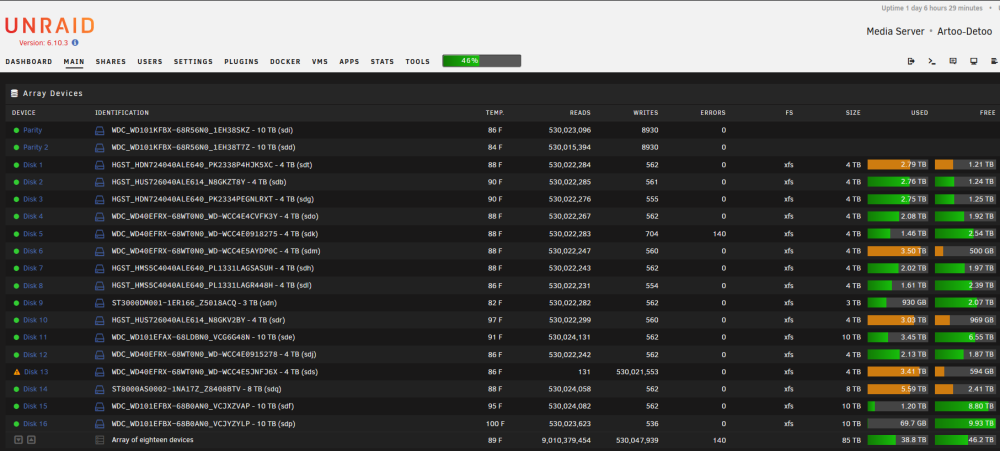

Thank you JorgeB. I ran extended tests on disk 13 again and it started failing, disk 5 also started throwing read errors and was disabled. Disk 5 also failed extended tests. I ended up replacing both disk 5 and 13. The data rebuild completed on the new 5 and 13 disks. I am up and running with no issues! Thank you for the help!!

-

[SOLVED] unable to resume or cancel paused disk rebuild

moose replied to moose's topic in General Support

Will do. Thank you JorgeB and trurl for your help! Much appreciated! I ran another extended SMART test on disk 13 and it failed, so I ended up replacing both disk 5 and 13. The data rebuild on both disks completed successfully. The problem is fixed and I'm up and running again! Thank you!!

-

[SOLVED] unable to resume or cancel paused disk rebuild

moose replied to moose's topic in General Support

The extended test on disk 5 failed. Attached are new diagnostics with the array started. artoo-detoo-diagnostics-20220915-2230.zip

-

[SOLVED] unable to resume or cancel paused disk rebuild

moose replied to moose's topic in General Support

Yes, all was done with the system on. I enabled SMART attributes 1 and 200 for all WD disks, or attribute 1 alone where a WD disk did not report attribute 200. I was able to do a clean shutdown through the GUI. I rebooted and disk 5 is disabled (disk 13 is emulated and ready for data rebuild). I ran a short self-test on disk 5 and it passed; I am now running an extended test on disk 5. Attached are new diagnostics. I will wait for the extended test to complete, and for advice, before proceeding. Array is not started. artoo-detoo-diagnostics-20220915-2103.zip Edit: The extended test failed for disk 5. The SMART report is attached. artoo-detoo-smart-20220915-2133.zip

-

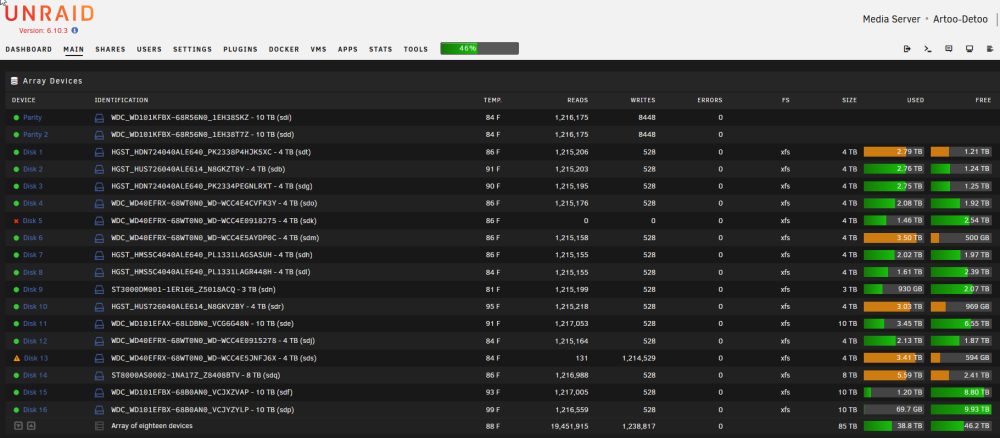

I was rebuilding a disabled disk (#13) and partway into the rebuild I noticed another data disk (#5) with read errors. I paused the rebuild, ensured the breakout cable was seated in the disk 5 cage, and also pulled disk 5 from the cage and re-seated it. Now when I attempt to resume or cancel the disk rebuild, nothing happens; the rebuild operation remains paused. I've attached diagnostics and a screenshot of the GUI. Any recommendations? artoo-detoo-diagnostics-20220914-2109.zip

-

Sorry for the delay in responding. Attached are diagnostics. I did not start the array, just booted and generated diagnostics. (Disk 13 is disabled. Disk 3 was also having issues and I thought it was also disabled when I shut down the server but now disk 3 shows as ok.) artoo-detoo-diagnostics-20220912-2030.zip

-

I didn't know Unraid won't disable more disks than there are parity drives. Knowing this would have kept me from panicking and shutting it down. @JorgeB I didn't save diagnostics before I shut down the server. If Unraid won't disable more disks than there are parity drives, I can boot the server and produce diagnostics. The server state should be roughly the same as it was when the 2 data disks were disabled due to read errors, correct?

-

I have an array with 2 parity disks and 16 data disks. One data disk had read errors and became disabled. I performed short/long tests on the disk and it was fine, so I rebuilt the data disk, then moved the server to a new location due to a home remodel. Everything was fine for several days, then 2 different data disks had read errors and were disabled. I shut the server down, thinking cabling may be the issue, and I was afraid a 3rd data disk might have read errors and get disabled. The server is still shut down. I thought I would recheck all cabling before restarting and risking a 3rd data disk being disabled before I can rebuild the 2 data disks that are currently disabled. I have an LSI controller (flashed to IT mode) and SAS breakout cables. My question is: with 2 parity disks, what happens if more than 2 data disks get disabled due to read errors (which might be cabling)? Since seemingly the only way to correct a disabled disk is to rebuild it, 2 parity disks and 3 or more disabled data disks would seem to mean data loss?

-

Thank you for the clarification jonathanm!

-

FWIW, I have the same scenario as strike. Using the binhex preclear docker, I successfully precleared a 4TB and 8TB drive. I stopped the array, assigned the new drives and started the array. Both drives are "clearing" and should be complete in ~ 24 hours. I would have thought unRAID would have known the drives were already precleared. Anyway it's just going to take a bit longer for the second "clearing" cycle to complete, no big deal for now. Merry Christmas to all! edit: Another oddity on the second clearing topic. A few days earlier, I precleared 2 new 10TB drives using the binhex preclear docker, preclear worked fine. The 2 10TB drives were then assigned as parity replacements (1 10 TB at a time for a dual parity configuration). I didn't have any issue with unRAID clearing these drives for a second time, meaning unRAID did not clear these 2 10TB drives a second time when I added them as new parity drives and rebuilt/re-synced array parity. I'm also on 6.8 stable.

-

Thanks all!

-

Would you mind providing a link to that bug report? Honestly I tried to find it on my own but could not...

-

[Support] Linuxserver.io - Unifi-Controller

moose replied to linuxserver.io's topic in Docker Containers

Thanks j0nnymoe for enlightening me!

-

[Support] Linuxserver.io - Unifi-Controller

moose replied to linuxserver.io's topic in Docker Containers

Is anyone running v5.10 or is the general recommendation to go to v5.9 max? I'm running v5.9.29 and haven't had any issues so far...

-

@JonMikeIV I also upgraded from 6.6.7 to 6.7.0 but had no hanging issue. I'm not sure if this makes a difference, but I always do this before starting the unRAID OS upgrade and have never had it hang:

1. Stop VMs and Dockers
2. Stop Array, confirming it stops successfully
3. Then upgrade OS
4. Then reboot

I know this doesn't really help your current situation but just wondered if it might explain the hanging.

-

Updated from 6.6.7, no issues. Thank you for the excellent work!

-

[SOLVED] Drive Spindowns Affecting Media Playback?

moose replied to Auggie's topic in General Support

Auggie, I have had issues similar to those you describe. Disk access seemed to freeze at random times, sometimes perhaps at intervals, although I didn't time them to see if there was a pattern. It would happen while watching movies (freezing for 20-60 seconds or so), playing audio, or reading/writing files to the array. I'm not sure exactly when this started, but I know it's been happening for a year or more. At some point in the past this didn't occur, but I don't know the exact time frame or what unRAID release I was on at the time. I have a computer adjacent to my unRAID server, and when using that computer I noticed that whenever the problem occurred, I could see all the drives being accessed one at a time (the LED illuminating for each drive on my Supermicro 5-in-3 cages). Once that drive access stopped, the problem would go away and the array would be responsive again. I've searched this forum many times with various keywords and was not able to find anything until I searched "scanning disks freeze" and this thread was at the top of the results. I also had the Dynamix Cache Dirs plugin installed but wasn't using it. I bet you are correct and this is the root cause. I've uninstalled it and will monitor for the freezes and report back whether or not they occur. Thank you for posting this.

-

Can't connect QNAP or Win10 box to Unraid server via SMB

moose replied to Coolsaber57's topic in General Support

I found and fixed the unRAID SMBv1 access issue via this article: https://www.techcrumble.net/2018/03/you-cant-access-this-shared-folder-because-your-organizations-security-policies-block-unauthenticated-guest-access/ I had followed BobPhoenix's advice to enable "SMB 1.0/CIFS File Sharing Support" and also updated my hosts file per your earlier suggestion; the step in the linked article was the last one I needed. It was a Win 10 "rookie mistake" on my part. (I'm new to Win 10.) Thanks for your help Frank and LT team!

-

Can't connect QNAP or Win10 box to Unraid server via SMB

moose replied to Coolsaber57's topic in General Support

Thanks Frank. The hosts modification doesn't work, and I had already tried your other suggestions to no avail. I'll keep investigating, researching and trying other methods. I think MS changed something with Win 10 version 1809 which might prevent earlier workarounds. If/when I find a solution, I'll report back to this thread.

-

Can't connect QNAP or Win10 box to Unraid server via SMB

moose replied to Coolsaber57's topic in General Support

I have a new Win 10 2019 LTSC 1809 install on a laptop. I've enabled SMBv1 per BobPhoenix above, browsed his links and searched Google for other solutions. Nothing is working; I cannot browse my unRAID server from my new Win 10 laptop. This SMB1.0 quirk between Linux and newer versions of Win 10 seems unsolvable for me. Too bad we can't use SMBv2 or SMBv3 in unRAID, seems odd this isn't resolved/updated in Linux... There have to be other unRAID users with Win 10 1809 who have a workaround. Does anyone have any other ideas to try?

-

Merry Christmas and Happy New Year to everyone!! :) Thank you to the Lime Tech team and community for all you do. Enjoy your time with family and friends!

-

Upgraded from 6.5.3 to 6.6.0 with no issues. New GUI looks great! Thank you for all the hard work!! :)

-

Hi spants, I have a question about the comment/detail from yippy3000: does this mean that after running the docker (as it now exists) for some time, the docker (pihole DNS server) becomes unusable/unresponsive? I ask because I installed the pihole docker and it seemed to work great for about a day or so, then all LAN devices were unable to resolve DNS. My remedy was to remove pihole. I really like the function/benefit/concept of pihole and would like to reinstall it and have it work as a docker. In other words, is there any issue with this docker, or are people successfully using it more or less indefinitely? (I'm guessing the docker is fine and it was perhaps some config tweak/change I made in that first day or so.)