bastl

Posts: 1267

Days Won: 3


Report Comments posted by bastl


-

Quick report on RC4. Issue still exists.

-

7 minutes ago, limetech said:

it's not an option we offer either.

I get the point, but QEMU with all its packages is part of Unraid, and "qemu-img convert" is even mentioned in the wiki. There is a lot of stuff people have to edit manually in files or do on the CLI because there isn't a UI option built into Unraid yet, and those are all options too.

To sum things up: for my use case, with a couple of VMs on the cache drive, I have to keep an eye on the size of the files. I only have a 500GB cache, and the "OPTION" to save 20-30% of space is always welcome 😉

-

@limetech Opening the web terminal in Firefox 69.0.3 works, but typing exit to end the session doesn't work anymore. It looks like it only resets/refreshes the session and clears the previous content of the terminal. Same in the Brave browser. I don't know if it's worth a separate bug report.

-

9 hours ago, limetech said:

Changed Priority to Minor

Just for my understanding, a bug that causes data loss isn't "urgent"?

-

I tested a lot of stuff and I think I found something. All the affected vdisks are compressed qcow2 files. If I convert them back to uncompressed qcow2 files, the error doesn't seem to appear and the vdisks don't get corrupted. Did something change in how the current QEMU build handles compressed qcow2 files, or is this a known issue already? Is it maybe an Unraid-only issue?

I used the following command to create the compressed files, in case someone wants to test it. This way I save nearly 20GB of space on a 100GB vdisk file with 55GB allocated; the compressed file is 35GB.

qemu-img convert -O qcow2 -c -p uncompressed_image.qcow2 compressed_image.qcow2
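For anyone who wants to reproduce this or go back to uncompressed files, here is a minimal Python sketch that builds both qemu-img commands: the compressing one above and the reverse conversion, which is the same command without -c. The helper names and file names are placeholders, it only prints the commands unless dry_run=False, and it assumes qemu-img is on the PATH. Only run a real conversion while the VM is shut down.

```python
import shlex
import subprocess


def qemu_img_convert_cmd(src: str, dst: str, compress: bool) -> list[str]:
    """Build a qcow2 -> qcow2 'qemu-img convert' command line.

    -O qcow2  output format
    -c        write compressed clusters (left out for the uncompressed copy)
    -p        show a progress bar
    """
    cmd = ["qemu-img", "convert", "-O", "qcow2"]
    if compress:
        cmd.append("-c")
    cmd += ["-p", src, dst]
    return cmd


def convert(src: str, dst: str, compress: bool, dry_run: bool = True) -> None:
    """Print the command (default) or run it. Only run it while the VM is shut down."""
    cmd = qemu_img_convert_cmd(src, dst, compress)
    if dry_run:
        print(shlex.join(cmd))
    else:
        subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # The compressing conversion from the post ...
    convert("uncompressed_image.qcow2", "compressed_image.qcow2", compress=True)
    # ... and the reverse (no -c) to get an uncompressed qcow2 back.
    convert("compressed_image.qcow2", "uncompressed_image.qcow2", compress=False)
```

Run as-is, it just prints the two command lines; the first matches the command above.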


-

Quick question to everyone having issues: is anyone with a Ryzen or TR4 system affected? I am on a first-gen TR4 and have never had any problems. Are only Intel systems affected, maybe because of the Spectre/Meltdown mitigations? Just an idea.

-

@coblck You have to edit DockerClient.php (in /usr/local/emhttp/plugins/dynamix.docker.manager/include) and change it as shown in the post I linked you:

nano /usr/local/emhttp/plugins/dynamix.docker.manager/include/DockerClient.php

Scroll down until you see "DOCKERUPDATE CLASS". Below it you should find "Step 4: Get Docker-Content-Digest header from manifest file". Change the following

from

'Accept: application/vnd.docker.distribution.manifest.v2+json',

to

'Accept: application/vnd.docker.distribution.manifest.list.v2+json,application/vnd.docker.distribution.manifest.v2+json',

Done.

The file is reset when the server restarts. If you don't feel confident editing a file, wait for the next Unraid update and ignore the Docker update notifications. If there is a real update for a container and you run it, it will still install.
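If you'd rather not scroll through nano, here is a minimal Python sketch that makes the same one-line change. It assumes a Python 3 interpreter is available on the server and that the line to replace appears exactly once in the file; patch_accept_header is a hypothetical helper name, not part of Unraid. It keeps a .bak copy and refuses to touch the file if it can't find exactly one matching line.

```python
import shutil
from pathlib import Path

# Path from the post above. The file is reset on reboot,
# so the change has to be reapplied after every restart.
DOCKER_CLIENT = Path(
    "/usr/local/emhttp/plugins/dynamix.docker.manager/include/DockerClient.php"
)

OLD = "'Accept: application/vnd.docker.distribution.manifest.v2+json',"
NEW = (
    "'Accept: application/vnd.docker.distribution.manifest.list.v2+json,"
    "application/vnd.docker.distribution.manifest.v2+json',"
)


def patch_accept_header(path: Path = DOCKER_CLIENT) -> bool:
    """Apply the Accept header change. Returns True if the file was modified."""
    text = path.read_text()
    if NEW in text:
        return False  # already patched, nothing to do
    count = text.count(OLD)
    if count != 1:
        # Don't guess which occurrence is the "Step 4" one; edit by hand instead.
        raise ValueError(f"expected exactly one matching line in {path}, found {count}")
    shutil.copy2(path, path.with_name(path.name + ".bak"))  # keep a backup copy
    path.write_text(text.replace(OLD, NEW))
    return True
```

Calling patch_accept_header() with no argument patches the file at the path above; running it a second time returns False instead of changing anything.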

-


6 hours ago, coblck said:

Hi, please may I have a little more help on this? What command do I use, or where do I find the file I need to update? Thanks in advance.

-

2 hours ago, coblck said:

Hi, what file do I need to update for the fix?

-

14 minutes ago, rsuplido said:

Anyone know if it was caused by the Community Applications plugin update on 8/27?

No. I had this issue before updating CA today.

-

2 minutes ago, j0nnymoe said:

FWIW, I checked our containers that I run on my own server; when updating them one by one, the update notification went away.

I was discussing this with @urbanracer34 last night and it seems it could be an error with the "update all containers" button.

After updating each one individually, check for updates again and all your containers will show updates as before. If you look at the output of the update window, you will see it pulls no data.

-

Same for me: all the LSIO containers show updates, but starting the update pulls no data.

-

Question: why does it only happen for users running Plex/Sonarr/Radarr? Those are the three I keep reading about. I use none of them and haven't had any issues so far.

-

11 hours ago, J89eu said:

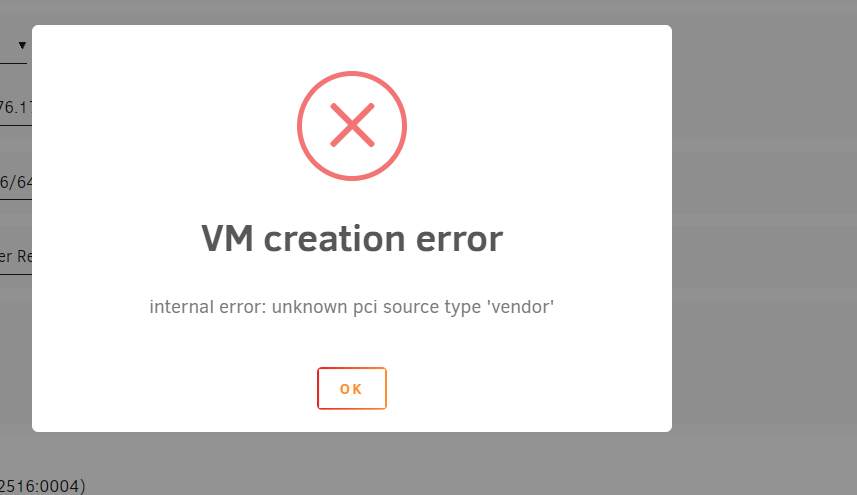

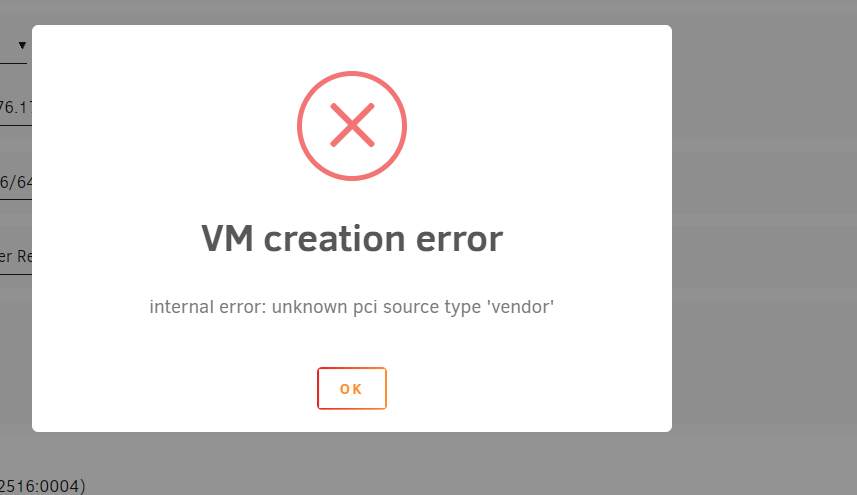

This is the usual error when you remove a USB device, for example a keyboard or mouse, that you have set up for passthrough in the VM settings. Plug the device back in, or remove the corresponding part from your XML:

<hostdev mode='subsystem' type='usb' managed='no'>
  <source>
    <vendor id='0x0424'/>
    <product id='0x2228'/>
  </source>
  <address type='usb' bus='0' port='1'/>
</hostdev>
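If you prefer to strip the stale entry programmatically, for example on a copy of the VM's XML taken from the XML view, a minimal sketch with Python's standard xml.etree.ElementTree could look like this. remove_usb_hostdev is a hypothetical helper; it matches on the vendor/product ids shown above and assumes the <hostdev> sits under <devices>, as in a normal libvirt domain definition. ElementTree drops comments and rewrites quoting, so review the result before pasting it back.

```python
import xml.etree.ElementTree as ET


def remove_usb_hostdev(domain_xml: str, vendor: str, product: str) -> str:
    """Return the domain XML without USB <hostdev> entries for vendor:product.

    Note: ElementTree drops XML comments and rewrites quoting/whitespace.
    """
    root = ET.fromstring(domain_xml)
    devices = root.find("devices")
    if devices is None:
        return domain_xml
    # findall() returns a list, so removing elements inside the loop is safe.
    for hostdev in devices.findall("hostdev"):
        if hostdev.get("type") != "usb":
            continue
        v = hostdev.find("source/vendor")
        p = hostdev.find("source/product")
        if v is not None and p is not None and (v.get("id"), p.get("id")) == (vendor, product):
            devices.remove(hostdev)
    return ET.tostring(root, encoding="unicode")
```

For the entry above you would call remove_usb_hostdev(xml_text, "0x0424", "0x2228"); any other passthrough devices stay untouched.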

-

Same for me. The update went fine and the server has been running for 4 days now without any issues so far.

-

Another thing that bugs me with the "de" keyboard layout is that "@" doesn't work most of the time; CTRL+ALT+Q mostly does nothing.

-

After a couple of days on RC7 (updated from RC6), everything looks OK. No errors on my side, and the DNS issues I had on RC6 haven't shown up again. For those who are having network issues, check your bridge settings. For me, an unused bridge that I had added for testing caused the issue on RC6; removing it fixed it.

-

A small piece of advice: try to separate the VMs from each other. Avoid giving the same cores to different VMs that run at the same time, or you will end up in the situations you saw before.

Let's say you have 8 cores. Limit your total number of running VMs to at most 7. Don't use core 0; Unraid itself always uses it to manage everything in the background. Also, try not to split a core and its hyperthread between 2 VMs: if you have a core plus its HT, give both to the same VM. Splitting them will work, but performance will drop drastically and you may end up with some weird errors.
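The rules above (leave core 0 to Unraid, never share a core between running VMs, keep a core and its hyperthread together) can be sketched as a small Python planner. This is only an illustration: plan_pinning and sibling_pairs are hypothetical names, and the sibling layout (logical CPU n paired with n + number of physical cores) is an assumption that holds on many but not all CPUs. Check your real pairs in the VM's CPU pinning section or in /sys/devices/system/cpu/cpuN/topology/thread_siblings_list first.

```python
def sibling_pairs(physical_cores: int) -> list[tuple[int, int]]:
    """ASSUMED layout: logical CPU n and n + physical_cores are hyperthread siblings.

    Verify on your own CPU (thread_siblings_list in sysfs, or the pairs shown
    when pinning CPUs to a VM) before using this.
    """
    return [(c, c + physical_cores) for c in range(physical_cores)]


def plan_pinning(physical_cores: int, cores_per_vm: list[int]) -> dict[str, list[int]]:
    """Give each running VM whole cores (core + HT sibling), never shared, never core 0."""
    pairs = sibling_pairs(physical_cores)[1:]  # core 0 and its sibling stay with Unraid
    needed = sum(cores_per_vm)
    if needed > len(pairs):
        raise ValueError(
            f"{needed} cores requested, only {len(pairs)} available without core 0"
        )
    plan: dict[str, list[int]] = {}
    next_pair = 0
    for vm_number, n in enumerate(cores_per_vm, start=1):
        cpus: list[int] = []
        for pair in pairs[next_pair:next_pair + n]:
            cpus.extend(pair)  # a core and its hyperthread go to the same VM
        plan[f"vm{vm_number}"] = sorted(cpus)
        next_pair += n
    return plan
```

With 8 cores, plan_pinning(8, [1] * 7) is the most that fits: seven single-core VMs on cores 1-7 with their siblings 9-15. Asking for an eighth VM raises an error instead of doubling up on a core.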

-

42 minutes ago, MorgothCreator said:

Virtual box is used in unRaid and Synology DSM, so the limitations are the same.

To sum things up, VirtualBox is NOT used in Unraid. Unraid uses QEMU/KVM, which is a type 1 hypervisor, whereas VirtualBox is a type 2 hypervisor. Those are two completely different things. 😉

-

Have you ever heard of nested virtualization and its limitations? Virtualizing something inside an already virtualized environment is NOT a good idea; you will face a lot of performance issues. Why use an extra layer of virtualization if you don't need to? To fool your machine into thinking it has more power than it actually has? That's not how it works!

-

Are you talking about Unraid, or are you using VirtualBox or Synology DSM? These are three fundamentally different software solutions.

-

I guess you have the wrong expectations here. Even if you don't see full utilisation of all cores, the CPU has to queue up the operations of all VMs, which introduces delays that can cause hiccups or lag. The dropped network connections you see can be one effect of that. Each packet sent or received across that NIC has to be processed by Unraid and handed to the correct VM or Docker container. When that processing queue is already filled up to a certain point, a packet can be dropped and, depending on the protocol, requested again, or in the worst case the device gets reset.

You will definitely see better performance on a newer platform with a higher core count, that's true, but even on an 8-core CPU, 11 VMs all running at the same time generate a lot of I/O operations. Sharing cores between VMs and Docker containers that run at the same time can and will affect the performance of every component and device those VMs and containers use. Separate everything as much as you can to reduce side effects like stutter or lag.

-

11 VMs on a dual-core CPU. Isn't that a bit too much?

-

7 minutes ago, bonienl said:

Your network configuration is wrong. Interface eth2 (br2) has the wrong gateway defined.

br0 (correct)

IPADDR[0]="192.168.1.206"
NETMASK[0]="255.255.255.0"
GATEWAY[0]="192.168.1.1"

br2 (wrong)

IPADDR[2]="20.20.20.10"
NETMASK[2]="255.255.255.0"
GATEWAY[2]="192.168.1.1"

Restarting the server may have given gateway[2] a lower (better) metric, effectively blackholing all your internet traffic.

I quoted another user; those diagnostics weren't mine. But like that user, I had a second bridge defined for a second physical NIC. I never plugged a cable into that NIC or used it for anything other than 2 VMs (pfSense, Windows), to test whether I could use that bridge to keep the Windows VM off my physical LAN and separate it completely from Unraid's network. Nothing else was set to use br2. I forgot to remove that bridge, which caused the issue above: somehow, with RC6, Unraid started routing traffic to that second bridge. I removed it and the FCP warnings about wrong DNS settings are gone.
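The mistake in the quoted config is that GATEWAY[2] points at an address outside br2's own subnet. A minimal Python sketch that flags this, assuming the KEY[index]="value" format quoted above; parse_cfg and gateway_problems are hypothetical helpers, not part of Unraid:

```python
import ipaddress
import re

# Matches assignments like IPADDR[2]="20.20.20.10" (format of the config quoted above).
ASSIGNMENT = re.compile(r'(\w+)\[(\d+)\]="([^"]*)"')


def parse_cfg(text: str) -> dict[int, dict[str, str]]:
    """Group KEY[index]="value" assignments by interface index."""
    cfg: dict[int, dict[str, str]] = {}
    for key, index, value in ASSIGNMENT.findall(text):
        cfg.setdefault(int(index), {})[key] = value
    return cfg


def gateway_problems(cfg: dict[int, dict[str, str]]) -> list[str]:
    """Flag interfaces whose GATEWAY lies outside their own IPADDR/NETMASK subnet."""
    problems = []
    for index, iface in sorted(cfg.items()):
        gateway = iface.get("GATEWAY")
        if not gateway or "IPADDR" not in iface or "NETMASK" not in iface:
            continue
        subnet = ipaddress.ip_interface(f"{iface['IPADDR']}/{iface['NETMASK']}").network
        if ipaddress.ip_address(gateway) not in subnet:
            problems.append(f"[{index}] gateway {gateway} is outside {subnet}")
    return problems
```

Fed the two blocks quoted above, it reports only interface 2: its gateway 192.168.1.1 is not inside 20.20.20.0/24, while br0's gateway is inside 192.168.1.0/24.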

6.8.0 RC1+RC4 corrupted QCOW2 vdisks on XFS! Warning "unraid qcow2_free_clusters failed: Invalid argument", probably due to compressed QCOW2 files


in Prereleases


On a single 500GB 960 EVO BTRFS-formatted NVMe, in a non-default share called "VMs" that is set as the default storage path in the VM manager ("/mnt/user/VMs/"). I didn't try it on the array or UD.