Report Comments posted by bastl

-

@jbartlett I've never experienced this issue on my overclocked 1950X. Windows VMs with and without passthrough, default template or tweaked settings: I've never noticed anything like what you describe. Maybe a temperature issue?! What cooling solution are you using?

-

20 hours ago, darthcircuit said:

Which means my pcie lanes are only running at 1x speed. That will be a problem.

FYI, starting with QEMU 4.0 only the naming changed. These custom arguments still work.

-

@darthcircuit 3-5 fps more or less? Come on 🤨 A 1080 Ti is the bare minimum for 4K, and 60 fps in most games on the highest settings isn't even reachable with it. If you have a high-refresh-rate monitor and want a good 4K experience, get a 2080 Ti 😂

-

33 minutes ago, darthcircuit said:

Even if 4.0.1 was patched to take the <qemu:commandline> option again, that would be better than nothing.

Starting with 6.8 RC1 I had to remove the extra QEMU command-line arguments, and I haven't noticed any decrease in graphics performance so far, not in RC1, RC4 or RC5.

-

39 minutes ago, Dataone said:

privacy.resistFingerprinting in about:config was the culprit. However, I really don't want to disable this and there's no way to add exceptions per domain.

Is it a global setting or is it handled differently in a private window?

-

7 minutes ago, nuhll said:

name changed and directory, mac adress changed also

Weird. I'm not sure what happens if you set up a VM with a name that already exists. Will Unraid prevent you from doing this, or will it mix things up? IDK. Did you restore the libvirt.img recently? It contains all the XML files.

-

@nuhll Keep in mind that when a VM is first created, its folder is named after the VM name you enter in the GUI. Renaming the VM later via XML or the GUI doesn't change the folder the vdisk is placed in. Are you sure your VM was named Debian and you never had a "Debian pfsense" VM?

So if you reuse an existing VM to install a new guest OS, let's say you switch from Debian to Windows, the folder name doesn't change 😁 If that's the issue you're talking about, it's not a real issue with Unraid. If the actual name of the VM is shown incorrectly in Unraid's VM list, that might be a problem.

-

I forgot to mention that I'm also affected by the "starting services" issue. Two scripts are triggered when the array starts, and both run successfully. One syncs an icon folder and one pings a Synology NAS.

-

Downgrading to QEMU 4.0.1 fixed my issue with qcow2 vdisk corruption and broken guest installs on XFS drives.

But switching Hyper-V on or off doesn't change anything. After saving, the setting reverts to "on".

-

Retested with RC5:

No issues in any of my tests. Different guests (Mint, PopOS, Win7, Win10) install without issues on XFS-formatted array drives. The cache also works without any problems so far.

The issue I had with compressed qcow2 vdisks is also gone. Existing compressed files can be used without getting corrupted, and newly created and compressed qcow2 files are also fine.

Let's hope they fix it in future QEMU versions.

-

@DZMM RC5 reverted from QEMU 4.1 back to 4.0.1.

-

1 hour ago, testdasi said:

I protest this decision! It is unacceptable that I can't use my niche password " ". 😂

Imagine rainbow tables full of empty passwords with only your year of birth at the end 😂

-

From what I understand, between 4.0 and 4.1 they added a couple of commits to improve qcow2 performance and to change how block data and metadata are passed through to the underlying file system. It looks like on devices with low IOPS some writes get dropped. Yesterday an install on an NVMe hung once. At the same time I had a script running in the background compressing and backing up all my vdisks from that NVMe. Lots of IO in the background and, ta-da, the error occurs. I wasn't able to test more today, but all of yesterday's tests on XFS array HDDs showed the same errors: guest installs aborted, or the installed guests were unbootable or corrupted. Mint, PopOS, Fedora, Windows: none were usable afterwards.

-

@jbartlett If you have the time, try it on a slower drive, maybe the array itself. It looks like an IO-related issue. Quickly set up a default Linux VM, click through the installer and see what happens. 😉

-

@testdasi Do you use extra commands to create your initial qcow2 vdisk, or do you create it directly via the UI? From all my testing and from reading the bug reports, in some situations these errors don't show up, for example on small vdisks or on fully preallocated disks. Or, in my case, if the cache option for the vdisk is set to cache='none' in the XML, the error doesn't happen either. The default is cache='writeback'.
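A minimal sketch of the cache change described above, assuming a trimmed, hypothetical libvirt domain XML (the VM name, source path and target device are illustrative, not copied from a real Unraid config). It uses Python's standard `xml.etree.ElementTree` to switch the `cache` attribute on the qcow2 disk's `<driver>` element; in practice you would make the same edit in Unraid's XML view or with `virsh edit`.

```python
import xml.etree.ElementTree as ET

# Hypothetical, trimmed libvirt domain XML; name and path are illustrative only.
DOMAIN_XML = """<domain type='kvm'>
  <name>Mint</name>
  <devices>
    <disk type='file' device='disk'>
      <driver name='qemu' type='qcow2' cache='writeback'/>
      <source file='/mnt/user/domains/Mint/vdisk1.img'/>
      <target dev='vda' bus='virtio'/>
    </disk>
  </devices>
</domain>"""

# Cache modes accepted by libvirt's <driver cache='...'> attribute.
LIBVIRT_CACHE_MODES = {"default", "none", "writethrough", "writeback", "directsync", "unsafe"}


def set_qcow2_cache(domain_xml: str, mode: str) -> str:
    """Return a copy of the domain XML with cache=<mode> on every qcow2 disk driver."""
    if mode not in LIBVIRT_CACHE_MODES:
        raise ValueError(f"unknown cache mode: {mode!r}")
    root = ET.fromstring(domain_xml)
    for driver in root.findall("./devices/disk/driver"):
        if driver.get("type") == "qcow2":
            driver.set("cache", mode)
    return ET.tostring(root, encoding="unicode")


def disk_cache_modes(domain_xml: str) -> list:
    """List the cache attribute of each disk driver (None if unset)."""
    root = ET.fromstring(domain_xml)
    return [d.get("cache") for d in root.findall("./devices/disk/driver")]


if __name__ == "__main__":
    print(disk_cache_modes(set_qcow2_cache(DOMAIN_XML, "none")))  # ['none']
```

Only qcow2 drivers are touched, so a RAW vdisk attached to the same VM keeps whatever cache mode it already had.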

I tried a couple of different ISOs and OSes. It always prevents me from installing the guest OS if I use a vdisk on my array (XFS), no matter which OS I try, which template I use or which machine type or RAM I set. Always the same: the installer aborts, or there are boot errors later.

If your array is also XFS-formatted, please try to install a small Linux distro on, for example, a 20G qcow2 vdisk with everything left at the Unraid defaults.

-

@testdasi A single XFS disk or an array disk?

Most of the time the errors appear during the install, sometimes only at the first boot and sometimes after a couple of minutes of uptime. It's hard to tell whether the vdisk is corrupted. "qemu-img check vdisk.img" sometimes shows errors and sometimes doesn't. But in all my cases I noticed it inside the VMs: random crashes, programs not starting or showing weird errors.
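A small sketch for scripting the `qemu-img check` mentioned above, assuming `qemu-img` is on the PATH and the VM is shut down while its vdisk is checked. The exit-code meanings follow the qemu-img documentation; as noted above, a clean result only means the qcow2 metadata is consistent, not that the guest's own file system inside the image is intact.

```python
import subprocess

# Exit codes listed for `qemu-img check` in the qemu-img documentation.
CHECK_EXIT_CODES = {
    0: "consistent",
    1: "check not completed because of an internal error",
    2: "image is corrupted",
    3: "leaked clusters, but not corrupted",
    63: "checks are not supported by this image format",
}


def describe_check_exit(returncode: int) -> str:
    """Translate a `qemu-img check` exit code into a short description."""
    return CHECK_EXIT_CODES.get(returncode, f"unexpected exit code {returncode}")


def check_vdisk(path: str) -> str:
    """Run `qemu-img check` on a vdisk (VM stopped) and describe the result."""
    proc = subprocess.run(["qemu-img", "check", path], capture_output=True, text=True)
    return describe_check_exit(proc.returncode)
```

Usage would be something like `check_vdisk("vdisk.img")` in a loop over all vdisks, logging anything other than "consistent".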

-

I've edited the title of my report to make it clearer. Let's hope the QEMU devs sort this out soon!!!

-

Of all the bug reports for QEMU 4.1 I have read, most report qcow2 images on XFS getting corrupted. There is no fix for now. The only solutions are to use RAW images or to revert to Unraid 6.7.2. There are also reports from people having issues on ext4 file systems. I hope the issue of not being able to install a guest OS on a qcow2 vdisk and the issues I've seen with existing qcow2 files are related and will be fixed in 4.1.1.

Until that is released, I hope @limetech will downgrade to QEMU 4.0, which isn't affected by these bugs, in the next RC builds.
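For the "use RAW images" workaround, a sketch of converting an existing qcow2 vdisk to RAW, assuming `qemu-img` is on the PATH, the VM is shut down, and the source and destination paths are your own (the ones in the usage line are hypothetical). `-f` names the input format, `-O` the output format and `-p` shows progress.

```python
import subprocess


def qcow2_to_raw_command(src: str, dst: str) -> list:
    """Build a qemu-img command that converts a qcow2 vdisk into a RAW image."""
    # -f: input format, -O: output format, -p: show progress
    return ["qemu-img", "convert", "-p", "-f", "qcow2", "-O", "raw", src, dst]


def convert_to_raw(src: str, dst: str) -> None:
    """Run the conversion; the VM using `src` should be shut down first."""
    subprocess.run(qcow2_to_raw_command(src, dst), check=True)
```

For example `convert_to_raw("vdisk1.qcow2", "vdisk1.img")` (hypothetical names). Afterwards the VM's disk definition has to point at the new file with `type='raw'` on its `<driver>` element.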

-

2 hours ago, limetech said:

Can't hold back 6.8 release because of this. Compressed qcow2 has never been an option in our VM manager.

I know compressed qcow2 isn't an option in the GUI, but the other issue, with no compression and everything on default, where an OS install fails or existing images get corrupted when booted from XFS storage, can and will affect users' data integrity. I'm worried about that situation.

The new 1TB drive is up and running fine as the new cache drive so far. Tomorrow I will test how the smaller drive handles VMs as an unassigned device (UD) formatted with XFS. So far I've only tested qcow2 vdisks on array drives, because that is the only XFS storage I have to play with.

-

I found a couple of different reports going back to 2015 about file system corruption on compressed qcow2 files. Let's hope there will be a fix as soon as possible. I guess a couple more users are using qcow2 for their vdisks, and if 6.8 is released as stable without a fix... I don't even want to think about it. Fingers crossed.

-

2 hours ago, Fizzyade said:

I just checked and luckily I saved the diagnostics, although saying that I can't see anything in there relating to this.

Whatever the issue is, downgrading to 6.7 makes it go away.

Thanks for the diags. I had a quick look. It's not the error I saw when I first opened this thread: no Samsung NVMe/SSD, and different array disks than mine. A second-gen Ryzen 2700 on an X470 board compared to my first-gen TR4 1950X on an X399 board. No "APST feature" warning, OK, you have no NVMe drives at all, only 2 Seagate SSDs. I'm not sure which onboard controller is used for your SATA connections. HighPoint? I don't know.

But you described the same thing I saw when installing a VM on a default qcow2 image. Sooner or later it gets corrupted or the install fails, right?

I think we need a couple more people testing this, @limetech.

-

@limetech Another test, this time with the Linux template changed to i440fx-4.1; the rest is the same as before, with a new qcow2 vdisk also on the array. Same problem: the Mint installer aborts during the install.

After that I did a couple more tests and narrowed it down a bit. All the following tests were done with the default Linux template settings: 4 cores, 4GB RAM, 20GB qcow2 virtio vdisks on the array, Mint 19.1 Xfce. If I manually set cache='none' or cache='directsync' instead of the default cache='writeback' in the XML, I can install the OS without any issues. writethrough and unsafe produce the same problem as the default writeback: the installer aborts.
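One pattern in these results can be checked against how QEMU defines the cache modes. In the QEMU block-device documentation each `cache=` shorthand expands to three options (`cache.writeback`, `cache.direct`, `cache.no-flush`), and the two modes that worked here are exactly the two with `cache.direct=on`, i.e. the image is opened with O_DIRECT and the host page cache is bypassed. The sketch below just encodes that table plus the outcomes reported above; it shows a correlation, not a proven cause.

```python
# How QEMU expands each cache= shorthand, per the QEMU block-device docs:
# (cache.writeback, cache.direct, cache.no-flush)
CACHE_MODES = {
    "writeback":    (True,  False, False),
    "none":         (True,  True,  False),
    "writethrough": (False, False, False),
    "directsync":   (False, True,  False),
    "unsafe":       (True,  False, True),
}

# Outcome reported above for a qcow2 vdisk on the XFS array: did the installer finish?
OBSERVED_INSTALL_OK = {
    "writeback": False,
    "none": True,
    "writethrough": False,
    "directsync": True,
    "unsafe": False,
}


def uses_o_direct(mode: str) -> bool:
    """cache.direct=on means the image is opened with O_DIRECT (host page cache bypassed)."""
    return CACHE_MODES[mode][1]


def direct_io_matches_results() -> bool:
    """True if 'install OK' coincides exactly with 'host page cache bypassed'."""
    return all(uses_o_direct(m) == ok for m, ok in OBSERVED_INSTALL_OK.items())


if __name__ == "__main__":
    print(sorted(m for m in CACHE_MODES if uses_o_direct(m)))  # ['directsync', 'none']
    print(direct_io_matches_results())  # True
```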

In my opinion, something is wrong with how IO to the underlying device/file system is handled when the default settings are used. I can't tell whether it's a QEMU thing or whether something else in Unraid is the culprit.

Next test, with the vdisk on the NVMe cache and cache='writeback': a fresh installation works, but my existing compressed qcow2 files still produce the same errors, no matter what I set for cache. From what I understand, compressed qcow2 produces more IO, which in general shouldn't be an issue. It might slow the VM down a bit, but it shouldn't end in corruption.

@Fizzyade Could you post your diagnostics so Limetech can take a deeper look at your system specs? He said my "Highpoint RocketNVME controller" might be causing this issue. I'm on an ASRock X399 Threadripper board, and the affected NVMe is a Samsung 960 Evo 500GB set up as the cache drive, but my testing has already shown it isn't only an issue on that drive. Vdisks on the array are also affected. Are you using similar hardware?

Latest diagnostics, if needed. The new drive arrived, let's have a look.

-

Short update: a compressed qcow2 vdisk sitting on the XFS-formatted array shows the same error. Installing Mint or PopOS with a RAW image on the array went fine. As soon as I choose qcow2 in the template, even without compressing it, the installation fails partway through. The only things I did:

1. add VM

2. select Linux template

3. 4 cores, 4GB RAM

4. 30GB disk qcow2 virtio

5. select boot iso (Mint 19.1 xfce, PopOS 19.10)

6. VNC set to German

7. untick "start vm after creation"

On RAW it installs fine; on qcow2 it aborts during the process. This time there are no warnings or errors in the VM logs. In one case, where I had both a RAW and a QCOW2 image attached, removing the VM + disks only removed the RAW vdisk.
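To reproduce the RAW vs qcow2 comparison outside the GUI, here is a sketch that builds matching `qemu-img create` commands, assuming `qemu-img` is on the PATH. The paths are hypothetical, and this is not necessarily what Unraid's VM manager runs internally. The optional preallocation setting relates to the earlier observation that fully preallocated disks may not show the error; the accepted values listed are the ones qemu-img documents for each format.

```python
from typing import Optional

# Preallocation modes qemu-img accepts for each format (-o preallocation=...).
PREALLOCATION = {
    "qcow2": {"off", "metadata", "falloc", "full"},
    "raw": {"off", "falloc", "full"},
}


def create_vdisk_command(path: str, size_gb: int, fmt: str = "qcow2",
                         preallocation: Optional[str] = None) -> list:
    """Build a `qemu-img create` command for a test vdisk of the given format and size."""
    if fmt not in PREALLOCATION:
        raise ValueError(f"unsupported format: {fmt!r}")
    cmd = ["qemu-img", "create", "-f", fmt]
    if preallocation is not None:
        if preallocation not in PREALLOCATION[fmt]:
            raise ValueError(f"preallocation={preallocation!r} not valid for {fmt}")
        cmd += ["-o", f"preallocation={preallocation}"]
    return cmd + [path, f"{size_gb}G"]
```

For the test above that would be one 30G qcow2 and one 30G raw image, e.g. `create_vdisk_command("test-qcow2.img", 30)` and `create_vdisk_command("test-raw.img", 30, fmt="raw")`, each passed to `subprocess.run(..., check=True)`.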

-

46 minutes ago, limetech said:

Highpoint RocketNVME controller

For almost 2 years now I've been using this board (ASRock Fatal1ty X399) and its onboard slots/controller for a Samsung 960 Evo 500GB as cache and a Samsung 960 Pro 500GB for passthrough. I never had any issues before. I don't see why I should get a new controller when basically everything works, except for using compressed qcow2 files on 6.8 RC.

46 minutes ago, limetech said:

Oct 12 15:05:06 UNRAID kernel: nvme nvme0: failed to set APST feature (-19)

I'm not exactly sure whether I've seen this since I started using Unraid (Nov 2017), but I've had this warning for a long time with no signs of side effects. As I understand it, this error/warning matters more in situations where a device, say a notebook, goes into a sleep state and the drive doesn't change its power state correctly or gets dropped. In my setup, whenever Unraid is running there are always VMs and Dockers up and running and using the drive, so it will never go into a power-saving state, right? The warning is only reported once when Unraid boots and never again. No errors or warnings in the logs, even after a week.

The following is from the Arch wiki. This is a mass-market mainstream device, not a niche product, so it's really hard to believe there won't be driver support for it. Maybe the Unraid kernel is missing the patch for the APST issue.

https://wiki.archlinux.org/index.php/Solid_state_drive/NVMe#Power_Saving_APST

Samsung drive errors on Linux 4.10: On Linux 4.10, drive errors can occur and cause system instability. This seems to be the result of a power saving state that the drive cannot use. Adding the kernel parameter nvme_core.default_ps_max_latency_us=5500 disables the lowest power saving state, preventing write errors.

The wiki also mentions a patch for it that should already be merged into the mainline 4.11 kernel.
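To see whether that workaround is actually in effect, a sketch that looks for `nvme_core.default_ps_max_latency_us` on a kernel command line string and, where the kernel exposes it, reads the value the loaded `nvme_core` module reports under `/sys/module/nvme_core/parameters/`. This assumes a Linux host; how the parameter gets onto Unraid's boot line is not covered here.

```python
from pathlib import Path
from typing import Optional

PARAM = "nvme_core.default_ps_max_latency_us"
SYSFS = Path("/sys/module/nvme_core/parameters/default_ps_max_latency_us")


def latency_from_cmdline(cmdline: str) -> Optional[int]:
    """Return the value given for PARAM on a kernel command line, or None if absent."""
    value = None
    for token in cmdline.split():
        key, sep, val = token.partition("=")
        if key == PARAM and sep:
            value = int(val)  # this sketch keeps the last occurrence
    return value


def active_latency() -> Optional[int]:
    """Value the loaded nvme_core module reports via sysfs, if it is exposed."""
    return int(SYSFS.read_text().strip()) if SYSFS.exists() else None


if __name__ == "__main__":
    print(latency_from_cmdline(Path("/proc/cmdline").read_text()), active_latency())
```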

46 minutes ago, limetech said:

Having said that, it's always possible your SSD is failing.

The drive's warranty is rated for "3 years or 200 TBW", and at 80TB written I'm not even close to that. As far as I can see, the SMART report shows no errors.
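The arithmetic behind "not even close": 80 TB of a 200 TBW rating is 40%. NVMe SMART/health logs report "Data Units Written" in thousands of 512-byte units, so the raw counter has to be scaled before comparing it with a TBW rating. A sketch, with a hypothetical counter value chosen to equal 80 TB (the real value is in the screenshots and isn't reproduced here):

```python
# NVMe "Data Units Written" counts thousands of 512-byte units.
BYTES_PER_DATA_UNIT = 1000 * 512


def data_units_to_tb(units: int) -> float:
    """Convert an NVMe Data Units Written counter to decimal terabytes."""
    return units * BYTES_PER_DATA_UNIT / 1e12


def tbw_used_percent(written_tb: float, rated_tbw: float = 200.0) -> float:
    """Share of the warranty's TBW rating already written (200 TBW for this drive)."""
    return 100.0 * written_tb / rated_tbw


if __name__ == "__main__":
    tb = data_units_to_tb(156_250_000)  # hypothetical counter value
    print(tb, tbw_used_percent(tb))  # 80.0 40.0
```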

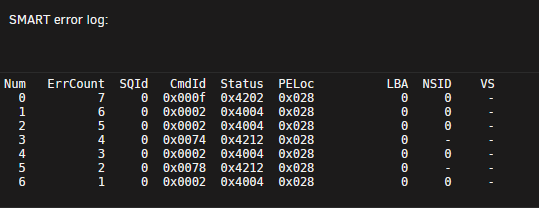

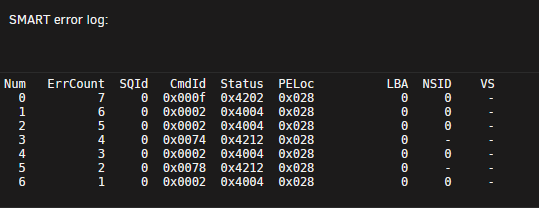

Not exactly sure how to interpret the following

Sure, drives can fail, but in my case I can definitely reproduce the vdisk corruption. I've tried it a couple of times with a couple of different VMs/backups, and I've set up a couple of fresh VMs from scratch: NO errors as long as I don't compress the vdisk to save 10-20% space. EVERY TIME I compress a vdisk, what works on 6.7.2 won't work on 6.8 RC.

Btw, a larger new drive is already on its way. I will report back on whether the issue is the same with a Samsung 970 Evo Plus 1TB.
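The posts don't say which command was used to compress the vdisks. A common way is `qemu-img convert -c`, which writes a compressed qcow2 copy; the sketch below assumes that approach, a stopped VM and hypothetical paths, and reports the space saved based on the apparent file sizes of the two images.

```python
import subprocess
from pathlib import Path


def compress_command(src: str, dst: str) -> list:
    """Build a qemu-img command that writes a compressed qcow2 copy of `src`."""
    # -c compresses the output clusters; -O selects the output format; -p shows progress
    return ["qemu-img", "convert", "-p", "-c", "-O", "qcow2", src, dst]


def space_saved_percent(original_bytes: int, compressed_bytes: int) -> float:
    """Percentage of space saved by the compressed copy."""
    return 100.0 * (original_bytes - compressed_bytes) / original_bytes


def compress_vdisk(src: str, dst: str) -> float:
    """Compress `src` into `dst` and return the space saved in percent."""
    subprocess.run(compress_command(src, dst), check=True)
    return space_saved_percent(Path(src).stat().st_size, Path(dst).stat().st_size)
```

A result in the 10-20% range would match the savings mentioned above; running `qemu-img check` on the compressed copy before swapping it in is a cheap extra safeguard.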

Unraid OS version 6.8.0-rc5 available

So you're only experiencing this with i440fx VMs? Maybe I'll have some time tomorrow to test i440fx on 6.8 RC. I've been running all my VMs on Q35 for quite some time now without any problems.