
Posts posted by casperse


-

Hi All

I hope this is the right place to ask this...

I got the temperature readings working, but then the probes disappeared and I haven't been able to get them back.

I just got the new Gigabyte C246-WU4 motherboard, which supports Intel's new Xeon E-2176G, and so far it's been working great with UNRAID.

I have deleted the plugin and reinstalled it, but I haven't been able to get the sensors back.

Running the command root@Tower:~# sensors-detect gave the output below.

And yes, I have also tried following https://wiki.unraid.net/index.php/Setting_up_CPU_and_board_temperature_sensing but without any success.

Driver `jc42':

* Bus `SMBus I801 adapter at efa0'

Busdriver `i2c_i801', I2C address 0x18

Chip `IDT TSE2004' (confidence: 5)

* Bus `SMBus I801 adapter at efa0'

Busdriver `i2c_i801', I2C address 0x1a

Chip `IDT TSE2004' (confidence: 5)

Driver `coretemp':

* Chip `Intel digital thermal sensor' (confidence: 9)

Do you want to generate /etc/sysconfig/lm_sensors? (yes/NO): yes

Copy prog/init/lm_sensors.init to /etc/init.d/lm_sensors for initialization at boot time.

You should now start the lm_sensors service to load the required kernel modules.

Unloading i2c-dev... OK

Does anyone have any input on what to do next?

Again, thanks for a great plugin and this great support community; it's really extraordinary!

Cheers

Casperse
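The drivers that sensors-detect lists are the kernel modules it says need to be loaded ("start the lm_sensors service to load the required kernel modules"). A minimal Python sketch, assuming the output has been pasted into a string, that pulls out the `Driver ...` names (here `jc42` and `coretemp`) and builds the matching `modprobe` commands. `detected_drivers` and `modprobe_commands` are hypothetical helper names, and whether loading the modules by hand also fixes the plugin is an assumption, not something confirmed in this thread.

```python
import re

# sensors-detect prints one header per driver, e.g.:  Driver `coretemp':
# The capital "D" keeps it from matching "Busdriver `i2c_i801'" lines.
DRIVER_RE = re.compile(r"Driver `([\w-]+)'")


def detected_drivers(output):
    """Return driver names in the order sensors-detect reports them, without duplicates."""
    drivers = []
    for name in DRIVER_RE.findall(output):
        if name not in drivers:
            drivers.append(name)
    return drivers


def modprobe_commands(drivers):
    """Build the shell commands that would load each detected kernel module."""
    return [f"modprobe {name}" for name in drivers]


# Excerpt of the output posted above.
SAMPLE = """\
Driver `jc42':
  * Bus `SMBus I801 adapter at efa0'
    Busdriver `i2c_i801', I2C address 0x18
    Chip `IDT TSE2004' (confidence: 5)
Driver `coretemp':
  * Chip `Intel digital thermal sensor' (confidence: 9)
"""

if __name__ == "__main__":
    for cmd in modprobe_commands(detected_drivers(SAMPLE)):
        print(cmd)
```

Running the resulting `modprobe` commands as root and then `sensors` is one way to check whether the kernel side works before looking at the plugin settings.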

-

I think that's only when the power is cut (which shouldn't be very often), or am I wrong? Does anyone here own one of these UPSes?

I found one that has both USB and a network card (the Dell version), so I should be able to use it with two servers!

I'm using the Synology as the master and then this plugin on my Unraid server:
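For a Synology master, the Synology's network UPS server speaks the NUT network protocol (TCP port 3493 by default), so the connection from the Unraid side can be sanity-checked with a tiny client. A minimal Python sketch, assuming the UPS is exported under the name `ups` (the usual Synology name, but verify it) and that the Unraid server's IP is on the Synology's list of permitted devices; `get_var` and `parse_var_line` are hypothetical helper names.

```python
import socket


def parse_var_line(line):
    """Parse a NUT reply of the form: VAR <ups> <var> "<value>"."""
    parts = line.strip().split(" ", 3)
    if len(parts) != 4 or parts[0] != "VAR":
        # e.g. "ERR UNKNOWN-UPS" or "ERR ACCESS-DENIED"
        raise ValueError(f"unexpected reply: {line!r}")
    # Simplification: strips the surrounding quotes but does not unescape \" inside values.
    return parts[3].strip('"')


def get_var(host, ups, var, port=3493, timeout=5.0):
    """Ask a NUT server (e.g. the Synology) for one variable such as ups.status."""
    with socket.create_connection((host, port), timeout=timeout) as sock, \
            sock.makefile("r", encoding="ascii", newline="\n") as reader:
        sock.sendall(f"GET VAR {ups} {var}\n".encode("ascii"))
        reply = reader.readline()
        sock.sendall(b"LOGOUT\n")
    return parse_var_line(reply)
```

Usage, with a placeholder IP: `get_var("192.168.1.10", "ups", "ups.status")` should return a status such as `OL` (on line) or `OB` (on battery) if the Synology is reachable and permits the client.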

-

OK, sounds like it's better to stick with an old APC Smart-UPS 2200VA from eBay.

-

Does anyone have any experience with the PowerWalker VI 3000 RLE (it has USB support)?

I haven't found any indication of whether it's supported by Unraid.

https://powerwalker.com/?item=10121101

Best regards

Casperse

-

Can anyone here answer this question about the fan wall for this Norco case?

Thanks

-

Hi All

I bought the "Servercase UK SC-4324S = Norco RPC-4224 4U", and it has the 3-fan wall and a power connector.

BUT next to the power connector there are some pins (see picture).

You can see them better here:

What are they used for?

It will be pretty hard (impossible, really) to get to them once I have mounted it and the motherboard...

Hope someone can clear this up for me

Thanks

Casperse

-

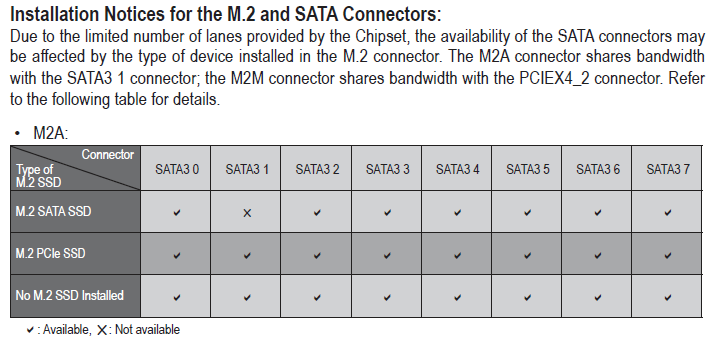

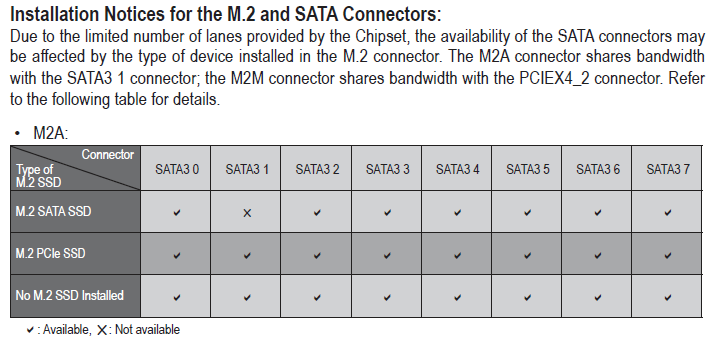

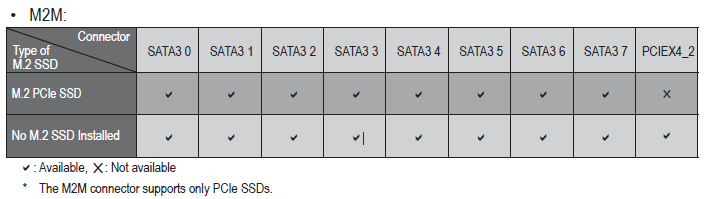

@hawihoney I think you are comparing the wrong M.2 drives :-)

You only lose a SATA port when using an M.2 SATA SSD, and its speed is the same as a normal SSD.

The M.2 PCIe NVMe (the fast one) does not have any impact on my SATA connections.

If I use the first M.2 slot for a fast M.2 PCIe NVMe and the other M.2 slot for a "slow" M.2 SATA SSD, there will be no impact on my existing SATA connections or my PCIEX4_2 slot, and I get one more SSD drive plus one fast M.2 PCIe drive.

-

Thanks for sharing your personal opinions 😊

I ended up with the Gigabyte C246-WU4 I needed "Full size PCie" ports and 10 Sata ports. Also I need 4 full slots with 4X & 8X speed.

Since I have a 24+2 drive case that I would like to use 100%

Yes it all comes down to having enough PCI-e slots... (-;

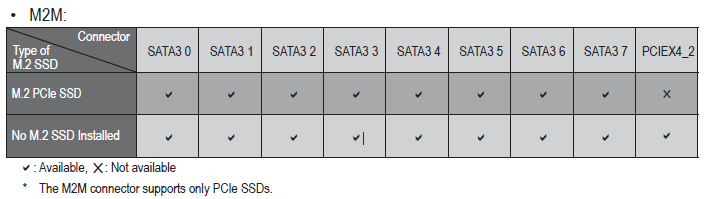

Yes using the first M.2 with PCIe have no impact. but using the secound M.2 slot would mean that I would lose the last PCIe socket

On the positive side I have the option to have one more CASH drive as a M.2 SSD :-)

I needed x8, x4, x8, x4 - For two controller cards, a ethernetcard and a graphic card.

If I use the 8 sata on the board for the rack I could save one of the LSI cards....

So this is actually the only MB I could find that supports what I would like to do

UPDATE: Its very hard to get this motherboard still waiting for it......

-

New to UNRAID, and I am building with the same CPU as you but with a different MB/RAM setup (I need more SATA ports and I'm all out of PCIe slots LOL.)

I have some questions I hope you can help me with :-)

- Any reason you selected a 1x2TB cache drive instead of 2x1TB for extra safety on your cache?

- Is your M.2 VM drive set up as a UAD (Unassigned Devices)? I guess that would provide bare-metal speed for your VMs, right?

- You did a screen capture of Plex transcoding in your other post. Which Plex installation are you using on UNRAID? (The Linuxserver Docker?)

- Have you placed your Plex metadata on the cache drive or on an SSD mounted as a UAD? (Is there a recommended best practice for this?)

- I was wondering if it would be better to use the M.2 as a UAD for VMs and metadata, and maybe even for temporary Plex transcoding?

- I also "only" have 32 GB RAM, and I don't think that would be enough to use a RAM drive as tmp for transcoding? (But it would prolong my SSD's life!)

- Do you know if M.2 lifetime is also impacted by heavy usage, like tmp transcoding?

- For shares between different storage servers (I have a 24-drive Synology), is it best to use SMB or NFS with UNRAID?

Thanks for a very informative post, and for sharing the happy ending to your very hard journey building your new system.

I hope I get the right CPU; I'm still waiting for it...


-

Any news on this setup? Anyone received a CPU and MB yet? 👍

-

4 hours ago, Tybio said:

One note, you could use the 8 SATA ports on the MB rather than the second LSI card, that would let you fit 2x8 and 1x4. So you could make it work, it would just have no room for expansion and only one M.2 slot working (#1). I can confirm all of this when I get mine up and running, but that's waiting on the processor.

That's not a bad idea! So if I buy the Gigabyte board that has 10 SATA ports (I also need two SATA ports for internal SSD drives), I would end up with a spare 4X slot?

According to the homepage, the options would be:

8x - Graphics card (16x, but should run okay at 8x?)

4x - Intel Pro 1000 VT Quad Port NIC - PCI Express

8x - LSI SAS9201-16i PCI-Express 2.0 x8 SAS 6Gb/s HBA Card (Drives)

4x - Free? (for expansion if I need more Ethernet throughput, e.g. a 10Gb Ethernet card)
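Whether the cards fit can be checked mechanically: put the widest card in the widest remaining slot and see what is left over. A minimal Python sketch, assuming the four slots are full-length and wired x8/x4/x8/x4 as described in this thread (check the board manual), and that a card in a narrower slot simply runs at the slot's width; `assign_slots` is a hypothetical helper.

```python
def assign_slots(cards, slots):
    """Greedy fit: widest card goes into the widest remaining slot.

    cards: {name: native lane width}, slots: list of electrical slot widths.
    Returns ({name: (native width, negotiated width)}, leftover slot widths).
    """
    ordered = sorted(cards.items(), key=lambda item: item[1], reverse=True)
    free = sorted(slots, reverse=True)
    if len(ordered) > len(free):
        raise ValueError("more cards than slots")
    placed = {}
    for (name, width), slot in zip(ordered, free):
        # A PCIe link trains to the narrower of the card and the slot.
        placed[name] = (width, min(width, slot))
    return placed, free[len(ordered):]


cards = {
    "Graphics card": 16,
    "LSI SAS9201-16i": 8,
    "Intel Pro 1000 VT Quad Port NIC": 4,
}
placed, spare = assign_slots(cards, [8, 4, 8, 4])
```

With these inputs the graphics card runs at x8, the HBA and NIC get their full width, and one x4 slot is left spare, which matches the idea of keeping a free 4X slot for a faster NIC later.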

BUT then the SATA controller needs to be supported by unRAID/ESXi (I plan to run ESXi, and then Unraid and a Windows or Linux machine).

I'm just not sure how to be certain that the onboard controller is supported by "everything".

BTW: Which ECC RAM modules are you buying?

-

I have been looking for the same setup (rumor has it there will soon be an 8-core version, Intel's Xeon E-2188G?), but I think I have found a problem!

I have bought a 24-bay storage server case: 4u-server-case-w-24x-35-hot-swappable-satasas-drive-bays-6gbs-minisas-550m-deep-sc-4324s

After getting some great help here from the group on LSI controllers and cables in this post LINK, I now have the following items:

8X (16X) - Quadro P2000 card (game server passthrough, or Plex transcoding, which is better than Quick Sync)

8X - LSI SAS9201-16i PCI-Express 2.0 x8 SAS 6Gb/s HBA Card (Drives)

8X - LSI SAS9201-8i PCI-e Controller / 9211-8i (IT-mode) (Drives)

4X - Intel Pro 1000 VT Quad Port NIC - PCI Express (for more Ethernet throughput, or to be replaced with a 10Gb Ethernet card?)

= 28 lanes? None of the C246 chipset boards/CPUs would be able to run the above cards (right?)
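The lane count above is simple arithmetic. A minimal Python sketch that totals the cards' widths and compares them with the CPU lane counts mentioned here (16 for the Xeon E series, 48 for Xeon W). It deliberately ignores any lanes the chipset provides (on C246 boards some slots may hang off the chipset and share its link to the CPU), so treat it as a rough CPU-lane check only; `shortfall` is a hypothetical helper.

```python
# Lane widths of the cards listed in the post above.
cards = {
    "Quadro P2000": 8,
    "LSI SAS9201-16i": 8,
    "LSI SAS9201-8i": 8,
    "Intel Pro 1000 VT": 4,
}

# CPU PCIe lane counts mentioned in the post (chipset lanes are not counted).
cpu_lanes = {"Xeon E (C246)": 16, "Xeon W": 48}


def shortfall(needed, available):
    """How many lanes are missing (0 if everything fits)."""
    return max(0, needed - available)


needed = sum(cards.values())
for platform, lanes in cpu_lanes.items():
    missing = shortfall(needed, lanes)
    verdict = "fits" if missing == 0 else f"short by {missing} lanes"
    print(f"{platform}: {needed} needed, {lanes} available -> {verdict}")
```

This prints `Xeon E (C246): 28 needed, 16 available -> short by 12 lanes` and `Xeon W: 28 needed, 48 available -> fits`.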

Right now it seems my only option is to go with a Xeon W, which supports 48 lanes versus the E-series' 16 lanes? (I want ECC.)

Or am I missing something....

-

Hi @johnnie.black

I finally got two used LSI cards delivered from eBay:

- LSI SAS 9201-8i PCI-Express Controller 9211-8i (IT-mode) - FW rev: 20.00.07.00-IT

- LSI SAS9201-16i PCI-Express 2.0 x8 SAS 6Gb/s HBA Card - FW rev: 5.00.13.00-IT
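Comparing the two revisions is easier once they are split into numeric fields. A minimal Python sketch, assuming the `MM.mm.uu.dd-MODE` format shown above and using the 9201-8i's 20.00.07.00 as the target; `parse_fw` and `needs_update` are hypothetical names. Comparing the strings directly would get this wrong, because "5.00.13.00" sorts after "20.00.07.00" as text.

```python
def parse_fw(rev):
    """Split e.g. '20.00.07.00-IT' into ((20, 0, 7, 0), 'IT')."""
    version, _, mode = rev.partition("-")
    return tuple(int(field) for field in version.split(".")), mode


def needs_update(rev, target="20.00.07.00"):
    """True if the card's firmware is numerically older than the target."""
    current, _ = parse_fw(rev)
    wanted, _ = parse_fw(target)
    return current < wanted


for card, rev in [("9201-8i", "20.00.07.00-IT"), ("9201-16i", "5.00.13.00-IT")]:
    print(card, rev, "update" if needs_update(rev) else "up to date")
```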

I have looked around and can't find the updated firmware for the -16i card, and the upgrade guides differ (UEFI vs. DOS).

Some also suggest skipping version 20 and using v21?

I have done many firmware updates on different controllers, but it seems this one might be better with an older version?

Again, thanks for your help; I couldn't have done this without your comments and the others in this thread!

UPDATE: Found this: https://docs.broadcom.com/docs/9201_16i_Package_P20_IT_Firmware_BIOS_for_MSDOS_Windows.zip

Cheers

Casperse

Okay, this went pretty smoothly. For anyone else who wants to do this:

Boot to FreeDOS from USB, with the files placed in the root of the USB stick:

- sas2flsh -listall --> (check current BIOS version)

- sas2flsh -o -e 6 --> (deletes firmware)

- sas2flsh -o -f 9201-16i_it.bin -b mptsas2.rom --> (update firmware and BIOS ROM)

- sas2flsh -listall --> (check the BIOS upgrade went OK)

- Ctrl + Alt + Del (reboot)

- Ctrl + C (set the card in IT mode). I don't see anything other than IT as a selection in the utility?

The card now reports as a SAS2116_1(B1), but the utility still shows it as a SAS9201-16i. The main thing is that everything works!

-

OK point taken! (-: I will look for used items.

Just wondering: when I have two LSI cards in the same machine, will I then have two different firmware menus? How do the cards not conflict with each other?

Or will they recognize each other and only show one menu?

-

Does anybody (@johnnie.black, @testdasi, @bonienl) have positive experience buying LSI cards from China? (Fakes?)

-

Thanks @johnnie.black. OK, I'm running out of time and can't really find any PCIe 3.0 cards that aren't close to full price, and at full price you might as well buy new and get a warranty.

The same goes for expanders for 24 drives; they're non-existent on eBay.

Would these be okay for 24 bays (approved HBA cards to be used in unRaid HBA mode)?

LSI SAS9201-16i PCI-Express 2.0 x8 SAS 6Gb/s HBA Card

LSI SAS2008-8I SATA 9211-8i 6Gbps 8 Ports HBA PCI-E RAID Controller Card

Is the higher number the newer card, and therefore the best value for money? One is 9201 and the other is 9211.

-

I just noticed that the backplane in my case only states "6Gb/s MiniSAS", so a 12Gb/s PCIe 3.0 card would probably be wasted on it.

Is the LSI 9202-16e the preferred HBA card? (Most of the ones I find on eBay only have external, not internal, connectors.)

-

Ooh, maybe a fourth option:

LSI MegaRAID SAS 9280-24i4e: would this work? I think it's only PCIe 2.0, but would that actually create a bottleneck when adding 24 drives to a single card (HBA mode)?
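Whether PCIe 2.0 is a bottleneck can be estimated from the link's raw rate: PCIe 2.0 runs at 5 GT/s per lane with 8b/10b encoding (about 500 MB/s per lane and direction), PCIe 3.0 at 8 GT/s with 128b/130b encoding. A minimal Python sketch of the theoretical per-drive share when all 24 drives are read at once (e.g. during a parity check), assuming an x8 link; real-world throughput will be lower because of protocol overhead.

```python
# Raw transfer rate (GT/s) and line-encoding efficiency per PCIe generation.
PCIE = {
    2: (5.0, 8 / 10),     # PCIe 2.0: 8b/10b
    3: (8.0, 128 / 130),  # PCIe 3.0: 128b/130b
}


def link_mb_per_s(gen, lanes):
    """Theoretical one-direction bandwidth of a PCIe link in MB/s."""
    gt_per_s, efficiency = PCIE[gen]
    return gt_per_s * 1e9 * efficiency / 8 / 1e6 * lanes


def per_drive_mb_per_s(gen, lanes, drives):
    """Share of the link each drive gets if all drives stream at once."""
    return link_mb_per_s(gen, lanes) / drives


for gen in (2, 3):
    print(f"PCIe {gen}.0 x8, 24 drives: {per_drive_mb_per_s(gen, 8, 24):.0f} MB/s per drive")
```

So a PCIe 2.0 x8 card leaves roughly 167 MB/s per drive in theory when all 24 are busy at once (about 328 MB/s for PCIe 3.0 x8); comparing that with the drives' own sequential speed shows whether it matters in practice.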

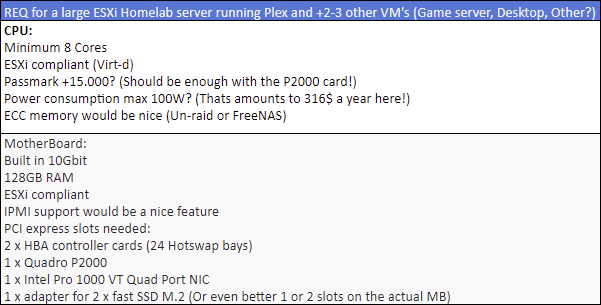

Hi All

Since a lot of people using unRaid also run Plex, I believe this is the right forum to ask this question...

Also, I am really impressed by forum supporters like @johnnie.black and others who help the "newbies".

So I have tried to gather as much information as possible to help you help me build this 😉

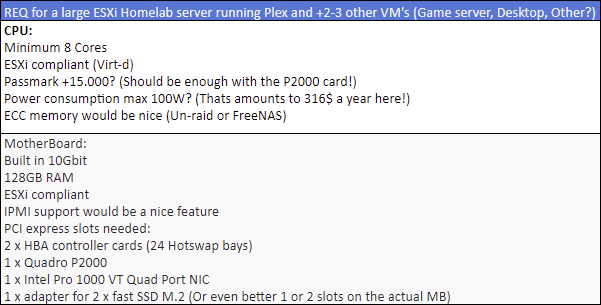

First requirements:

I tried to make a table of all the CPUs, but it didn't really help me, because the MB is also a very important factor and I don't know a lot about Xeons.

Update: The PassMark [20.000] was without the Quadro P2000 for Plex, so I think it can be lower now.

My personal takeaway from my research: if I don't need ECC RAM and IPMI support, and if Intel's Core i9 9900K is supported by ESXi, then I might be better off waiting for it to launch?

On the other hand, Xeon is lower power and sort of built for this kind of virtual home lab?

As always I highly appreciate you sharing your thoughts and experiences 👍

BTW: I am currently running a Synology server with 24 drives, so this would also be a test to see if I could move everything over to this multi-platform setup.

Best regards

Casperse

-

Thanks for catching that! I got the wrong link from their sales department: LINK

What about a recommendation for a 16-port card? (The ones I find keep having the ports "outside" and not on the card itself.)

-

Thanks! After reviewing all your valuable information, I have narrowed it down to 3 solutions for 24 drives or more:

- 2 HBA cards: LSI SAS3008 9300-8I/LSI 9211-8i/LSI 9207-8i? And an LSI 9202-16e (any model recommendations for buying a used LSI with PCIe 3.0? eBay is a jungle of different models)

- Expander 36: RES3CV360 and LSI 9207-8i and 8 x SFF-8087 miniSAS to SFF-8087 miniSAS cables between the cards (short ones would be nice)

- Expander 24: LSI 9207-8i and the RES2SV240 expander and some cables? (I don't like buying used cables, so any brand recommendations for new ones?)

BTW: I still only have 6 SAS ports on the backplane of my new server case, so regardless of which solution I choose, I can order 6 of these cables with the case, right?
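Each Mini-SAS connector on the backplane (SFF-8087) carries four SAS lanes, i.e. four drives, which is why 6 connectors serve 24 bays. A minimal Python sketch, assuming the connector counts below (four internal connectors on the 9201-16i, two on each -8i card), that checks whether a given HBA combination reaches all 6 backplane connectors without an expander; `connectors_covered` and `covers_all_bays` are hypothetical helpers.

```python
LANES_PER_CONNECTOR = 4  # one SFF-8087 Mini-SAS connector = 4 SAS lanes = 4 drives
BACKPLANE_CONNECTORS = 6

# Internal Mini-SAS connectors per card (assumed; check each card's spec sheet).
HBA_CONNECTORS = {"9201-16i": 4, "9211-8i": 2, "9207-8i": 2}


def connectors_covered(cards):
    """Total internal connectors provided by a list of HBA model names."""
    return sum(HBA_CONNECTORS[card] for card in cards)


def covers_all_bays(cards):
    """True if the cards can be cabled directly to every backplane connector."""
    return connectors_covered(cards) >= BACKPLANE_CONNECTORS


bays = BACKPLANE_CONNECTORS * LANES_PER_CONNECTOR  # 24
```

By this count two -8i cards alone reach only 16 of the 24 bays (4 connectors), while a -16i plus a -8i covers all 6 connectors.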

-


This way the expander would have more capacity than it actually needs for 24 bays (a 36-port expander might be more expensive than buying two cards 🙂)
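Expander sizing follows the same lane arithmetic: the HBA's uplink uses some of the expander's ports, and the rest are left for drives. A minimal Python sketch, assuming the 36 and 24 in "Expander 36"/"Expander 24" are total SAS port counts and that the uplink uses 4 ports (single link) or 8 (dual link); check the expander's documentation for how its ports are actually assigned. `drive_ports` is a hypothetical helper.

```python
BAYS = 24


def drive_ports(total_ports, uplink_ports):
    """SAS ports left for drives after the HBA uplink is connected."""
    return total_ports - uplink_ports


for name, total in [("Expander 36", 36), ("Expander 24", 24)]:
    for link, uplink in [("single link", 4), ("dual link", 8)]:
        left = drive_ports(total, uplink)
        status = "enough" if left >= BAYS else f"{BAYS - left} bays short"
        print(f"{name}, {link}: {left} drive ports ({status})")
```

In this arithmetic a 24-port expander on a single link leaves 20 drive ports; with an -8i HBA, the card's second connector could go straight to one backplane connector to cover the remaining 4 bays.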

I found this article (because I would also like to run Unraid on ESXi), and here the motherboard actually has a built-in LSI controller!

https://www.vladan.fr/supermicro-single-cpu-board-for-esxi-homelab-x10srh-cln4f

So I was wondering whether I would then only need to buy the expander for such a board?

Dynamix - System Temp

in User Customizations


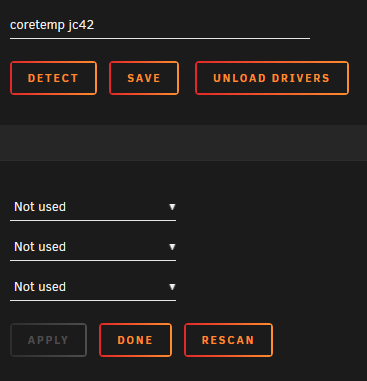

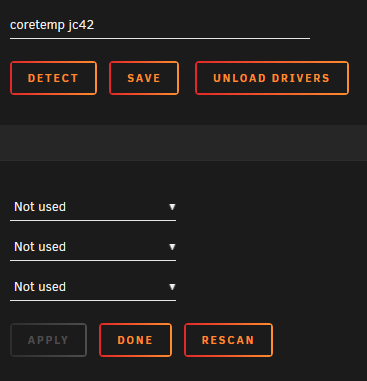

@Maor The Detect button just keeps writing "coretemp jc42" and shows no sensors in the dropdown menu.

Changing the name to "jc42" worked for a second: I could select sensors, but hitting the Apply button made them all disappear again.

The same thing happened the first time I ran Detect: everything appeared, and then it was all gone.

It could be a small bug, because everything was detected perfectly, and after hitting Apply it was gone.