goinsnoopin

Members
Posts: 357
Joined: -
Last visited: Converted
Gender: Undisclosed

goinsnoopin's Achievements
Contributor (5/14)
Reputation: 7

-

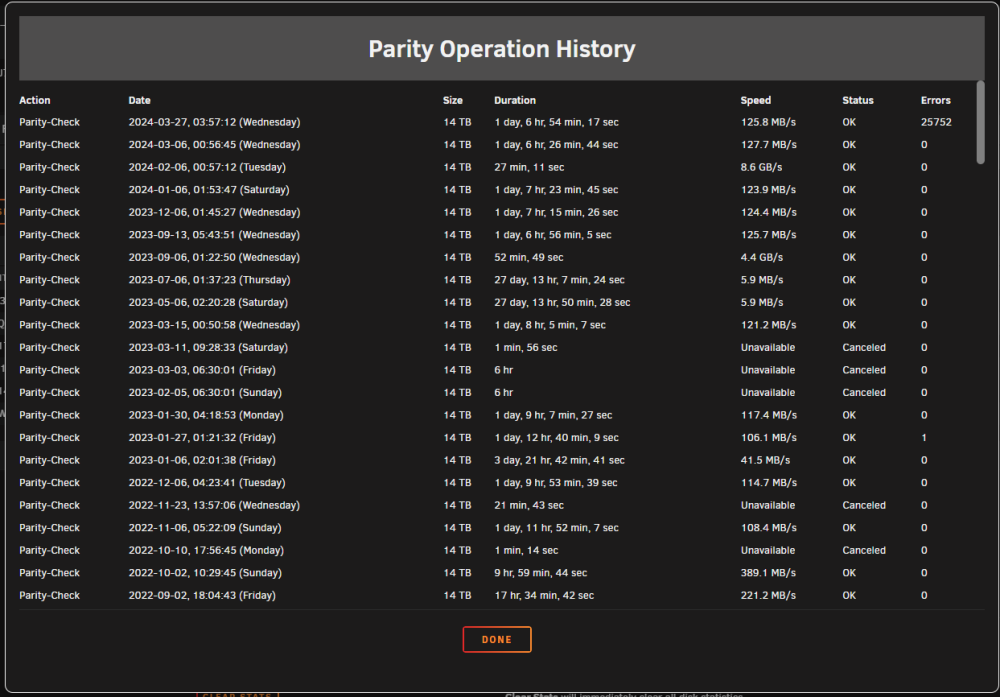

@trurl The parity check completed, and I got an email when it finished indicating that there were 0 errors?? I have attached current diagnostics. I also attached a screenshot of the parity history that shows the zero errors and the sync errors that were corrected. There was also a second email, which read as follows (what is the error code -4 listed after the sync errors?):

Event: Unraid Status
Subject: Notice [TOWER] - array health report [PASS]
Description: Array has 9 disks (including parity & pools)
Importance: normal

Parity - WDC_WD140EDGZ-11B2DA2_3GKH2J1F (sdk) - active 32 C [OK]
Parity 2 - WDC_WD140EDFZ-11A0VA0_9LG37YDA (sdm) - active 32 C [OK]
Disk 1 - WDC_WD120EMFZ-11A6JA0_QGKYB4RT (sdc) - active 32 C [OK]
Disk 2 - WDC_WD20EFRX-68EUZN0_WD-WMC4M1062491 (sdf) - standby [OK]
Disk 3 - WDC_WD40EFRX-68N32N0_WD-WCC7K4PLUR7A (sdi) - active 28 C [OK]
Disk 4 - WDC_WD30EFRX-68EUZN0_WD-WCC4NEUA5L20 (sdd) - standby [OK]
Disk 5 - WDC_WD30EFRX-68EUZN0_WD-WMC4N0M6V0HC (sdj) - standby [OK]
Disk 6 - WDC_WD40EFRX-68N32N0_WD-WCC7K3PZZ7Y7 (sdh) - standby [OK]
Cache - Samsung_SSD_860_EVO_500GB_S598NJ0NA53226M (sde) - active 37 C [OK]

Last check incomplete on Tue 26 Mar 2024 06:30:01 AM EDT (yesterday), finding 25752 errors. Error code: -4

tower-diagnostics-20240327-1952.zip
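The health report packs one status line per device into the email body, plus a summary line for the last check. A minimal Python sketch that splits such lines into fields and pulls out the error count and error code. The regular expressions are assumptions fitted to the lines quoted in this post, not a documented Unraid format, and the sketch does not interpret what the error code means.

```python
import re
from typing import NamedTuple, Optional


class DiskStatus(NamedTuple):
    slot: str               # e.g. "Parity 2", "Disk 3", "Cache"
    ident: str              # model_serial string as printed in the report
    device: str             # kernel device name, e.g. "sdm"
    state: str              # "active" or "standby"
    temp_c: Optional[int]   # absent for standby devices in the report above
    status: str             # e.g. "OK"


# Assumed line shape: "<slot> - <model_serial> (<dev>) - <state> [<temp> C] [<status>]"
DISK_RE = re.compile(
    r"^(?P<slot>.+?) - (?P<ident>\S+) \((?P<device>\w+)\) - "
    r"(?P<state>active|standby)(?: (?P<temp>\d+) C)? \[(?P<status>\w+)\]$"
)
# Assumed shape of the summary line: "... finding <N> errors. Error code: <code>"
LAST_CHECK_RE = re.compile(r"finding (?P<errors>\d+) errors\. Error code: (?P<code>-?\d+)")


def parse_disk_line(line: str) -> Optional[DiskStatus]:
    """Parse one device line; return None for lines that are not device lines."""
    m = DISK_RE.match(line.strip())
    if not m:
        return None
    temp = int(m["temp"]) if m["temp"] else None
    return DiskStatus(m["slot"], m["ident"], m["device"], m["state"], temp, m["status"])


def parse_last_check(line: str) -> Optional[tuple[int, int]]:
    """Return (error_count, error_code) from the last-check line, if present."""
    m = LAST_CHECK_RE.search(line)
    return (int(m["errors"]), int(m["code"])) if m else None
```

Run over the last line of the report above, `parse_last_check` gives back the 25752 errors and the -4 code as integers, which makes it easy to compare successive reports.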

-

I realize that, and I have the settings configured so it shuts down with 5 minutes remaining on battery. I think the issue was that the server was brought back up after utility power had been on for an hour, and then the UPS settings shut it down again with 5 minutes remaining on the UPS. This cycle repeated itself a couple of times. If I had been home, I would have just left the server off. Any suggestions? Should I cancel the parity check? It will start again at midnight.

-

Yes, I just double-checked the history: monthly parity checks for the last year have all found 0 errors. I saw that in the logs and was concerned as well. It was an ice storm, and the power came on and went off several times in a 5-hour window. So it's possible the UPS battery ran down during the first outage and got minimal charge before the next one. Unfortunately I was not home, so I am going by what my kids told me. Dan

-

goinsnoopin started following [Solved]Disks not unmounting for shutdown or restart, Parity Check - Power Outage, Unraid 6.12.4 crashing multiple times a week - need assistance and 2 others

-

During a storm we lost power. I have a UPS, but for some reason it did not shut Unraid down cleanly like it has in the past. On reboot, this triggered a parity check. My parity check runs over several days because of the hours I restrict this activity to. It is currently about 90% complete, and there are 25,752 sync errors. For my monthly scheduled parity checks I set corrections to No; I'm not sure what the settings are for a check triggered by an unclean shutdown. Logs are attached. Any suggestions on how to proceed? Should I cancel the rest of the parity check? tower-diagnostics-20240326-1000.zip
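To make "sync errors" and the corrections setting concrete, here is a deliberately simplified Python sketch of a single (XOR, "P") parity check over equal-sized blocks. It is an illustration under assumptions, not Unraid's implementation: real checks work on disk sectors, and the second parity disk uses a different calculation that this sketch does not model.

```python
from functools import reduce


def xor_blocks(blocks: list[bytes]) -> bytes:
    """Byte-wise XOR of equal-length blocks (single/P parity)."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def parity_check(data_disks: list[list[bytes]], parity: list[bytes],
                 write_corrections: bool) -> int:
    """Compare stored parity with parity recomputed from the data disks.

    Each mismatching stripe counts as one sync error. With
    write_corrections=True the stored parity block is overwritten with
    the recomputed value; with False (a read-only check) the mismatch
    is only counted and parity is left untouched.
    """
    sync_errors = 0
    for stripe, stored in enumerate(parity):
        expected = xor_blocks([disk[stripe] for disk in data_disks])
        if expected != stored:
            sync_errors += 1
            if write_corrections:
                parity[stripe] = expected
    return sync_errors
```

One consequence worth keeping in mind: with single parity, a mismatch only says that parity and data disagree for that stripe; it does not say which disk holds the wrong bytes. Running a correcting pass followed by a read-only pass should report 0 errors on the second pass if nothing else changed.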

-

Unraid 6.12.4 crashing multiple times a week - need assistance

goinsnoopin replied to goinsnoopin's topic in General Support

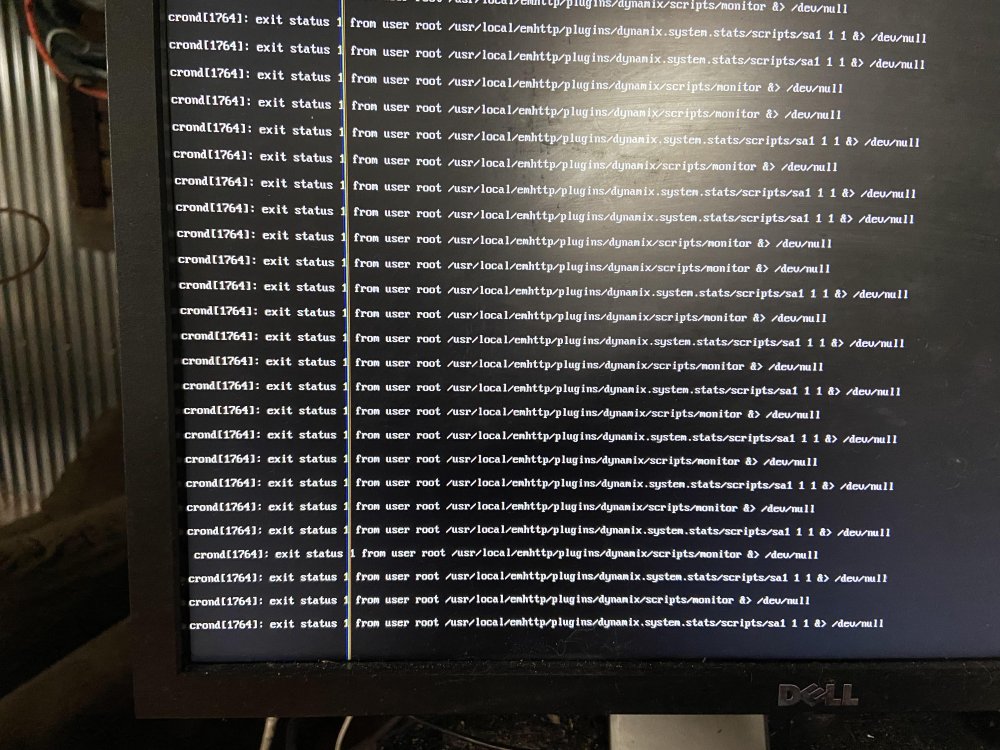

@JorgeB Attached is my syslog. Logging was started on 10/25/23 after a crash I experienced earlier that day. The snippet above runs from line 126 to line 746; after line 746 comes the reboot. I took a picture of my monitor while the system was crashed (see the photo above). Obviously, with the crashes being random, I am unable to capture diagnostics that cover a crash event, so I ran diagnostics just now for your reference and attached them. Thanks, Dan tower-diagnostics-20231028-0928.zip syslog.txt -

I upgraded to 6.12.4 a couple of weeks ago, and since then my server has gone unresponsive several times a week. I just enabled the syslog server in an attempt to capture the errors that precede a crash. Here is what I got on the last crash:

Oct 26 19:01:06 Tower nginx: 2023/10/26 19:01:06 [alert] 9931#9931: worker process 7606 exited on signal 6
Oct 26 19:02:14 Tower monitor: Stop running nchan processes

Please note there were 600 or so nginx errors, basically one every second, before this one; I am omitting them to keep this concise. Does anyone have any suggestions on how to proceed? Right now I am considering downgrading to the last 6.11.x release, as I never had issues with it.
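Rather than pasting hundreds of near-identical nginx lines, a short Python sketch can summarize them. It assumes the syslog line shape shown above (timestamp, host, `nginx:`, then `worker process N exited on signal S`) and counts worker exits per signal and per minute; it is a reading aid, not a diagnosis.

```python
import re
from collections import Counter

# Assumed line shape, taken from the syslog excerpt above.
WORKER_EXIT_RE = re.compile(
    r"^(?P<month>\w{3}) +(?P<day>\d+) (?P<hhmm>\d\d:\d\d):\d\d \S+ nginx: .*"
    r"worker process (?P<pid>\d+) exited on signal (?P<signal>\d+)"
)


def summarize_worker_exits(lines):
    """Return (count per signal number, count per minute) for nginx worker exits."""
    by_signal = Counter()
    by_minute = Counter()
    for line in lines:
        m = WORKER_EXIT_RE.search(line)
        if m:
            by_signal[int(m["signal"])] += 1
            by_minute[f'{m["month"]} {m["day"]} {m["hhmm"]}'] += 1
    return by_signal, by_minute
```

Usage, for example against the attached file: `with open("syslog.txt") as f: by_signal, by_minute = summarize_worker_exits(f)`. On Linux, signal 6 is SIGABRT (`signal.Signals(6).name`), i.e. the worker aborted rather than being asked to stop.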

-

Unraid USB Flash Drive Replace or Not - Looking for Opinion

goinsnoopin replied to goinsnoopin's topic in General Support

Thanks for the opinions. I backed up my flash drive, deleted the previous release, and successfully upgraded to 6.11.5. -

So I registered Unraid back in 2009, and I am still using the 2GB Lexar Firefly USB thumb drive that was recommended way back then. I am on 6.11.1 and just tried updating to 6.11.5, but I can't upgrade because there is not enough free space on the USB flash drive. It looks like this is because old versions are kept on the flash drive. Back in June, I purchased a Samsung BAR Plus 64GB thumb drive to have on hand should I ever need to replace the original Lexar. So I am looking for opinions: should I try to figure out what I can delete from my old flash drive, or migrate to the spare I have on hand?
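Before deciding, it can help to see how much of the 2GB drive the kept-back release actually occupies. A minimal Python sketch, assuming the flash drive is mounted at `/boot` and that the old release sits in a directory named `previous` there; both paths are assumptions to confirm on your own system before deleting anything.

```python
import os
import shutil


def dir_size(path: str) -> int:
    """Total size in bytes of regular files under path (symlinks are skipped)."""
    total = 0
    for root, _dirs, files in os.walk(path):
        for name in files:
            full = os.path.join(root, name)
            if not os.path.islink(full):
                total += os.path.getsize(full)
    return total


def flash_report(mount: str = "/boot", previous: str = "previous") -> dict:
    """Report total/free space on the mount and the size of the assumed old-release folder."""
    usage = shutil.disk_usage(mount)
    prev_path = os.path.join(mount, previous)
    prev_bytes = dir_size(prev_path) if os.path.isdir(prev_path) else 0
    return {
        "total": usage.total,
        "free": usage.free,
        "previous": prev_bytes,
        # Approximate: FAT cluster rounding means real reclaimed space can differ.
        "free_if_previous_removed": usage.free + prev_bytes,
    }
```

If the "free if removed" figure is still close to the size of a new release, that is a reasonable hint the old 2GB drive is simply too small going forward.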

-

[support] Spants - NodeRed, MQTT, Dashing, couchDB

goinsnoopin replied to spants's topic in Docker Containers

The issue is not with this palette; it affects all palettes, whether installing new ones or updating. I have gotten some help from the Node-RED GitHub. Here is the issue as I understand it: hard links (the link system call) do not work within the Node-RED container, and starting with Node-RED 3.0.1-1 they moved the location of the cache to inside the /data path. Here is the link to the GitHub issue I raised with Node-RED; it has some info that may be helpful: github issue -
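The `ENOSYS: function not implemented, link` error indicates that the `link()` system call itself is not supported on the filesystem behind `/data`. One way to confirm that independently of npm is to try creating a hard link in that directory. A minimal Python sketch; the directory you pass in would be whatever is mapped to `/data` in the container, and the probe file names are hypothetical.

```python
import errno
import os
import tempfile


def supports_hard_links(directory: str) -> bool:
    """Try to hard-link a temporary file inside `directory`.

    Returns False if link() fails with ENOSYS (not implemented) or EPERM
    (which link(2) documents for filesystems that do not support hard
    links). Other errors are re-raised. Probe files are always removed.
    """
    fd, src = tempfile.mkstemp(dir=directory, prefix=".linkprobe-")
    os.close(fd)
    dst = src + ".lnk"
    try:
        os.link(src, dst)
    except OSError as exc:
        if exc.errno in (errno.ENOSYS, errno.EPERM):
            return False
        raise
    else:
        os.unlink(dst)
        return True
    finally:
        os.unlink(src)
```

Running it once against the host path and once from inside the container against `/data` would show whether the problem follows the filesystem or the container.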

[support] Spants - NodeRed, MQTT, Dashing, couchDB

goinsnoopin replied to spants's topic in Docker Containers

Update to my earlier post: I rolled my Docker tag back from latest to the 3.0.1 release from about a month ago, and everything functions as it is supposed to. With releases 3.0.1-1 (23 days ago) and 3.0.2 (16 days ago), I get the error described. For now I am staying on the 3.0.1 release. -

[support] Spants - NodeRed, MQTT, Dashing, couchDB

goinsnoopin replied to spants's topic in Docker Containers

I have been using this container successfully for over a year. I am getting an error when I attempt to update a palette. Please note that all three palettes give the same error, just with specifics for each palette. Here is the excerpt from the logs for one example:

51 verbose type system
52 verbose stack FetchError: Invalid response body while trying to fetch https://registry.npmjs.org/node-red-contrib-power-monitor: ENOSYS: function not implemented, link '/data/.npm/_cacache/tmp/536b9d89' -> '/data/.npm/_cacache/content-v2/sha512/ca/78/ecf9ea9e429677649e945a71808de04bdb7c3b007549b9a2b8c1e2f24153a034816fdb11649d9265afe902d0c1d845c02ac702ae46967c08ebb58bc2ca53'
52 verbose stack at /usr/local/lib/node_modules/npm/node_modules/minipass-fetch/lib/body.js:168:15
52 verbose stack at async RegistryFetcher.packument (/usr/local/lib/node_modules/npm/node_modules/pacote/lib/registry.js:99:25)
52 verbose stack at async RegistryFetcher.manifest (/usr/local/lib/node_modules/npm/node_modules/pacote/lib/registry.js:124:23)
52 verbose stack at async Arborist.[nodeFromEdge] (/usr/local/lib/node_modules/npm/node_modules/@npmcli/arborist/lib/arborist/build-ideal-tree.js:1108:19)
52 verbose stack at async Arborist.[buildDepStep] (/usr/local/lib/node_modules/npm/node_modules/@npmcli/arborist/lib/arborist/build-ideal-tree.js:976:11)
52 verbose stack at async Arborist.buildIdealTree (/usr/local/lib/node_modules/npm/node_modules/@npmcli/arborist/lib/arborist/build-ideal-tree.js:218:7)
52 verbose stack at async Promise.all (index 1)
52 verbose stack at async Arborist.reify (/usr/local/lib/node_modules/npm/node_modules/@npmcli/arborist/lib/arborist/reify.js:153:5)
52 verbose stack at async Install.exec (/usr/local/lib/node_modules/npm/lib/commands/install.js:156:5)
52 verbose stack at async module.exports (/usr/local/lib/node_modules/npm/lib/cli.js:78:5)
53 verbose cwd /data
54 verbose Linux 5.15.46-Unraid
55 verbose node v16.16.0
56 verbose npm v8.11.0
57 error code ENOSYS
58 error syscall link
59 error path /data/.npm/_cacache/tmp/536b9d89
60 error dest /data/.npm/_cacache/content-v2/sha512/ca/78/ecf9ea9e429677649e945a71808de04bdb7c3b007549b9a2b8c1e2f24153a034816fdb11649d9265afe902d0c1d845c02ac702ae46967c08ebb58bc2ca53
61 error errno ENOSYS
62 error Invalid response body while trying to fetch https://registry.npmjs.org/node-red-contrib-power-monitor: ENOSYS: function not implemented, link '/data/.npm/_cacache/tmp/536b9d89' -> '/data/.npm/_cacache/content-v2/sha512/ca/78/ecf9ea9e429677649e945a71808de04bdb7c3b007549b9a2b8c1e2f24153a034816fdb11649d9265afe902d0c1d845c02ac702ae46967c08ebb58bc2ca53'
63 verbose exit 1
64 timing npm Completed in 3896ms
65 verbose unfinished npm timer reify 1661012682451
66 verbose unfinished npm timer reify:loadTrees 1661012682455
67 verbose code 1
68 error A complete log of this run can be found in:
68 error /data/.npm/_logs/2022-08-20T16_24_42_307Z-debug-0.log

I cleared my browser cache and have tried from a second browser (Chrome is my primary; I tried from Firefox also). Any help would be greatly appreciated! Dan -

I have a Windows 10 VM, and the other day its performance became terrible; it was basically unresponsive. I shut down Unraid, and Unraid did not boot back up. I pulled the flash drive, made a backup without issue on a standalone PC, then ran chkdsk, which said the drive needed to be repaired. I repaired it, and Unraid booted fine. The VM still had terrible performance. I used a backup image of a clean Windows 10 install to create a new VM, and everything was fine for the last day or so. This new VM now has issues too. I have attached diagnostics. I recently upgraded to 6.10.2. I would love for someone with more experience to take a look at my logs and see if anything jumps out as an issue. Thanks, Dan tower-diagnostics-20220608-1029.zip

-

[Solved]Disks not unmounting for shutdown or restart

goinsnoopin replied to goinsnoopin's topic in General Support

Thanks, I figured my issue out: a corrupt libvirt.img. Now that the image has been deleted and recreated, all is well. -

About a month ago, when attempting to shut down my server, I had two disks that did not unmount. I have never had this issue in the several years that I have used Unraid. The second time I shut down, I decided to manually stop my VMs and Docker containers first. Once both of these were stopped, I stopped the array on the Main tab. Two disks did not unmount: my SSD cache drive and disk 6. What is the best way to troubleshoot why these disks won't unmount? I need to restart my server again and would like a strategy for investigating so I can figure out the issue. Obviously I can grab logs. Thanks, Dan

-

Since I have a backup of the VMs' XML, is there any reason not to delete libvirt.img and start fresh?
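Before deleting libvirt.img, it may be worth confirming that the XML backups are complete and parseable. A minimal Python sketch using only the standard library: it reads each backed-up libvirt domain XML, reports the VM name and the disk image files it references, and flags any that no longer exist. The backup directory you pass in is a hypothetical example; re-registering the VMs afterwards (for example with `virsh define <file>.xml`) is a separate step not shown here.

```python
import glob
import os
import xml.etree.ElementTree as ET


def summarize_domain_xml(path: str) -> dict:
    """Return the VM name, disk source files, and missing files from a domain XML."""
    root = ET.parse(path).getroot()
    if root.tag != "domain":
        raise ValueError(f"{path}: root element is <{root.tag}>, expected <domain>")
    name = root.findtext("name")
    disks = [src.get("file") for src in root.findall("./devices/disk/source")
             if src.get("file")]
    return {"name": name, "disks": disks,
            "missing": [d for d in disks if not os.path.exists(d)]}


def check_backups(backup_dir: str) -> list[dict]:
    """Summarize every *.xml file in backup_dir, in sorted filename order."""
    pattern = os.path.join(backup_dir, "*.xml")
    return [summarize_domain_xml(p) for p in sorted(glob.glob(pattern))]
```

A backup that fails to parse, or that lists a vdisk under "missing", is worth sorting out before the old image is gone.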