aim60

aim60 last won the day on June 23 2017

-

The check has been running for a while now, without errors. Thanks

-

Running 6.12.8

My array has 2 parity disks. I was in the process of rebuilding parity1 onto a larger disk. About halfway through, I paused the rebuild to do some significant array access. Upon resuming the rebuild, the following showed in the syslog:

    Apr 8 22:04:41 Tower7 emhttpd: writing GPT on disk (sdj), with partition 1 byte offset 32KiB, erased: 0
    Apr 8 22:04:41 Tower7 emhttpd: shcmd (161402): sgdisk -Z /dev/sdj
    Apr 8 22:04:42 Tower7 root: GPT data structures destroyed! You may now partition the disk using fdisk or
    Apr 8 22:04:42 Tower7 root: other utilities.
    Apr 8 22:04:42 Tower7 emhttpd: shcmd (161403): sgdisk -o -a 8 -n 1:32K:0 /dev/sdj
    Apr 8 22:04:42 Tower7 kernel: sdj: sdj1
    Apr 8 22:04:43 Tower7 kernel: sdj: sdj1
    Apr 8 22:04:43 Tower7 root: Creating new GPT entries in memory.
    Apr 8 22:04:43 Tower7 root: The operation has completed successfully.
    Apr 8 22:04:43 Tower7 emhttpd: re-reading (sdj) partition table
    Apr 8 22:04:43 Tower7 emhttpd: shcmd (161404): udevadm settle
    Apr 8 22:04:43 Tower7 kernel: sdj: sdj1
    Apr 8 22:04:52 Tower7 kernel: mdcmd (38): check resume
    Apr 8 22:04:52 Tower7 kernel: md: recovery thread: recon P ...

The rebuild completed successfully. I now need to determine whether the contents of parity1 are valid. Since a non-correcting parity check would take almost a full day, I’m looking for an opinion as to the condition of parity1. If it is thought to be valid, I will do the non-correcting parity check. If not, I assume the way to proceed is to stop the array, wipe parity1, and redo the rebuild without pausing.

tower7-diagnostics-20240409-1328.zip

-

Check out the USB Manager plugin. It allows you to pass individual USB devices/ports to a VM.

-

Once “Everything is a Pool”, the ability to start and stop pools individually.

-

A significant portion of the value of Unraid comes from the awesome collection of plugins and dockers created by the community. In the long term, it will be almost impossible for those creators to support older versions of Unraid. In fact, CA itself will no longer support the very stable 6.11.5. So most people actively using Unraid will be forced to keep upgrading.

-

Just an FYI: I'm getting a lot of these in the syslog:

    Jan 30 12:36:21 Tower7 Parity Check Tuning: ERROR: marker file found for both automatic and manual check P Q

I had an unclean shutdown. The array is set to not auto-start. After power cycling the server, I unchecked the box to correct parity before starting the array. A correcting parity check started anyway.

Unraid 6.11.5, Parity Check Tuning 2023.12.08

tower7-diagnostics-20240130-1358.zip

-

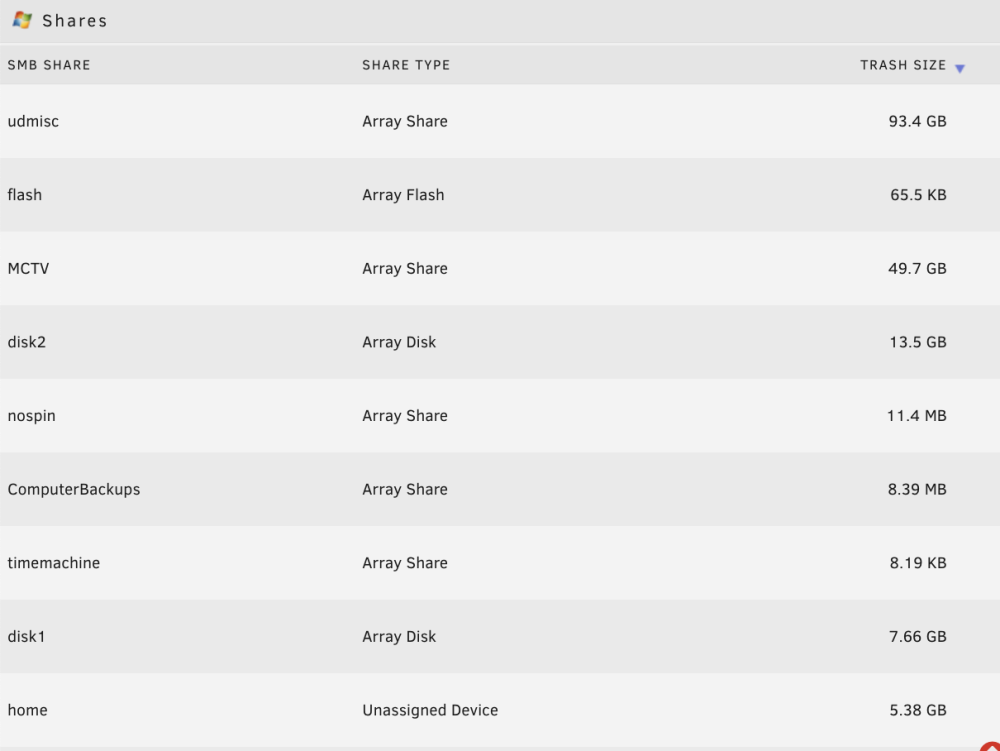

Minor cosmetic issue. If you go to the Recycle Bin settings page and sort the shares by Trash Size, the list is sorted by the number alone, ignoring the unit (GB, MB), so a share holding a few GB can sort below one holding several hundred MB.
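For illustration only, here is a minimal Python sketch of the difference between sorting on the displayed number and sorting on the actual byte count. It is not the plugin's code; the share names, the sizes, the unit labels, and the helper `size_to_bytes` are all assumptions made up for the example.

```python
import re

# Multipliers for the unit suffixes; the exact labels the plugin displays
# are an assumption here.
_UNITS = {"B": 1, "KB": 1024, "MB": 1024**2, "GB": 1024**3, "TB": 1024**4}


def size_to_bytes(text):
    """Convert a human-readable size such as '1.5 GB' into a byte count."""
    match = re.fullmatch(r"\s*([\d.]+)\s*([KMGT]?B)\s*", text, re.IGNORECASE)
    if match is None:
        raise ValueError(f"unrecognised size: {text!r}")
    value, unit = match.groups()
    return float(value) * _UNITS[unit.upper()]


# Hypothetical shares and trash sizes.
shares = [("media", "2 GB"), ("backups", "900 MB"), ("isos", "15 MB")]

# Sorting on the number alone reproduces the reported behaviour:
# "2 GB" lands before "15 MB" and "900 MB".
by_number = sorted(shares, key=lambda s: float(s[1].split()[0]))

# Sorting on the converted byte count takes the unit into account.
by_bytes = sorted(shares, key=lambda s: size_to_bytes(s[1]))
```

Here `by_number` puts `media` first even though it is the largest, while `by_bytes` orders the shares from smallest to largest.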

-

Try these switches to the ls command:

    ls -lsh
    total 151M
    65M -rwxrwxrwx 1 nobody users 20G Dec 30 15:02 vdisk1.img*
    86M -rwxrwxrwx 1 nobody users 10G Dec 30 15:02 vdisk2.img*

The size on the left is the allocated space; the size before the date is the apparent file size.
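If you want the same two numbers from a script, a rough Python sketch follows. It assumes Linux, where `st_blocks` is reported in 512-byte units; the helper name `disk_usage` and the temporary demo file are made up for the example and stand in for a real vdisk image.

```python
import os
import tempfile


def disk_usage(path):
    """Return (apparent_bytes, allocated_bytes) for a file."""
    st = os.stat(path)
    # st_blocks counts 512-byte units on Linux, independent of the
    # filesystem's own block size.
    return st.st_size, st.st_blocks * 512


# Demo: create a 10 MiB file by extending it without writing any data.
# On filesystems that support sparse files, little or no space is allocated.
with tempfile.NamedTemporaryFile(delete=False) as f:
    f.truncate(10 * 1024 * 1024)
    demo_path = f.name

apparent, allocated = disk_usage(demo_path)
os.remove(demo_path)

print(f"apparent:  {apparent} bytes")
print(f"allocated: {allocated} bytes")
```

The apparent size corresponds to the larger column in the ls output, and the allocated size to the column on the left.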

-

Thanks for the insight. I did some additional testing. Client access to a UD share on exFAT over SMB is flaky if "Enhanced macOS interoperability" is enabled. I have not observed this problem with any other partition type, including FAT32, which also doesn't support extended attributes. Manually editing the share's Samba config, removing streams_xattr, and restarting Samba seems to resolve the issue.
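As a rough sketch of the manual edit described above, the Python below removes one module name from any `vfs objects` line in a block of Samba config text. The share section, path, and module list in `sample` are hypothetical; the config UD actually generates may look different, and Samba still has to be restarted afterwards.

```python
def drop_vfs_module(config_text, module="streams_xattr"):
    """Remove one module name from every 'vfs objects' line."""
    out = []
    for line in config_text.splitlines():
        key, sep, value = line.partition("=")
        if sep and key.strip().lower() == "vfs objects":
            # Keep every listed module except the one being dropped.
            kept = [m for m in value.split() if m != module]
            line = f"{key.rstrip()} = {' '.join(kept)}"
        out.append(line)
    return "\n".join(out)


# Hypothetical share definition, for illustration only.
sample = """[exfat_disk]
    path = /mnt/disks/exfat_disk
    vfs objects = catia fruit streams_xattr
    fruit:encoding = native"""

cleaned = drop_vfs_module(sample)
print(cleaned)
```

All other lines, including the remaining `fruit` settings, pass through unchanged.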

-

I am having trouble renaming folders on exFAT-formatted, UD-mounted disks. This is an example from Windows 10, although macOS and Ubuntu are having issues as well. I believe this is a recent problem, since I often use exFAT disks in UD because they are OS agnostic.

Unraid 6.11.5 or 6.12.6
UD 2023.12.15
UD Plus 2023.11.30

The share is public.

un6126p-diagnostics-20231225-1627.zip

-

Sometime in the future, might shares on array disks that have been confined to one disk (via Included Disks) be considered Exclusive and be bind-mounted?

-

I’m running 6.11.5, and after updating the plugin to 2023.02.20, I’m still having instances of folders in the recycle bin with their files missing. However, my usage is probably uncommon. My apps are configured to use user shares, but interactively, I use disk shares.

During testing, deletes were done from a Windows client. In all test cases, I am deleting:

    \\Servername\DiskOrPoolName\UserShare\FolderWithFile

If the user share resides on an array disk (they are all Use cache pool=no), the folder and file show up in the disk’s .Recycle.Bin folder as expected.

If the user share resides on the cache pool (they are all Use cache pool=only), the folder appears in the pool’s .Recycle.Bin without the file.

The strange thing is, during testing I created a new user share in the cache pool, and it is working as expected. None of my other pools had previously defined user shares, and newly defined shares work OK.

tower7-diagnostics-20230403-1145.zip

-

An interesting observation, assuming disk shares are enabled:

If you create a folder off the root of a ZFS array disk or pool, a share is created.

If instead you create the share using the Shares tab, a dataset is created with the name of the share, and it's mounted in the expected location.

-

Sorry, was on the wrong docker hub tag page

-

@binhex, FYI

Trying to explicitly specify Repository binhex/arch-qbittorrentvpn:1.31.1.6733-1-01 fails with:

    Unable to find image 'binhex/arch-qbittorrentvpn:1.31.1.6733-1-01' locally
    docker: Error response from daemon: manifest for binhex/arch-qbittorrentvpn:1.31.1.6733-1-01 not found: manifest unknown: manifest unknown.

Building with :latest works fine, which I assume pulls the same version.