juchong

Members

Posts: 39


Everything posted by juchong

-

I run a massive Plex server built on Unraid, so in my world, stability is critical. I highly recommend using a USB drive that exclusively uses SLC NAND flash. They're not cheap, but I personally use a 4GB Swissbit drive for my server (link). I've had it in operation for almost two years with zero flash-related downtime. Here's a link to a few USB drives that should also offer similar reliability. All drives are guaranteed to meet industrial specifications and come with datasheets!

-

Unraid cannot manage, create, or add pools with > 30 drives

juchong commented on juchong's report in Prereleases

@JorgeB it's quite unfortunate that admin edits to posts are not viewable by the public. Your initial response was not very professional and highly dismissive. I'll be reaching out to support about this and requesting a refund. You can't claim to support ZFS without supporting n number of drives. -

Unraid cannot manage, create, or add pools with > 30 drives

juchong commented on juchong's report in Prereleases

This is a garbage limitation. I'm trying to optimize space utilization and hard links. How am I supposed to do that? How can Unraid claim to support ZFS when it can't even support a fundamental feature of ZFS by imposing an artificial limit?! -

GUI does not properly assign device numbers when removed from a zfs pool

juchong commented on juchong's report in Prereleases

I assume it shouldn't happen. This is why I'm reporting a bug. 🙂 -

I have a server with 45 x 18TB drives in it. XFS is not an option because an array of >28 data drives is not supported and the rebuild times on such a massive array are gross. ZFS arrays expanded via the command line to contain > 30 drives seemingly break the GUI. The specific configuration I'm trying to set up is: RAIDZ-2, 15 drives per VDEV, 3 VDEVs total. I'm trying to add all the drives in the attached image to a single pool (ZFS or otherwise).
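For a rough sense of what that layout yields, here is a minimal Python sketch of the arithmetic. It assumes each RAIDZ-2 VDEV gives up two drives' worth of space to parity and ignores ZFS metadata, padding, and reserved space, so real usable capacity will be lower. The function names are made up for illustration.

```python
def total_drives(vdevs, drives_per_vdev):
    """Number of physical drives the layout consumes."""
    return vdevs * drives_per_vdev


def raidz_usable_tb(vdevs, drives_per_vdev, parity, drive_tb):
    """Idealized usable capacity in TB: data drives times drive size.

    Ignores ZFS overhead (metadata, padding, reserved space), so treat
    the result as an upper bound rather than what the pool will report.
    """
    if drives_per_vdev <= parity:
        raise ValueError("each VDEV needs more drives than parity devices")
    data_drives = vdevs * (drives_per_vdev - parity)
    return data_drives * drive_tb


if __name__ == "__main__":
    # 3 VDEVs x 15 drives, RAIDZ-2 (2 parity drives per VDEV), 18TB drives
    print(total_drives(3, 15))            # 45
    print(raidz_usable_tb(3, 15, 2, 18))  # 702
```

So the 45-drive layout tops out at 702TB before overhead, with 39 drives' worth of data and 6 drives' worth of parity.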

-

GUI does not properly assign device numbers when removed from a zfs pool

juchong commented on juchong's report in Prereleases

Try this:

1. Create a ZFS pool
2. Assign drives
3. Start the array
4. Stop the array
5. Remove the drives from the pool
6. Delete the pool
7. Watch as the GUI assigns duplicate "Dev X" numbers (see image above)

-

It looks like I've also run into some of the Docker issues others have found recently. I'm not able to edit or start containers for some reason. I've verified that the docker.img and destination drive (/mnt/containers/) have sufficient space. Docker containers seem to be unstable after a reboot and cannot be edited. Help!

    docker run -d --name='unpackerr' --net='bridge' -e TZ="America/Los_Angeles" -e HOST_OS="Unraid" -e HOST_HOSTNAME="thecloud" -e HOST_CONTAINERNAME="unpackerr" -e 'PUID'='99' -e 'PGID'='100' -e 'UMASK'='002' -l net.unraid.docker.managed=dockerman -l net.unraid.docker.icon='https://hotio.dev/webhook-avatars/unpackerr.png' -v '/mnt/containers/appdata/unpackerr/':'/config':'rw' -v '/mnt/user/data':'/data':'rw' 'cr.hotio.dev/hotio/unpackerr'
    90b192dd883262e3983c7995d8a1160d2b7016fd747470472e6b8876ea458afd
    docker: Error response from daemon: failed to start shim: symlink /var/lib/docker/containerd/daemon/io.containerd.runtime.v2.task/moby/90b192dd883262e3983c7995d8a1160d2b7016fd747470472e6b8876ea458afd /var/run/docker/containerd/daemon/io.containerd.runtime.v2.task/moby/90b192dd883262e3983c7995d8a1160d2b7016fd747470472e6b8876ea458afd/work: no space left on device: unknown.

    The command failed.

thecloud-diagnostics-20230530-2238.zip
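The error names two paths, one under /var/lib/docker and one under /var/run/docker, so it may be worth checking every filesystem involved rather than only docker.img and /mnt/containers. "No space left on device" can also be reported when a filesystem runs out of inodes, so the sketch below prints both. This is only a diagnostic sketch, not a confirmed cause; it assumes Python 3 is available wherever you run it, and the helper names are made up.

```python
import os
import shutil


def nearest_existing(path):
    """Walk up the tree until an existing path is found."""
    path = os.path.abspath(path)
    while not os.path.exists(path):
        parent = os.path.dirname(path)
        if parent == path:  # reached the filesystem root
            break
        path = parent
    return path


def space_report(path):
    """Free/total bytes and inodes for the filesystem holding `path`."""
    usage = shutil.disk_usage(path)
    vfs = os.statvfs(path)  # Unix-only call
    return {
        "path": path,
        "free_bytes": usage.free,
        "total_bytes": usage.total,
        "free_inodes": vfs.f_favail,
        "total_inodes": vfs.f_files,
    }


if __name__ == "__main__":
    # Paths taken from the post and the error message above.
    for wanted in ("/var/lib/docker", "/var/run/docker", "/mnt/containers"):
        report = space_report(nearest_existing(wanted))
        print(
            f"{wanted} (checked at {report['path']}): "
            f"{report['free_bytes']} of {report['total_bytes']} bytes free, "
            f"{report['free_inodes']} of {report['total_inodes']} inodes free"
        )
```

If one of the three shows little or no free space or inodes, that filesystem is the one to look at first.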

-

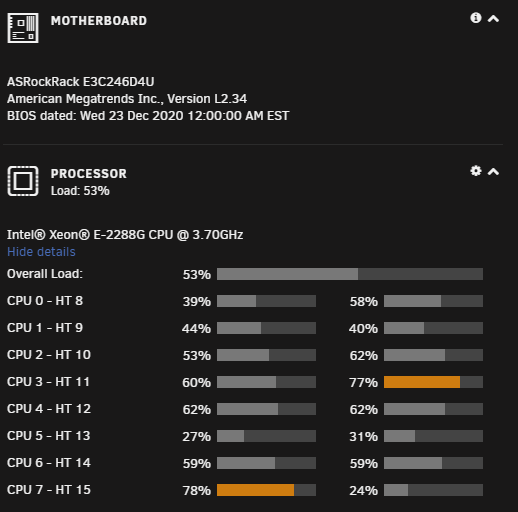

Hi folks, I wanted to ask for feedback on a massive shift in methodology that I'm considering for my server. If you take a look at my signature, you'll see that I'm running some serious hardware. However, things are about to get even crazier since I've recently acquired a Storinator chassis and a 64-core EPYC CPU. I'm at a crossroads; here are my options:

1. Continue using Unraid and XFS - My overall server capacity is limited and rebuilds take ~3 days to complete. Things are working well enough, but everything is slow. I feel like I've outgrown XFS.
2. Continue using Unraid, but swap the array to ZFS - ZFS on Unraid is still a beta feature. I'm also not sure whether it's possible to set up ZFS as the primary array format in the Unraid GUI. How stable is Unraid + ZFS?
3. Move to TrueNAS and leave Unraid behind - This will involve LOTS of time investment to bring up services like I have on Unraid already. It's an entirely new system to learn, tune, and optimize. The community isn't as vibrant and helpful.

Note: Don't worry about moving/storing data between drive format changes. I will have enough drives available to temporarily hold all the data. Also, most of the drives in the array are currently 18TB, so it's not a big deal for me to build ZFS pools with similar drives.

PLEASE leave your comments below. I'm very eager to hear everyone's thoughts on this.

-

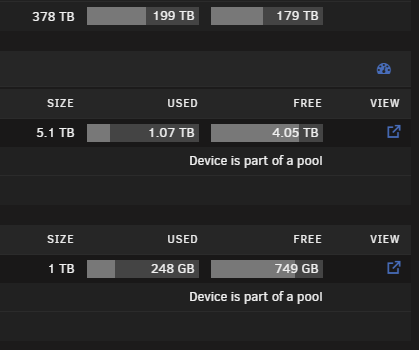

I've had good luck finding 18TB enterprise drives for cheap, so the array has grown significantly since I last posted an update. My signature lists out the hardware, so instead, I'll share a picture of the drive breakdown shown in Unraid, plus a glamour shot of the server itself. Note: This was taken before I added the second P1000 and moved a few cards around. The server is currently colocated rather than being kept at home.

-

Hi folks! I recently put together a Docker container that builds and runs shapeshifter-dispatcher, a utility designed to obfuscate packets to evade IDS, firewalls, and other censorship tools. I'm personally using this tool to evade IDS systems at event venues by obfuscating OpenVPN packets, but I figured others could benefit from it, too. The container does not have a GUI or any other fancy features, but it is very effective at what it's designed to do.

Dockerhub: https://hub.docker.com/r/juchong/shapeshifter-docker

GitHub: https://github.com/juchong/shapeshifter-docker

Base Project GitHub: https://github.com/OperatorFoundation/shapeshifter-dispatcher

Enjoy!

-

I posted my setup a while ago, but it's seen quite a few updates since then. I consolidated the backup drives into the primary system, so it's now sitting at 378TB usable on the main array. The "unpacking" cache array is sitting at 5.1TB usable (U.2 NVMEs), and the "containers" array is sitting at 1TB (M.2 NVMEs). All the drives live inside a 36-bay, 4U Supermicro chassis. The server is currently colocated inside a local datacenter. 😁

-

Hi! Long-time Unraid user here. I recently had to run to the datacenter to replace a component and discovered that I was unable to click (tap?) on any dialog boxes that popped up using any mobile browser. I'm using an iPhone 13 Pro running the latest iOS version. No matter what browser I tried (Chrome, Firefox, Safari), I was not able to tap on the "Proceed" button that appears when you initiate a shutdown. I had to resort to using the technician's "crash cart" to log into the Unraid console and shut off the server that way. Is there anything I can do to help debug the issue? Maybe there's another way to manage the server using a mobile browser? Thanks!

-

It's been a while since I played with this because I ended up selling my fusionIO drive in favor of U.2 NVMEs, but ich777 is correct. You can absolutely use his Docker container to build the kernel modules as a plugin, but it'll be a massive pain to keep up with releases. Here's my repository with the build script I was using for testing. YMMV: https://github.com/juchong/unraid-fusionio-build

-

I wanted to thank the team for how seamless they've made the backup and restore process! My USB drive failed last night, but luckily, the latest configuration was stored safely in the cloud. Restoring the configuration was extremely easy and got the server up and running in just a few minutes. A+ work, everyone!

-

Intel Socket 1151 Motherboards with IPMI AND Support for iGPU

juchong replied to Hoopster's topic in Motherboards and CPUs

Your post is what tipped me off to there being a DOS-based utility available. I was already contemplating manually programming the ROM using an external programmer. -

Intel Socket 1151 Motherboards with IPMI AND Support for iGPU

juchong replied to Hoopster's topic in Motherboards and CPUs

I recently transplanted my E3C246D4U into a new case and accidentally got the UID button on the back of the board stuck. Once I powered the server on, I ran into the dreaded "BMC Self Test Status Failed" error. After a bit of digging, I found a utility posted by ASRock that allows the BMC to be flashed in DOS. This utility, along with the most up-to-date BMC firmware downloaded from their website, got me up and running! Here's a link to the ASRock website. I've also attached the utility to this post in case it disappears from the internet.

socflash v1.20.00.zip

-

I'm having a similar issue as well... What's going on?!

-

Hey! I'm seeing a similar issue on my server as well. Here's the relevant syslog:

    Feb 17 19:46:21 thecloud root: error: /plugins/unassigned.devices/UnassignedDevices.php: wrong csrf_token
    Feb 17 19:46:25 thecloud root: error: /plugins/unassigned.devices/UnassignedDevices.php: wrong csrf_token
    Feb 17 19:46:29 thecloud root: error: /plugins/unassigned.devices/UnassignedDevices.php: wrong csrf_token
    Feb 17 19:46:33 thecloud root: error: /plugins/unassigned.devices/UnassignedDevices.php: wrong csrf_token
    Feb 17 19:46:37 thecloud root: error: /plugins/unassigned.devices/UnassignedDevices.php: wrong csrf_token
    Feb 17 19:46:41 thecloud root: error: /plugins/unassigned.devices/UnassignedDevices.php: wrong csrf_token
    Feb 17 19:46:45 thecloud root: error: /plugins/unassigned.devices/UnassignedDevices.php: wrong csrf_token
    Feb 17 21:09:50 thecloud vsftpd[24107]: connect from 10.0.10.1 (10.0.10.1)
    Feb 17 21:09:50 thecloud in.telnetd[24108]: connect from 10.0.10.1 (10.0.10.1)
    Feb 17 21:09:50 thecloud sshd[24109]: Connection from 10.0.10.1 port 36967 on 10.0.10.4 port 22 rdomain ""
    Feb 17 21:09:50 thecloud sshd[24109]: error: kex_exchange_identification: Connection closed by remote host
    Feb 17 21:09:50 thecloud sshd[24109]: Connection closed by 10.0.10.1 port 36967
    Feb 17 21:09:50 thecloud vsftpd[24113]: connect from 10.0.10.1 (10.0.10.1)
    Feb 17 21:09:55 thecloud telnetd[24108]: ttloop: peer died: EOF
    Feb 17 21:10:01 thecloud smbd[24110]: [2021/02/17 21:10:01.582271, 0] ../../source3/smbd/process.c:341(read_packet_remainder)
    Feb 17 21:10:01 thecloud smbd[24110]: read_fd_with_timeout failed for client 10.0.10.1 read error = NT_STATUS_END_OF_FILE.

    Feb 17 21:53:56 thecloud kernel: Linux version 5.10.1-Unraid (root@Develop) (gcc (GCC) 9.3.0, GNU ld version 2.33.1-slack15) #1 SMP Thu Dec 17 11:41:39 PST 2020
    Feb 17 21:53:56 thecloud kernel: Command line: BOOT_IMAGE=/bzimage initrd=/bzroot console=ttyS0,115200 console=tty0
    Feb 17 21:53:56 thecloud kernel: x86/fpu: Supporting XSAVE feature 0x001: 'x87 floating point registers'
    Feb 17 21:53:56 thecloud kernel: x86/fpu: Supporting XSAVE feature 0x002: 'SSE registers'
    Feb 17 21:53:56 thecloud kernel: x86/fpu: Supporting XSAVE feature 0x004: 'AVX registers'
    Feb 17 21:53:56 thecloud kernel: x86/fpu: Supporting XSAVE feature 0x008: 'MPX bounds registers'
    Feb 17 21:53:56 thecloud kernel: x86/fpu: Supporting XSAVE feature 0x010: 'MPX CSR'
    Feb 17 21:53:56 thecloud kernel: x86/fpu: xstate_offset[2]: 576, xstate_sizes[2]: 256
    Feb 17 21:53:56 thecloud kernel: x86/fpu: xstate_offset[3]: 832, xstate_sizes[3]: 64
    Feb 17 21:53:56 thecloud kernel: x86/fpu: xstate_offset[4]: 896, xstate_sizes[4]: 64
    Feb 17 21:53:56 thecloud kernel: x86/fpu: Enabled xstate features 0x1f, context size is 960 bytes, using 'compacted' format.
    Feb 17 21:53:56 thecloud kernel: BIOS-provided physical RAM map:
    Feb 17 21:53:56 thecloud kernel: BIOS-e820: [mem 0x0000000000000000-0x000000000009ebff] usable
    Feb 17 21:53:56 thecloud kernel: BIOS-e820: [mem 0x000000000009ec00-0x000000000009ffff] reserved
    Feb 17 21:53:56 thecloud kernel: BIOS-e820: [mem 0x00000000000e0000-0x00000000000fffff] reserved
    Feb 17 21:53:56 thecloud kernel: BIOS-e820: [mem 0x0000000000100000-0x000000003fffffff] usable
    Feb 17 21:53:56 thecloud kernel: BIOS-e820: [mem 0x0000000040000000-0x00000000403fffff] reserved

@gdeyoung do you happen to have a Ubiquiti device acting as your gateway/router? The reason I ask is because my USG Pro was actively trying to log into the server via ssh/telnet when it hung.
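For anyone comparing logs, a quick way to see which messages repeat (like the csrf_token errors above) is to tally them per process. Here's a minimal Python sketch. It assumes the classic "Mon DD HH:MM:SS host process[pid]: message" layout shown in the excerpt; the regular expression and function names are my own and may need adjusting for other syslog formats.

```python
import re
from collections import Counter

# Matches lines such as:
#   Feb 17 21:09:50 thecloud sshd[24109]: Connection closed by ...
LINE_RE = re.compile(
    r"^(?P<ts>[A-Z][a-z]{2}\s+\d+\s[\d:]{8})\s"   # timestamp, e.g. "Feb 17 19:46:21"
    r"(?P<host>\S+)\s"                            # hostname
    r"(?P<proc>[^:\[\s]+)(?:\[\d+\])?:\s"         # process name, optional [pid]
    r"(?P<msg>.*)$"                               # rest of the line
)


def parse_line(line):
    """Return a dict of fields for one syslog line, or None if it doesn't match."""
    match = LINE_RE.match(line.strip())
    return match.groupdict() if match else None


def count_messages(lines):
    """Count identical (process, message) pairs across the given lines."""
    counts = Counter()
    for line in lines:
        fields = parse_line(line)
        if fields is not None:
            counts[(fields["proc"], fields["msg"])] += 1
    return counts


if __name__ == "__main__":
    import sys

    # Usage: python count_syslog.py < /var/log/syslog
    for (proc, msg), n in count_messages(sys.stdin).most_common(10):
        print(f"{n:5d}  {proc}: {msg}")
```

Run against the excerpt above, the csrf_token error from root would come out on top with seven hits.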

-

Intel Socket 1151 Motherboards with IPMI AND Support for iGPU

juchong replied to Hoopster's topic in Motherboards and CPUs

Hi folks, I appreciate the recommendation (and the efforts on getting the updated BIOS from ASRock)! I swapped my old setup over to the new board, processor, and RAM without any issues. -

Intel Socket 1151 Motherboards with IPMI AND Support for iGPU

juchong replied to Hoopster's topic in Motherboards and CPUs

IPMI is high on my list of "wants" for sure. I assumed that the M.2 slot was shared with Slot #5, but now I'm not sure after reading through the product page. My current setup uses an i9 9900k and a C9Z390-PGW, so it's not "bad", but I really miss IPMI, accurate temperature readings, and ECC. -

Intel Socket 1151 Motherboards with IPMI AND Support for iGPU

juchong replied to Hoopster's topic in Motherboards and CPUs

Hi everyone! I know folks have been talking about the E3C246D4U (mini-ITX), but does anyone know if there's a similarly well-supported ATX board that you'd recommend? I have a U.2 drive that I use as my main cache drive along with an M.2, Intel fiber card, and the JBOD controller, so a mini-ITX board won't really cut it. -

Hi folks, I inadvertently ran into an issue with the fan speed plugin over the weekend that I thought might be important to report. It's worth pointing out that the fan plugin worked perfectly for several months. My motherboard is a Supermicro C9Z390-PGW, and the drivers loaded are coretemp and nct6775.

I've been putting off a BIOS update for several months, so I finally took the time to shut the server down and take care of it. After booting everything back up, I discovered that the Unraid GUI would stop working (crash) a few seconds after the boot process finished. Docker, VMs, network shares, etc., all continued working, and only the GUI would crash.

Digging through the logs, I traced the problem back to the auto fan plugin, which for some reason was causing the GUI to crash. I managed to remove the plugin using the console, and after doing so, the GUI started up again. I suspect that the BIOS update somehow changed the path to a hardware resource and the plugin didn't handle the change correctly. I'm not sure what the scripts that make up the plugin look like, but it might be worth adding a check at start-up to handle any exceptions that might be thrown if allocated resources are missing. The plugin worked as expected after re-installation. I hope this helps!
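To illustrate the kind of start-up check I mean, here's a minimal Python sketch. I haven't read the plugin's scripts and they are almost certainly not written in Python, so this only shows the idea: confirm that every configured sensor or fan path still exists before starting, and bail out cleanly if one is missing. The function names and the example path are hypothetical.

```python
import os


def missing_resources(paths):
    """Return the configured paths that no longer exist on this system."""
    return [p for p in paths if not os.path.exists(p)]


def safe_start(paths, start):
    """Run `start()` only if every configured path is present.

    Returns (started, missing) so the caller can log the problem and
    stay disabled instead of failing in a way that affects the GUI.
    """
    missing = missing_resources(paths)
    if missing:
        return False, missing
    start()
    return True, []


if __name__ == "__main__":
    # Hypothetical saved configuration; a BIOS update could change the
    # hwmon numbering and leave a path like this pointing at nothing.
    configured = ["/sys/class/hwmon/hwmon2/pwm1"]
    started, missing = safe_start(configured, lambda: print("fan control started"))
    if not started:
        print("fan control disabled, missing:", ", ".join(missing))
```

The point is just that a stale path gets reported and skipped rather than raising an error during boot.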

-

Here's my contribution to the storage party. The main server has 224TB usable, the backup server has 126TB usable. The main server drives are housed in a Chenbro NR40700 case and the backup server is housed in a Supermicro CSE-826. I'll need to upgrade the backup server at some point since every drive bay is full.