foo_fighter

Members, 204 posts

Everything posted by foo_fighter

-

With only one data drive, single parity is effectively a mirror. You can also use a ZFS pool of 2 devices and assign 1 throwaway device (a USB flash drive) to the array. Then you get scrub/bit-rot protection.

-

Try to get cameras compatible with RTSP/ONVIF. Frigate with a Coral TPU seems like a popular NVR choice: https://frigate.video

-

Fractal Design chassis comparison - Meshify 2 vs Define R5

foo_fighter replied to Rajahal's topic in Hardware

Depending on your budget, I would throw this case in for consideration: https://www.silverstonetek.com/en/product/info/computer-chassis/cs382/

Problems with Unraid if the owner passes away....

foo_fighter replied to TRusselo's topic in General Support

Have you tried a microSD card reader with a high-endurance SD card? Also, only use USB 2.0 ports.

I meant "it exists" as in the hardware (the 4BayPlus has been at CES and in the hands of dozens of reviewers), not that you could buy it on Amazon yet. The older 4-bay version has existed for years in the Chinese domestic market and was also sent out to beta testers to test the built-in software. I think the hardware is close to the final revision, but the software is still baking, which is why a lot of people are asking to run 3rd-party OSes like unRaid, TrueNAS, or OMV.

-

Is my brand new 6tb WD Red bad?

foo_fighter replied to TheSnuke's topic in Storage Devices and Controllers

Were they all pre-cleared?

Umm, for one thing it exists?! (Sorry to those who backed Storaxa.) Kickstarter was a strange approach for an established company, but it did generate a lot of publicity. I see it as a nice off-the-shelf hardware solution that can hopefully run unRaid well, similar to the LincStation but in more varieties. I think it would be difficult to build something at the price point it was initially offered at. It ships with its own OS, but that OS is not fully mature and not as flexible as unRaid.

-

Did anyone get in on the Kickstarter? Which model? Hopefully there will be a critical mass of unRaid users to get everything working: fan control, S3 sleep, WoL, LED control, 10GbE Ethernet drivers, the lowest-power C-states (it uses the ASM116X SATA controllers), even the watchdog timer.

-

Why not use an external USB drive and Unassigned Devices?

-

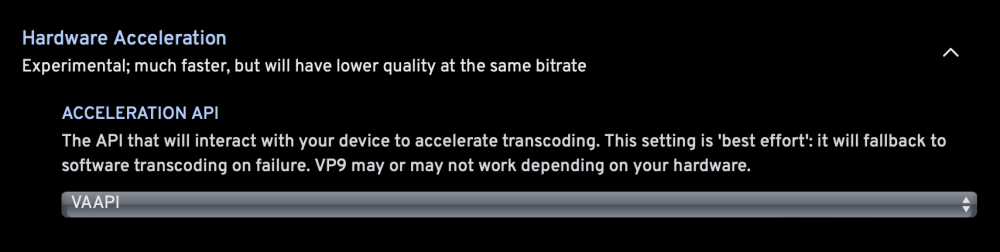

Edit: I'm seeing some instability (frozen machine) after the edits. I'm not sure if it's related to Immich/Postgres 16, but there are some other reports of hung machines. It wasn't working for me either, but I just updated the containers and switched to SIO's Postgres container, and it seems like it may be working now. I used to just see tons of FFmpeg errors in the log and turned off HW acceleration. The Quick Sync setting seemed to generate FFmpeg errors. I'm trying VAAPI:

-

You can use post commands to restore the speed (and other things).

-

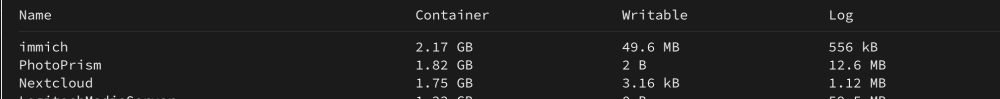

I have about 100k photos/videos. I haven't figured out how to get the iGPU/Quick Sync to work yet, so it's using software transcoding. ML is turned on for facial recognition, but that just uses the CPU, I believe (I don't have any HW accelerators). Have you checked your paths? All generated data should be outside of the docker image, right?

-

I see this endless cycle in syslog. But you're saying that the read SMART is caused by the spin up, not the other way around?

Feb 18 06:23:32 Tower s3_sleep: All monitored HDDs are spun down
Feb 18 06:23:32 Tower s3_sleep: Extra delay period running: 18 minute(s)
Feb 18 06:24:32 Tower s3_sleep: All monitored HDDs are spun down
Feb 18 06:24:32 Tower s3_sleep: Extra delay period running: 17 minute(s)
Feb 18 06:25:32 Tower s3_sleep: All monitored HDDs are spun down
Feb 18 06:25:32 Tower s3_sleep: Extra delay period running: 16 minute(s)
Feb 18 06:25:34 Tower emhttpd: read SMART /dev/sdf
Feb 18 06:26:32 Tower s3_sleep: Disk activity on going: sdf
Feb 18 06:26:32 Tower s3_sleep: Disk activity detected. Reset timers.
Feb 18 06:27:33 Tower s3_sleep: Disk activity on going: sdf
Feb 18 06:27:33 Tower s3_sleep: Disk activity detected. Reset timers.
Feb 18 06:28:33 Tower s3_sleep: Disk activity on going: sdf
Feb 18 06:28:33 Tower s3_sleep: Disk activity detected. Reset timers.
Feb 18 06:29:33 Tower s3_sleep: All monitored HDDs are spun down
Feb 18 06:29:33 Tower s3_sleep: Extra delay period running: 25 minute(s)
Feb 18 06:30:14 Tower emhttpd: spinning down /dev/sde
Feb 18 06:30:33 Tower s3_sleep: All monitored HDDs are spun down
Feb 18 06:30:33 Tower s3_sleep: Extra delay period running: 24 minute(s)
Feb 18 06:31:33 Tower s3_sleep: All monitored HDDs are spun down
Feb 18 06:31:33 Tower s3_sleep: Extra delay period running: 23 minute(s)
Feb 18 06:31:41 Tower emhttpd: read SMART /dev/sde
Feb 18 06:32:34 Tower s3_sleep: Disk activity on going: sde
Feb 18 06:32:34 Tower s3_sleep: Disk activity detected. Reset timers.
Feb 18 06:32:34 Tower emhttpd: read SMART /dev/sdh
Feb 18 06:33:34 Tower s3_sleep: Disk activity on going: sdh
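To make the ordering in that excerpt easier to see, here is a rough Python sketch that pairs each "read SMART" line with the next s3_sleep activity line for the same disk and reports the gap in seconds. The sample lines are copied from the log above; the function name and the year (syslog lines don't carry one) are my own assumptions. It only shows which message was logged first, it can't prove which event caused the other.

```python
import re
from datetime import datetime

# A few lines copied from the syslog excerpt above.
LOG = """\
Feb 18 06:25:34 Tower emhttpd: read SMART /dev/sdf
Feb 18 06:26:32 Tower s3_sleep: Disk activity on going: sdf
Feb 18 06:30:14 Tower emhttpd: spinning down /dev/sde
Feb 18 06:31:41 Tower emhttpd: read SMART /dev/sde
Feb 18 06:32:34 Tower s3_sleep: Disk activity on going: sde
Feb 18 06:32:34 Tower emhttpd: read SMART /dev/sdh
Feb 18 06:33:34 Tower s3_sleep: Disk activity on going: sdh
"""

LINE = re.compile(r"^(\w{3} +\d+ \d\d:\d\d:\d\d) \S+ (\S+): (.*)$")
SMART = re.compile(r"read SMART /dev/(\w+)")
ACTIVITY = re.compile(r"Disk activity on going: (\w+)")


def smart_to_activity_delays(log_text, year=2024):
    """Return [(disk, seconds)] from each 'read SMART' to the next
    s3_sleep activity line for the same disk.

    The year is an assumption; the log itself does not include one.
    """
    pending = {}  # disk -> time of the last unmatched "read SMART"
    delays = []
    for raw in log_text.splitlines():
        match = LINE.match(raw)
        if not match:
            continue
        stamp, _process, message = match.groups()
        when = datetime.strptime(f"{year} {stamp}", "%Y %b %d %H:%M:%S")
        smart = SMART.search(message)
        activity = ACTIVITY.search(message)
        if smart:
            pending[smart.group(1)] = when
        elif activity and activity.group(1) in pending:
            disk = activity.group(1)
            delays.append((disk, (when - pending.pop(disk)).total_seconds()))
    return delays


for disk, seconds in smart_to_activity_delays(LOG):
    print(f"{disk}: activity reported {seconds:.0f}s after read SMART")
```

In this excerpt the SMART read is always logged before s3_sleep reports activity, but s3_sleep only seems to check about once a minute, so the gap mostly reflects its polling interval.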

-

Is "rsync rsync" a typo? Would you consider adding -X to preserve extended attributes for things like the Dynamic File Integrity plugin?
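For anyone wondering what that would look like, here is a small Python sketch that builds the rsync argument list with -X added. Note that -a (archive) does not include -X, so it has to be passed explicitly. The helper name and both paths are made up for illustration and are not taken from the script being discussed.

```python
import shlex


def rsync_command(src, dst, keep_xattrs=True):
    """Build an rsync argument list; -X (--xattrs) is not implied by -a."""
    args = ["rsync", "-a"]
    if keep_xattrs:
        args.append("-X")  # preserve extended attributes
    args += [src, dst]
    return args


# Hypothetical source and destination paths.
cmd = rsync_command("/mnt/disk1/", "/mnt/disks/backup/disk1/")
print(shlex.join(cmd))
```

The list form can be handed to `subprocess.run(cmd, check=True)` if you want to run it from a script rather than just print it.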

-

The only way to do this with a live file system is with replication, as on ZFS (or BTRFS). You can use sanoid/syncoid to set up replication of ZFS snapshots.

-

Anyone used a HBA in a m.2 -> pcie adapter?

foo_fighter replied to dopeytree's topic in Storage Devices and Controllers

I have 6 motherboard SATA ports on an old motherboard with no M.2 slots, plus an LSI HBA, but I'm thinking of consolidating to only the motherboard ports to save power. The HBA prevents lower C-states. I only have 4TB-8TB drives with one 14TB parity drive, so I could easily consolidate to 2 or 3 18TB drives. 18TB drives were $200 during the Black Friday sales and ~$150 for pre-owned server pulls. They were the sweet spot at the end of last year. Some M.2 slots are only PCIe 3.0 x1, so 6 SATA drives would saturate the bandwidth. I've also been looking at some pre-built systems with 4-6 HDDs and 2 M.2 slots. There I'd really need the high-capacity HDDs.
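Rough numbers behind the PCIe 3.0 x1 comment, as a quick Python sketch. The lane rate (8 GT/s with 128b/130b encoding) is from the PCIe 3.0 spec; the 250 MB/s per-drive figure is just my assumption for a large HDD reading sequentially, and protocol overhead is ignored.

```python
PCIE3_GT_PER_S = 8.0   # PCIe 3.0 raw rate per lane, gigatransfers per second
ENCODING = 128 / 130   # 128b/130b line encoding efficiency


def pcie3_link_mb_s(lanes=1):
    """Approximate PCIe 3.0 link bandwidth in MB/s, before protocol overhead."""
    return PCIE3_GT_PER_S * ENCODING / 8 * 1000 * lanes


HDD_MB_S = 250  # assumed sequential throughput per drive, not a measured value
DRIVES = 6

link = pcie3_link_mb_s(1)
demand = DRIVES * HDD_MB_S
print(f"x1 link: {link:.0f} MB/s, 6 drives at full speed: {demand} MB/s")
print(f"per-drive share when all 6 read at once: {link / DRIVES:.0f} MB/s")
```

This mostly matters when every drive is read at the same time, such as during a parity check; with only one or two drives active the x1 link is not the limit.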

Anyone used a HBA in a m.2 -> pcie adapter?

foo_fighter replied to dopeytree's topic in Storage Devices and Controllers

Why would you need one? Do you need more than 6 ports? There are 6-port M.2 to SATA adapters based on the ASM1166 chipset.

If 1 external drive can hold all of the data, that would be a convenient way to go. Rsync should work fine. You'd want to make sure /mnt/disk*/* is synced over to the backup drive. Another way would be to use Unbalance(d) to zero out and convert one drive at a time by moving one disk's contents to the other disks. That's assuming you have the spare capacity. I'm curious why you want to convert the entire array over. You'll get some of the benefits of ZFS but not all of them (you can check for corrupted files but not repair them, for example).
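The "spare capacity" condition for the one-disk-at-a-time approach can be checked with a few lines of Python. This is only a back-of-the-envelope sketch: the disk names and sizes are invented, and it ignores the fact that individual files can't be split across disks.

```python
def can_empty(disks, name):
    """True if the data on `name` fits into the free space of the other disks.

    disks maps a disk name to (used_tb, size_tb).
    """
    used, _size = disks[name]
    free_elsewhere = sum(
        size - in_use
        for other, (in_use, size) in disks.items()
        if other != name
    )
    return used <= free_elsewhere


# Hypothetical array, values in TB.
array = {
    "disk1": (3.0, 4.0),
    "disk2": (5.0, 8.0),
    "disk3": (7.5, 8.0),
}

for disk in array:
    print(disk, "can be emptied" if can_empty(array, disk) else "does not fit")
```

If no disk passes the check, the external backup drive route is the simpler option.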

-

6.12.6 Open main tab spinup all disks in Array

foo_fighter commented on zixuan's report in Stable Releases

If you use zfs_master, set the refresh to manual.

You can find the PAR2 util in nerd-pack/tools.

-

Both NTFS and exFAT can store extended attributes, so yes, DFI (or bunker from the command line) can run on those drives and files.
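If you want to confirm that a particular mount actually accepts extended attributes before running DFI on it, a quick probe like this Python sketch should tell you. The attribute name is a throwaway one I made up for the test, not the name DFI or bunker uses, and the mount path in the usage note is hypothetical.

```python
import os
import tempfile


def supports_user_xattrs(directory):
    """Try to write and read back a user.* attribute on a temp file."""
    if not hasattr(os, "setxattr"):  # os.setxattr is only available on Linux
        return False
    fd, path = tempfile.mkstemp(dir=directory)
    os.close(fd)
    try:
        # Hypothetical attribute name, used only for this probe.
        os.setxattr(path, "user.example.probe", b"1")
        return os.getxattr(path, "user.example.probe") == b"1"
    except OSError:
        # Filesystem or mount options do not allow user attributes.
        return False
    finally:
        os.remove(path)


print(supports_user_xattrs(tempfile.gettempdir()))
```

Point it at the mount you care about, for example `supports_user_xattrs("/mnt/disks/mydrive")`. The result depends on the filesystem driver and mount options, so it is worth checking each drive.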

-

With Mover, they are treated as normal files; only the primary, most recent non-snapshotted files are moved over into the array. With Syncoid/Sanoid you can choose individual datasets and sub-datasets.

It can be used in the array. For example, I have my cache drive as ZFS and converted 1 array drive to ZFS so I could use it as a replication target (ZFS->ZFS). The other drives in my array are XFS. I have my appdata (dockers and docker data living on cache) replicated over to the ZFS drive for backups.

It can be. RAID is not backup, so for catastrophic cases (any filesystem) that would be the recovery mechanism.

I was only referring to the ZFS_Master plugin spinning up ZFS drives. It didn't touch any of my XFS drives. I actually set the plugin to manual refresh and it seems to have stopped the spinning up and writing.
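For the cache-to-array replication described above, the per-dataset syncoid calls could be generated with something like this Python sketch. The dataset and pool names are placeholders rather than my actual layout; the only syncoid option used is --recursive, which also replicates child datasets.

```python
def syncoid_commands(datasets, target_root, recursive=True):
    """Build one syncoid argument list per source dataset.

    Each source like 'cache/appdata' is sent to '<target_root>/appdata'.
    """
    commands = []
    for source in datasets:
        # Drop the source pool name and keep the rest of the dataset path.
        name = source.split("/", 1)[1] if "/" in source else source
        command = ["syncoid"]
        if recursive:
            command.append("--recursive")
        command += [source, f"{target_root}/{name}"]
        commands.append(command)
    return commands


# Hypothetical names: a ZFS cache pool and a ZFS-formatted array disk.
for command in syncoid_commands(["cache/appdata"], "disk1/backups"):
    print(" ".join(command))
```

Picking datasets individually is the point here: only what you list gets replicated, unlike Mover, which just moves the current files.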