bnevets27 (Members, 576 posts)

Everything posted by bnevets27

-

Parity Checks Bus limited?

bnevets27 replied to bnevets27's topic in Storage Devices and Controllers

I had 2 problems:
1) Chipset/northbridge/bus limit. The max my board will do is 10 Gbps = 1.25 GB/s, and 1.25 GB/s / 17 disks = 73.5 MB/s per disk.
2) CPU limit. Since parity calculations are single threaded, calculating parity for both my parity disks maxed out the CPU, so it can't write any faster than 75 MB/s; it's waiting for the CPU.
At least that's how I understood it. It is odd that the bus limit and the CPU limit end up at the same number, about 75 MB/s, though. New hardware will definitely remove my bus/MCH/PCH/northbridge (whatever it's called) limit, as the new board has a ton of bandwidth. Not sure what the minimum requirements are to ensure the CPU isn't limiting the speeds, though.
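The bus-limit arithmetic, written out as a small Python sketch. It only restates the numbers in the post (10 Gbps shared by 17 disks) and assumes the bandwidth divides evenly with no protocol overhead, so treat the result as an upper bound rather than a prediction.

```python
# Per-disk ceiling if every disk being read shares one upstream link.
# Assumptions: the 10 Gbit/s northbridge figure from the post is the only
# shared limit, it splits evenly, and units are decimal (1 GB = 1000 MB).

def gbit_to_mb_per_s(gbit_per_s: float) -> float:
    """Convert gigabits per second to megabytes per second (8 bits per byte)."""
    return gbit_per_s * 1000 / 8


def per_disk_ceiling(bus_gbit_per_s: float, disks: int) -> float:
    """Even share of the bus for each disk read in parallel, in MB/s."""
    return gbit_to_mb_per_s(bus_gbit_per_s) / disks


print(f"{gbit_to_mb_per_s(10):.0f} MB/s total")         # 1250 MB/s total
print(f"{per_disk_ceiling(10, 17):.1f} MB/s per disk")  # 73.5 MB/s per disk
```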

Parity Checks Bus limited?

bnevets27 replied to bnevets27's topic in Storage Devices and Controllers

Thanks Johnnie! I feel more sane now 😁 That explains why my parity speeds dropped when I added the second 8TB drive. I had thought the speeds would increase since it was a faster drive, but I obviously had a few bottlenecks. Glad I had already put an upgrade in motion. I'll be running dual Intel Xeon X5650s in the new board (not that dual will help since, as you mentioned, parity calculations are single threaded, which is disappointing), which have a single-thread rating of 1231. Unfortunately that's not much faster; will that CPU still be a bottleneck?

Parity Checks Bus limited?

bnevets27 replied to bnevets27's topic in Storage Devices and Controllers

I had a feeling I was doing a terrible job of explaining/forming that question. Maybe this will explain it better. Would the following statements be correct?

Scenario 1: 8TB Parity 1, 4TB Parity 2. When CPU limited, after passing the 4TB mark, the CPU is free to do calculations solely for the 8TB drive.

Scenario 2: 8TB Parity 1, 8TB Parity 2. When CPU limited, after passing the 4TB mark (of the data disks), the CPU has to split its single-threaded workload between calculating parity for both parity drives.

Scenario 1 would result in faster speeds for the last 4TB than scenario 2, because in scenario 2 the CPU still has to calculate parity for 2 drives over that last 4TB.
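A toy model of the two scenarios, as a Python sketch. It assumes the CPU is the only limit, that the single parity thread has a fixed budget, and that each parity drive still being written costs the same. The 150 MB/s budget is a made-up number for illustration, and in reality the two parity calculations need not cost the same.

```python
# Toy model of the CPU-limited hypothesis. BUDGET_MB_S is hypothetical, and
# treating every parity drive as equally expensive is a simplification.

BUDGET_MB_S = 150.0  # hypothetical: speed the thread could sustain for one parity drive


def speed_past(position_tb: float, parity_sizes_tb: list[float], budget: float) -> float:
    """Check speed at a given position if the CPU budget is split across
    the parity drives that still extend beyond that position."""
    active = sum(1 for size in parity_sizes_tb if size > position_tb)
    if active == 0:
        raise ValueError("position is past the end of every parity drive")
    return budget / active


# Somewhere in the last 4 TB, e.g. at the 6 TB mark:
print(speed_past(6, [8, 4], BUDGET_MB_S))  # Scenario 1: 150.0
print(speed_past(6, [8, 8], BUDGET_MB_S))  # Scenario 2: 75.0
print(speed_past(2, [8, 4], BUDGET_MB_S))  # Scenario 1, first 4 TB: 75.0
```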

Parity Checks Bus limited?

bnevets27 replied to bnevets27's topic in Storage Devices and Controllers

Well, a bug might actually explain it. Unfortunately I don't keep good records of when I updated unraid, but if you look at my parity speeds, they took a hit beginning in December.

I know I installed my first 8TB drive as a parity drive on 2018-03-13. The system had been running dual 4TB parity before that. I had a 4TB WD Black as parity 1 and either a Blue or Green WD 4TB as parity 2, so for the most part my limit was the Blue/Green WD. When I added the 8TB, it replaced the Blue/Green, so I then had an 8TB Seagate Compute and a 4TB WD Black as parity drives. Both should have been decently fast. On top of that, the parity itself was the only disk larger than 4TB, which means that when it built the last 4TB of parity it wasn't limited by any other disk's speed. That would likely explain the very fast average speed of that parity sync (282 MB/s).

When I upgraded my second parity drive to 8TB, I think that's when I noticed my speeds were lower. I tried a few different things at the time, though I never let the parity sync complete, as I was fixated on getting above 74 MB/s, which I now know would never happen.

@johnnie.black would that explain what I saw back when I was running a single 8TB drive, since after 4TB the parity calculations only had to be done for 1 of the 2 parity disks? What I'm trying to say is: if I am CPU limited, I would be less limited with only one drive needing parity calculated past 4TB. As soon as I added the second 8TB, it split my limit between the two drives and therefore lowered my speed after 4TB.

I didn't realize parity checks were single threaded. I could have sworn I saw all my cores active rather than a single one maxed out, but I must have been mistaken (I had nothing else running at the time). Is there any information out there about the CPU specs needed to avoid slowing down a parity check? Is there a recommended single-threaded PassMark score?
| Date | Duration | Speed | Status | Errors |
|---|---|---|---|---|
| 2018-12-21, 07:56:45 | 1 day, 6 hr, 50 min | 72.1 MB/s | OK | 1 |
| 2018-12-19, 06:45:33 | 1 day, 9 hr, 27 min | 66.4 MB/s | OK | 0 |
| 2018-12-17, 00:11:47 | 17 hr, 59 min, 36 sec | 123.5 MB/s | OK | 0 |
| 2018-12-15, 23:03:58 | 1 day, 4 hr, 54 min | 76.9 MB/s | OK | 0 |
| 2018-12-04, 21:47:51 | 1 day, 51 min, 6 sec | 89.4 MB/s | OK | 0 |
| 2018-12-04, 21:47:51 | 1 day, 51 min, 6 sec | 89.4 MB/s | OK | 0 |
| 2018-12-01, 02:35:10 | 1 day, 6 hr, 2 min | 74.0 MB/s | OK | 549 |
| 2018-08-19, 11:57:07 | 12 hr, 56 min, 43 sec | 171.7 MB/s | OK | 0 |
| 2018-03-13, 23:50:01 | 7 hr, 52 min, 25 sec | 282.3 MB/s | OK | 0 |
| 2018-02-08, 17:10:59 | 21 hr, 32 min, 20 sec | 103.2 MB/s | OK | 0 |
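A quick way to sanity-check rows in this history, as a Python sketch. It assumes the reported speed is the average over the whole run (size covered divided by elapsed time) and uses decimal units; under that assumption, speed x duration should land near the size of the largest parity disk.

```python
import re

# Seconds per unit as they appear in the parity history's Duration column.
UNIT_SECONDS = {"day": 86_400, "hr": 3_600, "min": 60, "sec": 1}


def duration_seconds(text: str) -> int:
    """Parse durations such as '1 day, 6 hr, 50 min' or '7 hr, 52 min, 25 sec'."""
    return sum(int(n) * UNIT_SECONDS[unit]
               for n, unit in re.findall(r"(\d+)\s*(day|hr|min|sec)", text))


def implied_tb(duration: str, speed_mb_s: float) -> float:
    """Data covered, in TB, assuming speed = size / elapsed time (decimal units)."""
    return duration_seconds(duration) * speed_mb_s / 1_000_000


rows = [("1 day, 6 hr, 50 min", 72.1),    # 2018-12-21
        ("7 hr, 52 min, 25 sec", 282.3),  # 2018-03-13
        ("1 day, 51 min, 6 sec", 89.4)]   # 2018-12-04
for duration, speed in rows:
    print(f"{duration:>22}  {speed:6.1f} MB/s  ~{implied_tb(duration, speed):.2f} TB")
```

For these three rows each result comes out at roughly 8 TB, which is what you would expect if each run covered a full 8TB parity disk.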

Parity Checks Bus limited?

bnevets27 replied to bnevets27's topic in Storage Devices and Controllers

Yeah, I had just thought of that after hitting submit. That part still doesn't make sense. So the new question is: why doesn't the speed of my three 8TB disks increase once the bus is freed up by no longer reading any of the 4TB drives? It looks as if the 8TB drives continue at the "capped" speed of 74 MB/s even after the bottleneck is removed, which doesn't make sense. While all the drives are being read, HBA2, which holds both my 8TB parity disks and 6 other drives, easily does ~592 MB/s (74 MB/s x 8). But it drops to 148 MB/s (74 MB/s x 2) on that HBA when only 2 drives are being read. It almost seems like a bug?
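If the shared bus were the only limit, the 8TB drives should speed up once the 4TB drives drop out. A minimal Python sketch of that expectation, using a max-min fair ("water-filling") split of one budget. The 1250 MB/s budget is the 1.25 GB/s figure from this thread; the per-disk maximums are hypothetical round numbers. Under this model the three 8TB drives jump to their own maximum after the 4TB mark, which is why a flat 74 MB/s there is hard to explain with the bus alone.

```python
# Max-min fair ("water-filling") split of one shared bandwidth budget.
# Assumptions: 1250 MB/s is the only shared limit, and the per-disk maximums
# below are hypothetical round numbers, not measurements.

def per_disk_speed(bus_mb_s: float, disk_max_mb_s: list[float]) -> list[float]:
    """Give each disk an equal share of the bus, capped at what the disk can do;
    bandwidth a slow disk cannot use is handed on to the faster ones."""
    speeds = [0.0] * len(disk_max_mb_s)
    remaining = bus_mb_s
    order = sorted(range(len(disk_max_mb_s)), key=lambda i: disk_max_mb_s[i])
    for served, i in enumerate(order):
        share = remaining / (len(order) - served)
        speeds[i] = min(disk_max_mb_s[i], share)
        remaining -= speeds[i]
    return speeds


all_disks = [150.0] * 14 + [180.0] * 3  # 14 smaller disks plus the three 8TB drives
only_8tb = [180.0] * 3                  # after the 4TB disks have finished

print([round(s, 1) for s in per_disk_speed(1250, all_disks)][:3])  # [73.5, 73.5, 73.5]
print(per_disk_speed(1250, only_8tb))                              # [180.0, 180.0, 180.0]
```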

Parity Checks Bus limited?

bnevets27 replied to bnevets27's topic in Storage Devices and Controllers

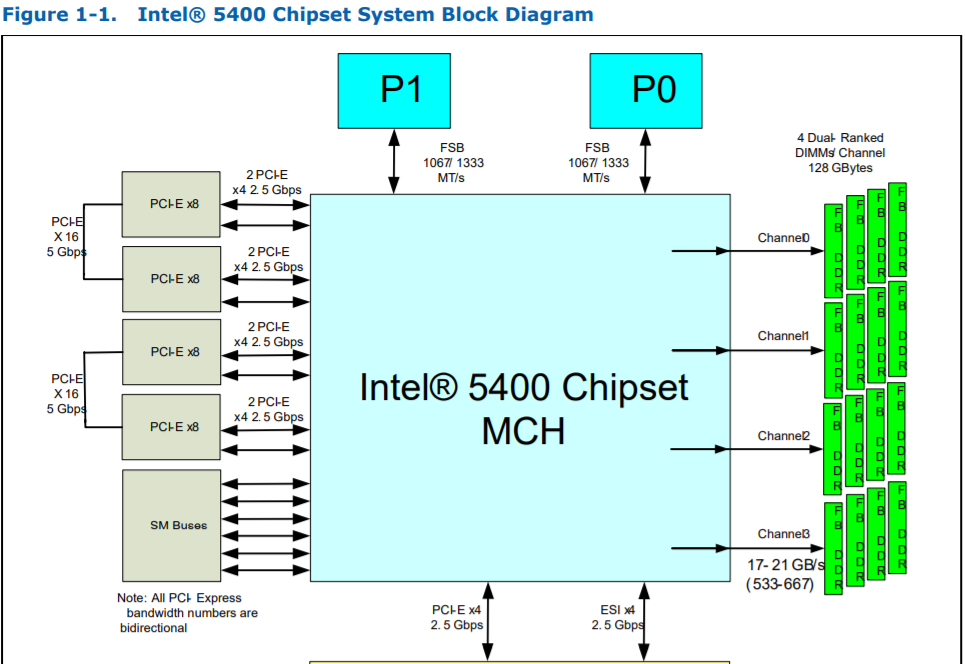

As I was just about to post the following, I finally figured it out. The max bandwidth of my northbridge must be 10 Gbps.

10 Gbps = 1.25 GB/s (10 gigabits per second = 1.25 gigabytes per second)

I can't believe I mixed up gigabits and gigabytes (I always remember it when talking about networking/internet). So it all makes sense now why I'm limited to 1.25 GB/s overall: I am bus/chipset limited. 1.25 GB/s / 17 = 73.5 MB/s.

Thanks for kicking me into figuring this out. It does leave one question, though: why don't my speeds increase when the parity check is only accessing my three 8TB drives? Shouldn't the speed of the 8TB drives go way up once it's done with the 4TB drives?

I'm having a hard time finding a concrete answer for the max bandwidth of the northbridge/MCH. This is the block diagram from Intel: That makes it seem like the MCH should have 2.5 Gbps x 4 = 10 Gbps.

Here's the Supermicro block diagram. I have an HBA in J8, J5 and J9. The HBA in J9 should obviously be moved to an x8 slot. I'm not sure why it isn't, but I do know that at one point it was because it didn't fit in a different slot due to physical interference with another card. That also means I was wrong to say all are attached to the MCH, as J9 goes through the southbridge. I would have to confirm what's going on with HBA3, as I haven't been inside the server in a while. Either way, HBA3 only has 1 disk on it currently, the one 8TB data drive.

The three 8TB drives are on the following HBAs:
Parity 1 and 2 are both on HBA2
Disk 17 is the one and only disk on HBA3

The DiskSpeed docker is reporting:
HBA1 = Current & Maximum Link Speed: 5GT/s width x8 (4 GB/s max throughput)
HBA2 = Current & Maximum Link Speed: 5GT/s width x8 (4 GB/s max throughput)
HBA3 = Current Link Speed: 2.5GT/s width x8 (2 GB/s max throughput)

In the coming weeks this server is getting a new motherboard, CPUs and memory, though only slightly more modern. The new motherboard is the Supermicro X8DAH+-F.
Looking at the datasheet for the Intel 5520 chipset, it shows PCIe gen2 x16 x2 + PCIe gen2 x4 = 18 GB/s, and this motherboard has two of these chipsets, so a total of 36 GB/s. So it too should have enough bandwidth. The current motherboard is going into another server, so I did want to get to the bottom of this for a few reasons: 1) so the issue doesn't follow into the next server, 2) so I don't have the same issue with the new motherboard, 3) so I can understand what's going on.
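The link figures in this post can be reproduced with a short Python sketch. It relies on a general PCIe fact rather than anything Unraid-specific: gen1 (2.5 GT/s) and gen2 (5 GT/s) use 8b/10b encoding, so only 8 of every 10 transferred bits are data. The results are one-direction numbers before protocol overhead, so real throughput will be somewhat lower. Note the units: these are gigabytes per second, not gigabits.

```python
# Usable one-direction bandwidth of a PCIe gen1/gen2 link, before protocol overhead.
def pcie_gb_per_s(gt_per_s: float, lanes: int) -> float:
    raw_gbit = gt_per_s * lanes      # raw line rate in Gbit/s
    data_gbit = raw_gbit * 8 / 10    # 8b/10b encoding: 8 data bits per 10 sent
    return data_gbit / 8             # bits to bytes


print(pcie_gb_per_s(5.0, 8))   # HBA1/HBA2, gen2 x8: 4.0 GB/s
print(pcie_gb_per_s(2.5, 8))   # HBA3, gen1 x8: 2.0 GB/s

# Intel 5520 IOH as described in the post: two gen2 x16 links plus one gen2 x4.
one_ioh = pcie_gb_per_s(5.0, 2 * 16 + 4)
print(one_ioh, 2 * one_ioh)    # 18.0 36.0 (one IOH, two IOHs)
```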

Parity Checks Bus limited?

bnevets27 replied to bnevets27's topic in Storage Devices and Controllers

Just completed another parity build. Updated the OP with the speeds of a few more parity checks. I get the feeling this has to do with the new 8TB Seagate Compute drives, but that theory is hard to believe given that the first 8TB drive was installed on 2018-03-13 with a speed of 282.3 MB/s. I'm now guessing that might be because it was the only 8TB, so it wouldn't have been limited after 4TB (50% of the parity check), as all my other drives are no larger than 4TB. Furthermore, the 8TB drives report a much higher speed in the DiskSpeed test than the parity check speed (74.7 MB/s). This is from the start of the parity check, while all disks were being read simultaneously. This is after the parity check finished. These are the speeds I'm getting on my 8TB drives after the parity check is done with the 4TB drives. When all drives are being read for the parity check, all my drives report a max speed of 74.7 MB/s (with very small fluctuations). That would make it seem like the parity drives are the bottleneck, but I don't understand why drives that are clearly capable of sustaining over 100 MB/s never even reach that speed.

Hopefully this isn't a thread hijack, as I have a somewhat similar situation. I currently have both onboard NICs bonded to a managed switch via LACP. I also have a 4-port Intel NIC that I would like to use if it can provide some sort of benefit. The main question: would isolating Plex to its own port free up the bonded connection and reduce the load on it? In other words, is there any benefit to moving Plex to a dedicated NIC port? Plex is running in a Docker container in this case. A good example of a heavy network usage time would be multiple Plex streams while transferring data to a backup server. Would putting Plex on a dedicated port help in that situation?

-

Parity Checks Bus limited?

bnevets27 replied to bnevets27's topic in Storage Devices and Controllers

Ran the following command:
dskt2 s m r k h g f e l p n o d c b
This is the result:
sds = 164.02 MiB/sec
sdm = 179.66 MiB/sec
sdr = 151.12 MiB/sec
sdk = 148.25 MiB/sec
sdh = 115.86 MiB/sec
sdg = 133.69 MiB/sec
sdf = 131.94 MiB/sec
sde = 140.57 MiB/sec
sdl = 109.92 MiB/sec
sdp = 164.80 MiB/sec
sdn = 100.93 MiB/sec
sdo = 148.13 MiB/sec
sdd = 143.46 MiB/sec
sdc = 126.61 MiB/sec
sdb = 175.05 MiB/sec
I'm running dual L5420s, so while not modern, they're more than enough for a parity sync.
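A small Python sketch for summarising results like these. It parses the `sdX = N MiB/sec` lines as pasted above (the output format of dskt2 is assumed from this post) and converts to decimal MB/s, assuming the parity history's MB/s is decimal. The sum is only meaningful as an aggregate if the drives were read concurrently.

```python
import re

# Results as pasted in the post above (MiB/s per device).
RESULTS = """
sds = 164.02 MiB/sec  sdm = 179.66 MiB/sec  sdr = 151.12 MiB/sec
sdk = 148.25 MiB/sec  sdh = 115.86 MiB/sec  sdg = 133.69 MiB/sec
sdf = 131.94 MiB/sec  sde = 140.57 MiB/sec  sdl = 109.92 MiB/sec
sdp = 164.80 MiB/sec  sdn = 100.93 MiB/sec  sdo = 148.13 MiB/sec
sdd = 143.46 MiB/sec  sdc = 126.61 MiB/sec  sdb = 175.05 MiB/sec
"""

MIB_TO_MB = 1024 * 1024 / 1_000_000  # 1 MiB = 1,048,576 bytes; 1 MB = 1,000,000 bytes

speeds = {dev: float(value)
          for dev, value in re.findall(r"(sd[a-z]+) = ([\d.]+) MiB/sec", RESULTS)}
slowest = min(speeds, key=speeds.get)

print(f"{len(speeds)} drives")
print(f"slowest: {slowest} at {speeds[slowest] * MIB_TO_MB:.1f} MB/s")
print(f"sum: {sum(speeds.values()) * MIB_TO_MB:.0f} MB/s")
```

Even the slowest drive here (sdn) works out to roughly 106 MB/s, well above the ~74 MB/s seen during parity checks.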

Parity Checks Bus limited?

bnevets27 replied to bnevets27's topic in Storage Devices and Controllers

I was just trying to report what I see. On the main GUI, while the parity sync is running, the total transfer speed at the bottom of all the disk speeds doesn't get higher than 1.0 GB/s. Also, from what I recall, the stats page doesn't show a slowdown as the parity sync gets closer to finishing; the graph is flat. According to johnnie.black that should indicate some sort of limit. The thing is, I don't know what's causing the limit. I would like to know so I can understand and hopefully remove the bottleneck. I have a new motherboard on its way, so there will be a hardware change, but this current hardware will be going into another server.

I'll have to give that a try, UhClem. Unless I missed something, it does pretty much the same thing as the DiskSpeed docker, except that it tests all drives simultaneously?

What I'm struggling to understand is this: no drive is as slow as 80 MB/s (at the start/outside of the disk), and none of the controllers are the bottleneck, as shown by the DiskSpeed tests. The only thing left is the bus/motherboard, which also has more than enough bandwidth.

^ That is definitely true, as I had tried to view it and that's what I saw. A backup/copy could be a good idea. Backups in general are a good idea, but I had made a change since my last backup, so the backup wasn't useful.

-

DiskSpeed, hdd/ssd benchmarking (unRAID 6+), version 2.10.8

bnevets27 replied to jbartlett's topic in Docker Containers

Just wanted to thank John for this awesome application. I think I've used it before but hadn't in a while, and used it again today. Simply incredible and nice to use. About the only thing I'm looking forward to is when it's able to test all drives at the same time to check for bus limitations. I see that's planned for the future. Great work, John!

EDIT: Turns out I am bus/chipset limited. The max bandwidth of my northbridge is 10 Gbps.
10 Gbps = 1.25 GB/s (10 gigabits per second = 1.25 gigabytes per second)
1.25 GB/s / 17 disks = 73.5 MB/s per disk

----------------------------------------

I would like to get to the bottom of why my parity checks seem to be capped at 1.0 GB/s total for all my drives, or an average of around 80 MB/s per disk for a parity sync. That's what unraid reports as my total read bandwidth during most of my parity checks.

Motherboard: Supermicro X7DWN+ (block diagram in the manual PDF here)
CPU: L5420 x2
HBA: SuperMicro AOC-USAS2-L8I x2, flashed to IT mode
HBA: Dell H310 flashed to IT mode x1

HBA1 = SuperMicro AOC-USAS2-L8I is in a PCIe x8 gen2 slot
HBA2 = H310 is in a PCIe x8 gen2 slot
HBA3 = SuperMicro AOC-USAS2-L8I is in either a PCIe x8 gen1

All of those slots are connected directly to the northbridge. HBA3 ideally should be in a PCIe x8 gen2 slot, of course, but that's currently not possible. There are no other cards in the system (though there has been a TV tuner in the past).

HBA1 = 8 drives
HBA2 = 8 drives (Parity 2)
HBA3 = 1 drive (Parity)
Onboard SATA = 2 x SSD cache

Better yet, look at the attached pictures from the DiskSpeed docker.

This is my history of parity checks. I have no idea why some are so fast; for example, 282 MB/s happened once and I have no idea how. There have been some hardware changes over time: replaced a failed disk or two, changed my parity 1 and 2, added another HBA. I was expecting to maybe see something in the DiskSpeed tests, but unless I'm not reading them right I don't see any bottleneck. I am aware, and not surprised, that my two 3TB drives are the slowest, but until they die or I have an actual need to upgrade them they will stay.
| Date | Duration | Speed | Status | Errors |
|---|---|---|---|---|
| 2018-12-21, 07:56:45 | 1 day, 6 hr, 50 min | 72.1 MB/s | OK | 1 |
| 2018-12-19, 06:45:33 | 1 day, 9 hr, 27 min | 66.4 MB/s | OK | 0 |
| 2018-12-17, 00:11:47 | 17 hr, 59 min, 36 sec | 123.5 MB/s | OK | 0 |
| 2018-12-15, 23:03:58 | 1 day, 4 hr, 54 min | 76.9 MB/s | OK | 0 |
| 2018-12-04, 21:47:51 | 1 day, 51 min, 6 sec | 89.4 MB/s | OK | 0 |
| 2018-12-04, 21:47:51 | 1 day, 51 min, 6 sec | 89.4 MB/s | OK | 0 |
| 2018-12-01, 02:35:10 | 1 day, 6 hr, 2 min | 74.0 MB/s | OK | 549 |
| 2018-08-19, 11:57:07 | 12 hr, 56 min, 43 sec | 171.7 MB/s | OK | 0 |
| 2018-03-13, 23:50:01 | 7 hr, 52 min, 25 sec | 282.3 MB/s | OK | 0 |
| 2018-02-08, 17:10:59 | 21 hr, 32 min, 20 sec | 103.2 MB/s | OK | 0 |
| 2018-01-19, 12:09:34 | 13 hr, 31 min, 8 sec | 82.2 MB/s | OK | 0 |
| 2017-12-28, 17:16:15 | 16 hr, 20 min, 48 sec | 68.0 MB/s | OK | 0 |
| 2017-11-15, 04:54:55 | 11 hr, 44 min, 18 sec | 94.7 MB/s | OK | 0 |
| 2017-09-01, 19:52:04 | 14 hr, 16 min | 77.9 MB/s | OK | 0 |
| 2017-08-22, 13:25:43 | 14 hr, 32 min, 49 sec | 76.4 MB/s | OK | 0 |
| 2017-08-01, 15:20:19 | 14 hr, 26 min, 26 sec | 77.0 MB/s | OK | 0 |
| 2017-07-26, 16:31:32 | 14 hr, 17 min, 1 sec | 77.8 MB/s | OK | 0 |
| 2017-07-21, 17:01:41 | 16 hr, 13 min, 51 sec | 68.5 MB/s | OK | 0 |
| 2017-06-27, 12:27:54 | 17 hr, 2 min, 27 sec | 65.2 MB/s | OK | 0 |
| 2017-06-13, 00:50:53 | 23 hr, 52 min, 29 sec | 46.5 MB/s | OK | 0 |
| 2017-06-06, 13:59:25 | 19 hr, 18 min, 41 sec | 57.5 MB/s | OK | 0 |
| 2017-05-23, 05:13:46 | 16 hr, 3 min, 7 sec | 69.2 MB/s | OK | 0 |
| 2017-04-26, 17:03:55 | 18 hr, 17 min, 27 sec | 60.8 MB/s | OK | 0 |
| 2017-04-21, 04:11:16 | 21 hr, 22 min, 16 sec | 52.0 MB/s | OK | 11 |
| 2017-04-19, 05:14:44 | 16 hr, 32 min, 2 sec | 67.2 MB/s | OK | 10 |
| 2017-03-15, 20:43:38 | 16 hr, 6 min, 2 sec | 69.0 MB/s | OK | |
| 2017-03-13, 16:01:31 | 13 hr, 19 min, 26 sec | 83.4 MB/s | OK | |
| 2017-02-06, 00:42:16 | 11 hr, 45 min, 30 sec | 94.5 MB/s | OK | |
| 2016-11-10, 07:12:34 | 8 hr, 39 min, 34 sec | 128.3 MB/s | OK | |
| 2016-10-29, 09:57:38 | 9 hr, 11 min, 50 sec | 120.8 MB/s | OK | |
| 2016-10-28, 11:23:42 | 9 hr, 56 min, 58 sec | 111.7 MB/s | OK | |
| Sep, 23, 11:18:22 | 11 hr, 20 min, 57 sec | 97.9 MB/s | OK | |
| May, 13, 12:20:11 | 11 hr, 59 min, 48 sec | 92.6 MB/s | OK | |
| Mar, 23, 11:35:34 | 11 hr, 6 min, 8 sec | 100.1 MB/s | OK | |
| Feb, 21, 23:50:35 | 1 hr, 4 min, 48 sec | 64.3 MB/s | OK | |

-

True. Well, I did a New Config and trusted parity, so I'm back up. I'd like to know what happened to cause that, but oh well. Corrupt super.dat?

-

Not since changing the parity and doing a parity build. The backup is a week old and has a different disk config. I had an 8TB Parity and a 4TB Parity 2. I swapped the 4TB Parity 2 for an 8TB, built parity, and everything looked good. Then I upgraded the OS and rebooted, and this is what I was presented with. My backup is from before the parity 2 upgrade. I'm OK with doing another parity build, though not happy about it. But I don't want this to happen again, and it seems like a bug or corruption of some sort.

-

https://forums.unraid.net/topic/76129-wrong-disk-but-sn-matches/&share_tid=76129&share_fid=18593&share_type=t

-

The server is in a remote location, so I can't do this easily. But I did open a thread. I get the feeling you are right, though, and the super.dat got corrupted. It would be nice if that could be fixed without doing another parity rebuild.

-

Finished building parity. Everything was good, so I figured I would update from 6.6.0 to 6.6.6. Rebooted and was greeted by this. I assume I could just rebuild the parity, but something is definitely not right.

-

Just updated. What the hell? Why does unraid think this disk was 4TB? What do I do here? I could of course just start it and let it build parity but there should be no reason to need to do so.

-

Ability to install GPU Drivers for Hardware Acceleration.

bnevets27 replied to AnnabellaRenee87's topic in Feature Requests

What about AMD then? I've seen reports of users running an AMD GPU under Linux with hardware transcoding working. I don't really think we are waiting on Plex; we need drivers. Even if Plex officially supported Nvidia and AMD, it wouldn't matter if unraid doesn't have the drivers. It's the same as unraid DVB: Plex supports tuners, but Plex can't see or do anything with those tuners without the drivers supplied by the unraid DVB plugin. The issue is rather simple: if the right drivers are installed in unraid, the GPU can be passed to the Plex docker, and support for that GPU then falls on Plex. The problem is that adding GPU drivers to unraid is not an easy or simple task. I highly doubt Lime-tech wants to spend the time/money/effort to do so, even though I think it may attract more customers. Therefore it would fall on the community. For an idea of how much work is involved, listen to CHBMB. He knows how much work is involved in compiling drivers into each new build of unraid. I really want this to happen, but from what I've read and been told, it's not something that's going to come easy.

Yeah, I don't know why the 64-bit version doesn't work for me. In both VMs I do have internet; YouTube/Chrome work. But TuneIn says it's offline, so it thinks there is no internet when there is. I have a feeling (with no evidence at all) that TuneIn detects it's running on a device it considers suspect and is blocking internet access, which is really annoying. The Play Store works fine in the image you used. It doesn't work, however, from the ISO I tried for my other VM. Unfortunately I haven't found many options to record streaming audio via a docker container, and I didn't want a full-fledged Windows or Linux VM. And TuneIn is set up to record on a schedule, which I want.

-

Thanks kimocal! I got Android installed by following your instructions. At first I accidentally tried 64-bit and it wouldn't boot. I tried 32-bit and it worked. Navigation is a pain, that's for sure, so if anyone figures it out please report back. So far I've figured out you can see and move the cursor while clicking the left button, but that does cause havoc. After finding out 64-bit didn't work with your instructions, I went back and tried to install android-x86-8.1-rc2.iso from here: https://osdn.net/projects/android-x86/releases/69704 I was able to use that ISO as the install media and install it to a vdisk. It boots and works the same, except it seems I can't sign into the Google Play Store. The main reason I wanted this was to use TuneIn radio to record a radio show, but unfortunately TuneIn thinks there is no network connection. I can't seem to figure out why.

-

[Support] Linuxserver.io - Kodi-Headless

bnevets27 replied to linuxserver.io's topic in Docker Containers

Assuming you just want Kodi to run an update on a schedule, you can do that with cron and curl, I think it was. Just look up how to update Kodi via the command line. Here it is: put that in User Scripts and run it as frequently as you want.
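The command referred to above didn't make it into the post. As an alternative sketch only, not the poster's original command: Kodi exposes a JSON-RPC API over HTTP when its web server is enabled, and its `VideoLibrary.Scan` method asks Kodi to rescan the video library. The host name and port below are placeholders; adjust them, and add credentials if the web server requires them.

```python
import json
import urllib.request

# Placeholder address: replace with your container's host and Kodi web server port.
KODI_URL = "http://kodi-headless.local:8080/jsonrpc"


def build_scan_request(url: str = KODI_URL) -> urllib.request.Request:
    """Build a JSON-RPC 2.0 POST asking Kodi to rescan its video library."""
    payload = {"jsonrpc": "2.0", "method": "VideoLibrary.Scan", "id": 1}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# To actually send it (for example from a scheduled User Scripts job):
#   with urllib.request.urlopen(build_scan_request(), timeout=30) as resp:
#       print(resp.read().decode("utf-8"))
```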

Well, it looks like the docker container is still on Docker Hub and the docker template is still on GitHub. I wonder if you could still install it that way and just replace the script within the container with the latest version of the script? There is also this: https://github.com/johnodon/zapscrape This was definitely a crappy way for this docker to die. Would WebGrab+Plus be an alternative? Looks like it can grab from zap2it also, but I haven't tried it yet. Never mind, I found a working solution, and I bet you know what that solution was. For everyone else: look around, the usual places. I'm only not linking to anything because I don't want to take away from johnodon's work or help the zap2xml dev find and ruin another project.