Everything posted by TexasDave

-

You have been a big help - no worries at all. And the plugin is much appreciated. Question: I assume I can still use my UPS short term? It will just supply power if I lose power, but without the USB connection it has no smarts, so unRAID will not shut down. So all it does now is keep the server up and running, and I have to hope the power comes back on before it runs out of charge?

-

The cables seem fine. I can plug them into a printer and a Windows laptop sees them... I strongly suspect the USB port on the actual UPS - it seems very "loose" - not sure how that happened. May be time for a new UPS. 😞

-

NUT Debug Package also attached now - sorry - I missed that out. I agree with your assessment - I do not think the USB connection is being picked up. Will see if I can get the UPS and USB cable to be recognized by a Windows machine - Thanks! nut-debug-20240309210916.zip

-

I have had NUT working fine for some time. But to be fair, I have not looked for a month or two to see if it was working, and I had no warnings or alerts. I had to move my server today as we are painting and noticed, when bringing it back up, that NUT has stopped working.

My UPS is an APC BX700U Series.
Motherboard is a Supermicro X10SL7-F.
The APC UPS daemon is NOT activated.
I have tried two different USB cables and three different USB ports. I have ordered a new cable and will try that.
I have tried both the current version and the 2.7.4 version (with restart).
I am attaching my diagnostics.

Here is what I am getting in the SYSLOG:

Mar 9 16:42:28 Zack-unRAID root: Writing NUT configuration...
Mar 9 16:42:30 Zack-unRAID root: Updating permissions for NUT...
Mar 9 16:42:30 Zack-unRAID root: Checking if the NUT Runtime Statistics Module should be enabled...
Mar 9 16:42:30 Zack-unRAID root: Enabling the NUT Runtime Statistics Module...
Mar 9 16:42:31 Zack-unRAID root: Network UPS Tools - Generic HID driver 0.55 (2.7.4.1)
Mar 9 16:42:31 Zack-unRAID root: USB communication driver 0.43
Mar 9 16:42:31 Zack-unRAID root: No matching HID UPS found
Mar 9 16:42:31 Zack-unRAID root: Driver failed to start (exit status=1)
Mar 9 16:42:31 Zack-unRAID root: Network UPS Tools - UPS driver controller 2.7.4.1
Mar 9 16:45:56 Zack-unRAID pulseway: First reporting data collection finished
Mar 9 16:51:00 Zack-unRAID root: Fix Common Problems Version 2024.02.29
Mar 9 16:52:02 Zack-unRAID ool www[14520]: /usr/local/emhttp/plugins/nut-dw/scripts/start
Mar 9 16:52:03 Zack-unRAID root: Writing NUT configuration...
Mar 9 16:52:05 Zack-unRAID root: Updating permissions for NUT...
Mar 9 16:52:05 Zack-unRAID root: Checking if the NUT Runtime Statistics Module should be enabled...
Mar 9 16:52:05 Zack-unRAID root: Enabling the NUT Runtime Statistics Module...
Mar 9 16:52:06 Zack-unRAID root: Network UPS Tools - Generic HID driver 0.55 (2.7.4.1)
Mar 9 16:52:06 Zack-unRAID root: USB communication driver 0.43
Mar 9 16:52:06 Zack-unRAID root: No matching HID UPS found
Mar 9 16:52:06 Zack-unRAID root: Driver failed to start (exit status=1)
Mar 9 16:52:06 Zack-unRAID root: Network UPS Tools - UPS driver controller 2.7.4.1

Here is what I am getting in NUT USB Diagnostics...

###
### USB DEVICES
###
Bus 002 Device 002: ID 8087:8000 Intel Corp. Integrated Rate Matching Hub
Bus 002 Device 001: ID 1d6b:0002 Linux Foundation 2.0 root hub
Bus 001 Device 002: ID 8087:8008 Intel Corp. Integrated Rate Matching Hub
Bus 001 Device 001: ID 1d6b:0002 Linux Foundation 2.0 root hub
Bus 004 Device 001: ID 1d6b:0003 Linux Foundation 3.0 root hub
Bus 003 Device 004: ID 0557:2419 ATEN International Co., Ltd Virtual mouse/keyboard device
Bus 003 Device 003: ID 0557:7000 ATEN International Co., Ltd Hub
Bus 003 Device 002: ID 0781:5571 SanDisk Corp. Cruzer Fit
Bus 003 Device 001: ID 1d6b:0002 Linux Foundation 2.0 root hub

###
### USB DEVICES (TREE VIEW)
###
/: Bus 04.Port 1: Dev 1, Class=root_hub, Driver=xhci_hcd/2p, 5000M ID 1d6b:0003 Linux Foundation 3.0 root hub
/: Bus 03.Port 1: Dev 1, Class=root_hub, Driver=xhci_hcd/10p, 480M ID 1d6b:0002 Linux Foundation 2.0 root hub
    |__ Port 1: Dev 2, If 0, Class=Mass Storage, Driver=usb-storage, 480M ID 0781:5571 SanDisk Corp. Cruzer Fit
    |__ Port 7: Dev 3, If 0, Class=Hub, Driver=hub/4p, 480M ID 0557:7000 ATEN International Co., Ltd Hub
        |__ Port 1: Dev 4, If 0, Class=Human Interface Device, Driver=usbhid, 1.5M ID 0557:2419 ATEN International Co., Ltd Virtual mouse/keyboard device
        |__ Port 1: Dev 4, If 1, Class=Human Interface Device, Driver=usbhid, 1.5M ID 0557:2419 ATEN International Co., Ltd Virtual mouse/keyboard device
/: Bus 02.Port 1: Dev 1, Class=root_hub, Driver=ehci-pci/2p, 480M ID 1d6b:0002 Linux Foundation 2.0 root hub
    |__ Port 1: Dev 2, If 0, Class=Hub, Driver=hub/6p, 480M ID 8087:8000 Intel Corp. Integrated Rate Matching Hub
/: Bus 01.Port 1: Dev 1, Class=root_hub, Driver=ehci-pci/2p, 480M ID 1d6b:0002 Linux Foundation 2.0 root hub
    |__ Port 1: Dev 2, If 0, Class=Hub, Driver=hub/4p, 480M ID 8087:8008 Intel Corp. Integrated Rate Matching Hub

###
### USB POWER MANAGEMENT SETTINGS
### ON: ALWAYS ON / AUTO: SYSTEM MANAGED
###
==> /sys/bus/usb/devices/1-1/power/control <==
auto
==> /sys/bus/usb/devices/2-1/power/control <==
auto
==> /sys/bus/usb/devices/3-1/power/control <==
on
==> /sys/bus/usb/devices/3-7.1/power/control <==
on
==> /sys/bus/usb/devices/3-7/power/control <==
auto
==> /sys/bus/usb/devices/usb1/power/control <==
auto
==> /sys/bus/usb/devices/usb2/power/control <==
auto
==> /sys/bus/usb/devices/usb3/power/control <==
auto
==> /sys/bus/usb/devices/usb4/power/control <==
auto

###
### USB RELATED KERNEL MESSAGES
###
[ 0.381770] usbcore: registered new interface driver usbfs
[ 0.381877] usbcore: registered new interface driver hub
[ 0.381986] usbcore: registered new device driver usb
[ 0.425339] pci 0000:00:1a.0: quirk_usb_early_handoff+0x0/0x63b took 16379 usecs
[ 0.442337] pci 0000:00:1d.0: quirk_usb_early_handoff+0x0/0x63b took 16475 usecs
[ 1.988551] usbcore: registered new interface driver usb-storage
[ 1.988989] usbcore: registered new interface driver synaptics_usb
[ 1.991126] usbcore: registered new interface driver usbhid
[ 1.991234] usbhid: USB HID core driver
[ 2.208343] usb 1-1: new high-speed USB device number 2 using ehci-pci
[ 2.224346] usb 3-1: new high-speed USB device number 2 using xhci_hcd
[ 2.224470] usb 2-1: new high-speed USB device number 2 using ehci-pci
[ 2.352117] usb-storage 3-1:1.0: USB Mass Storage device detected
[ 2.352581] scsi host0: usb-storage 3-1:1.0
[ 2.466334] usb 3-7: new high-speed USB device number 3 using xhci_hcd
[ 2.890359] usb 3-7.1: new low-speed USB device number 4 using xhci_hcd
[ 3.002104] input: HID 0557:2419 as /devices/pci0000:00/0000:00:14.0/usb3/3-7/3-7.1/3-7.1:1.0/0003:0557:2419.0001/input/input1
[ 3.054596] hid-generic 0003:0557:2419.0001: input,hidraw0: USB HID v1.00 Keyboard [HID 0557:2419] on usb-0000:00:14.0-7.1/input0
[ 3.056387] input: HID 0557:2419 as /devices/pci0000:00/0000:00:14.0/usb3/3-7/3-7.1/3-7.1:1.1/0003:0557:2419.0002/input/input2
[ 3.057039] hid-generic 0003:0557:2419.0002: input,hidraw1: USB HID v1.00 Mouse [HID 0557:2419] on usb-0000:00:14.0-7.1/input1

My concern is whether the problem is the USB ports on my server or the USB port on the UPS. Any ideas on what else I can do to debug and/or fix this? zack-unraid-diagnostics-20240309-1631.zip
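For anyone reading a similar dump: a quick way to confirm the "not being picked up" theory is to check whether any device in the USB list carries APC's USB vendor ID (051d). Below is a minimal Python sketch that does this against the device list quoted above. The function names are made up for illustration and this is not part of NUT or the plugin; it only parses text in the `lsusb` line format shown in the post.

```python
import re

# USB vendor ID registered to American Power Conversion (APC).
APC_VENDOR_ID = "051d"

# Matches lines such as:
# "Bus 003 Device 002: ID 0781:5571 SanDisk Corp. Cruzer Fit"
LSUSB_LINE = re.compile(
    r"Bus (?P<bus>\d+) Device (?P<dev>\d+): "
    r"ID (?P<vid>[0-9a-f]{4}):(?P<pid>[0-9a-f]{4})\s*(?P<name>.*)"
)


def parse_lsusb(text):
    """Return one dict per recognizable lsusb line (hypothetical helper)."""
    devices = []
    for line in text.splitlines():
        match = LSUSB_LINE.match(line.strip())
        if match:
            devices.append(match.groupdict())
    return devices


def has_vendor(devices, vendor_id):
    """True if any parsed device reports the given vendor ID."""
    return any(d["vid"] == vendor_id.lower() for d in devices)


# Device list copied from the diagnostics in the post.
SAMPLE = """\
Bus 002 Device 002: ID 8087:8000 Intel Corp. Integrated Rate Matching Hub
Bus 002 Device 001: ID 1d6b:0002 Linux Foundation 2.0 root hub
Bus 001 Device 002: ID 8087:8008 Intel Corp. Integrated Rate Matching Hub
Bus 001 Device 001: ID 1d6b:0002 Linux Foundation 2.0 root hub
Bus 004 Device 001: ID 1d6b:0003 Linux Foundation 3.0 root hub
Bus 003 Device 004: ID 0557:2419 ATEN International Co., Ltd Virtual mouse/keyboard device
Bus 003 Device 003: ID 0557:7000 ATEN International Co., Ltd Hub
Bus 003 Device 002: ID 0781:5571 SanDisk Corp. Cruzer Fit
Bus 003 Device 001: ID 1d6b:0002 Linux Foundation 2.0 root hub
"""

if __name__ == "__main__":
    found = has_vendor(parse_lsusb(SAMPLE), APC_VENDOR_ID)
    print("APC device visible on USB:", found)
```

With the list above, no 051d device appears, which is consistent with "No matching HID UPS found": the server never sees the UPS on the bus at all, so the fault is more likely in the cable or in a port at one end than in the NUT configuration.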

-

Thanks for the help - much appreciated!

-

Hello,

During my usual monthly parity check, I received some read errors concerning one of my parity drives:

Event: Unraid array errors
Subject: Warning [ZACK-UNRAID] - array has errors
Description: Array has 1 disk with read errors
Importance: warning
Parity disk - WDC_WD60EFRX-68MYMN1_WD-WX51DA47619X (sdd) (errors 256)

After the parity check completed, I ran a SMART extended test on the parity drive, but all seemed fine. See attached. This morning I received another warning about this drive. I fear the end is near:

Event: Fix Common Problems - Zack-unRAID
Subject: Errors have been found with your server (Zack-unRAID).
Description: Investigate at Settings / User Utilities / Fix Common Problems
Importance: alert
* **parity (WDC_WD60EFRX-68MYMN1_WD-WX51DA47619X) has read errors**

I attach the SMART test results and my unRAID server diagnostics. Is there anything I can do to confirm either that the drive is close to failing or that these errors are anomalies? The drive in question is old... Thanks! zack-unraid-smart-20230806-1150.zip zack-unraid-diagnostics-20230806-1152.zip

-

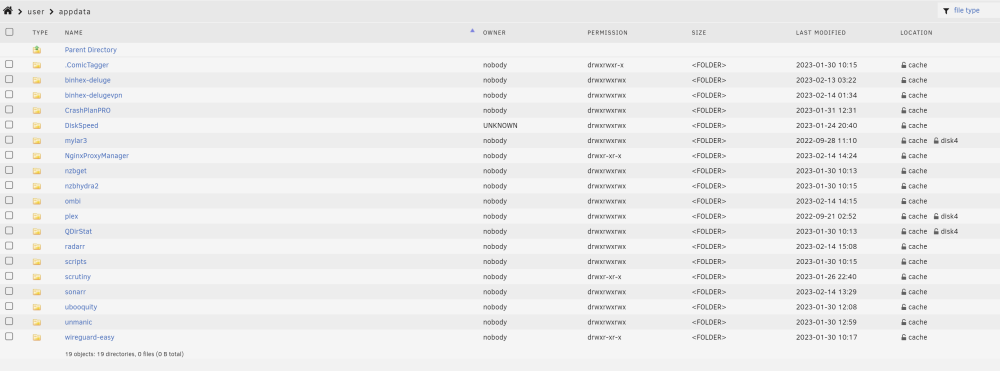

Cleaning up, Appdata docker config path - cache v user?

TexasDave replied to TexasDave's topic in General Support

All the stuff on disk 4 was old. I just backed it up (actually renamed it OLD) just in case. Turned off dockers, updated config info, and all seems to be working. Many thanks both!!

-

Cleaning up, Appdata docker config path - cache v user?

TexasDave replied to TexasDave's topic in General Support

Thank you! There is a tiny bit on Disk 4... Can I do the following:

1) Stop the three dockers
2) Move the appdata from disk4 to cache
3) Edit the three dockers and change them to use cache
4) Start the dockers

Or is there a better way? It is weird, as the three dockers (mylar3, plex and QDirStat) should be using cache? Maybe that is old stuff? Thanks!!

-

Cleaning up, Appdata docker config path - cache v user?

TexasDave replied to TexasDave's topic in General Support

Just wondering if anyone has any advice on this please?

-

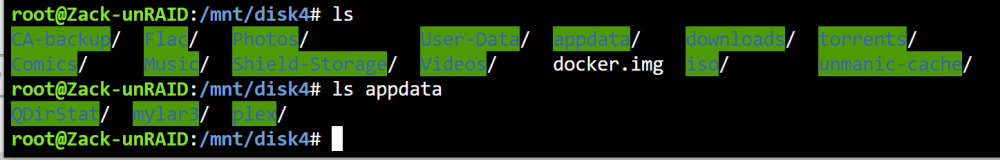

[Support] Josh5 - Unmanic - Library Optimiser

TexasDave replied to Josh.5's topic in Docker Containers

OK - So I know it is far from scientific but this is what I am seeing. I ran four samples on Plex (Original, GPU-Medium, GPU-Slow, CPU-Medium). GPU is a 1050Ti.....

GPU is definitely faster than CPU. About 7-8x (5 minutes v 40 minutes).
Quality on GPU is about the same between SLOW and MEDIUM.
GPU produces a better bit rate which seems to be my issue.

I attach a few screen shots. Like I say, I know it is far from scientific. I am running Plex on unRAID, through Shield TV and on to a Hisense Roku TV which is decent but certainly not high end. I know there is a bitrate flag and I may try bumping that up. Even on the original there is some minor pixelation. The photos make it look worse than it is. But I can tell a difference between the original and the ones that have been processed. Changing the brightness on the TV from Brightest to Dark made the most difference. 🙂

Besides bumping the bitrate up - any other ideas? I think I will just live with it. I am on an average TV in average viewing conditions. Friends that access Plex do not complain so...

-

[Support] Josh5 - Unmanic - Library Optimiser

TexasDave replied to Josh.5's topic in Docker Containers

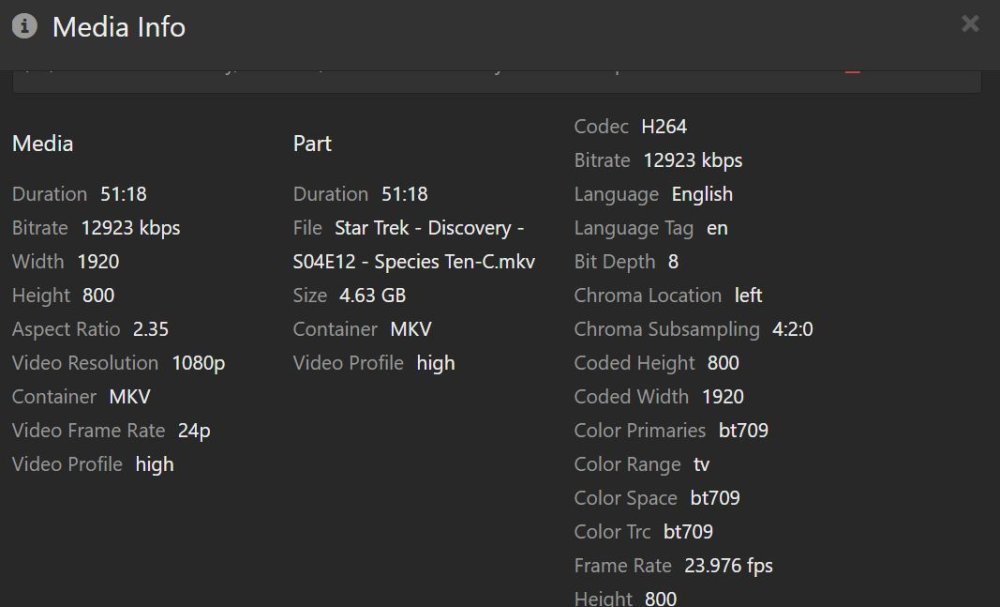

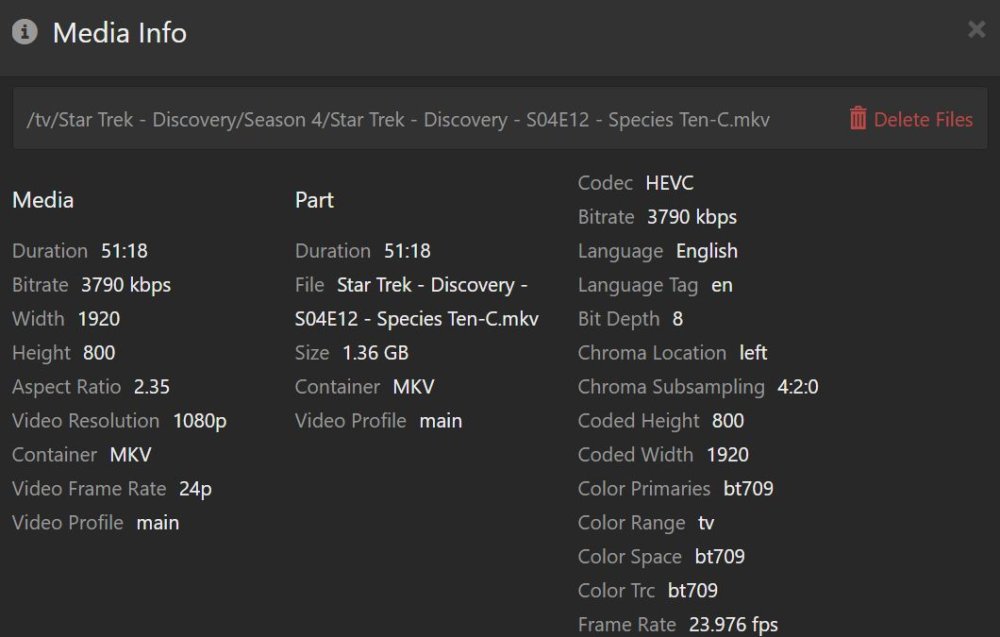

I am using Video Encoder H265/HEVC - hevc_nvenc (NVIDIA GPU). The command is the following:

ffmpeg -hide_banner -loglevel info -i /library/tv/Star Trek - Discovery/Season 4/Star Trek - Discovery - S04E12 - Species Ten-C.mkv -strict -2 -max_muxing_queue_size 2048 -threads 4 -map 0:v:0 -map 0:a:0 -map 0:s:0 -map 0:s:1 -map 0:s:2 -map 0:s:3 -map 0:s:4 -map 0:s:5 -map 0:s:6 -map 0:s:7 -map 0:s:8 -map 0:s:9 -map 0:s:10 -map 0:s:11 -map 0:s:12 -c:v:0 hevc_nvenc -profile:v:0 main -preset medium -c:a:0 copy -c:s:0 copy -c:s:1 copy -c:s:2 copy -c:s:3 copy -c:s:4 copy -c:s:5 copy -c:s:6 copy -c:s:7 copy -c:s:8 copy -c:s:9 copy -c:s:10 copy -c:s:11 copy -c:s:12 copy -y /tmp/unmanic/unmanic_file_conversion-jotzv-1675293877/Star Trek - Discovery - S04E12 - Species Ten-C-jotzv-1675293877-WORKING-1-1.mkv

I think everything is working fine. I am assuming that if you compress, you lose bitrate; I just want to see if I can get it a bit better. I do not mind some increased size if I can get rid of the black pixelation.
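For reference, the command above sets an encoder and preset but no explicit video bitrate, so the encoder falls back to its own default rate control. Here is a rough Python sketch of how the same kind of command could be assembled with ffmpeg's standard `-b:v` option added. The `build_command` helper, the shortened file paths and the `6M` value are assumptions for illustration only, the subtitle maps are left out for brevity, and this is not how Unmanic itself builds its commands.

```python
import shlex


def build_command(src, dst, video_bitrate=None):
    """Assemble an ffmpeg argument list (hypothetical helper).

    video_bitrate is passed to ffmpeg's generic -b:v option, e.g. "6M".
    """
    cmd = [
        "ffmpeg", "-hide_banner", "-loglevel", "info",
        "-i", src,
        "-map", "0:v:0", "-map", "0:a:0",
        "-c:v:0", "hevc_nvenc", "-profile:v:0", "main", "-preset", "medium",
    ]
    if video_bitrate:
        # Target video bitrate; the right value is a matter of trial and error.
        cmd += ["-b:v", video_bitrate]
    cmd += ["-c:a:0", "copy", "-y", dst]
    return cmd


if __name__ == "__main__":
    cmd = build_command(
        "/library/tv/Example Show/Example Episode.mkv",  # hypothetical path
        "/tmp/unmanic/example-WORKING.mkv",              # hypothetical path
        video_bitrate="6M",                              # hypothetical value
    )
    # shlex.join quotes the arguments that contain spaces, so the printed
    # line can be pasted into a shell as-is.
    print(shlex.join(cmd))
```

Passing the arguments as a list (or quoting them with `shlex.join`) matters here because the library paths contain spaces; the command as logged would not run if pasted into a shell unquoted.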

[Support] Josh5 - Unmanic - Library Optimiser

TexasDave replied to Josh.5's topic in Docker Containers

I am getting pixelation on dark (black) backgrounds. I believe this is due to bitrate? I attach a screen shot of the pixelation. I also attach "before" and "after" details on the file. Is there a "compromise" (or ideas) on how I can increase my bitrate? Thanks!!

-

[Support] Djoss - CrashPlan PRO (aka CrashPlan for Small Business)

TexasDave replied to Djoss's topic in Docker Containers

I have a ton of files - mostly restart logs - in my log folder. 7904 objects: 2 directories, 7902 files (330 MB total). I assume I can get rid of most of these? Thanks!

-

Cleaning up, Appdata docker config path - cache v user?

TexasDave replied to TexasDave's topic in General Support

I would be willing to consider buying a 3rd SSD for a cache pool if this would buy me anything... Or splitting my current cache pool.

-

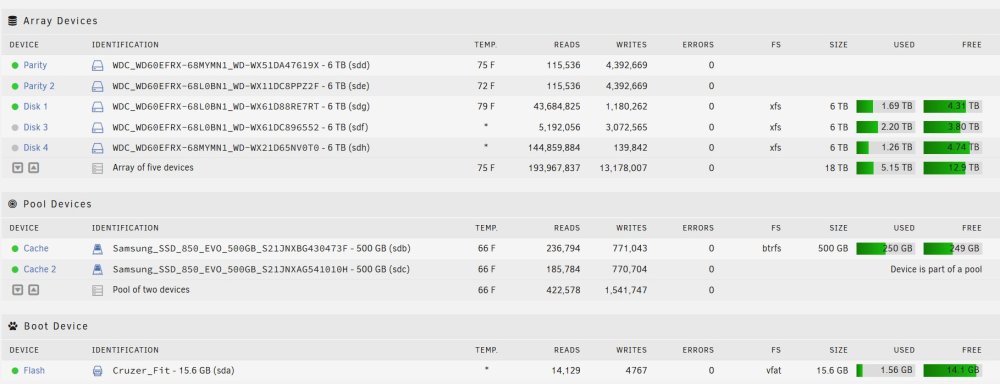

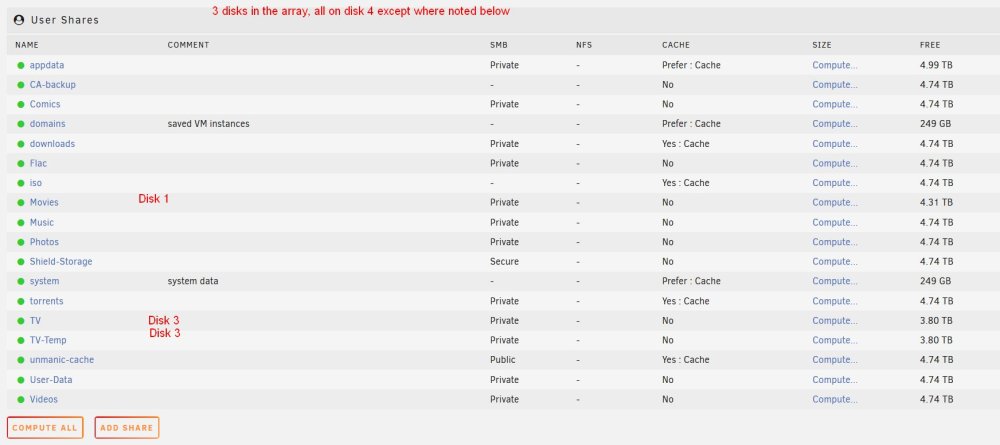

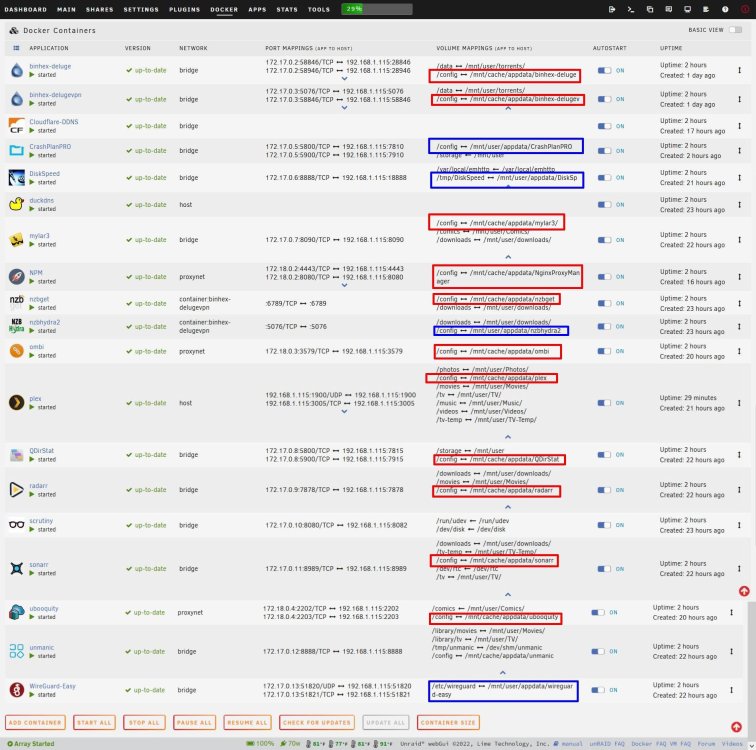

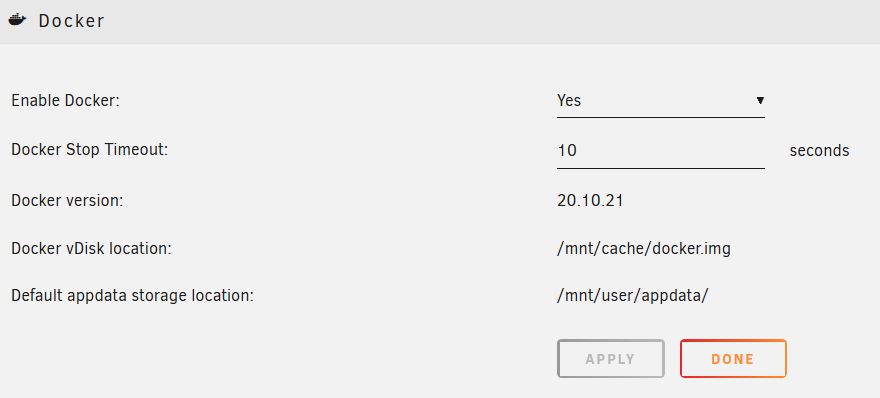

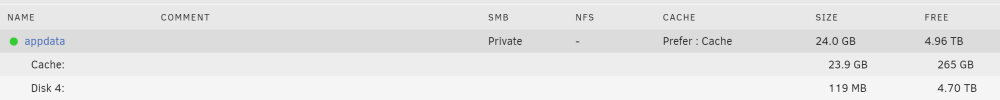

I am in the process of tidying things up on my server and this is one of the last things I am considering harmonizing. My array is pretty old (some disks 7 years) and some of these settings were done when I first started.

I have 3 data disks (2 old, one fairly new), 2 parity (1 old, 1 new) and two cache drives (both old). See attached.

I run 19 dockers (screenshot attached):

- 12 are using /mnt/cache/appdata
- 4 are using /mnt/user/appdata
- 3 do not have a config file

My docker setup defaults to /mnt/user/appdata (see attached).

My shares are attached. Appdata is set to "Prefer : Cache". All shares are on Disk 4 except for TV+TV_Share (Disk 3) and Movies (Disk 1). (see attached)

Now my question: I feel like I should be consistent on where I keep my appdata. And I am leaning to moving it all to /mnt/user/appdata. Thoughts?

If I did this - does this process make sense? Taken from another post here.

- stop Docker
- change the default appdata storage location in Docker settings to the default /mnt/user/appdata/ (DONE)
- check the share properties of the appdata share to ensure it is either cache only or cache preferred (as is the unRAID default) (DONE)
- move all your folders from the /mnt/cache/appdata share to /user/cache/appdata (not sure about this)
- turn off autostart on any containers you have
- start docker
- check that each config path is set to the /user/cache/appdata path and not the /mnt/cache/appdata path
- start my containers
- set them back to start automatically if required
- delete your empty /user/cache/appdata share if required (But this may "fill up" as things are cached so do not mess with it?)

I would like to be consistent but I am worried I may mess something up, and all works fine now. Any thoughts or other ideas/optimizations/suggestions welcome... THANKS! zack-unraid-diagnostics-20230131-1123.zip
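To make the "check that each config path" step concrete, here is a small Python sketch that takes a container-to-config-path mapping and lists which entries would need changing to point at the user share. It assumes the two roots are /mnt/cache/appdata and /mnt/user/appdata; the paths in the sample mapping and the helper names are invented for illustration, and the script only computes the new strings. It does not touch Docker templates or move any files.

```python
from pathlib import PurePosixPath

CACHE_ROOT = PurePosixPath("/mnt/cache/appdata")
USER_ROOT = PurePosixPath("/mnt/user/appdata")


def to_user_share(path):
    """Rewrite a /mnt/cache/appdata/... path to /mnt/user/appdata/...

    Paths outside CACHE_ROOT are returned unchanged (normalized).
    """
    p = PurePosixPath(path)
    try:
        relative = p.relative_to(CACHE_ROOT)
    except ValueError:
        return str(p)
    return str(USER_ROOT / relative)


def plan_changes(containers):
    """Map container name -> new config path, only where a change is needed.

    Containers whose config path is None (no config mapping) are skipped.
    """
    changes = {}
    for name, path in containers.items():
        if path is None:
            continue
        new_path = to_user_share(path)
        if new_path != str(PurePosixPath(path)):
            changes[name] = new_path
    return changes


if __name__ == "__main__":
    # Hypothetical sample; real values come from each container's template.
    containers = {
        "plex": "/mnt/cache/appdata/plex",
        "mylar3": "/mnt/user/appdata/mylar3",
        "no-config-container": None,
    }
    for name, new_path in plan_changes(containers).items():
        print(f"{name}: change config path to {new_path}")
```

Since /mnt/user/appdata is the user-share view of the same share, a folder that already lives under /mnt/cache/appdata should also be visible under /mnt/user/appdata, so in many cases only the template path changes and nothing has to be copied. Treat that as something to verify on your own server before deleting anything.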

-

Many thanks - that's it!!

-

@wgstarks - thank you for that! I cannot believe I missed it...

Just started seeing this in my logs. Anything to be concerned about? Thanks!

Jan 30 15:56:47 Zack-unRAID root: error: /plugins/ca.backup2/include/backupExec.php: wrong csrf_token
Jan 30 15:56:48 Zack-unRAID root: error: /plugins/ca.backup2/include/backupExec.php: wrong csrf_token
Jan 30 15:56:50 Zack-unRAID root: error: /plugins/ca.backup2/include/backupExec.php: wrong csrf_token
Jan 30 15:56:52 Zack-unRAID root: error: /plugins/ca.backup2/include/backupExec.php: wrong csrf_token

-

Thank you for the work on this plugin - much appreciated! I seem to recall a setting to tell the plugin how many backups to keep? I cannot find it after searching and googling. I do appdata backups every two weeks and want to keep the last three. I am sure this is obvious and I am sorry I cannot find it. Thanks!
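The "keep the last three" rule itself is simple enough to sketch. The Python below is not the plugin's setting or its code; it just shows the rule, assuming (hypothetically) that each backup is a folder named by its ISO date, so that sorting the names also sorts them by age.

```python
def backups_to_delete(names, keep=3):
    """Return the backup names that fall outside the newest `keep`.

    Assumes names are ISO dates (YYYY-MM-DD), which sort chronologically
    as plain strings. The naming scheme is an assumption for illustration.
    """
    newest_first = sorted(names, reverse=True)
    return newest_first[keep:]


if __name__ == "__main__":
    # Hypothetical fortnightly backups.
    existing = ["2022-12-18", "2023-01-01", "2023-01-15", "2023-01-29"]
    print(backups_to_delete(existing))
```

With four fortnightly backups and `keep=3`, only the oldest one is returned for deletion.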

-

Added 2nd Parity - now getting a variety of errors

TexasDave replied to TexasDave's topic in General Support

I am (mostly) back up. All is working except two things:

- My NPM proxy setup for two services (Ombi and ubooquity)
- CrashPlan Pro - cannot log in to the local Web GUI

But mostly there. Everyone's help is greatly appreciated - Thank you!

-

Added 2nd Parity - now getting a variety of errors

TexasDave replied to TexasDave's topic in General Support

Yes - I have restored my appdata backup. I am slowly adding back my dockers (I only have 12 or so) and seem to be making progress. I am having issues on a few (NPM and CrashPlan Pro) and will circle back on them, but the others seem to be picking up the backed-up information. I think I am just going to have to add the dockers again....

-

Added 2nd Parity - now getting a variety of errors

TexasDave replied to TexasDave's topic in General Support

OK - a mix of "good news" and "bad news".... @JorgeB and @itimpi thank you for helping me get this far and sorting my disk issue!

Good News

Array and disks are back up and healthy from what I can see. Disk 2 was bad (as noted). I moved my Movies to disk 1, TV to disk 3, everything else to disk 4... Shrunk the array and removed the bad disk. Been meaning to shrink it anyways.... All seems fine....

Bad News

My dockers have all disappeared. I suspect this is because the docker information was on cache, and trying to move everything off disk 2 may have caused issues. I also seem to have moved stuff from cache onto disk 4. When I went to start up my dockers, I got the message that "/mnt/cache/appdata" was missing, so I made this "/mnt/user/appdata" and the dockers started. But it looks like stuff got out of sync.

I started adding in the dockers, as with the "restore" function this is not too bad. But in checking the dockers, the first few were behaving weirdly and did not have app data. For instance, Nginx Proxy Manager lost my login. I restored appdata using the plugin and it fixed NPM. But some dockers like NZBget and NZBHydra2 (which I route through binhex delugeVPN) are no longer accessible from the web UI. CrashPlan Pro will not allow me to log in with my account details on unRAID, but my credentials work fine on the external code42 website.

Before I try to add the rest of my dockers - any ideas? Like I said, I think I have "good" app data on /mnt/disk4/appdata (and /mnt/user/appdata) but do not want to keep going without some kind of sanity check. But I may have messed up and may have to re-configure all of my dockers, which would kind of bite....

Diagnostics attached. Thanks! zack-unraid-diagnostics-20230130-1104.zip

-

Shrink Array - calculate time to clear array?

TexasDave replied to TexasDave's topic in General Support

After 3 plus days - I gave up. I figure the bad disk was causing too many errors. I went with the "Remove Drives Then Rebuild Parity" Method and this worked fine. Took about 12 hours. Thanks!

-

Shrink Array - calculate time to clear array?

TexasDave replied to TexasDave's topic in General Support

I was hoping 1-2 days max but that does not appear to be the case 🙂

-

I am shrinking my array - dropping an old 3TB drive (which is close to failure) and will just use the remaining 3 6TB drives which have plenty of room. I kicked off the "clear array drive" script for my 3TB drive. It has been running for 48 hours. Is there any way to see how much longer it will take or where it is at in the process? Attaching current status and read/write speeds. Thanks!
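As a rough sanity check on what "normal" would look like, the time to overwrite a whole drive is just its size divided by the sustained write speed. The Python sketch below does that arithmetic; the 50 MB/s and 10 MB/s figures are assumed example speeds, not readings from this server, and the function name is made up for illustration.

```python
def hours_to_clear(size_tb, write_mb_per_s):
    """Estimate hours to write `size_tb` terabytes at a sustained speed.

    Uses decimal units (1 TB = 1e12 bytes, 1 MB = 1e6 bytes), matching how
    drive capacities are advertised.
    """
    size_bytes = size_tb * 1e12
    seconds = size_bytes / (write_mb_per_s * 1e6)
    return seconds / 3600


if __name__ == "__main__":
    # Assumed speeds for illustration only.
    for speed in (50, 10):
        print(f"3 TB at {speed} MB/s: about {hours_to_clear(3, speed):.1f} hours")
```

At an assumed 50 MB/s a 3TB drive would take under 17 hours, and even at 10 MB/s it would be about 83 hours, so a run that is far past those figures suggests the drive is stalling on errors rather than simply being slow.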

-

Added 2nd Parity - now getting a variety of errors

TexasDave replied to TexasDave's topic in General Support

That second data disk in my array is throwing up errors again. This time, I will let the Data Rebuild complete. Once that is done - I will likely replace that data disk....
