Everything posted by thestraycat

-

Yeah, I've tried those. The container doesn't seem to honour the variable: KEY: THEME_DARK_PRIMARY VALUE: #E58325

-

@CatDuck Tried the variable for 'dark mode' on the new latest ... but it's still not working.

-

Hi,

- I currently run Unraid 6.12.4
- IPVLAN mode
- I have 2 NICs and use 1 NIC for all docker traffic, VMs and the host UI.

I have a single container that lives on my network bridge (BR0) with the IP address 192.168.1.40. Everything works as expected, but I can't create a DHCP reservation for this container in my router, as its MAC address shows as the same MAC address as my Unraid server host, and it would need to be unique to save the DHCP reservation.

Do I need to move my networking mode over to MACVLAN to get a unique MAC address for my fixed-IP container on BR0?

As it stands I've limited the DHCP scope to give me 50 or so fixed addresses for issues like this, so it's not crucial, but I find it more convenient to keep track of DHCP reservations on the router than to find and update servers with fixed addresses.

Any advice? Cheers.

-

Hmmm.. I'm running the development build of this plugin, and whenever I try to save a pre-script or post-script it never finishes processing and never saves. It just hangs forever until I refresh the screen (and doesn't save the scripts). Anyone else have that issue? Can anyone test theirs by typing something superficial like the below and hitting save?

tl;dr - Just want to know if you can save the info below into the vm_backup > 'upload scripts' > pre-script tab.

    #!/bin/bash
    echo "Hello World"

-

I believe it's coming, but for now he's very responsive on the GitHub issues page: https://github.com/EideardVMR/unraid-easybackup

-

Anyone tried the 'Easy Backup' plugin for backing up Unraid VMs? It seems relatively new and beta, but at least it's actively maintained. I will miss the pre- and post-script options of the vm_backup plugin, however.

-

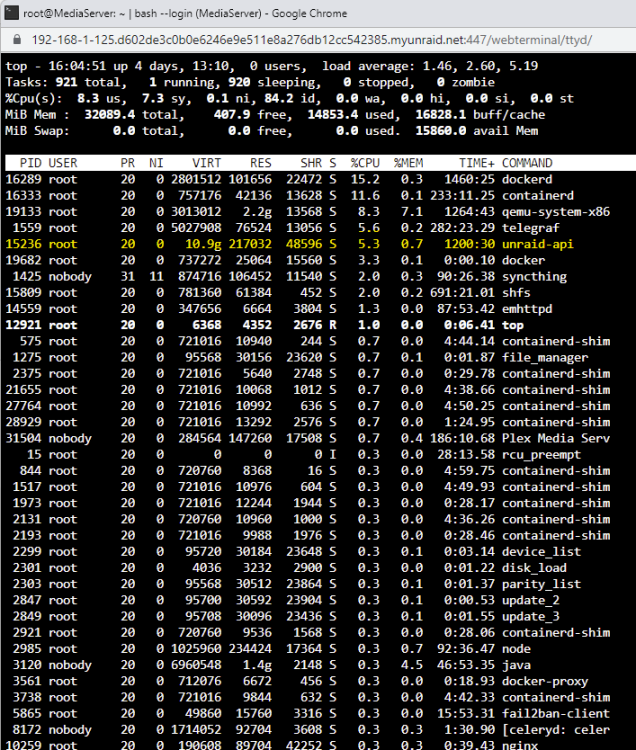

Yup, same here. I've observed a few changes, tbh, since updating to the last 2 stable releases.

- My idle CPU stats are much more chaotic than they were on older Unraid versions. I used to idle between 8% and 16% CPU; now I idle anywhere between 15% and 50%, roughly triple what it was a few versions back.
- After boot my CPU sits at 100% on all cores for around 5 minutes before settling down, which is strange behaviour. It seems to happen while docker is loading. It didn't do this on previous Unraid versions.
- Unraid-API always seems to be one of my biggest memory hogs. I hadn't really noticed this a few versions back, but it's quite considerable. I've just rebooted the server and unraid-api is straight back up to 10.9g from a cold boot.

Maybe the memory handling has changed in a recent version and what I'm seeing is normal, but with the process being called 'Unraid-API' it doesn't feel like it should be so high. However, I'm unaware of what this process is doing.

I was hoping not to have to go through the painstaking process of inspecting every container and plugin, and thought I'd post to see if anyone was experiencing something similar.

-

Same here. It's been happening for weeks.

-

In-use Docker XML Templates (for actively running containers)

thestraycat replied to thestraycat's topic in General Support

Thanks for the info - yup, already running the template authoring mode. I'm trying to understand why some containers are available directly through the search in CA while others need you to manually click on "view dockerhub". I thought this was the difference between 'compliant with unraid' and 'everything else on dockerhub'.

In-use Docker XML Templates (for actively running containers)

thestraycat replied to thestraycat's topic in General Support

Thanks - so are you saying the XML is auto-generated at the time of 'adding a new container' from the Community Applications plugin, as opposed to being made independently?

In-use Docker XML Templates (for actively running containers)

thestraycat replied to thestraycat's topic in General Support

Yeah sure. Is there anything confidential in there, though, stored in the variables?

UPDATE: I've sorted it out now. There were a few dubious files that I've now removed. They may have been down to 2nd instances at some time, or failed updates? Either way, I had a load of xxx(1).xml files; deleting them seems to have cleared up the 'installed apps' view nicely.

- my-radarr.xml
- my-radarr(1).xml <-- deleted
- my-radarr.bak

@squid - Is there any official documentation for creating containers from scratch using dockerfiles that you want accessible through CA docker apps? I've had to fudge my own XML file to pull down my container nicely. (This was after I noticed the issue with dupes in my 'installed apps' view.)

In-use Docker XML Templates (for actively running containers)

thestraycat replied to thestraycat's topic in General Support

@JonathanM @Squid Hey Jonathan, yeah, I know of the installed/previous option. However, it doesn't quite work how I expected.

For example, if I view the 'installed apps' and filter by 'docker', I have many duplicate entries in that view, so I'm not sure whether that's a live view or whether it's displaying cached entries from somewhere else. All but one of the duplicates are CA containers too. One is imported from dockerhub, I believe.

Furthermore, if I delete one of the duplicate instances from 'installed apps', both disappear, and when I then reinstall the container from 'previous apps' I end up with 2 identical instances back in 'installed apps'. So I can't even fix it.

As the view is currently polluted with stale entries, I can't use it for what I need, which is getting a list of running containers. Is there an API call built into Unraid for grabbing all known running containers, or should I just query and filter all this with the native docker engine commands?

Bonus points - is there an Unraid container building/compliance guide on the forum that you know of, which I can use to contribute containers to the community and have them display in CA?

Docker unresponsive, Unraid at 100% cpu, eventual system crash.

thestraycat replied to Dreytac's topic in General Support

Did you get to the bottom of it?

Hi guys,

I know the templates live in: /boot/config/plugins/dockerMan/templates-user

However, any old templates also live in that folder, along with old instances and attempts from bygone years! How can I generate a list of the XML files that are currently live/in use?

I was wondering if I could query the dockerman parser for a list of XML files that are actively being used right now, but as it's a parser I assumed it's likely unaware of its state. There must be some info to query to get that list, as the containers are obviously restarted after a reboot.

I thought I'd ask the forum before putting something together to do this, as I was hoping for an easier solution. @Squid - maybe one for you?
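One rough way to approach this would be to take the names of the running containers from the docker engine and look for a matching file in the templates folder. The sketch below is only that: `list_live_templates` is a hypothetical helper name, and it assumes each template is named `my-<container name>.xml`, which may not hold for every container (renamed containers, for example, would be missed).

```shell
#!/bin/bash
# Hypothetical helper: reads container names on stdin (one per line) and
# prints the path of each matching template file found in the given folder.
# ASSUMPTION: templates are named my-<container name>.xml.
# The body runs in a subshell "( ... )" so its variables stay local.
list_live_templates() (
    template_dir="$1"
    while IFS= read -r name; do
        # Skip empty lines.
        [ -n "$name" ] || continue
        candidate="$template_dir/my-$name.xml"
        if [ -f "$candidate" ]; then
            printf '%s\n' "$candidate"
        else
            # Report names without a matching template on stderr.
            printf 'no template found for: %s\n' "$name" >&2
        fi
    done
)
```

It could then be fed from the docker CLI, for example `docker ps --format '{{.Names}}' | list_live_templates /boot/config/plugins/dockerMan/templates-user`. Anything in the folder that is not printed would be a candidate for a stale template, though that is worth checking by hand before deleting.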

-

Hi guys - quick one. I'm trying to troubleshoot some time issues in a container that I use (healthchecks.io), and to rule out any issues being passed to the container I'm looking for some confirmation on how the Unraid time is displayed.

The time at the time of writing this was 13:32.

- When I look in Unraid in settings > date and time (UTC, via NTP), it shows as 13:32.
- When I go to the console in Unraid and type 'date', I get 13:32 (which I assume is NTP adjusting my time for BST?).
- When I go to the console in Unraid and type 'date -u', I get 12:32 (which I assume is forcing UTC time).

Can someone explain to me why UTC looks different in both?

Lastly, if I go to the Unraid console and view the time per timezone, setting the London/Europe timezone displays the correct BST time, and when I force the UTC timezone I see the earlier, incorrect time. (The time was 13:55 when I ran the command.)

In the meantime I'm going to check the BIOS time, but I'm fully expecting this to be correct.
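For anyone comparing the same outputs, here is a small sketch that prints one fixed instant under two timezones, which may help separate "the clock is wrong" from "the same time is being shown in a different zone". `show_instant` is a hypothetical helper, the epoch value is a made-up summer instant (2023-08-01 12:32:00 UTC) rather than the actual time of the post, and the `-d @SECONDS` form assumes GNU date.

```shell
#!/bin/bash
# Hypothetical helper: prints an instant (seconds since the Unix epoch) as
# wall-clock time in the given timezone. Assumes GNU date ("-d @SECONDS").
show_instant() (
    epoch="$1"
    zone="$2"
    TZ="$zone" date -d "@$epoch" '+%H:%M %Z'
)

# 1690893120 is 2023-08-01 12:32:00 UTC, an illustrative summer instant.
show_instant 1690893120 UTC
show_instant 1690893120 Europe/London
```

On a system with timezone data installed, the second line should read one hour later than the first, since British Summer Time is UTC+1. That would be consistent with 'date' reporting local time and 'date -u' reporting UTC for the same moment.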

-

Been battling Deluge all morning. My Deluge web UI was prompting me for a password as usual, but wouldn't accept my usual password. Somehow Deluge's web UI password had defaulted back to 'deluge'. However, when I tried to reset the password back to my previous personal password in the web UI, Deluge would hang and crash, and needed a reboot to become responsive.

It seemed that my web.conf file was rammed full of stale sessions, as the file had bloated to 40MB in size, and I think Deluge was crashing because of it. Since deleting 99% of the stale sessions in my web.conf, Deluge loads a lot quicker from a cold restart, is more responsive, and also now allows me to save my password again in the web UI.

Leaving it here in case anyone else has this issue! @binhex Should the stale sessions not be removed from the web.conf at some point?
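If anyone wants to keep an eye on this, a simple size check is probably enough to spot the problem early. This is only a sketch: `conf_is_bloated` is a hypothetical helper, and both the threshold and the path to web.conf are assumptions that depend on your own appdata layout.

```shell
#!/bin/bash
# Hypothetical check: exits 0 when the file is larger than limit_bytes,
# and non-zero otherwise. It does not modify the file.
conf_is_bloated() (
    file="$1"
    limit_bytes="$2"
    # Arithmetic expansion strips any padding that wc adds on some systems.
    size=$(( $(wc -c < "$file") ))
    [ "$size" -gt "$limit_bytes" ]
)
```

For example, `conf_is_bloated /path/to/web.conf 1048576 && echo "web.conf is over 1 MiB"` would flag the file long before it reached the 40MB mentioned above. It is worth stopping the container and taking a copy of the file before trimming anything by hand.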

-

@u.stu - Perfect! Thank you.

-

Hi, I was wondering if there is a place where I can see/track the current list of packages for nerdtools? I'm hanging back on 6.10 with the older 'nerdpack' until a few of the packages that I rely on are added to nerdtools. Is there a list of packages on GitHub or similar?

-

@JorgeB - Sorry, it was just a bad example of a made-up Unraid share. (I didn't mean to reference the /user share of Unraid! My bad!) /work or similar would have been more fitting!