Posts posted by casperse

-

-

Thanks for helping @itimpi 🙂

My path movies\Movies predates everyone switching to data\Movies, so yes, that is intentional.

I found 3.7 TB in the recycle plugin, which I have now emptied, and I used Midnight Commander (mc) in a terminal window to do a disk-to-disk move.

After I freed up some space, the mover started running, and the cache drive is now back to normal.

But looking in the log, I can see that I still get the "No space left" error:

Feb 8 11:25:03 Server shfs: copy_file: /mnt/cache/tv shows/TV Shows/Magnum P.I. (2018) [tvdb-350068]/Season 04/Magnum P.I. (2018) - S04E12 - Angels Sometimes Kill [WEB-DL.720p][8bit][h264][AC3 5.1]-EGEN.mkv /mnt/disk2/tv shows/TV Shows/Magnum P.I. (2018) [tvdb-350068]/Season 04/Magnum P.I. (2018) - S04E12 - Angels Sometimes Kill [WEB-DL.720p][8bit][h264][AC3 5.1]-EGEN.mkv (28) No space left on device

I think I found a change in one folder that could impact the split level.

Some time ago I set up Lidarr to use a subdirectory, which might have broken the split level I use for music. (All the other split levels have been working for years.)

Looking at the path I have:

music\Albums new\Artist\album\files

The share is set like this:

Looking at the cfg files for the shares, I still have cfg files from when I imported data from my Synology NAS years ago.

Is it OK to delete all cfg files that don't correspond to a shared folder on my Unraid server?

Thanks again!

-

Update: The mover just stopped; it can't write to the drives, even though there is plenty of space on the other drives?

-

Hi All

I have been searching for this problem, but none of the causes I found apply to my server.

Drive 11:

And I can see more drives running full, even though I have 80G set as the minimum free space.

It's really strange, since I have no new shares. I created them a long time ago, all like this:

My diagnostics file is attached below.

I really don't like seeing drives this full, especially when other drives have lots of empty space.

I have Fixit running, and I found one drive mounted in a docker that should have been mounted as Read/Write - Slave. I have corrected this, but it looks like it's still filling up my other drives to 100%, so it must be something else?

I can see that this seems to have started when the cache drive ran full, but that shouldn't cause this, should it?

I have the mover set to run every night, so normally this is not a problem. Even now, when I run a manual move, it looks like it writes to drives with >500MB. I can see it keeps trying to write to these drives:

Feb 8 07:44:09 shfs: share cache full

Feb 8 07:44:10 shfs: cache disk full

Feb 8 07:44:15 shfs: cache disk full

Feb 8 07:44:21 shfs: share cache full

Feb 8 07:44:24 shfs: share cache full

Feb 8 07:44:25 shfs: cache disk full

I have now found 4 drives with less than 500MB free space.
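For anyone wanting to list the affected disks without clicking through the UI, here is a minimal Python sketch. It assumes the array disks are mounted at /mnt/disk* (as the log paths in this thread suggest) and treats "80G" as 80 GiB; both are assumptions, and the function names are made up for illustration.

```python
import glob
import shutil

GIB = 1024 ** 3  # assumption: "80G" is interpreted as 80 GiB


def disks_below_min_free(free_by_disk, min_free_bytes):
    """Return the disks whose free space is below the minimum (sorted as strings)."""
    return sorted(d for d, free in free_by_disk.items() if free < min_free_bytes)


def free_space_by_disk(pattern="/mnt/disk*"):
    """Map every mount point matching the pattern to its free bytes."""
    # Assumption: array disks are mounted as /mnt/disk1, /mnt/disk2, ...
    return {path: shutil.disk_usage(path).free for path in glob.glob(pattern)}


if __name__ == "__main__":
    for disk in disks_below_min_free(free_space_by_disk(), 80 * GIB):
        print(disk, "is below the 80 GiB minimum free space")
```

This only reports which disks are under the threshold; it does not explain why the mover or shfs picked them.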

Should I run unBalance?

As always your help is really appreciated & needed 🙂

-

Would a chmod 777 of the error.log.1 be a solution?

UPDATE: Sorry, I didn't see your reply, Squid. Thanks, looking forward to the next release 🙂

-

Hi All

I started getting this error message from Unraid by e-mail, and so far I haven't been able to find the cause.

(No new changes? - I am running Nginx-Proxy-Manager instead of Swag)

My Diag file: here

-

On 1/9/2022 at 11:25 PM, ReDew said:

@casperse There is a section at the bottom of the ui that displays your ip:port with either a green check mark or a red exclamation mark saying the port is open or closed. This will determine your connectability. I found I had to change my settings in the rtorrent.rc file to make them load on boot. The format for identifying the port must be #-# (5678-6789 for example). My vpn (Mullvad) only lets me generate random individual ports to associate with my wireguard key, so my input for the rtorrent.rc file had to be 5678-5678 to limit it to that one port on boot.

Thanks! I found this line in the rtorrent.rc file:

# Port range to use for listening.

#

#network.port_range.set = 49160-49160

So is it enough to just uncomment this line and set it to, for example:

network.port_range.set = 58550-58550
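As a quick sanity check of the #-# format ReDew describes, here is a small Python sketch. The function name `parse_port_range` is hypothetical, and it only checks the "low-high" form from the quote above; it says nothing about what else rTorrent itself may accept.

```python
import re


def parse_port_range(value):
    """Parse a 'low-high' port range string into a (low, high) tuple.

    Raises ValueError if the string is not in the #-# form or the
    ports are out of order or outside 1-65535.
    """
    match = re.fullmatch(r"(\d+)-(\d+)", value.strip())
    if not match:
        raise ValueError(f"expected '#-#', got {value!r}")
    low, high = int(match.group(1)), int(match.group(2))
    if not (1 <= low <= high <= 65535):
        raise ValueError(f"invalid port range: {low}-{high}")
    return low, high


if __name__ == "__main__":
    # A single forwarded port is written as the same number twice.
    print(parse_port_range("58550-58550"))
```

A single port such as 58550 on its own would be rejected by this check, which matches the advice to write it as 58550-58550.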

-

Thanks @ReDew, that must have been it! During the re-install I kept the default /Data, with no "-" on the incomplete folder like before.

It is downloading now, but it's not uploading any data (0), so I am back to opening ports?

How do I check whether I am connectable for seeding?

Running through PIA WireGuard, which ports should I create NAT rules for?

I am running in "Bridge mode" and not on Proxynet.

-

50 minutes ago, binhex said:

can you attach your rtorrent config file, it should be located at /config/rtorrent/config/rtorrent.rc

Thanks for helping me!

I removed all docker templates and the docker image and did yet another re-install, and I also removed the "perms.txt" just to make sure permissions were not the problem, and I got the UI to start! 🙂

But my speeds are really slow.

So I wonder if I am missing any port forwarding?

So far I thought I only needed the WireGuard port 51820/UDP.

Should it be the whole range 51820 - 65535 UDP?

Other ports shouldn't be needed when I only want it to use the WireGuard VPN, right?

I also have Strict Port Forwarding set to yes...

So close now... LOL

-

On 11/30/2021 at 5:19 PM, casperse said:

Almost ready to give up and move on to another solution, but I must admit I have the most trust in Binhex and the security built into these dockers... Can anyone give any input on why this isn't working? Cheers

I really need some help troubleshooting this!

1) I got delugevpn working, but I would like to use rTorrent 🙂

2) I had this working a year ago, but after doing a total re-install I can't get rTorrent to run. (No VPN or Strict port forwarding)

My configuration is as shown below:

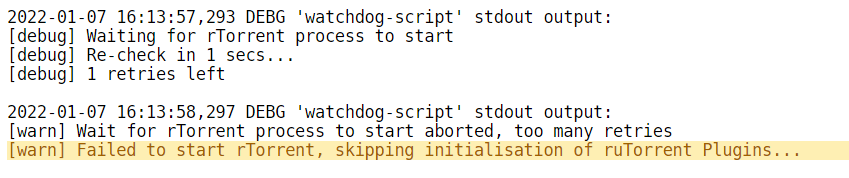

Log:

Error log:

1641568550 C Caught exception: 'Error in option file: ~/.rtorrent.rc:61: Bad return code.'.

The access rights are fine (I even did a chmod 777, just to make sure).

-

I keep getting this error:

Googling it suggested that the file should be chmod 600. I did this, but I still get this error.

I am using the same conf file for WireGuard as used in Binhex, but I can see that he defines the user and password in the docker, and you write that this is only for OpenVPN?

So is the conf file different? Sorry, I haven't found much information about this 🙂

-

On 12/23/2021 at 1:48 PM, SimonF said:

Hi @casperse

it shouldn't make any difference. That is the serial number provided which I use for the key from udev.

I can look to use the Model from Database on the Vendor:Product Column.

Have you tried setting the Automapping on the device or port? Then you dont need to manual hotplug. Toggle the switch to enable.

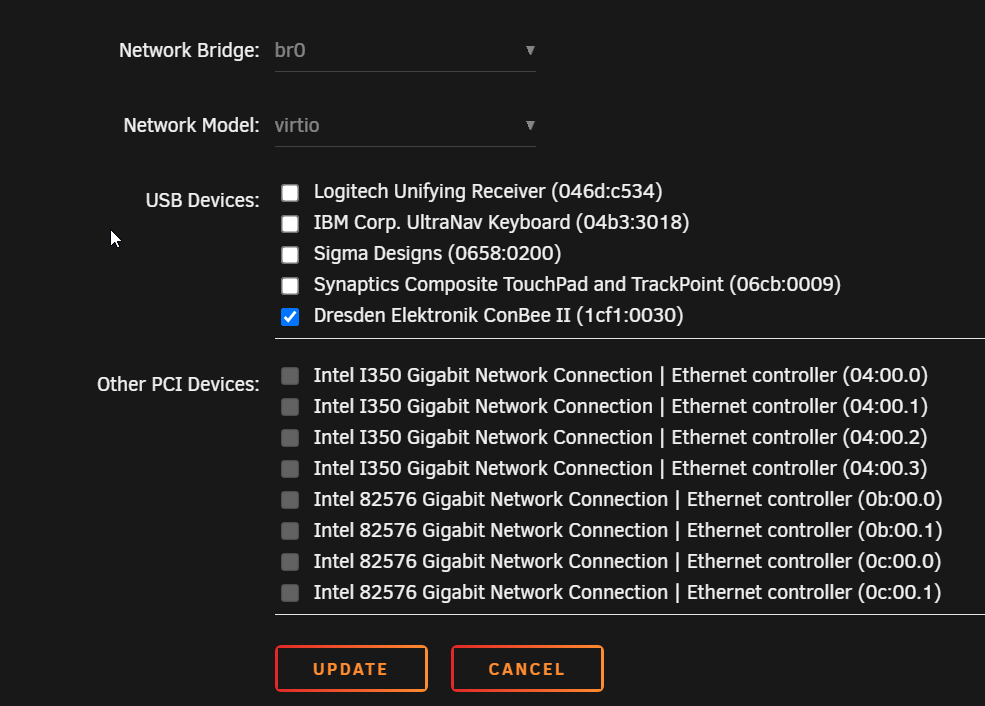

Perfect! Sorry for the late answer, I had too many Christmas duties LOL

I didn't find this option before. I have now done this for both USB devices needed in Home Assistant!

Guess this will solve all my problems, thanks a lot! This is a great plugin for Unraid.

-

Thanks! Seems to be working 🙂

After the first reboot it only mapped one of the USB drives, but after the second it looks fine; both are mapped:

Under the VMs and the USB manager, Sigma Designs is listed as 1-11 0658_0200, but all the other USB devices use the vendor name.

Could this have anything to do with this one not being attached after reboot?

-

-

4 hours ago, SimonF said:

If you hover over the error, it will most probably say it is already in use.

Yes, you don't need them on the VM, so they can be removed.

I am looking for a way to identify allocation/usage outside of my plugin but not found an easy way as yet.

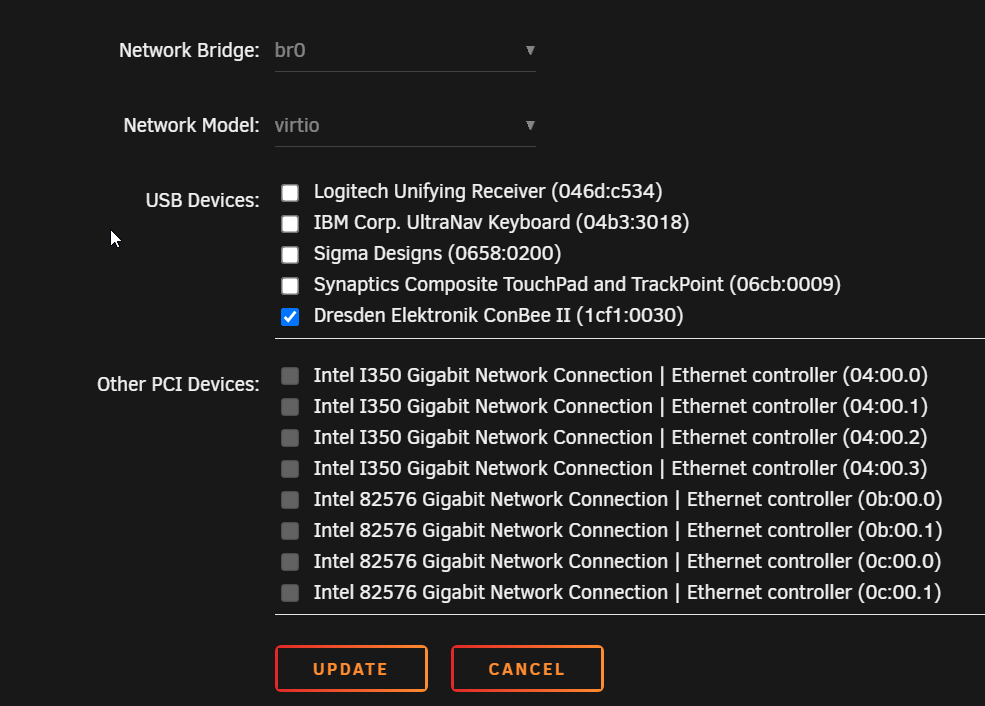

I have removed the USB X from the VM UI, but I can still see some USB settings in the XML view?

I also get it here if I try to detach and reattach the USB:

Another thing is that this plugin doesn't list the USB name of the Z-Wave stick, but I don't think that's related to the error?

The plugin shows the Sigma as:

Should I bind the driver before using the tool to attach the USB to the VM?

-

This is a great application!

Having Home Assistant on a VM with USB sticks for Zigbee and Z-Wave is very troublesome!

I have never gotten the VM setting to work. After every reboot I needed another plugin to detach and re-attach the USB devices.

I now have your plugin installed and it seems to work, but I have warnings here:

Should I just remove all USB settings from the VM config and let the plugin handle things?

What does the warning mean?

Again, thanks for creating this app, it's very cool!

-

Almost ready to give up and move on to another solution, but I must admit I have the most trust in Binhex and the security built into these dockers... Can anyone give any input on why this isn't working? Cheers

-

I have tried changing the NAME SERVERS from

209.222.18.222,84.200.69.80,37.235.1.174,1.1.1.1,209.222.18.218,37.235.1.177,84.200.70.40,1.0.0.1

To:

84.200.69.80,37.235.1.174,1.1.1.1,37.235.1.177,84.200.70.40,1.0.0.1

Still can't start it... I tried binhex-qbittorrentvpn and that also works, just not rTorrent?

Update: I have them all on my "Proxynet" and only binhex-privoxyvpn on "Bridge".

(I have 5 PIA licenses, but I can't run Deluge & privoxyvpn at the same time?)

My ports for rTorrent match the ones on the docker:

Docker:

I can also see that the wg0.conf is generated!

Anyone have any input on what I am doing wrong? 🙂

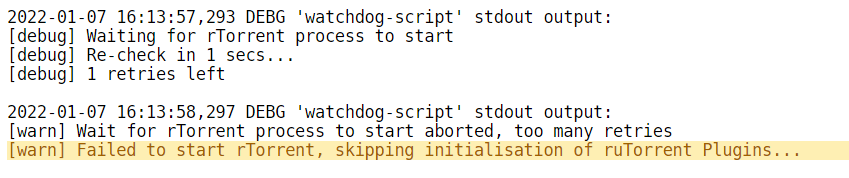

Logfile:

2021-11-27 11:07:20,401 DEBG 'watchdog-script' stdout output:

[debug] Waiting for rTorrent process to start

[debug] Re-check in 1 secs...

[debug] 2 retries left

2021-11-27 11:07:21,406 DEBG 'watchdog-script' stdout output:

[debug] Waiting for rTorrent process to start

[debug] Re-check in 1 secs...

[debug] 1 retries left

2021-11-27 11:07:22,410 DEBG 'watchdog-script' stdout output:

[warn] Wait for rTorrent process to start aborted, too many retries

2021-11-27 11:07:22,410 DEBG 'watchdog-script' stdout output:

[warn] Failed to start rTorrent, skipping initialisation of ruTorrent Plugins...

[debug] VPN incoming port is 49184

[debug] rTorrent incoming port is 49184

[debug] VPN IP is 10.1.204.252

[debug] rTorrent IP is 0.0.0.0

I did try to add the above port (49184), but that didn't work either.

-

Just moved back to PIA (Black Friday). I am following the WireGuard guide and I have everything working for Deluge.

But for some reason rTorrent just doesn't want to start up.

I have checked that the ports are open (same as before) and I have enabled debug.

I also tried re-installing the docker and removing the appdata.

Error Log:

2021-11-26 23:10:29,752 DEBG 'watchdog-script' stdout output:

[debug] Waiting for rTorrent process to start

[debug] Re-check in 1 secs...

[debug] 3 retries left

2021-11-26 23:10:30,756 DEBG 'watchdog-script' stdout output:

[debug] Waiting for rTorrent process to start

[debug] Re-check in 1 secs...

[debug] 2 retries left

2021-11-26 23:10:31,760 DEBG 'watchdog-script' stdout output:

[debug] Waiting for rTorrent process to start

[debug] Re-check in 1 secs...

[debug] 1 retries left

2021-11-26 23:10:32,764 DEBG 'watchdog-script' stdout output:

[warn] Wait for rTorrent process to start aborted, too many retries

2021-11-26 23:10:32,764 DEBG 'watchdog-script' stdout output:

[warn] Failed to start rTorrent, skipping initialisation of ruTorrent Plugins...

[debug] VPN incoming port is 50344

[debug] rTorrent incoming port is 50344

[debug] VPN IP is 10.14.238.230

[debug] rTorrent IP is 0.0.0.0

Tried to change so many things (I was sure I could get this working, if I just kept trying LOL)

-

On 10/27/2021 at 9:51 PM, casperse said:

Hi binhex

I think you are the one to ask

Over some years I have transferred data one way --> to my Unraid server

FTP, Syncthing, Resilio Sync, and lately LFTP using the Seedsync Docker & an Ubuntu VM (the last one is very unstable)

Could I use your binhex rclone to connect to my off-site server and do a one-way sync to my Unraid server?

I have read all of the posts, and they are mostly about Google Drive and big vendors.

I was able to install rclone on the remote server doing this:

Would this make it possible to use your docker?

And would the configuration be simpler?

Sorry, I am still trying to get my head around setting this up to just run and work (FAST)

Would this docker work for this problem?

-

Thanks for the info and your work creating this docker!

I also think Trilium looks more modern, but Joplin did have a native app for both Android and iOS. I can't find any apps for mobile devices, only desktop apps?

-

I am now facing this very old problem again after updating my Nextcloud to version 22.2.3 and moving from SWAG to Nginx Proxy Manager

I deleted the default config (\appdata\nextcloud\nginx\site-confs\default) and got the new version, and that removed the dreaded error:

Your web server is not properly set up to resolve "/.well-known/webfinger". Further information can be found in the documentation

But I got the other old error instead:

The "Strict-Transport-Security" HTTP header is not set to at least "15552000" seconds. For enhanced security, it is recommended to enable HSTS as described in the security tips

So I went back into the default configuration and uncommented the line:

add_header Strict-Transport-Security "max-age=15768000; includeSubDomains; preload;" always;

Which removed the error but resulted in me getting the old error back:

So I am in a time loop and just can't get rid of these two errors. Any input on how to solve this?
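To rule out the value itself, the max-age in the header can be compared against the 15552000 seconds that the Nextcloud warning asks for. This is only a sketch with made-up function names; it parses a header string and does not check whether the header actually reaches the browser through the proxy.

```python
import re

# Minimum taken from the Nextcloud warning text quoted above.
NEXTCLOUD_MIN_MAX_AGE = 15552000


def hsts_max_age(header_value):
    """Return the max-age (in seconds) from an HSTS header value, or None."""
    match = re.search(r'max-age\s*=\s*"?(\d+)', header_value, re.IGNORECASE)
    return int(match.group(1)) if match else None


def meets_minimum(header_value, minimum=NEXTCLOUD_MIN_MAX_AGE):
    """True if the header carries a max-age of at least `minimum` seconds."""
    age = hsts_max_age(header_value)
    return age is not None and age >= minimum


if __name__ == "__main__":
    value = "max-age=15768000; includeSubDomains; preload;"
    print(hsts_max_age(value), meets_minimum(value))
```

The value from the default file (15768000) is above the minimum, so if the warning persists the header is probably not arriving at the client, rather than being too small.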

My Nginx Proxy Manager is really simple:

And the default file:

upstream php-handler {
    server 127.0.0.1:9000;
}

server {
    listen 80;
    listen [::]:80;
    server_name _;
    return 301 https://$host$request_uri;
}

server {
    listen 443 ssl http2;
    listen [::]:443 ssl http2;
    server_name _;
    ssl_certificate /config/keys/cert.crt;
    ssl_certificate_key /config/keys/cert.key;

    # Add headers to serve security related headers
    # Before enabling Strict-Transport-Security headers please read into this
    # topic first.
    add_header Strict-Transport-Security "max-age=15768000; includeSubDomains; preload;" always;
    #
    # WARNING: Only add the preload option once you read about
    # the consequences in https://hstspreload.org/. This option
    # will add the domain to a hardcoded list that is shipped
    # in all major browsers and getting removed from this list
    # could take several months.

    # set max upload size
    client_max_body_size 512M;
    fastcgi_buffers 64 4K;

    # Enable gzip but do not remove ETag headers
    gzip on;
    gzip_vary on;
    gzip_comp_level 4;
    gzip_min_length 256;
    gzip_proxied expired no-cache no-store private no_last_modified no_etag auth;
    gzip_types application/atom+xml application/javascript application/json application/ld+json application/manifest+json application/rss+xml application/vnd.geo+json application/vnd.ms-fontobject application/x-font-ttf application/x-web-app-manifest+json application/xhtml+xml application/xml font/opentype image/bmp image/svg+xml image/x-icon text/cache-manifest text/css text/plain text/vcard text/vnd.rim.location.xloc text/vtt text/x-component text/x-cross-domain-policy;

    # HTTP response headers borrowed from Nextcloud `.htaccess`
    add_header Referrer-Policy "no-referrer" always;
    add_header X-Content-Type-Options "nosniff" always;
    add_header X-Download-Options "noopen" always;
    add_header X-Frame-Options "SAMEORIGIN" always;
    add_header X-Permitted-Cross-Domain-Policies "none" always;
    add_header X-Robots-Tag "none" always;
    add_header X-XSS-Protection "1; mode=block" always;

    # Remove X-Powered-By, which is an information leak
    fastcgi_hide_header X-Powered-By;

    root /config/www/nextcloud/;

    # display real ip in nginx logs when connected through reverse proxy via docker network
    set_real_ip_from 172.0.0.0/8;
    real_ip_header X-Forwarded-For;

    # Specify how to handle directories -- specifying `/index.php$request_uri`
    # here as the fallback means that Nginx always exhibits the desired behaviour
    # when a client requests a path that corresponds to a directory that exists
    # on the server. In particular, if that directory contains an index.php file,
    # that file is correctly served; if it doesn't, then the request is passed to
    # the front-end controller. This consistent behaviour means that we don't need
    # to specify custom rules for certain paths (e.g. images and other assets,
    # `/updater`, `/ocm-provider`, `/ocs-provider`), and thus
    # `try_files $uri $uri/ /index.php$request_uri`
    # always provides the desired behaviour.
    index index.php index.html /index.php$request_uri;

    # Rule borrowed from `.htaccess` to handle Microsoft DAV clients
    location = / {
        if ( $http_user_agent ~ ^DavClnt ) {
            return 302 /remote.php/webdav/$is_args$args;
        }
    }

    location = /robots.txt {
        allow all;
        log_not_found off;
        access_log off;
    }

    # Make a regex exception for `/.well-known` so that clients can still
    # access it despite the existence of the regex rule
    # `location ~ /(\.|autotest|...)` which would otherwise handle requests
    # for `/.well-known`.
    location ^~ /.well-known {
        # The following 6 rules are borrowed from `.htaccess`
        location = /.well-known/carddav { return 301 /remote.php/dav/; }
        location = /.well-known/caldav { return 301 /remote.php/dav/; }

        # Anything else is dynamically handled by Nextcloud
        location ^~ /.well-known { return 301 /index.php$uri; }

        try_files $uri $uri/ =404;
    }

    # Rules borrowed from `.htaccess` to hide certain paths from clients
    location ~ ^/(?:build|tests|config|lib|3rdparty|templates|data)(?:$|/) { return 404; }
    location ~ ^/(?:\.|autotest|occ|issue|indie|db_|console) { return 404; }

    # Ensure this block, which passes PHP files to the PHP process, is above the blocks
    # which handle static assets (as seen below). If this block is not declared first,
    # then Nginx will encounter an infinite rewriting loop when it prepends `/index.php`
    # to the URI, resulting in a HTTP 500 error response.
    location ~ \.php(?:$|/) {
        fastcgi_split_path_info ^(.+?\.php)(/.*)$;
        set $path_info $fastcgi_path_info;

        try_files $fastcgi_script_name =404;

        include /etc/nginx/fastcgi_params;
        fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;
        fastcgi_param PATH_INFO $path_info;
        fastcgi_param HTTPS on;

        fastcgi_param modHeadersAvailable true; # Avoid sending the security headers twice
        fastcgi_param front_controller_active true; # Enable pretty urls
        fastcgi_pass php-handler;

        fastcgi_intercept_errors on;
        fastcgi_request_buffering off;
    }

    location ~ \.(?:css|js|svg|gif)$ {
        try_files $uri /index.php$request_uri;
        expires 6M; # Cache-Control policy borrowed from `.htaccess`
        access_log off; # Optional: Don't log access to assets
    }

    location ~ \.woff2?$ {
        try_files $uri /index.php$request_uri;
        expires 7d; # Cache-Control policy borrowed from `.htaccess`
        access_log off; # Optional: Don't log access to assets
    }

    location / {
        try_files $uri $uri/ /index.php$request_uri;
    }
}

-

I am looking to get away from my Synology DS Note Station application.

It's one app that synchronizes between everything, iOS/Android apps and a desktop app, and it has a web UI that I can access through a proxy.

I am not sure, but would this then require all 4 of these dockers:

joplin/server:latest

postgresql14-joplin

acaranta/docker-joplin

https://github.com/jlesage/docker-baseimage-gui

The last one I couldn't find in the Unraid store.

And this would provide the same functionality? (Except the web UI would be through VNC?)

Sorry, I have been reading and it's not stated very clearly what's required, or I am just not finding it in my searches.

Br

Casperse

-

I am also looking to migrate from DS Note (running on a VM on Unraid, just for this and Photo Station).

So my goal is to find replacements and ditch the VM.

I actually found your post by searching for Joplin!

Question: is it correct that in order to run the Joplin server you first need to set up a Postgres database docker?

For such a small note app, I find it strange that it would need a separate DB.

I want a solution that syncs across PC/iOS/web page, with support for saving web pages to the note app.

So far it seems that Joplin ticks all the boxes, but I haven't tested it yet.

-

On 11/14/2021 at 9:43 AM, mgutt said:

Yes. Finally you have the same result.

It seems so. I tested it and Plex creates the complete movie file, before it is downloaded through the client. My RAM Disk is limited to 6GB and as you can see it is automatically removed after the file hits this limit:

I did not test multiple users, but I think this will be even more. So we really need to be able to change the download transcoding path somehow or we are forced to use an SSD for all transcodes or install much more RAM 🙈

I came to the same conclusion. I did a test of 3 streams and one download. I have installed and am running a lot more dockers and have never had an issue using the max 8G for /tmp/PlexRamScratch, but this test "almost" maxed out the server (98%).

I decided to disable all sync/download on the Plex server for now (I don't want to lose the gain I have from using RAM).

Let's hope Plex creates a new path in the future for these sync/download conversions.

HELP! - Disk 100% full (With High water settings)

in General Support

Posted

So the split level for TV shows should be set at level 3?

I have looked at all the shares, and they have the same split level as when I started running Unraid.

The 3 important shared folders, including the one that the cache drive is trying to move folders from, are these, with paths:

Just luck that I haven't seen this before, I guess?

Do any of the others need a different split level?

\music\Albums new\Mudvayne\Album (2000)\CD1\files

\movies\Movies\title (2001)\Filenames

\tv shows\TV Shows\Title (2022)\Season 01\files
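To make it easier to talk about which folder sits at which level, here is a small Python sketch that numbers the folders in a path. It assumes the share itself counts as level 1, which is my reading of how Unraid numbers split levels and should be checked against the Unraid documentation; the function name and the `first_level` parameter are made up for illustration.

```python
def directory_levels(path, first_level=1):
    """Number each component of a backslash-separated share path.

    Assumption: the share folder itself is `first_level` (1 by default);
    change it if Unraid counts differently on your version.
    """
    parts = [p for p in path.split("\\") if p]
    return list(enumerate(parts, start=first_level))


if __name__ == "__main__":
    for example in (
        r"\music\Albums new\Mudvayne\Album (2000)\CD1\files",
        r"\movies\Movies\title (2001)\Filenames",
        r"\tv shows\TV Shows\Title (2022)\Season 01\files",
    ):
        for level, name in directory_levels(example):
            print(level, name)
        print()
```

Under that assumption the music path has two more folder levels than the movies path, which is worth keeping in mind when comparing the split level settings of the three shares.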