Report Comments posted by mgutt

-

-

On 9/2/2021 at 3:45 PM, bonienl said:

rc2 will have version 1.1.1l

Yes, but still without a fixed release date. Most other OSes have already fixed this issue.

-

My PC woke up and is now reloading a Webterminal infinitely. Even a newly opened Webterminal does it. While this happens, the logs fill up with the error from this bug report:

nginx: <datetime> [alert] 8330#8330: worker process <random_number> exited on signal 6

I am now trying to investigate the problem. First, here is what the browser's network monitor shows:

/webterminal/token is requested as follows:

GET /webterminal/token HTTP/1.1 Host: tower:5000 Connection: keep-alive Pragma: no-cache Cache-Control: no-cache User-Agent: Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/92.0.4515.159 Safari/537.36 Accept: */* Referer: http://tower:5000/webterminal/ Accept-Encoding: gzip, deflate Accept-Language: de-DE,de;q=0.9,en-US;q=0.8,en;q=0.7 Cookie: users_view=user1; db-box2=2%3B0%3B1; test.sdd=short; test.sdb=short; test.sdc=short; diskio=diskio; ca_startupButton=topperforming; port_select=eth1; unraid_11111111621fb6eace5f11d511111111=11111111365ea67534cf76a011111111; ca_dockerSearchFlag=false; ca_searchActive=true; ca_categoryName=undefined; ca_installMulti=false; ca_categoryText=Search%20for%20webdav; ca_sortIcon=true; ca_filter=webdav; ca_categories_enabled=%5Bnull%2C%22installed_apps%22%2C%22inst_docker%22%2C%22inst_plugins%22%2C%22previous_apps%22%2C%22prev_docker%22%2C%22prev_plugins%22%2C%22onlynew%22%2C%22new%22%2C%22random%22%2C%22topperforming%22%2C%22trending%22%2C%22Backup%3A%22%2C%22Cloud%3A%22%2C%22Network%3A%22%2C%22Network%3AFTP%22%2C%22Network%3AWeb%22%2C%22Network%3AOther%22%2C%22Plugins%3A%22%2C%22Productivity%3A%22%2C%22Tools%3A%22%2C%22Tools%3AUtilities%22%2C%22All%22%2C%22repos%22%2Cnull%2Cnull%2Cnull%2Cnull%2Cnull%2Cnull%5D; ca_selectedMenu=All; ca_data=%7B%22docker%22%3A%22%22%2C%22section%22%3A%22AppStore%22%2C%22selected_category%22%3A%22%22%2C%22subcategory%22%3A%22%22%2C%22selected_subcategory%22%3A%22%22%2C%22selected%22%3A%22%7B%5C%22docker%5C%22%3A%5B%5D%2C%5C%22plugin%5C%22%3A%5B%5D%2C%5C%22deletePaths%5C%22%3A%5B%5D%7D%22%2C%22lastUpdated%22%3A0%2C%22nextpage%22%3A0%2C%22prevpage%22%3A0%2C%22currentpage%22%3A1%2C%22searchFlag%22%3Atrue%2C%22searchActive%22%3Atrue%2C%22previousAppsSection%22%3A%22%22%7D; col=1; dir=0; docker_listview_mode=basic; one=tab1

response:

HTTP/1.1 200 OK Server: nginx Date: Sat, 28 Aug 2021 16:35:53 GMT Content-Type: application/json;charset=utf-8 Content-Length: 13 Connection: keep-alive

content:

{"token": ""}

ws://tower:5000/webterminal/ws is requested:

GET ws://tower:5000/webterminal/ws HTTP/1.1 Host: tower:5000 Connection: Upgrade Pragma: no-cache Cache-Control: no-cache User-Agent: Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/92.0.4515.159 Safari/537.36 Upgrade: websocket Origin: http://tower:5000 Sec-WebSocket-Version: 13 Accept-Encoding: gzip, deflate Accept-Language: de-DE,de;q=0.9,en-US;q=0.8,en;q=0.7 Cookie: users_view=user1; db-box2=2%3B0%3B1; test.sdd=short; test.sdb=short; test.sdc=short; diskio=diskio; ca_startupButton=topperforming; port_select=eth1; unraid_11111111621fb6eace5f11d511111111=11111111365ea67534cf76a011111111; ca_dockerSearchFlag=false; ca_searchActive=true; ca_categoryName=undefined; ca_installMulti=false; ca_categoryText=Search%20for%20webdav; ca_sortIcon=true; ca_filter=webdav; ca_categories_enabled=%5Bnull%2C%22installed_apps%22%2C%22inst_docker%22%2C%22inst_plugins%22%2C%22previous_apps%22%2C%22prev_docker%22%2C%22prev_plugins%22%2C%22onlynew%22%2C%22new%22%2C%22random%22%2C%22topperforming%22%2C%22trending%22%2C%22Backup%3A%22%2C%22Cloud%3A%22%2C%22Network%3A%22%2C%22Network%3AFTP%22%2C%22Network%3AWeb%22%2C%22Network%3AOther%22%2C%22Plugins%3A%22%2C%22Productivity%3A%22%2C%22Tools%3A%22%2C%22Tools%3AUtilities%22%2C%22All%22%2C%22repos%22%2Cnull%2Cnull%2Cnull%2Cnull%2Cnull%2Cnull%5D; ca_selectedMenu=All; ca_data=%7B%22docker%22%3A%22%22%2C%22section%22%3A%22AppStore%22%2C%22selected_category%22%3A%22%22%2C%22subcategory%22%3A%22%22%2C%22selected_subcategory%22%3A%22%22%2C%22selected%22%3A%22%7B%5C%22docker%5C%22%3A%5B%5D%2C%5C%22plugin%5C%22%3A%5B%5D%2C%5C%22deletePaths%5C%22%3A%5B%5D%7D%22%2C%22lastUpdated%22%3A0%2C%22nextpage%22%3A0%2C%22prevpage%22%3A0%2C%22currentpage%22%3A1%2C%22searchFlag%22%3Atrue%2C%22searchActive%22%3Atrue%2C%22previousAppsSection%22%3A%22%22%7D; col=1; dir=0; docker_listview_mode=basic; one=tab1 Sec-WebSocket-Key: aaaaaaaa3CNW7Y3Waaaaaaaa Sec-WebSocket-Extensions: permessage-deflate; client_max_window_bits 
Sec-WebSocket-Protocol: tty

response:

HTTP/1.1 101 Switching Protocols Server: nginx Date: Sat, 28 Aug 2021 16:35:53 GMT Connection: upgrade Upgrade: WebSocket Sec-WebSocket-Accept: aaaaaaaaFh/OM7XjuLssaaaaaaaa Sec-WebSocket-Protocol: tty

content:

data:undefined,

EDIT: Ah, damn it. I closed one of the still open GUI-Tabs and by that the WebTerminal does not reload anymore 😩

So there seems to be a connection between the background process which loads notifications and the WebTerminal. I will try to investigate the problem when it happens again.

But we can compare against the requests which happen if this bug is not present. This time it loads three different URLs:

ws://tower:5000/webterminal/ws

GET ws://tower:5000/webterminal/ws HTTP/1.1 Host: tower:5000 Connection: Upgrade Pragma: no-cache Cache-Control: no-cache User-Agent: Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/92.0.4515.159 Safari/537.36 Upgrade: websocket Origin: http://tower:5000 Sec-WebSocket-Version: 13 Accept-Encoding: gzip, deflate Accept-Language: de-DE,de;q=0.9,en-US;q=0.8,en;q=0.7 Cookie: users_view=user1; db-box2=2%3B0%3B1; test.sdd=short; test.sdb=short; test.sdc=short; diskio=diskio; ca_startupButton=topperforming; port_select=eth1; unraid_11111111621fb6eace5f11d511111111=11111111365ea67534cf76a011111111; ca_dockerSearchFlag=false; ca_searchActive=true; ca_categoryName=undefined; ca_installMulti=false; ca_categoryText=Search%20for%20webdav; ca_sortIcon=true; ca_filter=webdav; ca_categories_enabled=%5Bnull%2C%22installed_apps%22%2C%22inst_docker%22%2C%22inst_plugins%22%2C%22previous_apps%22%2C%22prev_docker%22%2C%22prev_plugins%22%2C%22onlynew%22%2C%22new%22%2C%22random%22%2C%22topperforming%22%2C%22trending%22%2C%22Backup%3A%22%2C%22Cloud%3A%22%2C%22Network%3A%22%2C%22Network%3AFTP%22%2C%22Network%3AWeb%22%2C%22Network%3AOther%22%2C%22Plugins%3A%22%2C%22Productivity%3A%22%2C%22Tools%3A%22%2C%22Tools%3AUtilities%22%2C%22All%22%2C%22repos%22%2Cnull%2Cnull%2Cnull%2Cnull%2Cnull%2Cnull%5D; ca_selectedMenu=All; ca_data=%7B%22docker%22%3A%22%22%2C%22section%22%3A%22AppStore%22%2C%22selected_category%22%3A%22%22%2C%22subcategory%22%3A%22%22%2C%22selected_subcategory%22%3A%22%22%2C%22selected%22%3A%22%7B%5C%22docker%5C%22%3A%5B%5D%2C%5C%22plugin%5C%22%3A%5B%5D%2C%5C%22deletePaths%5C%22%3A%5B%5D%7D%22%2C%22lastUpdated%22%3A0%2C%22nextpage%22%3A0%2C%22prevpage%22%3A0%2C%22currentpage%22%3A1%2C%22searchFlag%22%3Atrue%2C%22searchActive%22%3Atrue%2C%22previousAppsSection%22%3A%22%22%7D; col=1; dir=0; docker_listview_mode=basic; one=tab1 Sec-WebSocket-Key: aaaaaaaaqoOk/3z+aaaaaaaa Sec-WebSocket-Extensions: permessage-deflate; client_max_window_bits 
Sec-WebSocket-Protocol: tty

response

HTTP/1.1 101 Switching Protocols Server: nginx Date: Sat, 28 Aug 2021 16:51:11 GMT Connection: upgrade Upgrade: WebSocket Sec-WebSocket-Accept: aaaaaaaaDWIMhZ8VeZoxaaaaaaaa Sec-WebSocket-Protocol: tty

This time, there was no content!

/webterminal/ request:

GET /webterminal/ HTTP/1.1 Host: tower:5000 Connection: keep-alive Pragma: no-cache Cache-Control: no-cache Upgrade-Insecure-Requests: 1 User-Agent: Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/92.0.4515.159 Safari/537.36 Accept: text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.9 Referer: http://tower:5000/Docker Accept-Encoding: gzip, deflate Accept-Language: de-DE,de;q=0.9,en-US;q=0.8,en;q=0.7 Cookie: users_view=user1; db-box2=2%3B0%3B1; test.sdd=short; test.sdb=short; test.sdc=short; diskio=diskio; ca_startupButton=topperforming; port_select=eth1; unraid_11111111621fb6eace5f11d511111111=11111111365ea67534cf76a011111111; ca_dockerSearchFlag=false; ca_searchActive=true; ca_categoryName=undefined; ca_installMulti=false; ca_categoryText=Search%20for%20webdav; ca_sortIcon=true; ca_filter=webdav; ca_categories_enabled=%5Bnull%2C%22installed_apps%22%2C%22inst_docker%22%2C%22inst_plugins%22%2C%22previous_apps%22%2C%22prev_docker%22%2C%22prev_plugins%22%2C%22onlynew%22%2C%22new%22%2C%22random%22%2C%22topperforming%22%2C%22trending%22%2C%22Backup%3A%22%2C%22Cloud%3A%22%2C%22Network%3A%22%2C%22Network%3AFTP%22%2C%22Network%3AWeb%22%2C%22Network%3AOther%22%2C%22Plugins%3A%22%2C%22Productivity%3A%22%2C%22Tools%3A%22%2C%22Tools%3AUtilities%22%2C%22All%22%2C%22repos%22%2Cnull%2Cnull%2Cnull%2Cnull%2Cnull%2Cnull%5D; ca_selectedMenu=All; ca_data=%7B%22docker%22%3A%22%22%2C%22section%22%3A%22AppStore%22%2C%22selected_category%22%3A%22%22%2C%22subcategory%22%3A%22%22%2C%22selected_subcategory%22%3A%22%22%2C%22selected%22%3A%22%7B%5C%22docker%5C%22%3A%5B%5D%2C%5C%22plugin%5C%22%3A%5B%5D%2C%5C%22deletePaths%5C%22%3A%5B%5D%7D%22%2C%22lastUpdated%22%3A0%2C%22nextpage%22%3A0%2C%22prevpage%22%3A0%2C%22currentpage%22%3A1%2C%22searchFlag%22%3Atrue%2C%22searchActive%22%3Atrue%2C%22previousAppsSection%22%3A%22%22%7D; col=1; dir=0; docker_listview_mode=basic; one=tab1

response:

HTTP/1.1 200 OK Server: nginx Date: Sat, 28 Aug 2021 16:51:11 GMT Content-Type: text/html Content-Length: 112878 Connection: keep-alive content-encoding: gzip

content:

<!DOCTYPE html><html lang="en"><head><meta charset="UTF-8"><meta http-equiv="X-UA-Compatible" content="IE=edge,chrome=1"><title>ttyd - Terminal</title> ...

/webterminal/token request

GET /webterminal/token HTTP/1.1 Host: tower:5000 Connection: keep-alive Pragma: no-cache Cache-Control: no-cache User-Agent: Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/92.0.4515.159 Safari/537.36 Accept: */* Referer: http://tower:5000/webterminal/ Accept-Encoding: gzip, deflate Accept-Language: de-DE,de;q=0.9,en-US;q=0.8,en;q=0.7 Cookie: users_view=user1; db-box2=2%3B0%3B1; test.sdd=short; test.sdb=short; test.sdc=short; diskio=diskio; ca_startupButton=topperforming; port_select=eth1; unraid_11111111621fb6eace5f11d511111111=11111111365ea67534cf76a011111111; ca_dockerSearchFlag=false; ca_searchActive=true; ca_categoryName=undefined; ca_installMulti=false; ca_categoryText=Search%20for%20webdav; ca_sortIcon=true; ca_filter=webdav; ca_categories_enabled=%5Bnull%2C%22installed_apps%22%2C%22inst_docker%22%2C%22inst_plugins%22%2C%22previous_apps%22%2C%22prev_docker%22%2C%22prev_plugins%22%2C%22onlynew%22%2C%22new%22%2C%22random%22%2C%22topperforming%22%2C%22trending%22%2C%22Backup%3A%22%2C%22Cloud%3A%22%2C%22Network%3A%22%2C%22Network%3AFTP%22%2C%22Network%3AWeb%22%2C%22Network%3AOther%22%2C%22Plugins%3A%22%2C%22Productivity%3A%22%2C%22Tools%3A%22%2C%22Tools%3AUtilities%22%2C%22All%22%2C%22repos%22%2Cnull%2Cnull%2Cnull%2Cnull%2Cnull%2Cnull%5D; ca_selectedMenu=All; ca_data=%7B%22docker%22%3A%22%22%2C%22section%22%3A%22AppStore%22%2C%22selected_category%22%3A%22%22%2C%22subcategory%22%3A%22%22%2C%22selected_subcategory%22%3A%22%22%2C%22selected%22%3A%22%7B%5C%22docker%5C%22%3A%5B%5D%2C%5C%22plugin%5C%22%3A%5B%5D%2C%5C%22deletePaths%5C%22%3A%5B%5D%7D%22%2C%22lastUpdated%22%3A0%2C%22nextpage%22%3A0%2C%22prevpage%22%3A0%2C%22currentpage%22%3A1%2C%22searchFlag%22%3Atrue%2C%22searchActive%22%3Atrue%2C%22previousAppsSection%22%3A%22%22%7D; col=1; dir=0; docker_listview_mode=basic; one=tab1

response

HTTP/1.1 200 OK Server: nginx Date: Sat, 28 Aug 2021 16:51:11 GMT Content-Type: application/json;charset=utf-8 Content-Length: 13 Connection: keep-alive

content:

{"token": ""}

EDIT: OK, I had this bug again. This time the Shares and Main tabs were open in parallel while the Terminal reloaded. After closing the Main tab, it stopped. This time I am leaving only the Main tab open to verify the connection to this page.

-

30 minutes ago, pervin_1 said:

My understanding containers are not aware that /tmp is not real "Ram Disk". So the writes go to SSD instead.

Correct.

-

8 hours ago, pervin_1 said:

Nextcloud was in the tmp folder. Added extra parameter to mount the tmp folder in RAM disk ( not sure if you script handles the tmp in docker containers ).

My script covers only /docker/containers. Everything that happens inside the container isn't covered, as it's in the /docker/overlay2 or /docker/image/btrfs path. So yes, it was a good step to add a RAM disk path for the /tmp folder of Nextcloud.

8 hours ago, pervin_1 said:

The OnlyOffice, mainly writes go to /run/postgresql every one minute or so

This is something which I would not touch. PostgreSQL is a database. It contains important data which shouldn't be in the RAM. Note: if you link a container's /tmp path to a RAM Disk, all data inside this path will be deleted on server reboot.

Note: Using /tmp as a RAM disk is the default behavior of Unraid, Debian and Ubuntu. It seems not to be the default for Alpine, but as such popular distributions use RAM disks for /tmp, I think the application developers do not store important data in /tmp.

-

14 hours ago, pervin_1 said:

Does it mean I have some other dockers in the appfolder writing something ( besides status and log files ) to my cash drives,

Yes. Execute this command multiple times and check which files are updated frequently:

find /var/lib/docker -type f -print0 | xargs -0 stat --format '%Y :%y %n' | sort -nr | cut -d: -f2- | head -n30
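If you want to reuse that check for other folders, here is a minimal sketch of the same idea as a function. It is a variant, not the command above: it uses GNU find's -printf instead of stat, and the function name and its two parameters are only assumptions for illustration.

```shell
#!/bin/bash
# recent_files DIR [COUNT]: print the COUNT most recently modified files
# below DIR, newest first, as "<date> <time> <path>".
# Assumption: GNU find is available (needed for -printf).
recent_files() {
  local dir=$1 count=${2:-30}
  # %T@ is the mtime in epoch seconds and is used only as the sort key;
  # the readable date and the path follow it on the same line.
  find "$dir" -type f -printf '%T@ %TY-%Tm-%Td %TH:%TM %p\n' 2>/dev/null \
    | sort -nr \
    | cut -d' ' -f2- \
    | head -n "$count"
}
```

Calling `recent_files /var/lib/docker 30` a few times in a row should show the same kind of list, so files that keep moving to the top are the ones being written frequently.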

Execute this to find out which folder name belongs to which container:

csv="CONTAINER ID;NAME;SUBVOLUME\n"; for f in /var/lib/docker/image/*/layerdb/mounts/*/mount-id; do sub=$(cat $f); id=$(dirname $f | xargs basename | cut -c 1-12); csv+="$id;" csv+=$(docker ps --format "{{.Names}}" -f "id=$id")";" csv+="/var/lib/docker/.../$sub\n"; done; echo -e $csv | column -t -s';'

14 hours ago, boomam said:

Let me know if you want/think some diagnostic logs would help diagnose.

Please post them (and the time when the pool stopped working).

-

5 hours ago, ungeek67 said:

/mnt/cache/system/docker/docker/containers

/var/lib/docker/containers

I see the same exact same events on both

Yes, this drove me crazy as well. Don't ask me why, but because one path mounts to the other, both return the same activity results from the RAM-Disk although I would expect that /mnt/cache reflects the SSD content?!

The only way to see the real traffic on the SSD, is to mount the parent dir:

mkdir /var/lib/docker_test
mount --bind /var/lib/docker /var/lib/docker_test

Now you can monitor /var/lib/docker_test and you see the difference. That's why I needed to use the same trick to create a backup every 30 minutes. It took multiple days to find that out 😅

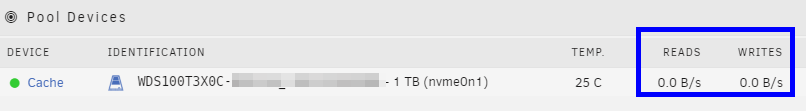

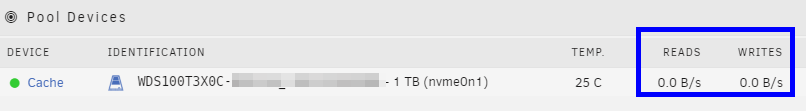

Screenshot:

If you use the docker.img it is easier. It has the correct timestamp every 30 minutes. 🤷‍♂️

PS: You can see the higher write activity on your SSD in the Main tab if you wait for the 30-minute backup (xx:00 and xx:30).

Unmount and remove the test dir when you have finished your tests:

umount /var/lib/docker_test
rmdir /var/lib/docker_test

-

8 hours ago, boomam said:

I'd have to look into it closer

I did. It was not as easy as I thought, but finally I was successful. At first I thought I could open two terminals and watch for smartctl and hdparm processes (which Unraid uses to set standby):

while true; do pid=$(pgrep 'smartctl' | head -1); if [[ -n "$pid" ]]; then ps -p "$pid" -o args && strace -v -t -p "$pid"; fi; done

while true; do pid=$(pgrep 'hdparm' | head -1); if [[ -n "$pid" ]]; then ps -p "$pid" -o args && strace -v -t -p "$pid"; fi; done

But I found out that some of the processes were too fast to monitor. So I changed the source code of hdparm and smartctl and added a sleep time of 1 second to both apps (Trick 17, we say in Germany ^^). Then I used this command to watch for the processes:

while true; do for pid in $(pgrep 'smartctl|hdparm'); do if [[ $lastcmd != $cmd ]] || [[ $lastpid != $pid ]]; then cmd=$(ps -p "$pid" -o args); echo $cmd "($pid)"; lastpid=$pid; lastcmd=$cmd; fi; done; done

After that I pressed the spin down icon of an HDD which returned:

COMMAND /usr/sbin/hdparm -y /dev/sdb (5766)

After the disk spun down, Unraid started to spam the following command every second:

COMMAND /usr/sbin/hdparm -C /dev/sdb (5966)

I think this lets Unraid's WebGUI update the icon as quickly as possible when a process wakes up the disk.

Then I pressed the spin up icon which returns this:

COMMAND /usr/sbin/hdparm -S0 /dev/sdb (27296)

And several seconds later, after the disk spun up, this command appeared (Unraid checks SMART values):

COMMAND /usr/bin/php /usr/local/sbin/smartctl_type disk1 -A (28152)
COMMAND /usr/sbin/smartctl -A /dev/sdb (28155)

The next step was to click on the spin down icon of the SSD... but nothing happened. So this icon has no function. Buuh ^^

Now I set my Default spin down delay to 15 minutes and waited... and then this appeared:

COMMAND /usr/sbin/hdparm -y /dev/sdb (5826)
COMMAND /usr/sbin/hdparm -y /dev/sde (6203)
COMMAND /usr/sbin/hdparm -y /dev/sdc (6204)

And Unraid is spamming again:

COMMAND /usr/sbin/hdparm -C /dev/sde (6465)
COMMAND /usr/sbin/hdparm -C /dev/sdb (6555)
COMMAND /usr/sbin/hdparm -C /dev/sdc (6643)

But as I thought.. no command mentions /dev/sdd, which is my SATA SSD. So Unraid never sends any standby commands to your SSD.

I remember that one of the Unraid devs said in the forums that SSDs do not save a measurable amount of energy in standby state, so they did not implement equivalent commands.

Conclusion: As you did not change any setting which covers your SSDs' power management and as they are working now, your problem should be something else.
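If you want to check the power state of a disk yourself instead of watching for the hdparm -C calls, a small helper like the following could be used. This is only a sketch: the function name is made up, and it assumes the usual hdparm -C output with a " drive state is:  <state>" line.

```shell
#!/bin/bash
# drive_state: read `hdparm -C` output on stdin and print only the state,
# for example "standby" or "active/idle".
# Assumption: the output contains a line " drive state is:  <state>".
drive_state() {
  sed -n 's/^[[:space:]]*drive state is:[[:space:]]*//p'
}

# Example (needs root and a real disk, so it is left commented out):
# hdparm -C /dev/sdb | drive_state
```

For a disk that was spun down with hdparm -y this should print "standby". An SSD that never receives a standby command would be expected to stay in "active/idle".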

-

On 8/22/2021 at 7:59 PM, boomam said:

so setting it to 'never' should help

As far as I know Unraid does not send any sleep commands to non rotational disks. So "never" should only touch HDDs.

-

30 minutes ago, boomam said:

saying that the script affects the drives sleep mode

This was only a guess. Maybe the problem lies somewhere else?! As long as nobody else has this problem and it isn't verified, it does not make sense to warn everyone. So far you are the only one who has had this problem. And as I said, if it is related to the power management, it can happen at any time, not only because of this modification.

30 minutes ago, boomam said:

Whilst your script doesn't directly affect that, it does dramatically increase the likelihood of it

If this was your problem, then yes, but by the same reasoning Unraid would need to throw a warning if you disable Docker or if you create multiple pools or...?!

PS: Wait a week or so. If it does not happen again, revert your sleep setting and we will see if this was the reason. By the way: How did you disable sleep? For SATA these link power management policies exist:

max_performance
medium_power
med_power_with_dipm
min_power

Which was active in your setup?
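One way to answer that question is to read the policy of each SATA host from sysfs. A minimal sketch, assuming the standard Linux path /sys/class/scsi_host/host*/link_power_management_policy; the function name and the optional base directory parameter are only there for illustration and testing.

```shell
#!/bin/bash
# show_lpm_policies [BASE]: print the link power management policy of every
# SATA host below BASE (default: /sys/class/scsi_host).
# BASE is a parameter only so the function can be tried on a test directory.
show_lpm_policies() {
  local base=${1:-/sys/class/scsi_host} f
  for f in "$base"/host*/link_power_management_policy; do
    [ -r "$f" ] || continue   # host without this attribute, or no match at all
    printf '%s: %s\n' "$(basename "$(dirname "$f")")" "$(cat "$f")"
  done
}
```

Running `show_lpm_policies` without arguments should print one line per host, for example "host0: max_performance".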

-

48 minutes ago, boomam said:

For others, it could be worth updating the original post

Your hardware problem has nothing to do with this modification. If it is related to the power management of your SSD, it would even happen if you disable docker.

-

3 hours ago, boomam said:

both my cache drives have gone offline, at the same time....investigating....

Sounds like you are using ASPM (NVMe) or ALPM (SATA). This means that, because of my code, your SSD entered a sleep state for the very first time, and this kills your BTRFS RAID (this should not happen; it points to an NVMe firmware or BIOS bug). Check your logs for errors.

-

5 hours ago, Sunsparc said:

What specifically needs to go in the script for the job? The entire contents of the paste or just a certain part?

I updated the above code. Now it automatically syncs the RAM-Disk every 30 minutes. You can change it in the Go file to a lower value if you like.

5 hours ago, boomam said:

Will this work if the docker.img is on a different drive?

For myself, '/mnt/cache-b/docker/docker.img" instead of "/mnt/cache/docker/docker.img"

The location of your docker.img is irrelevant. You can even use a docker directory, which is a method I recommend as it reduces write amplification.

-

I solved this issue as follows and successfully tested it in:

- Unraid 6.9.2

- Unraid 6.10.0-rc1

- Add this to /boot/config/go (by Config Editor Plugin):

-

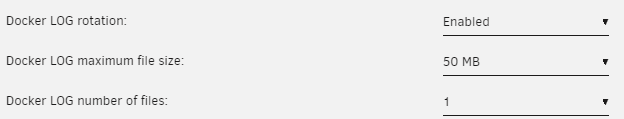

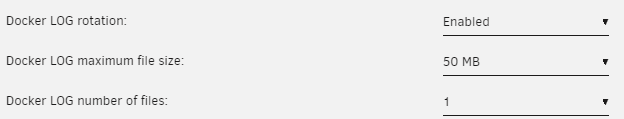

Optional: Limit the Docker LOG size to avoid using too much RAM:

- Reboot server

Notes:

- With this change, /var/lib/docker/containers, which contains only status and log files, becomes a RAM-Disk. This avoids wearing out your SSD and allows the SSD to sleep permanently (energy efficient)

- It automatically syncs the RAM-Disk every 30 minutes to your default appdata location (for server crash / power-loss scenarios). If container logs are important to you, feel free to change the value of "$sync_interval_minutes" in the above code to a smaller value to sync the RAM-Disk every x minutes.

- If you want to update Unraid OS, you should remove the change from the Go file until it's clear that this enhancement is still working/needed!

Your Reward:

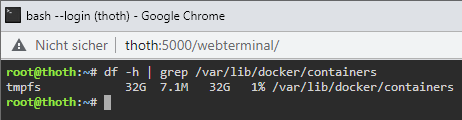

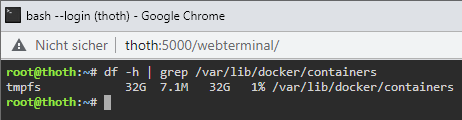

After you enabled the docker service you can check if the RAM-Disk has been created (and its usage):

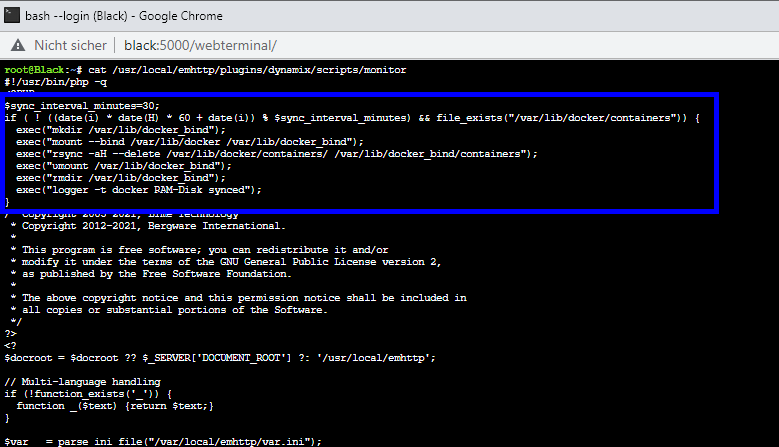

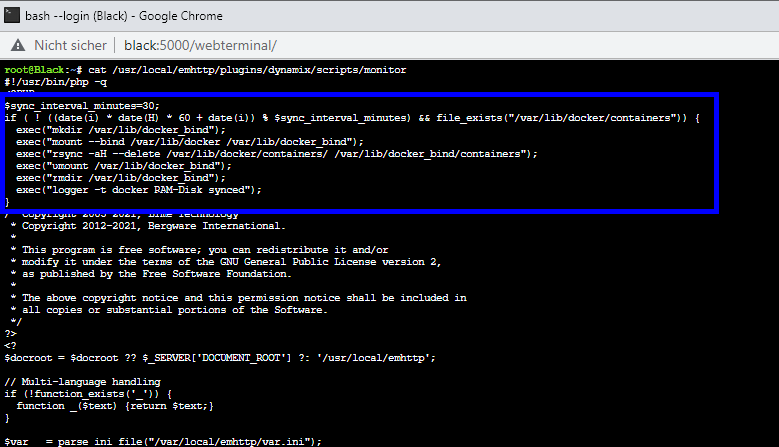

Screenshot of changes in /etc/rc.d/rc.docker

and /usr/local/emhttp/plugins/dynamix/scripts/monitor

-

So with Unraid 6.10 it should work by adding this to the Go file?

echo ":msg,contains,\"Router Advertisement from\"" stop > /etc/rsyslog.d/02-custom.conf
/etc/rc.d/rc.rsyslogd restart
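The escaped quotes in that echo are easy to get wrong, so here is a small sketch that writes the same filter line with printf and lets you try it on a scratch file first. The function name and its parameters are hypothetical; only the filter syntax and the file path come from the lines above.

```shell
#!/bin/bash
# write_drop_filter FILE TEXT: write an rsyslog property filter to FILE that
# discards every message containing TEXT.
# FILE and TEXT are illustrative parameters, the function name is made up.
write_drop_filter() {
  local file=$1 text=$2
  printf ':msg,contains,"%s" stop\n' "$text" > "$file"
}

# On the server this would be (left commented out, it changes system config):
# write_drop_filter /etc/rsyslog.d/02-custom.conf "Router Advertisement from"
# /etc/rc.d/rc.rsyslogd restart
```

After writing to a scratch file, `cat` should show exactly `:msg,contains,"Router Advertisement from" stop`, which is the line the echo above is meant to produce.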

-

17 hours ago, ich777 said:

only asking because if you've bought a key

I'm not sure if I really like to bind my keys to this moderator account.

PS: I really wonder why Limetech binds their license sales to a forum software which had serious loading problems in the last months. And I really wonder why Invision. Invision is the company which canceled all lifetime licenses with the release of version 4. And it's still a slow, expensive and buggy forum software compared to e.g. Xenforo (Froala = best editor).

-

-

23 minutes ago, ich777 said:

is then bound to your Forum account

Is it possible to unlink and relink with a different forum account?

-

22 minutes ago, ich777 said:

Without the My Servers plugin nothing is collected, it is only used for the Key management.

I think the main problem is that the release notes only describe what happens if a user wants to try out Unraid and buys the license afterwards. For me as a license owner it's still unclear what I need to do. Do I need to log in if I update to Unraid 6.10 and if yes, why? Does it change anything in the license key file?!

-

8 hours ago, limetech said:

Security Changes

It is now mandatory to define a root password. We also created a division in the Users page to distinguish root from other user names. The root UserEdit page includes a text box for pasting SSH authorized keys.

For new configurations, the flash share default export setting is No.

For all new user shares, the default export setting is No.

For new configurations, SMBv1 is disabled by default.

For new configurations, telnet, ssh, and ftp are disabled by default.

We removed certain strings from Diagnostics such as passwords found in the 'go' file.

👍

8 hours ago, limetech said:

Starting with this release, it will be necessary for a new user to either sign-in with existing forum credentials or sign-up, creating a new account via the UPC in order to download a Trial key. All key purchases and upgrades are also handled exclusively via the UPC.

Signing-in provides these benefits:

No more reliance on email and having to copy/paste key file URLs in order to install a license key - keys are delivered and installed automatically to your server.

Notification of critical security-related updates. In the event a serious security vulnerability has been discovered and patched, we will send out a notification to all email addresses associated with registered servers.

Ability to install the My Servers plugin (see below).

Posting privilege in a new set of My Servers forum boards.

I don't understand this part. Is it still possible to use a paid license without internet access or not? And why is it needed to have an account for security-related notifications? Why don't you simply use the e-mail address which was used to buy the license (or the newsletter)?! So I'm forced to use My Servers if I want to receive security notifications?!

And how is it possible to pull data from my server if I did not open any ports? You explained it through a reverse-proxy relay. This means a registered server has a 24/7 connection to the relay?!

QNAP and Ubiquiti proved that it is a bad idea to use such services, and having a random DDNS URL with a random port is only security through obscurity. This is why I don't plan to use My Servers.

-

18 hours ago, boomam said:

i'm a little confused why i'm still seeing an amount of IO

Your containers are reading from / writing to files in /mnt/cache/appdata

-

7 hours ago, boomam said:

put in 2x 250Gb SSDs to act as a second cache pool, strictly for the docker.img file.

I would use one SSD, format it as XFS and set docker to path mode. That reduces write amplification even further. Disabling the healthcheck on all containers is an additional easy step.

-

5 minutes ago, TexasUnraid said:

but for some reason the system will lock up when I did that until writes were flushed at times so had to revert back.

Did you flush it manually?! Linux is caching all the time, so it would be strange that it causes a lock up.

-

Another idea. Are you using "powertop --auto-tune"? If yes, it reduces "vm.dirty_expire_centisecs" to "1500" = 15 seconds. You can check your settings as follows:

sysctl -a | grep dirty

The default value is "3000". You could raise it to "12000" (2 minutes). That way, all writes to the disks are cached in RAM for up to 2 minutes:

sysctl vm.dirty_expire_centisecs=12000

Of course you should have a UPS to avoid data loss through a power outage.

I'm using "6000" and raised vm.dirty_ratio to "50", so cached writes can use up to 50% of my free RAM.
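Since the unit is hundredths of a second, it is easy to mix up the numbers. A small sketch that converts the values and shows the current setting in seconds; both function names are made up for this example, and the /proc path is the standard Linux location of this sysctl.

```shell
#!/bin/bash
# centisecs_to_seconds N: convert a value such as vm.dirty_expire_centisecs
# (hundredths of a second) to whole seconds.
centisecs_to_seconds() {
  echo $(( $1 / 100 ))
}

# show_dirty_expire: print the current setting together with its value in
# seconds (Linux only, reads /proc/sys/vm/dirty_expire_centisecs).
show_dirty_expire() {
  local cs
  cs=$(cat /proc/sys/vm/dirty_expire_centisecs) || return 1
  echo "vm.dirty_expire_centisecs=$cs ($(centisecs_to_seconds "$cs") s)"
}
```

So "1500" is 15 seconds, "3000" is 30 seconds, "6000" is one minute and "12000" is two minutes.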

-

51 minutes ago, TexasUnraid said:

database

So it's a file based database like SQLite? Any support for external databases like MySQL?

Mover does not work if Sharename contains a whitespace and cache is set to Prefer

in Stable Releases

Posted

Changed Status to Solved