Posts: 408

Everything posted by Leifgg

-

First Build, please review (SuperMicro, Xeon, Fractal 804)

Leifgg replied to nukeman's topic in Unraid Compulsive Design

If you look in my signature you will find a similar build. I would say that first you need to decide the maximum number of disks you are going to use. The motherboard has 6 SATA ports, so if you need more than that in the future you might use another motherboard or just add a PCI card with more ports later. The number of disks is also important when you select a PSU. The fans in the 804 have three fixed settings and are powered directly from the PSU, so you will not get any fan speed monitoring. I have modified this with 3 separate fan regulators where I set a fixed speed but still do fan speed monitoring through the motherboard. Even though I have a cache pool with 2 disks, I believe you would be fine with just one; you can always upgrade later. I am using Samsung 840 EVO and that was not the best decision I ever made. https://lime-technology.com/forum/index.php?topic=27855.msg366396#msg366396

-

I am using 2 x 840 EVO as cache drives in my unRAID box and this specific drive has some “issues” that might be good to know about. The initial firmware had problems with read performance, so Samsung released something they call Performance Restoration Software to fix it. The other issue you can find with these drives is the write speed for large amounts of data. The drive gets rather hot during writes and there is built-in throttling to prevent overheating. The result is that the transfer speed can drop to almost nothing until the drive cools down again. Personally I would have chosen another drive, at least as a cache drive, if I had understood the consequences of the throttling. However, I have difficulties understanding how what I mentioned above could cause the problems you are having with your laptop.

-

I am using the Docker app and have 4.1 TB of data (76000 files), with 1.6 TB remaining to back up (around 1100 files). Data de-duplication = Automatic, Compression = On and Encryption enabled. Works fine so far.

-

Just to be on the safe side... Do you have the possibility to check the power consumption of the server at full load? (I think you are ok.) Remember that the rated capacity is with new batteries, so some margin is good. It looks to be from the same series that I am using, and that one works just fine.

-

unRAID supports APC and I wouldn’t expect this UPS to work out of the box but I don’t know for sure. Maybe you can contact the manufacturer and/or look in the manual to see if the protocol is compatible with APC UPS? The size of the UPS is probably capable of keeping your server running at least ½ to 1 hour but this depends on the load.

-

Docker and appdata folder - safe to use folders with leading periods?

Leifgg replied to kaiguy's topic in Docker Containers

You can make a share called appdata on your cache drive and set it to “Cache only”; that way the mover leaves it where it is. The same goes for all folders you make under that share. You can change the SMB settings for the appdata share if you would like to hide it from your Mac.

-

Options other than snap to copy things via SATA to my server?

Leifgg replied to gaikokujinkyofusho's topic in General Support

Not done this myself but found this in the Wiki: http://lime-technology.com/wiki/index.php/Copy_files_from_a_NTFS_drive This is probably written for unRAID5. If you need the ntfs-3g driver, it's different for unRAID5 than for unRAID6 (32-bit vs 64-bit OS).

-

This is my setup: http://lime-technology.com/forum/index.php?topic=38554.msg358683#msg358683 There are many different ways to do backups and my setup is very basic and it will probably change when I have some spare time.

-

Have a look here: http://lime-technology.com/forum/index.php?topic=38554.msg358683#msg358683 I use rsync for backup and you can probably get some hints on how to do it if you want to go that route.

-

One option is to use rsync to transfer between your unRAID servers. Assuming you have a Gbit network it would still take a number of days to do this! Syncback would take at least twice the time since everything needs to go through your PC and back again… Another tool to use could be TeraCopy if you would still like to use your PC, and you probably will for the Flexraid server unless you can use rsync on that server as well? When I did a preclear of my 6 TB drives (3 passes) it took 100 hours. You can preclear several drives at the same time, but the bandwidth of the disk controller might influence the speed as well as the disk speed.
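
To get a feel for the "number of days" figure, a back-of-the-envelope estimate can be scripted. This is only a sketch: the 20 TB data size and the 100 MB/s sustained rate over Gbit Ethernet are assumptions made for illustration, not numbers from this thread, and `estimate_hours` is a made-up helper name.

```shell
#!/bin/bash
# Rough transfer-time estimate; all figures are illustrative assumptions.

# usage: estimate_hours <size_in_TB> <sustained_speed_in_MB_per_s>
estimate_hours() {
    local size_tb=$1 speed_mbs=$2
    # 1 TB = 1,000,000 MB (decimal units); integer division rounds down
    echo $(( size_tb * 1000000 / speed_mbs / 3600 ))
}

# Example: 20 TB at an assumed 100 MB/s sustained over Gbit Ethernet
echo "About $(estimate_hours 20 100) hours"
```

With those assumed numbers the copy takes a bit over two days of continuous transfer, and routing everything through a PC roughly doubles the data that has to cross the network.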

-

Silverstone DS380 / ASRock C2550D4I Build

Leifgg replied to Jomp's topic in Unraid Compulsive Design

There is a firmware update for the Marvell 9230 to disable the RAID function. According to ASRock this should make the 9230 “more stable”. I am using the C2750D4I in my backup server, but the current setup where I use the 9230 ports has only been running for a few weeks, so far without problems. Initially I used the board in my main server with the old BIOS (2.50) and without the firmware update for the 9230 and that was, to put it mildly, painful! Note that this is for the C2750D4I and I am not sure if it applies to the C2550D4I board as well.

-

Looking to buy a few new drives, WD Red vs Red Pro

Leifgg replied to xdriver's topic in General Support

Another brand to look at could be HGST and their Deskstar NAS drives. I haven’t used them myself, but from what I have read they have a very low failure rate. The price is about the same as the Red. It would be interesting to hear from someone who has some hands-on experience with these.

-

The method using hard links looks interesting but honestly I never got that far understanding what it actually meant and what it could be used for.... I guess I have some reading to do now that I get a better feel for how it can be used. Thanks!

-

Never checked that before but found a link for you indicating that some checking is (or can be) done: http://unix.stackexchange.com/questions/30970/does-rsync-verify-files-copied-between-two-local-drives

-

Ok… first, the script “CompleteRemoteBackup.txt” (rename to .sh) does a full backup of shares from my main server to the backup server. You need to make it executable:

    chmod +x file.name

I have made a folder on the USB flash drive where I store all my scripts:

    /boot/scripts

To have the script run every night you need to create a cron job (task). The file “CronEntryCompleteRemoteBackup.txt” (remove .txt) contains info on when to run the job and what to do. To make this persistent (so it survives a reboot of the main server) you need an entry that creates this job at boot. The following lines should be placed in your go file:

    # Setup cron job for backup to remote share
    cat /boot/scripts/CronEntryCompleteRemoteBackup >> /var/spool/cron/crontabs/root

I am running the backups as the root user, so the cron jobs are stored in the directory for that user. As you can see in the backup script, I am also using user root on my backup server, which is on 192.168.1.102.

One difficulty here is that connecting to the backup server should be done via SSH. This means that you need to generate the keys and copy them to the remote server. I am running unRAID6 so I don’t know how this (SSH) would work on unRAID5 (if you are using that). Here is a tutorial, follow steps 1, 2 and 3: http://www.thegeekstuff.com/2008/11/3-steps-to-perform-ssh-login-without-password-using-ssh-keygen-ssh-copy-id/

You will find the keys stored in the home folder for user root in /root/.ssh. Sadly this location doesn’t survive a reboot either, so you now need to copy the content of this folder to your USB drive. I have chosen to make a folder /boot/scripts/my_ssh and copied them there. The script “SSHInstall.txt” (rename to .sh and make executable) copies the files back again after reboot if you add one more entry in the go file:

    # Install my ssh keys and known remote hosts
    /boot/scripts/SSHInstall.sh

When playing with Rsync remember that this is a powerful tool! Always test first with test data and also use the dry run option until you are sure about what is going on. There are a lot of guides on Rsync and it has a tremendous number of options to play with.

Also a warning on the option --delete. This will delete files on the destination if they no longer exist on the source. That’s normally ok, but what Rsync does is that it first builds a file list of what to delete and what to copy (and what to leave untouched because it does not need to be copied), then it starts the actual job by first deleting files and then copying new files. This means that if you interrupt the script you can end up with missing files on the destination, since it was interrupted before the new ones got copied.

Have fun!

Leif

Attachments: CompleteRemoteBackup.txt, CronEntryCompleteRemoteBackup.txt, SSHInstall.txt
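
The persistence steps described in this post could be sketched roughly as follows. This is not the content of the attached SSHInstall.txt or of the go file; it is a minimal illustration that assumes the saved keys live in /boot/scripts/my_ssh and the cron entry in /boot/scripts/CronEntryCompleteRemoteBackup, as described in the post. The function names `restore_ssh_keys` and `add_cron_entry` are made up for this example, and the duplicate check is an addition that the plain `cat >>` line does not have.

```shell
#!/bin/bash
# Hypothetical helpers; names are illustrative only, not the attached scripts.

# Copy saved SSH keys from the flash drive back into a user's .ssh folder.
# usage: restore_ssh_keys <saved_dir> <ssh_dir>
restore_ssh_keys() {
    local saved_dir=$1 ssh_dir=$2
    mkdir -p "$ssh_dir"
    cp "$saved_dir"/* "$ssh_dir"/
    # ssh refuses private keys that are readable by other users
    chmod 700 "$ssh_dir"
    chmod 600 "$ssh_dir"/*
}

# Append a cron entry to a crontab file unless it is already there.
# usage: add_cron_entry <entry_file> <crontab_file>
add_cron_entry() {
    local entry_file=$1 crontab_file=$2
    touch "$crontab_file"
    # -F fixed strings, -x whole-line match, -f read patterns from the entry file
    if ! grep -qxFf "$entry_file" "$crontab_file"; then
        cat "$entry_file" >> "$crontab_file"
    fi
}

# Possible calls from the go file (not run here):
#   restore_ssh_keys /boot/scripts/my_ssh /root/.ssh
#   add_cron_entry /boot/scripts/CronEntryCompleteRemoteBackup /var/spool/cron/crontabs/root
```

The duplicate check only matters if the helper is run more than once between reboots; at boot the crontab starts fresh, so a plain append behaves the same.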

-

My setup is that I do a scheduled backup locally on my main server every night with Rsync (since I have enough space). I also do a scheduled backup every night to my backup server, also with the help of Rsync. In addition to that I am running CrashPlan on the main server. Once it has been configured from my Windows PC it runs unattended. Pls let me know if you need some Rsync examples …
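
As a starting point, a nightly Rsync job of this kind might look something like the sketch below. It is an assumption-laden example, not the script actually used here: `backup_share` is a made-up function name, and the share name and the /mnt/user/Backup destination are placeholders. The `-a`, `-v`, `--dry-run` and `--delete` options are standard rsync options.

```shell
#!/bin/bash
# Hypothetical sketch: the function name, share and paths are placeholders.

# Mirror one directory to a destination with rsync.
# usage: backup_share <source_dir> <destination> [extra rsync options...]
# Set RSYNC to another command (for example "echo") to see what would be run.
backup_share() {
    local src=$1 dest=$2
    shift 2
    # -a keeps permissions, times and symlinks; -v lists each file.
    # The trailing slash on the source copies its contents, not the folder itself.
    ${RSYNC:-rsync} -av "$@" "${src%/}/" "$dest"
}

# Possible calls (not run here):
#   local copy, dry run first:
#     backup_share /mnt/user/Pictures /mnt/user/Backup/Pictures --dry-run --delete
#   remote copy over SSH to the backup server:
#     backup_share /mnt/user/Pictures root@192.168.1.102:/mnt/user/Backup/Pictures --delete
```

Running with `--dry-run` first shows what would be copied or deleted without touching anything. rsync also offers `--delete-after`, which postpones deletions until the transfer has finished.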

-

Have ordered the Fractal Node 804 and will do a build next week, initially with 8 drives and two SSDs. From what I understand it’s possible to install two additional 3.5” drives without any problems.

-

The CrashPlan docker doesn’t have any UI! Instead you set up the CrashPlan client on a PC (Windows 8 in my case) and configure it so it can connect to your server. You basically do all your setup on your PC and only need to specify the config folder and the data share (as you have done). You might consider changing your data share to:

    /data -> /mnt/user/

This gives you the possibility to back up other shares as well, not only Pictures. You do the setup in the client later. There are changes needed in the config folders on both the server and client side to get it to work. Have a look at this thread http://lime-technology.com/forum/index.php?topic=33864.0 as a starter….

-

Probably you can back up open files; from what I can see CrashPlan supports that, but I am not sure why you should… I have the same configuration and have an Rsync script that I run manually to copy the files from the cache drive to a folder on the array, and then I have CrashPlan back up that folder. However, I always shut down my VMs and Dockers before running the Rsync script!

-

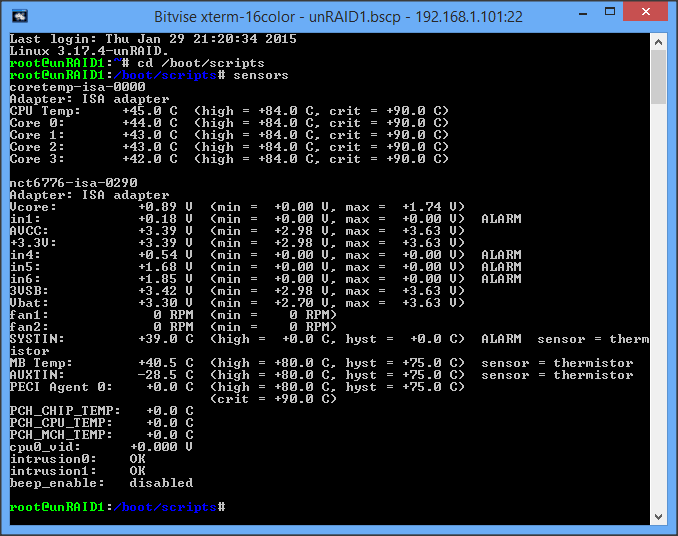

This one works for me.

sensors.conf file:

    # sensor configuration for unRAID1
    chip "coretemp-isa-0000"
    label temp1 "CPU Temp"
    chip "nct6776-isa-0290"
    label temp2 "MB Temp"

go file entry:

    # Load drivers for temperature monitoring
    modprobe coretemp
    modprobe nct6775
    /usr/bin/sensors -s