eek (Members, 69 posts)

Everything posted by eek

-

Kaldek is correct that the real attack vector isn't going to be attacks from the internet but rather malicious code hidden within existing plugins. Thankfully, most of these plugins are small Python scripts that can easily be checked, but some checks are going to be needed somewhere to stop malicious changes being made. There probably also needs to be an education effort (even if it's just a warning banner) saying that Dockers and VMs should 1) only come from trusted sources and 2) only be granted access to the folders they need, and absolutely NOTHING else.
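As an illustration of the "only grant access to the folders they need" rule, here is a minimal Python sketch that flags container volume mappings whose host path falls outside an allowlist. The `host:container[:mode]` mapping format (as used by `docker run -v`), the function name and the example paths are assumptions for illustration; this is not how Unraid's templates are actually checked.

```python
import posixpath
from pathlib import PurePosixPath


def disallowed_mappings(mappings, allowed_roots):
    """Return host paths from 'host:container[:mode]' volume mappings that do
    not sit inside any allowed root folder (hypothetical policy check)."""
    roots = [PurePosixPath(posixpath.normpath(r)) for r in allowed_roots]
    bad = []
    for mapping in mappings:
        # Normalise first so '..' segments cannot escape an allowed folder.
        host = PurePosixPath(posixpath.normpath(mapping.split(":", 1)[0]))
        if not any(host == root or root in host.parents for root in roots):
            bad.append(str(host))
    return bad


if __name__ == "__main__":
    mappings = [
        "/mnt/user/photos:/photos:ro",
        "/mnt/user/appdata/s3backup:/config",
        "/:/host",  # the whole filesystem: exactly what should be refused
    ]
    allowed = ["/mnt/user/photos", "/mnt/user/appdata/s3backup"]
    print(disallowed_mappings(mappings, allowed))  # ['/']
```

Normalising with `posixpath.normpath` matters because a mapping such as `/mnt/user/photos/../appdata` would otherwise look as if it lived under the allowed photos folder.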

-

Not the biggest issue, but potentially a problem if you aren't paying attention: I almost pressed the reboot button even though the server was in the closing stages of rebuilding the parity disk (14TB drives take far longer to rebuild than 4TB drives). Ideally, whatever logic displays the banner should check whether a parity operation is in progress and either stop the banner appearing or at least block a reboot until no parity operation is running. (I don't know if that is already the case; I noticed that the parity operation hadn't finished before I pressed the reboot option.)
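A minimal Python sketch of the suggested guard: parse a `key=value` array-status text and refuse the reboot while a parity operation is running. The key names `mdResync` and `mdResyncPos`, and the rule "non-zero position means busy", are assumptions about where Unraid exposes resync progress (check your own system); `may_reboot` and the other function names are hypothetical.

```python
def parse_status(text):
    """Parse 'key=value' lines (surrounding quotes stripped) into a dict."""
    status = {}
    for line in text.splitlines():
        if "=" in line:
            key, value = line.split("=", 1)
            status[key.strip()] = value.strip().strip('"')
    return status


def parity_operation_running(status):
    # Assumption: a non-zero resync position means a check or rebuild is active.
    return int(status.get("mdResyncPos") or 0) > 0


def may_reboot(status_text):
    """Return (allowed, reason) for a hypothetical reboot-button handler."""
    if parity_operation_running(parse_status(status_text)):
        return False, "parity operation in progress, reboot blocked"
    return True, "ok"


if __name__ == "__main__":
    busy = 'mdResync="3907018532"\nmdResyncPos="3900000000"'
    idle = 'mdResync="0"\nmdResyncPos="0"'
    print(may_reboot(busy))  # (False, 'parity operation in progress, reboot blocked')
    print(may_reboot(idle))  # (True, 'ok')
```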

-

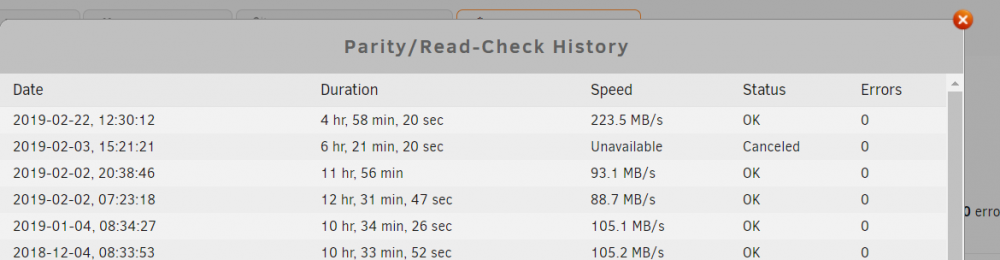

A minor issue (one I can live with if it's impossible to fix): the duration is reset to zero when you restart/unpause a check. This means you end up with inflated speeds like the one attached. I was in a meeting; otherwise I would have paused and restarted with a minute to go to really demonstrate the issue.
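The inflated figure follows if speed is computed as size divided by duration: when the duration restarts at zero on resume, only the last running segment is counted. A minimal Python sketch of the fix, assuming the reported speed is simply bytes checked over elapsed running time; the function name, the 4 TB size and the timings are illustrative, not Unraid's code or data.

```python
def average_speed_mb_s(size_bytes, segments, last_segment_only=False):
    """Average speed in MB/s (1 MB = 10**6 bytes) over (start, end) running
    segments in seconds; time spent paused between segments is excluded."""
    runs = segments[-1:] if last_segment_only else segments
    elapsed = sum(end - start for start, end in runs)
    return size_bytes / elapsed / 1e6


if __name__ == "__main__":
    size = 4 * 10**12                             # a 4 TB parity disk (example)
    segments = [(0, 30_000), (31_000, 31_060)]    # paused, resumed for the last minute
    print(round(average_speed_mb_s(size, segments), 1))        # 133.1
    print(round(average_speed_mb_s(size, segments, True), 1))  # 66666.7
```

Summing all running segments keeps the pause itself out of the average while still counting the work done before it.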

-

It's included in 6.7; see the release notes for rc1 above (Unraid is currently at rc4).

-

Yes, my mistake; I'm not at home to look at the portal. Without ZFS, and with btrfs really not ready for array use, it's not really a goer...

-

https://github.com/zfsonlinux/zfs-auto-snapshot/wiki/Samba has a nice outline of what is required to do this, with instructions for Debian. It doesn't look that difficult if the drives are configured for ZFS (as I suspect most people's arrays will be nowadays) and files are written directly to the array; cache drives would add a lot of additional complexity...
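Windows "Previous Versions" identifies snapshots by `@GMT-YYYY.MM.DD-HH.MM.SS` tokens, and Samba's `shadow_copy2` VFS module maps snapshot directory names to those tokens via its `shadow:format` option. The Python sketch below only illustrates that mapping; it assumes zfs-auto-snapshot's naming pattern `zfs-auto-snap_<label>-YYYY-MM-DD-HHMM` with UTC timestamps, and `gmt_token` is a hypothetical helper, not part of Samba or zfs-auto-snapshot.

```python
import re
from datetime import datetime

# Assumed naming pattern: zfs-auto-snap_<label>-YYYY-MM-DD-HHMM (UTC).
SNAP_RE = re.compile(
    r"^zfs-auto-snap_(?P<label>\w+)-(?P<stamp>\d{4}-\d{2}-\d{2}-\d{4})$"
)


def gmt_token(snapshot_name):
    """Map a snapshot name to its @GMT token, or None if it doesn't match."""
    m = SNAP_RE.match(snapshot_name)
    if m is None:
        return None
    ts = datetime.strptime(m.group("stamp"), "%Y-%m-%d-%H%M")
    return ts.strftime("@GMT-%Y.%m.%d-%H.%M.%S")


if __name__ == "__main__":
    print(gmt_token("zfs-auto-snap_hourly-2018-11-08-1317"))  # @GMT-2018.11.08-13.17.00
    print(gmt_token("manual-backup"))                         # None
```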

-

For those who won't have seen it yet, the new look and feel in 6.7 is a great improvement. One (very minor) suggestion would be to start paging at 24 apps rather than 25, as you currently end up with a lone app at the bottom of the screen.
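The arithmetic behind the suggestion, as a small Python sketch: the number of apps per row depends on screen width, so the column counts below are assumptions. A page of 25 leaves exactly one app on the last row for 3, 4, 6 or 8 columns, while 24 fills every one of those layouts.

```python
def apps_on_last_row(page_size, columns):
    """Apps on the final, partial row of a full page (0 means the row is full)."""
    return page_size % columns


if __name__ == "__main__":
    for columns in (3, 4, 6, 8):  # assumed grid widths
        print(columns, apps_on_last_row(25, columns), apps_on_last_row(24, columns))
    # 3 1 0
    # 4 1 0
    # 6 1 0
    # 8 1 0
```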

-

That's not a bug. Whilst I disagree with the approach Unraid uses*, the latest release in the next branch is 6.6.0-rc4.

* Personally, I think the next branch should contain all (appropriate) production releases as well as rc releases.

-

Just checked my log files, and I can confirm that cron isn't running: the scripts it should trigger aren't running. Diagnostics: tower-diagnostics-20181108-1318.zip
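One way to confirm whether cron is firing, independently of the scheduled scripts themselves, is a heartbeat: a cron entry that touches a file every few minutes (for example `*/5 * * * * touch /tmp/cron-heartbeat`), plus a check that the file is recent. A minimal Python sketch; the heartbeat path and the 10-minute threshold are hypothetical choices.

```python
import os
import time


def cron_heartbeat_ok(path, max_age_seconds=600, now=None):
    """True if the heartbeat file exists and was touched within max_age_seconds."""
    now = time.time() if now is None else now
    try:
        age = now - os.path.getmtime(path)
    except FileNotFoundError:
        return False  # cron has never touched the file
    return age <= max_age_seconds


if __name__ == "__main__":
    # Hypothetical file kept fresh by: */5 * * * * touch /tmp/cron-heartbeat
    print(cron_heartbeat_ok("/tmp/cron-heartbeat"))
```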

-

[6.6.0.rc4] The next branch doesn't announce the release of 6.6.0 final

eek commented on eek's report in Prereleases

Sorry, but public testing should be about ensuring more hardware combinations are tested than would otherwise be the case. Hence you do need many people testing the software, as ideally your test team should reflect your whole customer base. And regardless of that, I do visit the site: I visit it before installing any rc release (to make sure there isn't an obvious showstopper that makes installing it pointless) and again afterwards to see if there are any issues that could affect what I use the system for. The issue here is that three days after installing it (unless I see problems that I need to report), I have no need to revisit the forums...

-


And that argument is fine. However, it means that unless I had visited this forum, I would have continued to run 6.6.0-rc4 until the next branch offered 6.6.1-rc1 and my system prompted me to update (after all, rc releases have previously been the latest "test" release for months). And you can test a system without visiting this forum; the only reason to do so would be if something went wrong and you needed to report a bug. As I said, it's a slight annoyance. I just wanted to highlight the risk that, unless people are told about the final (general/production) release, when we get to 6.6.1-rc1 a lot of testers could be moving to it from 6.6.0-rc4 rather than from 6.6.0...

-

Not exactly a big issue, but the next branch of the update system has no record of 6.6.0 being released, so the first I knew about it was when I visited the forum. I know the differences between 6.6.0-rc4 and 6.6.0 are negligible, but it would be nice if the next branch included the final releases, so that testers move from 6.6.0-rc4 to 6.6.0 (final) and then to 6.6.1-rc1.
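A minimal Python sketch of why this matters, assuming the update check simply offers the newest release on the branch when it is newer than the installed one (an assumption about Unraid's logic). Release candidates sort before their final release, following the usual semantic-versioning convention; `version_key` and `offered_update` are hypothetical names.

```python
import re

# Accepts 6.6.0, 6.6.0-rc4, 6.6.0rc4 and 6.6.0.rc4.
VERSION_RE = re.compile(r"^(\d+)\.(\d+)\.(\d+)(?:[-.]?rc(\d+))?$")


def version_key(version):
    """Sort key in which an rc sorts before the final release it leads to."""
    m = VERSION_RE.match(version)
    if m is None:
        raise ValueError(f"unrecognised version: {version}")
    major, minor, patch, rc = m.groups()
    # Release candidates get (0, rc number); final releases get (1, 0).
    stage = (0, int(rc)) if rc is not None else (1, 0)
    return (int(major), int(minor), int(patch)) + stage


def offered_update(current, branch):
    """Newest release on the branch if it is newer than current, else None."""
    latest = max(branch, key=version_key)
    return latest if version_key(latest) > version_key(current) else None


if __name__ == "__main__":
    print(offered_update("6.6.0-rc4", ["6.6.0-rc3", "6.6.0-rc4"]))  # None
    print(offered_update("6.6.0-rc4", ["6.6.0-rc4", "6.6.0"]))      # 6.6.0
```

With an rc-only branch, a tester on 6.6.0-rc4 is offered nothing until 6.6.1-rc1 appears; with the final included, 6.6.0 is offered straight away.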

-

unRAID OS version 6.5.3-rc1 available - TESTERS NEEDED

eek replied to limetech's topic in Announcements

I've not currently got any VMs on the server worth testing, but I did run a parity check:

| Version | Date | Duration | Speed | Status | Errors |
|---|---|---|---|---|---|
| 6.5.3-rc1 | 2018-05-20, 19:51:00 | 10 hr, 35 min, 16 sec | 105.0 MB/s | OK | 0 |
| 6.5.2-rc1 | 2018-05-04, 10:13:55 | 12 hr, 13 min, 54 sec | 90.9 MB/s | OK | 0 |

Speeds of 100 MB/s to 105 MB/s are in the normal range, so this is fine.

M/B: ASRock - Z77 Pro4-M
CPU: Intel® Core™ i7-3770 CPU @ 3.40GHz
HVM: Enabled
IOMMU: Enabled
Cache: 1024 kB, 128 kB, 8192 kB
Memory: 32 GB (max. installable capacity 32 GB)
Network: eth0: 1000 Mb/s, full duplex, mtu 1500

-

Thanks, that fixed it.

-

It seems that a recent change to Mylar has broken a lot of container-based installations, including my instance. https://github.com/evilhero/mylar/issues/1929 has the bug report and a suggested fix.

-

How much RAM do you have installed in your unRAID server?

eek replied to harmser's topic in Unraid Polls

32GB, having bought an additional 16GB last week. That's for a couple of VMs I'm planning to add as soon as I get a chance... Until now, the 16GB I had was enough for the Dockers I'm using...

-

I think there's a typo in the release notes. Nothing much, but it could annoy someone... I won't be deploying until the weekend, so no further comment...

-

> eek, like you, I have installed joch's s3backup docker. I also have a "+" in my AWS secret code field. I don't see any indication that the docker has ever run, and the docker log file is nearly empty (it has no error messages). What specifically did you change to finally get this docker running? Thanks, Kevin

Sorry, I never noticed the question until now. I just regenerated my secret key until I got one without a + in it...

-

I think it's intentional, to allow a single kernel to be used on both Intel and AMD based systems. Better a warning message that can be safely ignored than people having to choose the appropriate download (AMD/Intel) and possibly getting it wrong...

-

Possibly, but that's not the point, especially when no encoding should be involved between a web form POST request and the command line that is built from it and executed. The container's portal page gives you a textbox in which you enter your access key and another in which you enter your secret key. Everything I enter there should be transposed (without change) to the command line that launches the container. That wasn't the case, resulting in the docker failing to run.

Can you tell me the appropriate place to raise a bug for the following:

Containers may inadvertently fail to run because an inappropriate urldecode call transforms the values entered in a container's config web form before the command line is executed, so the container is passed incorrect values (differing from those the user entered in the config form). As an example, AWS randomly generates access and secret keys that may or may not contain + symbols. Where a key contains a + symbol, joch's s3backup container will fail to run because the + is replaced by a space in the command line that the config page generates to start the container.
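The mechanism is easy to reproduce. Form-style URL decoding (PHP's `urldecode`, Python's `urllib.parse.unquote_plus`) treats `+` as an encoded space, whereas plain percent-decoding (PHP's `rawurldecode`, Python's `unquote`) leaves it alone. A minimal Python sketch with a made-up key; which decode call Unraid's template code actually makes is the diagnosis above, not something this snippet verifies.

```python
from urllib.parse import quote_plus, unquote, unquote_plus

secret = "abc+def/123"  # made-up example key, not a real credential

# Form-style decoding treats '+' as an encoded space, so the raw '+' is lost.
print(unquote_plus(secret))              # abc def/123
# Percent-decoding alone leaves a literal '+' untouched.
print(unquote(secret))                   # abc+def/123
# If a value must pass through form decoding, encode it first so it round-trips.
print(unquote_plus(quote_plus(secret)))  # abc+def/123
```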

-

Just to say that this works brilliantly, provided your account's access key/secret key does not contain the + symbol. It took me ages to work out why it wasn't working, but I eventually saw that the + symbol had been transposed somewhere along the line into a space, resulting in an invalid command being sent to AWS. I'm now happily transferring 20GB of photos to AWS...
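Why a single space is enough to break the command: when a value is spliced into a command line without quoting, the shell splits it into separate arguments. A minimal Python sketch using `shlex`; `backup --secret` is a hypothetical command line (the real container's invocation isn't shown in the post) and the key is made up.

```python
import shlex

original = "abc+def"                  # made-up key containing '+'
mangled = original.replace("+", " ")  # the '+' to space transposition

# Spliced in unquoted, the space splits the key into two arguments,
# so the tool receives a truncated key plus a stray extra argument.
print(shlex.split(f"backup --secret {mangled}"))
# ['backup', '--secret', 'abc', 'def']

# Passed through untouched (and shell-quoted), the key stays one argument.
print(shlex.split(f"backup --secret {shlex.quote(original)}"))
# ['backup', '--secret', 'abc+def']
```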

-

Regardless of the fact that I have moved away from Unraid to a Debian/SnapRAID solution, this is just wrong. You can only release betas occasionally, when you are at a stable point, to give people time to test them effectively. Beta testers are made up of a number of different breeds: those willing to take the release immediately come what may, and others who will wait a week or so, until initial testing has been done, before installing the beta... This means that beta tests take weeks to perform, so Limetech should not be releasing every update they make. Beta releases should only be made when: a) feedback is required, or b) sections are feature complete with everything else stable. And there is no point Limetech releasing anything now until they are somewhere towards b...

-

[POLL] Docker Container Organisation for the unRAID community

eek replied to ironicbadger's topic in Docker Containers

> Unfortunately I can't join the game at the moment. I upgraded to beta 6 last night, but Xen dies, and as docker needs btrfs, which isn't in beta 5, and I do want a working system, I'll have to wait until the Xen issues are fixed before playing...

> Consider switching to KVM for the moment? That way you can keep archVM, get the b6 updates, and of course have access to docker to play with. That is what I'm probably going to try doing here in the very near future, because I personally will probably always want a VM running even if I do someday start using docker.

I probably will, but it means finding a solid block of time to do the initial work, as I do need squeezeserver running overnight, otherwise I'll have a very annoyed wife.

-

I'm in the same boat and stuck on beta 5, as Xen wasn't stable for me on beta 6. Most of my VMs would happily run as dockers (sab, sickbeard, couch potato), so I'm not that concerned about the final Xen/KVM decision, but I don't think this release is a sensible time to pick a winner.

-

There are a number of people on here who used Xen on beta 5, had problems with Xen on beta 6 and have had to revert. While Docker is looking like the sensible plugin replacement, I don't think we can sensibly recommend either Xen or KVM at the moment. Although I expect KVM to be the winner, that could all change with the next beta.