
Everything posted by craigr

-

Water Cooled Unraid Monster Finally Singing

craigr replied to alitech's topic in Unraid Compulsive Design

The backs of your PODs (cages) look entirely different?

Water Cooled Unraid Monster Finally Singing

craigr replied to alitech's topic in Unraid Compulsive Design

I use Norco SS-500 drive cages. These look similar, but are they maybe knockoffs? Here are the Norcos: https://www.sg-norco.com/pdfs/SS-500_5_bay_Hot_Swap_Module.pdf craigr

Water Cooled Unraid Monster Finally Singing

craigr replied to alitech's topic in Unraid Compulsive Design

I am also interested in which hard drive pods, cages, or whatever you want to call them, these are. They look similar to my Norco PODs, but they are not the same ones.

Looks like a nice budget case that will keep drives cool. Great build that will grow with you as you add drives. Nice.

-

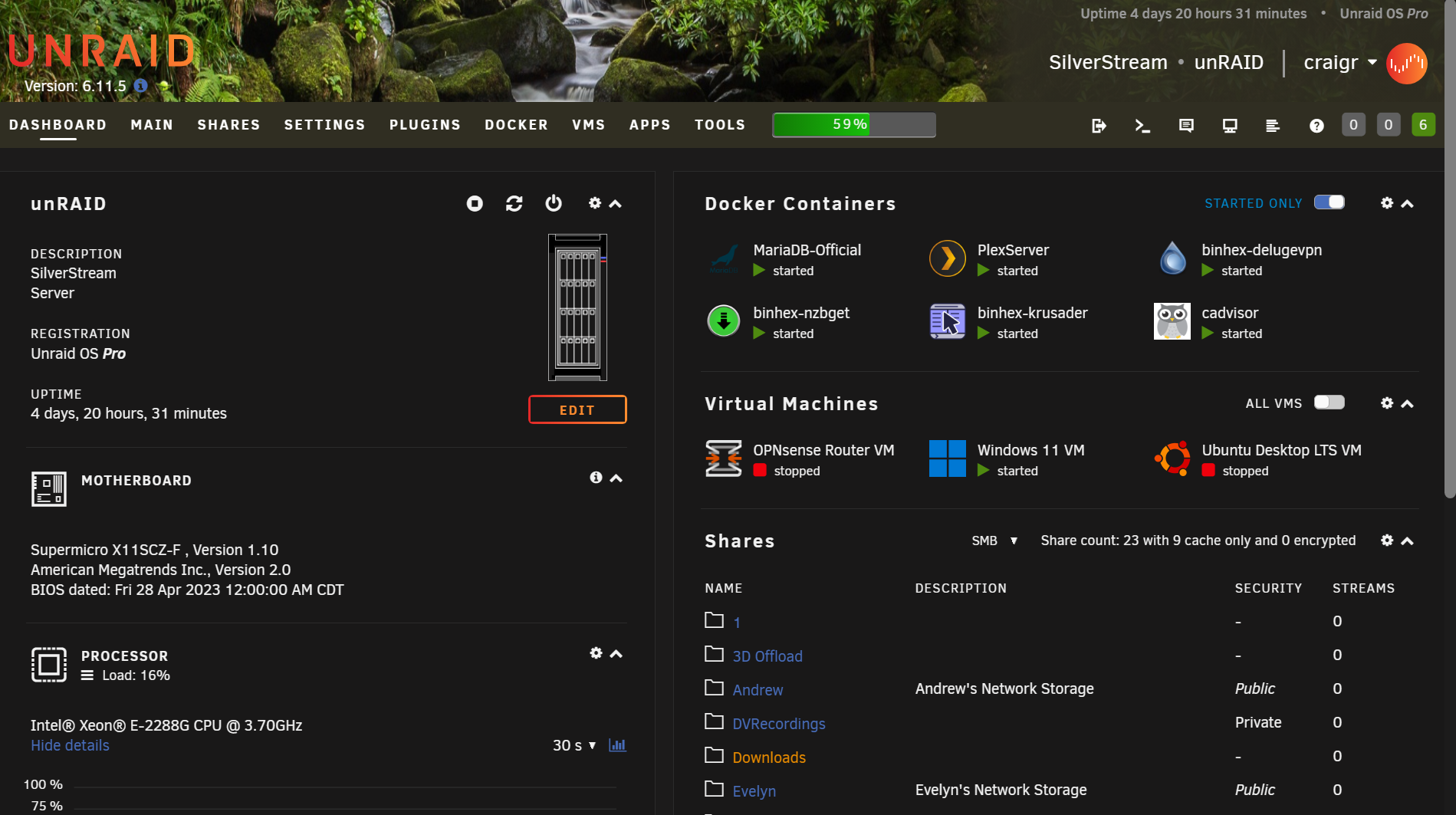

Never thought I would really care, but lately I've been dolling up the server. The original build started in a different case around 14 years ago, but the server has resided in this Xigmatek Elysium for ~10 years. Hardware changes all the time, it seems. My current hardware is below, but it is usually up to date in my signature.

Server Hardware: SuperMicro X11SCZ-F • Intel® Xeon® E-2288G • Micron 128GB ECC • Mellanox MCX311A • Seasonic SSR-1000TR • APC BR1350MS • Xigmatek Elysium • 4x Norco SS-500 Hard Drive PODs • 11x Noctua Fans

Array Hardware: LSI 9305-24i • 190TB Dual Parity on WD Helium REDs • 5x 14TB, 9x 12TB, 5x 8TB • Cache: 1x 10TB • Pool1: 2x Samsung 1TB 850 PROs RAID1 • Pool2: 2x Samsung 4TB 860 EVOs RAID0

Dedicated VM Hardware: Samsung 970 PRO 1TB NVMe • Inno3D Nvidia GTX 1650 Single Slot

Forgot to add that I custom built all the power cables for all the hard drives and bays. I use 16 AWG pure copper primary wire. It's fun to keep all the black wires in the correct order and not fry anything, but I proved it doable 🙂. Having a modular power supply is nice. I was able to split the drives into groups of 10 "spinners" per power cable in order to stay within the amperage limits of the modular power connectors and keep the wire runs free of significant voltage drop.

Here are some pics...

10Gb fiber goes from the Mellanox MCX311A to a Brocade 6450 switch (finally ran the fiber after much procrastination). That feeds the house and branches off. 5Gb comes into the 6450 switch from my modem, and I typically get around 3Gb (+/-0.5Gb) from my ISP. It's a main line directly to my server, from which I run VMs and which I use as my primary workstation. I really love unRAID and how easy virtualization is.

craigr
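For anyone planning a similar custom cable, one rough way to sanity-check a "drives per cable" split is to total the current and estimate the resistive drop on the wire. The sketch below is a back-of-the-envelope calculation, not how the build above was actually designed: the per-drive current, the cable length, and the single supply/return conductor pair are assumptions to replace with figures from your drives' datasheets and your own cable layout. The 16 AWG copper resistance (about 13.2 mΩ per metre) is a standard reference value.

```python
"""Back-of-the-envelope current and voltage-drop check for one drive power cable.

Assumptions (hypothetical, not taken from the build above): every drive draws the
same current on the rail being checked, and the whole load sits at the far end of
one supply conductor and one return conductor of the same gauge and length.
"""

# Approximate resistance of 16 AWG copper at about 20 °C (4.016 ohm per 1000 ft).
OHMS_PER_METRE_16AWG = 0.01317


def cable_budget(drives: int, amps_per_drive: float, one_way_metres: float,
                 rail_volts: float) -> tuple[float, float, float]:
    """Return (total amps, round-trip volt drop, drop as a percent of the rail)."""
    total_amps = drives * amps_per_drive
    # Current goes out on the supply wire and back on the return wire,
    # so the resistive path is twice the one-way length.
    loop_ohms = 2 * one_way_metres * OHMS_PER_METRE_16AWG
    drop_volts = total_amps * loop_ohms
    return total_amps, drop_volts, 100 * drop_volts / rail_volts


if __name__ == "__main__":
    # Hypothetical inputs: 10 drives, 0.5 A each on 12 V, 1 m cable run.
    amps, drop, pct = cable_budget(10, 0.5, 1.0, 12.0)
    print(f"{amps:.1f} A total, {drop:.3f} V drop ({pct:.2f}% of 12 V)")
```

With those hypothetical inputs, ten drives draw 5 A and the drop is roughly 0.13 V (about 1.1% of 12 V). Lumping the whole load at the far end overestimates the drop for a daisy-chained cable, while spin-up currents are considerably higher than running currents, so it is worth repeating the calculation with your drives' peak figures.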

-

You sure did score some very nice hardware and make great use of it. The CSE-846 is one of my dream cases, but I don't have the space. 256GB is a lot, especially if you want to get power usage down. Unless you are running loads of VMs and Dockers, you are probably fine with 64GB, or even 32GB with moderate virtualization. I'm well under 100 watts idle and still under 300 watts all out. Really nice build, though.

-

Originally I had a Windows 10 VM set up to run bare-metal on my NVMe using spaces_win_clover.img. I then upgraded to Windows 11 and eliminated the need for spaces_win_clover.img (I think it was not compatible with Windows 11, or maybe I just didn't want to use it anymore because unRAID no longer needed it). However, after I did that, I could no longer get my Windows 11 VM to boot properly or consistently. This is the thread where I sort of sorted it out, or at least got my VM working again:

With the release of unRAID 6.11.2 and 6.11.3, I have encountered the problem all over again. With this in my XML file, the VM boots properly every time; the key line is <boot dev='hd'/>:

    <os>
      <type arch='x86_64' machine='pc-i440fx-7.1'>hvm</type>
      <loader readonly='yes' type='pflash'>/usr/share/qemu/ovmf-x64/OVMF_CODE-pure-efi-tpm.fd</loader>
      <nvram>/etc/libvirt/qemu/nvram/1a8fdacb-aad4-4bbb-71ea-732b0ea1051a_VARS-pure-efi-tpm.fd</nvram>
      <boot dev='hd'/>
    </os>

I have completely removed the VM (only the VM, not its disk) and started over. I assigned the NVMe to boot order 1, which adds a boot order line to the XML file:

    <hostdev mode='subsystem' type='pci' managed='yes'>
      <driver name='vfio'/>
      <source>
        <address domain='0x0000' bus='0x08' slot='0x00' function='0x0'/>
      </source>
      <boot order='1'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x0c' function='0x0'/>
    </hostdev>

When I do that, the template does not generate a <boot dev='hd'/> line. Here is where it gets really weird. Only on the first boot after doing this will OVMF finish loading and boot Windows 11. If I shut down or restart Windows, OVMF freezes, and I cannot boot again until I go back, edit the XML to remove the boot order line, and restore <boot dev='hd'/> ?!?

When I boot with <boot dev='hd'/>, this is what I get: I can also press Esc and enter the OVMF BIOS.
I have tried removing the TWO Windows Boot Managers listed there, putting the NVMe first in the boot order, and saving (I've done this about 20 times). However, every time I go back into the OVMF BIOS, all the boot options are back as if I had never deleted or reordered them 😖. On my first boot with an XML containing <boot order='1'/>, I get exactly the same screen as above and can enter the OVMF BIOS with Esc. But as stated, once I shut down Windows and reboot, I only get the first "Windows Boot Manager," OVMF does not respond to Esc, and it just freezes there. Finished. Done. I have to go back to the XML, remove the boot order, and restore boot dev='hd'.

Why do I have two Windows Boot Managers? Why can't I delete them? Why can I boot fine directly from my NVMe when I launch it from within the OVMF BIOS? Why can't I use boot order in my XML and boot more than once?

Here is my entire current XML that boots:

    <?xml version='1.0' encoding='UTF-8'?>
    <domain type='kvm' id='2'>
      <name>Windows 11</name>
      <uuid>1a8fdacb-aad4-4bbb-71ea-732b0ea1051a</uuid>
      <metadata>
        <vmtemplate xmlns="unraid" name="Windows 11" icon="windows11.png" os="windowstpm"/>
      </metadata>
      <memory unit='KiB'>38273024</memory>
      <currentMemory unit='KiB'>38273024</currentMemory>
      <memoryBacking>
        <nosharepages/>
      </memoryBacking>
      <vcpu placement='static'>8</vcpu>
      <cputune>
        <vcpupin vcpu='0' cpuset='4'/>
        <vcpupin vcpu='1' cpuset='12'/>
        <vcpupin vcpu='2' cpuset='5'/>
        <vcpupin vcpu='3' cpuset='13'/>
        <vcpupin vcpu='4' cpuset='6'/>
        <vcpupin vcpu='5' cpuset='14'/>
        <vcpupin vcpu='6' cpuset='7'/>
        <vcpupin vcpu='7' cpuset='15'/>
      </cputune>
      <resource>
        <partition>/machine</partition>
      </resource>
      <os>
        <type arch='x86_64' machine='pc-i440fx-7.1'>hvm</type>
        <loader readonly='yes' type='pflash'>/usr/share/qemu/ovmf-x64/OVMF_CODE-pure-efi-tpm.fd</loader>
        <nvram>/etc/libvirt/qemu/nvram/1a8fdacb-aad4-4bbb-71ea-732b0ea1051a_VARS-pure-efi-tpm.fd</nvram>
        <boot dev='hd'/>
      </os>
      <features>
        <acpi/>
        <apic/>
        <hyperv mode='custom'>
          <relaxed state='on'/>
          <vapic state='on'/>
          <spinlocks state='on' retries='8191'/>
          <vendor_id state='on' value='none'/>
        </hyperv>
      </features>
      <cpu mode='host-passthrough' check='none' migratable='on'>
        <topology sockets='1' dies='1' cores='4' threads='2'/>
        <cache mode='passthrough'/>
      </cpu>
      <clock offset='localtime'>
        <timer name='hypervclock' present='yes'/>
        <timer name='hpet' present='no'/>
      </clock>
      <on_poweroff>destroy</on_poweroff>
      <on_reboot>restart</on_reboot>
      <on_crash>restart</on_crash>
      <devices>
        <emulator>/usr/local/sbin/qemu</emulator>
        <disk type='file' device='cdrom'>
          <driver name='qemu' type='raw'/>
          <source file='/mnt/pool/ISOs/virtio-win-0.1.225-2.iso' index='1'/>
          <backingStore/>
          <target dev='hdb' bus='ide'/>
          <readonly/>
          <alias name='ide0-0-1'/>
          <address type='drive' controller='0' bus='0' target='0' unit='1'/>
        </disk>
        <controller type='usb' index='0' model='qemu-xhci' ports='15'>
          <alias name='usb'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x07' function='0x0'/>
        </controller>
        <controller type='pci' index='0' model='pci-root'>
          <alias name='pci.0'/>
        </controller>
        <controller type='ide' index='0'>
          <alias name='ide'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x1'/>
        </controller>
        <controller type='virtio-serial' index='0'>
          <alias name='virtio-serial0'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x03' function='0x0'/>
        </controller>
        <interface type='bridge'>
          <mac address='52:54:00:29:50:c9'/>
          <source bridge='br0'/>
          <target dev='vnet1'/>
          <model type='virtio-net'/>
          <alias name='net0'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x0'/>
        </interface>
        <serial type='pty'>
          <source path='/dev/pts/0'/>
          <target type='isa-serial' port='0'>
            <model name='isa-serial'/>
          </target>
          <alias name='serial0'/>
        </serial>
        <console type='pty' tty='/dev/pts/0'>
          <source path='/dev/pts/0'/>
          <target type='serial' port='0'/>
          <alias name='serial0'/>
        </console>
        <channel type='unix'>
          <source mode='bind' path='/var/lib/libvirt/qemu/channel/target/domain-2-Windows 11/org.qemu.guest_agent.0'/>
          <target type='virtio' name='org.qemu.guest_agent.0' state='connected'/>
          <alias name='channel0'/>
          <address type='virtio-serial' controller='0' bus='0' port='1'/>
        </channel>
        <input type='mouse' bus='ps2'>
          <alias name='input0'/>
        </input>
        <input type='keyboard' bus='ps2'>
          <alias name='input1'/>
        </input>
        <tpm model='tpm-tis'>
          <backend type='emulator' version='2.0' persistent_state='yes'/>
          <alias name='tpm0'/>
        </tpm>
        <audio id='1' type='none'/>
        <hostdev mode='subsystem' type='pci' managed='yes'>
          <driver name='vfio'/>
          <source>
            <address domain='0x0000' bus='0x01' slot='0x00' function='0x0'/>
          </source>
          <alias name='hostdev0'/>
          <rom file='/mnt/pool/vdisks/vbios/My_Inno3D.GTX1650.4096.(version_90.17.3D.00.95).rom'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x04' function='0x0' multifunction='on'/>
        </hostdev>
        <hostdev mode='subsystem' type='pci' managed='yes'>
          <driver name='vfio'/>
          <source>
            <address domain='0x0000' bus='0x01' slot='0x00' function='0x1'/>
          </source>
          <alias name='hostdev1'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x04' function='0x1'/>
        </hostdev>
        <hostdev mode='subsystem' type='pci' managed='yes'>
          <driver name='vfio'/>
          <source>
            <address domain='0x0000' bus='0x00' slot='0x1f' function='0x3'/>
          </source>
          <alias name='hostdev2'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x06' function='0x0'/>
        </hostdev>
        <hostdev mode='subsystem' type='pci' managed='yes'>
          <driver name='vfio'/>
          <source>
            <address domain='0x0000' bus='0x00' slot='0x14' function='0x0'/>
          </source>
          <alias name='hostdev3'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x08' function='0x0'/>
        </hostdev>
        <hostdev mode='subsystem' type='pci' managed='yes'>
          <driver name='vfio'/>
          <source>
            <address domain='0x0000' bus='0x00' slot='0x1f' function='0x5'/>
          </source>
          <alias name='hostdev4'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x09' function='0x0'/>
        </hostdev>
        <hostdev mode='subsystem' type='pci' managed='yes'>
          <driver name='vfio'/>
          <source>
            <address domain='0x0000' bus='0x08' slot='0x00' function='0x0'/>
          </source>
          <alias name='hostdev5'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x0a' function='0x0'/>
        </hostdev>
        <memballoon model='none'/>
      </devices>
      <seclabel type='dynamic' model='dac' relabel='yes'>
        <label>+0:+100</label>
        <imagelabel>+0:+100</imagelabel>
      </seclabel>
    </domain>

PLEASE SOMEBODY HELP ME. I HAVE SPENT HOURS!!!
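libvirt's domain XML documentation states that per-device <boot order='N'/> elements cannot be combined with <boot dev='...'/> elements in the <os> section, so when switching between the two approaches it can help to confirm which one a given XML actually uses. Below is a minimal sketch using only Python's standard xml.etree.ElementTree; the function name boot_config is hypothetical. It does not explain the OVMF freeze, it only reports both kinds of boot entries and flags a file that contains both.

```python
import xml.etree.ElementTree as ET


def boot_config(domain_xml: str) -> dict:
    """Report how a libvirt domain XML selects its boot device.

    Returns the dev values of <os><boot dev=.../> elements, the (device tag, order)
    pairs of per-device <boot order=.../> elements, and whether both styles appear.
    """
    root = ET.fromstring(domain_xml)

    # Old style: <os><boot dev='hd'/></os>
    os_boot = [b.get("dev") for b in root.findall("./os/boot")]

    # New style: a <boot order='N'/> child inside a device such as <disk> or <hostdev>
    per_device = []
    for device in root.findall("./devices/*"):
        boot = device.find("boot")
        if boot is not None and boot.get("order") is not None:
            per_device.append((device.tag, int(boot.get("order"))))
    per_device.sort(key=lambda pair: pair[1])

    return {
        "os_boot": os_boot,
        "per_device": per_device,
        # libvirt does not allow the two styles to be mixed in one domain
        "conflict": bool(os_boot) and bool(per_device),
    }


if __name__ == "__main__":
    import sys

    # Usage: python boot_config.py "win11.xml"
    with open(sys.argv[1], encoding="utf-8") as fh:
        print(boot_config(fh.read()))
```

For the XML above, this would report os_boot as ['hd'] and an empty per_device list, which matches the working configuration described in the post.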

-

Well, I updated from 6.11.1 to 6.11.3 and the problem did not recur. My cache disks are all assigned and intact. I have rebooted multiple times to test, and it's all fine. Something obviously went sideways in the upgrade from 6.11.2 to 6.11.3 that did not happen after I reverted to 6.11.1 and upgraded from there. Oh well. Now if only I could get my Windows 11 VM to boot more than once with boot order enabled... but I have a workaround for that, so I can live with it for now. Thanks, craigr

-

I can try 6.11.3 again later when I have more time and get the log files. Right now, I just need a functioning computer.

-

I don't know. I've been using unRAID for over ten years and have had very few problems with complicated setups running VMs and Dockers. I use unRAID on many of my clients' systems; they pay me to build servers for their home theaters. I have found support to be quite good, and developers have stepped in when necessary. My experience has been great, with only a hiccup here and there, but what I am doing is complex and I would expect that.

-

Yes, my pool devices were assigned again (thank the maker). I did of course have a backup from 6.11.1. I recreated the flash drive from scratch using a fresh download of 6.11.1 and copied over my config directory. Funny thing too is that my SSD cache pools use the SATA ports on the MB and the cache drive uses a port on my LSI controller. Why lose ALL cache drives?!?

-

I just tried to upload my hardware configuration with the Hardware Profiler, and that failed: "Sorry, an error occurred. Please try again later." However, all of my hardware is in my signature.

-

Well, after updating from 6.11.2 to 6.11.3 and rebooting, my cache drive and the disks in both of my cache pools became unassigned. Obviously libvirt could then not be found, so none of my Dockers or VMs started. Last night on 6.11.2 my Windows VM stopped booting (which I was afraid would happen because of the boot order issue I asked about in the 6.11.2 thread). I have had to revert all the way back to 6.11.1 to get a working machine again. Please let me know what I can do to help. However, my day is pretty full. craigr

-

Done as well.

-

I had to screw with it a little bit, but it wasn't bad. I upgraded unRAID successfully and everything is working well (VMs and Dockers). Remember, I did not have any "boot order" defined in my XML before (it was in the OVMF BIOS setup, I think). If I tried to use boot order before, my VM would not start. I had to work around it this way:

    <os>
      <type arch='x86_64' machine='pc-i440fx-6.2'>hvm</type>
      <loader readonly='yes' type='pflash'>/usr/share/qemu/ovmf-x64/OVMF_CODE-pure-efi-tpm.fd</loader>
      <nvram>/etc/libvirt/qemu/nvram/2c4db39b-fc53-1762-d1ba-9c768e033e45_VARS-pure-efi-tpm.fd</nvram>
      <boot dev='hd'/>
    </os>

This VM was built on Windows 10 with spaces_win_clover.img to load the NVMe bare-metal. I suspect that upgrading from Windows 10 to 11 and then removing the Clover image caused unique issues for me. Anyway, I upgraded unRAID, put a check for my NVMe in the new Windows template, set its boot order to "1", saved the template, and compared it to my old XML. I then modified my old XML to remove the <boot dev='hd'/> line and add <boot order='1'/>. Everything started up on the first try. No issues to report so far. The unRAID update is working well. Thanks all! craigr
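The manual edit described above (drop <boot dev='hd'/> from <os> and give the passed-through NVMe <boot order='1'/>) can be scripted if you need to redo it after the template rewrites your XML. This is a hypothetical helper, not something unRAID provides. It matches the <hostdev> by its source PCI bus/slot/function, which you would read from your own XML, and places the boot element directly after <source>, as in the template-generated <hostdev> block in the earlier Windows 11 boot post. Back up the original XML first.

```python
import xml.etree.ElementTree as ET


def use_boot_order(domain_xml: str, bus: str, slot: str, function: str) -> str:
    """Hypothetical helper: switch a libvirt domain from <os><boot dev=.../>
    to a per-device <boot order='1'/> on the PCI hostdev at bus/slot/function
    (values as written in the XML, e.g. "0x08", "0x00", "0x0")."""
    root = ET.fromstring(domain_xml)

    # Find the passed-through device by its source (host-side) PCI address.
    target = None
    for hostdev in root.findall("./devices/hostdev"):
        addr = hostdev.find("./source/address")
        if addr is not None and (addr.get("bus"), addr.get("slot"),
                                 addr.get("function")) == (bus, slot, function):
            target = hostdev
            break
    if target is None:
        raise ValueError(f"no hostdev with source address {bus}:{slot}.{function}")

    # libvirt rejects mixing the two styles, so drop every <os><boot dev=.../>.
    os_el = root.find("os")
    if os_el is not None:
        for boot in os_el.findall("boot"):
            os_el.remove(boot)

    # Replace any existing boot element on the device, then insert the new one
    # directly after <source>.
    for old in target.findall("boot"):
        target.remove(old)
    children = list(target)
    source_index = children.index(target.find("source"))
    target.insert(source_index + 1, ET.Element("boot", {"order": "1"}))

    return ET.tostring(root, encoding="unicode")
```

ElementTree re-serializes the whole document: it drops the <?xml ...?> declaration, writes attributes with double quotes, and emits Unraid's <vmtemplate xmlns="unraid"> element with a generated ns0: prefix. That is equivalent XML, but compare the result against your original before pasting it into the XML view.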

-

Thank you! So if you were to check your NVMe drive in form view, what line(s) would be added to your XML? I really don't want to have to parse through all my XML customizations when they get wiped out, and then potentially fiddle with the OVMF BIOS as well.

-

I'm a bit worried about updating because of this. I have Windows 11 booting directly from an NVMe already. I had to fight this boot order issue for a long time to get the VM back before. I've attached my current XML. Does anyone think I may have a problem after this update? Thanks! craigr win11 NOV 16 2022.xml

-

I follow the same practice.

-

It's funny: I changed my SAS and SATA cables to silver Supermicro ones to rid my server of UDMA CRC errors, and that made me rename my server to SilverStream, and that made me want to change my banner to an actual 'silver stream', and that made me want to post here 🙂 ...and that made me buy the silver Noctua NH-U12S CPU cooler just now, onto which I will swap my grey (silver) Redux fan... ...which made me buy the red sound absorbers, which resulted in the need to change the zip ties to red... *The banner is 2000x96 pixels instead of 1270x96 pixels because on my 4K monitor I thought it stretches and squeezes better when resizing windows.

-

Well, all dolled up and no more UDMA CRC errors! I know you're not supposed to tie cables together, but I've never had problems doing it with good shielded cables like these. New SAS > SATA and matching SATA > SATA cables installed. I renamed the server SilverStream 😉. I think she looks great!

-

If someone could please provide instructions for just this step of setting up WireGuard for PIA, I would be most appreciative. I think I may need to do this through the console? I'm just not sure which file contains the parameter. I went through many files in the config directory with nano and didn't see it anywhere. Thanks, craigr

-

Depending on your UPS and whether or not it's buggy with unRAID, you have two options to monitor power and shut down unRAID. The first is built in: Settings > UPS Settings. That worked for me until I upgraded my UPS and unRAID thought the UPS was disconnecting multiple times each day. I then switched to the app called NUT, which you can download and install through Community Applications. It has more capabilities and has worked much better for me. Both are pretty easy to set up and use. Enjoy and let me know!
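Under the hood, NUT reports UPS state as named variables such as ups.status (with flags like OL for on line power, OB for on battery, LB for low battery) and battery.charge, and its upsc client prints them as name: value lines. The sketch below parses that text and applies a hypothetical shutdown rule (on battery and below 50% charge, or low battery flagged). It only illustrates how those variables fit together; in practice NUT's own monitor (upsmon), as set up by the plugin, is what actually shuts the server down.

```python
def parse_upsc(output: str) -> dict[str, str]:
    """Turn `upsc <ups>` style output ("name: value" per line) into a dict."""
    values = {}
    for line in output.splitlines():
        name, sep, value = line.partition(":")
        if sep:  # skip blank or malformed lines
            values[name.strip()] = value.strip()
    return values


def should_shut_down(values: dict[str, str], min_charge: float = 50.0) -> bool:
    """Hypothetical policy: shut down when on battery and either the UPS reports
    low battery (LB) or battery.charge falls below min_charge percent."""
    flags = values.get("ups.status", "").split()
    if "OB" not in flags:  # OL = on line power, OB = on battery
        return False
    if "LB" in flags:
        return True
    charge = values.get("battery.charge")
    return charge is not None and float(charge) < min_charge


if __name__ == "__main__":
    sample = "battery.charge: 42\nups.status: OB DISCHRG\n"
    print(should_shut_down(parse_upsc(sample)))
```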

-

I cannot get WireGuard working with PIA. I have followed the FAQ and have never had trouble getting anything else to work with this Docker. The one line in the FAQ that puzzles me a bit is this one:

I don't have anything in the Docker that says "--cap-add=NET_ADMIN". I have this, but I am not sure it is what the FAQ refers to:

This is the only thing I think it can be, because I have followed the rest to the letter. This is all that is in my wg0.conf file:

    [Interface]
    Address = 10.24.146.122
    PrivateKey = kGZ/uf0H5v7drJOsaCNPxo+hERNkfIh7cyUcDpNQdGg=
    PostUp = '/root/wireguardup.sh'
    PostDown = '/root/wireguarddown.sh'

    [Peer]
    PublicKey = OOy/RLnU33EAXZkQWfTrOA7CgmK88HUjgPm2Ky5pKFw=
    AllowedIPs = 0.0.0.0/0
    Endpoint = nl-amsterdam.privacy.network 1337

There is no list of endpoints in my supervisord.log. Here is the supervisord.log. It seems to be telling me it can't resolve nl-amsterdam.privacy.network 1337.

    Created by... https://hub.docker.com/u/binhex/
    2022-10-19 00:03:32.359749 [info] Host is running unRAID
    2022-10-19 00:03:32.376784 [info] System information Linux b997ff38d197 5.19.14-Unraid #1 SMP PREEMPT_DYNAMIC Thu Oct 6 09:15:00 PDT 2022 x86_64 GNU/Linux
    2022-10-19 00:03:32.397742 [info] OS_ARCH defined as 'x86-64'
    2022-10-19 00:03:32.417220 [info] PUID defined as '99'
    2022-10-19 00:03:32.548834 [info] PGID defined as '100'
    2022-10-19 00:03:32.757009 [info] UMASK defined as '000'
    2022-10-19 00:03:32.780403 [info] Permissions already set for '/config'
    2022-10-19 00:03:32.813122 [info] Deleting files in /tmp (non recursive)...
    2022-10-19 00:03:32.834943 [info] VPN_ENABLED defined as 'yes'
    2022-10-19 00:03:32.852974 [info] VPN_CLIENT defined as 'wireguard'
    2022-10-19 00:03:32.870207 [info] VPN_PROV defined as 'pia'
    2022-10-19 00:03:33.173614 [info] WireGuard config file (conf extension) is located at /config/wireguard/wg0.conf
    2022-10-19 00:03:33.200710 [info] VPN_REMOTE_SERVER defined as 'nl-amsterdam.privacy.network 1337'
    2022-10-19 00:03:33.243004 [info] VPN_REMOTE_PORT defined as '1337'
    2022-10-19 00:03:33.258570 [info] VPN_DEVICE_TYPE defined as 'wg0'
    2022-10-19 00:03:33.274097 [info] VPN_REMOTE_PROTOCOL defined as 'udp'
    2022-10-19 00:03:33.291612 [info] LAN_NETWORK defined as '192.168.1.0/24'
    2022-10-19 00:03:33.309441 [info] NAME_SERVERS defined as '1.1.1.1,1.0.0.1'
    2022-10-19 00:03:33.326958 [info] VPN_USER defined as 'xxxxxxxxxx'
    2022-10-19 00:03:33.344674 [info] VPN_PASS defined as 'xxxxxxxxxx'
    2022-10-19 00:03:33.362778 [info] STRICT_PORT_FORWARD defined as 'yes'
    2022-10-19 00:03:33.380827 [info] ENABLE_PRIVOXY defined as 'no'
    2022-10-19 00:03:33.400403 [info] VPN_INPUT_PORTS not defined (via -e VPN_INPUT_PORTS), skipping allow for custom incoming ports
    2022-10-19 00:03:33.419120 [info] VPN_OUTPUT_PORTS not defined (via -e VPN_OUTPUT_PORTS), skipping allow for custom outgoing ports
    2022-10-19 00:03:33.436771 [info] DELUGE_DAEMON_LOG_LEVEL not defined,(via -e DELUGE_DAEMON_LOG_LEVEL), defaulting to 'info'
    2022-10-19 00:03:33.454386 [info] DELUGE_WEB_LOG_LEVEL not defined,(via -e DELUGE_WEB_LOG_LEVEL), defaulting to 'info'
    2022-10-19 00:03:33.473525 [info] Starting Supervisor...
    2022-10-19 00:03:33,725 INFO Included extra file "/etc/supervisor/conf.d/delugevpn.conf" during parsing
    2022-10-19 00:03:33,725 INFO Set uid to user 0 succeeded
    2022-10-19 00:03:33,728 INFO supervisord started with pid 7
    2022-10-19 00:03:34,731 INFO spawned: 'start-script' with pid 168
    2022-10-19 00:03:34,733 INFO spawned: 'watchdog-script' with pid 169
    2022-10-19 00:03:34,733 INFO reaped unknown pid 8 (exit status 0)
    2022-10-19 00:03:34,742 DEBG 'start-script' stdout output: [info] VPN is enabled, beginning configuration of VPN
    2022-10-19 00:03:34,742 INFO success: start-script entered RUNNING state, process has stayed up for > than 0 seconds (startsecs)
    2022-10-19 00:03:34,742 INFO success: watchdog-script entered RUNNING state, process has stayed up for > than 0 seconds (startsecs)
    2022-10-19 00:03:34,747 DEBG 'start-script' stdout output: [info] Adding 1.1.1.1 to /etc/resolv.conf
    2022-10-19 00:03:34,751 DEBG 'start-script' stdout output: [info] Adding 1.0.0.1 to /etc/resolv.conf
    2022-10-19 00:04:30,124 DEBG 'start-script' stdout output: [crit] 'nl-amsterdam.privacy.network 1337' cannot be resolved, possible DNS issues, exiting...
    2022-10-19 00:04:30,124 DEBG fd 8 closed, stopped monitoring <POutputDispatcher at 23200439382896 for <Subprocess at 23200439382800 with name start-script in state RUNNING> (stdout)>
    2022-10-19 00:04:30,125 DEBG fd 10 closed, stopped monitoring <POutputDispatcher at 23200438224880 for <Subprocess at 23200439382800 with name start-script in state RUNNING> (stderr)>
    2022-10-19 00:04:30,125 INFO exited: start-script (exit status 1; not expected)
    2022-10-19 00:04:30,125 DEBG received SIGCHLD indicating a child quit

Thanks for any help! craigr
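One thing worth checking against the log: VPN_REMOTE_SERVER is reported as 'nl-amsterdam.privacy.network 1337', so the hostname and port appear to have been read as a single string, and that whole string is what fails to resolve. The wg(8) documentation describes Endpoint as an IP or hostname followed by a colon and a port number (for example nl-amsterdam.privacy.network:1337), so the space in the posted wg0.conf may be the culprit. The small validator below is a hypothetical helper, not part of the binhex container, that applies that host:port rule.

```python
def parse_endpoint(value: str) -> tuple[str, int]:
    """Split a WireGuard Endpoint value into (host, port).

    Accepts host:port and [IPv6]:port; anything else raises ValueError.
    """
    value = value.strip()
    if value.startswith("["):  # bracketed IPv6 literal, e.g. [2001:db8::1]:51820
        host, sep, port = value[1:].partition("]:")
    else:
        host, sep, port = value.rpartition(":")
    if not sep or not host or any(ch.isspace() for ch in host):
        raise ValueError(f"Endpoint must look like host:port, got {value!r}")
    if not port.isdigit() or not 0 < int(port) < 65536:
        raise ValueError(f"invalid port in Endpoint {value!r}")
    return host, int(port)


if __name__ == "__main__":
    for candidate in ("nl-amsterdam.privacy.network:1337",
                      "nl-amsterdam.privacy.network 1337"):
        try:
            print(candidate, "->", parse_endpoint(candidate))
        except ValueError as err:
            print(candidate, "->", err)
```

The first candidate parses into a host and port; the second, written with a space as in the posted wg0.conf, is rejected.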