Posts posted by falconexe

-

48 minutes ago, ChatNoir said:

I think it is for most people.

Remember that the only people posting on the forums are those who have problems, not the large number of users for whom it works fine.

Took me 2 minutes, I did everything from the webgui and didn't touch any kind of files.

Same for me. I just posted to let everyone know that I didn't run into any issues, and that everyone should take this seriously and do it. Glad everyone is figuring it out.

-

It is LEGIT. I got the email, and everyone I know did. Just changed everything without any issues. Make sure you guys click the sign out of all devices checkbox as well. Had everyone who wasn't using MFA implement it. I suggest everyone do the same. I'm not worried, and I am glad they were up front with what happened. Other than that get LIFELOCK and HOME TITLE LOCK to round out your identity protection package. It has saved my butt a couple of times!

-

6 hours ago, FreeMan said:

It's got NOTHING to do with what I thought it did...

I had a totally unrelated docker going crazy with log files growing to > 7GB of space. That docker's been deprecated (any wonder why), so I shut it down and deleted it.

I've just hit 100% utilization of my 30GB docker.img file, and I think I've nailed the major culprit down to the influxdb docker that was installed to host this dashboard. It's currently at 22.9GB of space.

Is anyone else anywhere near this usage? Is there a way to trim the database so that it's not quite so big?

I'm trying to decide whether I want to keep this (as cool as it was when it was first launched, it's wandered into a lot of Plex focus on later updates and, since I'm not a Plex user, they don't interest me, plus I've grown a touch bored with this much info overload), or if it's time to just scrap it.

I totally appreciate the time and effort that went into building it and @falconexe's efforts and responsiveness in fixing errors and helping a myriad of users through the same teething pains. I'm just not sure it's for me anymore...

Glad you found the TRUE culprit. I was just coming on here to defend the UUD ha ha. Just so you guys know, you can set retention policies for INFLUXDB and this will ensure you don't eat up space. I have a 2TB cache drive, so I put it at 10 years, but others may just want a rolling 30 days, that is perfectly acceptable, and totally doable.
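For anyone who wants the rolling 30 days mentioned above, here is a minimal sketch that only builds the InfluxQL statement as text. It assumes InfluxDB 1.x syntax, and the database name "telegraf" and policy name "autogen" are assumptions, so check your own names before running the output in the influx console.

```python
def rolling_retention_statement(database: str, policy: str, days: int) -> str:
    """Build an InfluxQL (1.x) statement that keeps roughly the last `days` days."""
    if days <= 0:
        raise ValueError("days must be a positive number")
    return (
        f'ALTER RETENTION POLICY "{policy}" ON "{database}" '
        f"DURATION {days}d REPLICATION 1 DEFAULT"
    )


# "telegraf" and "autogen" are assumed names, not confirmed by this thread.
print(rolling_retention_statement("telegraf", "autogen", 30))
```

Shortening a retention policy lets InfluxDB drop data older than the new duration, so take a backup first if you might want that history back.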

@FreeMan If you don't like the Plex panels, feel free to remove them and the related datasources. You can run a UUD "Lite" if you want to. I just put everything that anyone would want in one dash for the sake of development. By all means, you do you.

Cheers!

-

On 6/20/2022 at 2:34 PM, Skibo30 said:

This dashboard really is the Ultimate! Thank you for putting all the time into it over the years.

As I was fiddling around with it to fit my system, I found a rather minor bug (spelling error, actually). And I couldn't find where to 'officially' report a bug: In the Disk Overview section, many of the column headers read "File Sytem" instead of "File System" (missing the second 's'). I think you could do an easy find/replace in the json. Don't forget to also check the Overrides that link to it. Minor, I know... but something.

Thanks. I'll get that fixed in 1.7.
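If you want to patch your own copy in the meantime, the find/replace suggested above could look something like this rough sketch. It walks a parsed dashboard JSON and fixes the string everywhere, including overrides that match on the column name. The sample structure below is a made-up fragment for illustration, not the real UUD JSON.

```python
import json


def replace_in_strings(node, old, new):
    """Return a copy of `node` with `old` replaced by `new` in every string."""
    if isinstance(node, str):
        return node.replace(old, new)
    if isinstance(node, list):
        return [replace_in_strings(item, old, new) for item in node]
    if isinstance(node, dict):
        # Keys are rewritten too, since rename maps can use the header as a key.
        return {
            replace_in_strings(key, old, new): replace_in_strings(value, old, new)
            for key, value in node.items()
        }
    return node  # numbers, booleans and null are left alone


# Hypothetical dashboard fragment, only to show the idea.
sample = {
    "panels": [
        {
            "title": "Disk Overview",
            "fieldConfig": {
                "overrides": [
                    {"matcher": {"id": "byName", "options": "File Sytem"}}
                ]
            },
        }
    ]
}

fixed = replace_in_strings(sample, "File Sytem", "File System")
print(json.dumps(fixed, indent=2))
```

For a real dashboard you would `json.load` the exported file, run the function, and `json.dump` the result to a new file before importing it back into Grafana.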

-

Hey everyone. We just hit a huge milestone with the UUD. The official forum topic has now surpassed 200K views, making it one of the most viewed topics of all time in the User Customizations section of the UNRAID forums. Thanks again to everyone who has contributed to this accomplishment!

Also, Happy Father's Day everyone!

-

On 6/15/2022 at 9:51 AM, JudMeherg said:

Well butter my butt and call me a biscuit..... Page 34 release notes for 1.6 and there is the JSON datasource. Yep I missed that when I was just following the instructions on the first post in this thread. Just me being a bad user and not reading all the documentation.

As for 1.7, I just got 1.6 running and am still tweaking it for my system lol

I am sure the other users that have had it running for a while are looking for a new hit of the good stuff.

Thanks again for the work, and to everyone else that contributes. One day I hope to be a real contributor to projects like this.

@JudMeherg You got your wish. I appreciate your recent help and the fact that someone used your tips to fix their issues. Congrats!

-

11 hours ago, JudMeherg said:

Hey Everyone!

New UUD user here and I have had a good bit of fun setting things up! Thank you so much to all the contributors in this thread and others.

@falconexe You are an amazing dev and looking at your announcement/documentation posts has been great!

I would like to add my two cents here for anyone else coming to this thread looking to set up their dashboards.

Hey @JudMeherg I just wanted to personally say THANKS FOR YOUR DONATION and supporting the UUD. It's been a while ha ha. My new Wife thought that it was amazing that someone would actually pay me for my work on a side project. Looking back on this project, I have put in over 1,000 hours since September, 2020. This was never about money, but I sure do friggen appreciate kudos, compliments, and all around kind gestures. This is the type of stuff that keeps me going and keeps the fire burning. When you guys are enjoying it as much as I do, it is super fulfilling. The UUD is really a community project with many contributors, and I would not have been able to produce this monster if it wasn't for people who paved the way and continue to assist. THANKS AGAIN TO EVERYONE who has ever downloaded, used, shared, or contributed to the UUD!

Now, back to business... In the release notes post for UUD 1.6, I did mention adding the JSON API datasource (unless I'm completely losing my mind, but I'm almost positive it is out there). But thanks for pointing this out again.

Regarding the Grafana Image Plugin, it was unsigned at the time I wrote that, but it appears the dev got it updated and it is now signed. SO, this will no longer be deprecated in UUD 1.7! Thanks for the update.

I'm almost finished with the UUD 1.7 update. I am trying to get one MAJOR addition in there. If I can't figure it out pretty soon, I'll push it to the UUD 1.8 update. I have made tons of changes, tweaks, added new panels and more fine tuning for 1.7.

What do you guys think? Should I just release what I have for 1.7? Maybe it's time LOL. Let me know!

-

You could try the Ultimate UNRAID Dashboard.

-

5 hours ago, Agent531C said:

It looks like some recent changes in the radarr api make it so the :latest varken tag doesn't work. I've switched over fully to develop, so it may be worth it to migrate now instead of later. I'm not sure why they haven't pushed a single update to the main repo in 2 years, but the develop one has been moving strong with a lot of new features (like Overseerr support).

Thanks for sharing this info. I don't use any of the "Arrs" but good to know. I'm sure they'll eventually push an update to main.

-

3 hours ago, falconexe said:

So I am performing the data load now. I noticed that it did wipe the existing Varken DB content and reimports everything. I went back 10 years (not that I have that much, but I wanted an obtuse number just to be sure ha ha), so I used "-D 3650". I'm sure this will take a while so I'll let it run.

Once I've confirmed it is working, I plan on putting the docker back to the original tag. From what I can see, you only need the "develop" tag to get the script to run. Once the data is backloaded, it should work again on the latest tag.

I'll report back in a few hours once this is done.

@Agent531C You are a LEGEND! That worked. I changed the docker tag back to the default after the historical data load, and all is working great. AND everything flowed into the UUD 1.7 (In Development) perfectly! I can't thank you enough for this tip. I even added some new panels to UUD 1.7 because of this historical data ability that I am excited to share!

-

23 hours ago, Agent531C said:

To get started, change your varken container to the 'develop' tag. From there, open the console for it and run these commands:

This moves the script to a location it can recognize the varken python import from:

mv /app/data/utilities/historical_tautulli_import.py /app

From there, run the script with a few arguments:

python3 /app/historical_tautulli_import.py -d '/config' -D 365

-d points to your appdata/varken.ini

-D is the number of days to run it for

That should backfill everything into your influxdb.

I tried to run this on the 'latest' tag, but unfortunately the script is broken in it, and doesn't fill any data, so you have to use the develop tag.
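To make the number of days easy to change, the two console commands quoted above can be generated with a small helper. This is only a sketch that prints the command lines to paste into the Varken container console. The paths and flags come straight from the quoted post, while the function name is made up for illustration.

```python
import shlex
from typing import List

SCRIPT_SOURCE = "/app/data/utilities/historical_tautulli_import.py"
SCRIPT_TARGET_DIR = "/app"


def backfill_commands(days: int, config_dir: str = "/config") -> List[str]:
    """Return the two shell commands from the post for a given number of days."""
    if days <= 0:
        raise ValueError("days must be a positive number")
    move = ["mv", SCRIPT_SOURCE, SCRIPT_TARGET_DIR]
    run = [
        "python3",
        f"{SCRIPT_TARGET_DIR}/historical_tautulli_import.py",
        "-d", config_dir,   # folder that holds varken.ini
        "-D", str(days),    # how many days back to import
    ]
    return [shlex.join(move), shlex.join(run)]


for command in backfill_commands(3650):
    print(command)
```

Nothing is executed here, so it is safe to run anywhere. The commands themselves still need the 'develop' tag, as described in the quote.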

So I am performing the data load now. I noticed that it did wipe the existing Varken DB content and reimports everything. I went back 10 years (not that I have that much, but I wanted an obtuse number just to be sure ha ha), so I used "-D 3650". I'm sure this will take a while so I'll let it run.

Once I've confirmed it is working, I plan on putting the docker back to the original tag. From what I can see, you only need the "develop" tag to get the script to run. Once the data is backloaded, it should work again on the latest tag.

I'll report back in a few hours once this is done.

-

2 hours ago, Agent531C said:

If you haven't figured it out, I was able to backfill all of my Tautulli data into influxdb using their backfill script on the 'develop' tag. Unfortunately, it does have some side effects with what seems like data structure changes, but it fills out enough that it's usable. I've mainly noticed some fields act up, like 'Time', 'Plex Version', and 'Stream Status'. Sometimes the 'Location' would show as 'None - None' as well, but it usually got it correctly.

To get started, change your varken container to the 'develop' tag. From there, open the console for it and run these commands:

This moves the script to a location it can recognize the varken python import from:

mv /app/data/utilities/historical_tautulli_import.py /app

From there, run the script with a few arguments:

python3 /app/historical_tautulli_import.py -d '/config' -D 365

-d points to your appdata/varken.ini

-D is the number of days to run it for

That should backfill everything into your influxdb.

I tried to run this on the 'latest' tag, but unfortunately the script is broken in it, and doesn't fill any data, so you have to use the develop tag.

@Agent531C 😁 You are a freakin Wizard! I am excited to give this a shot. 🤛

So what happens to your existing data in Varken? Does it merge dupes, overwrite them, or replace the entire DB? Did you see any issues with your latest data having corruption or schema issues?

Did you lose any data? I really don't mind if old data doesn't match up exactly, I'm just trying to save my lengthy history and be able to see it in the UUD rather than Tautulli itself. I do care however that present data is presented accurately and normally in the UUD just like it is currently.

Finally, how does this play with Varken retention policies within InfluxDB? Any changes there? I set mine to retain the last 25 years, so I should be good backloading a few years with your method. Thanks!!!

-

On 5/31/2022 at 8:45 PM, Agent531C said:

Hey, I saw talk about turning the project into a single container to rule them all. Obviously testdasi has done it, so it is possible. I felt like docker-compose was more appropriate for the use case, so I wrote up a compose file and guide on how to set it up on Unraid using the Docker Compose Manager plugin: https://github.com/HStep20/Ultimate-Unraid-Dashboard-Guid

I can't wait till they add native support, but this works great in the meantime.

If you are down to help me, we can work together on getting this into an all-in-one docker that is actually maintained and updated regularly. I have zero experience with Docker development, but it seems pretty straightforward to do this with preconfigured config files. I looked at the GUS structure and schema and it seems like all I need is the tool/knowledge of how to build and publish the Docker. I set up GitHub so I am ready whenever. I'll start googling...

Thanks for putting this workaround together and linking back to this forum and recognizing the developer. I appreciate it.

-

On 5/19/2022 at 9:48 PM, falconexe said:

Never seen this before. Since the release of Grafana 8.4, the dependent plugins for this portion of the UUD no longer work, so I was planning on deprecating the UNRAID API panels in UUD 1.7. Let us know if you figure it out.

On 5/31/2022 at 4:23 AM, valiente said:

I am running Grafana 8.5.3 and UNRAID API mostly works.

Otherwise it is still working and I would vouch for not deprecating it...

I should have clarified. The Dynamic Image Panel plugin is not signed by Grafana, and I believe the JSON API one is in the same boat. But if you got it working in 8.4+, then I'll take another look. Perhaps I just need to configure those as a known exception in Grafana and install anyway. Thanks for the feedback. I'll likely keep these then.
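On the "known exception" idea: Grafana has an allow_loading_unsigned_plugins setting in the [plugins] section of its config, which can also be supplied as the GF_PLUGINS_ALLOW_LOADING_UNSIGNED_PLUGINS environment variable on the container. Below is a small sketch that builds that variable from a list of plugin IDs. The IDs shown are placeholders, not the real IDs of the plugins discussed here, so look those up on each plugin's page.

```python
from typing import Iterable

ENV_NAME = "GF_PLUGINS_ALLOW_LOADING_UNSIGNED_PLUGINS"


def unsigned_plugins_env(plugin_ids: Iterable[str]) -> str:
    """Build the NAME=value line that whitelists the given unsigned plugin IDs."""
    cleaned = [plugin_id.strip() for plugin_id in plugin_ids if plugin_id.strip()]
    if not cleaned:
        raise ValueError("at least one plugin ID is required")
    return f"{ENV_NAME}=" + ",".join(cleaned)


# Placeholder IDs for illustration only.
print(unsigned_plugins_env(["example-image-panel", "example-json-datasource"]))
```

In the UNRAID docker template this would go in as an extra variable on the Grafana container, followed by a container restart.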

-

7 hours ago, tatzecom said:

Gentlemen,

I've been scratching my head about this issue for a few days now and I have no idea how to fix this:

The UUD, or Grafana in general, really doesn't want to use the unraid api docker. The docker works, the docker shows me my box on the webui, and I could theoretically manage what I want on it. When I query it through the browser, I get the expected response with a json file containing everything it's supposed to.

However, when Grafana queries the API, I get this error (taken from the logs of the unraid-api docker):

ERROR Unexpected token B in JSON at position 0
    at JSON.parse (<anonymous>)
    at default (api/getServers.js:30:43)
    at call (node_modules/connect/index.js:239:7)
    at next (node_modules/connect/index.js:183:5)
    at next (node_modules/connect/index.js:161:14)
    at next (node_modules/connect/index.js:161:14)
    at SendStream.error (node_modules/serve-static/index.js:121:7)
    at SendStream.emit (node:events:520:28)
    at SendStream.emit (node:domain:475:12)
    at SendStream.error (node_modules/send/index.js:270:17)

Lowkey no idea how to fix that. Anyone got any ideas? At this point I'm willing to sacrifice a lesser animal.

EDIT: I also put the JSON I manually queried through a validator and it returned that the JSON is valid.

Never seen this before. Since the release of Grafana 8.4, the dependent plugins for this portion of the UUD no longer work, so I was planning on deprecating the UNRAID API panels in UUD 1.7. Let us know if you figure it out.
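One thing the error itself does tell us: "Unexpected token B ... at position 0" means the text handed to the parser started with the letter B, so it was not JSON at all (JSON has to start with something like { or [). A quick way to see what text is really being parsed is to preview it when parsing fails. Here is a rough sketch of that idea. The "Bad Gateway" body is purely a made-up example, since the thread never shows what the real text was.

```python
import json


def describe_body(body: str, preview: int = 40) -> str:
    """Say whether `body` parses as JSON, and show its start if it does not."""
    try:
        json.loads(body)
    except json.JSONDecodeError as exc:
        return (
            f"not JSON (error at position {exc.pos}); "
            f"body starts with {body[:preview]!r}"
        )
    return "valid JSON"


print(describe_body('{"servers": {}}'))
print(describe_body("Bad Gateway"))  # hypothetical non-JSON text
```

Since the stack trace comes from the unraid-api docker itself, the text being parsed there may differ from what a browser query returns, which would explain why the manually fetched JSON validates fine.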

-

On 2/21/2022 at 4:07 PM, falconexe said:

DEVELOPER UPDATE - VARKEN DATA RETENTION

Something else I wanted to bring to your attention in VARKEN... For the life of me, I could not figure out why my Plex data was only going back 30 days. It has been bothering me for a while, but I did not have a ton of time to look into it. Yesterday, I found out the issue, and it really stinks I did not find this sooner, but the sooner the better to tell you ha ha.

While looking into the InfluxDB logs, I found the following oddity:

All queries were being passed a retention policy of "varken 30d-1h" in the background!

So apparently when Varken gets installed, it sets a DEFAULT RETENTION Policy of 30 days with 1 Hour Shards (Data File Chunks) when it creates the "varken" database within InfluxDB.

This can be found in the Varken Docker "dbmanager.py" Python install script here:

https://github.com/Boerderij/Varken/blob/master/varken/dbmanager.py

InfluxDB Shards:

https://docs.influxdata.com/influxdb/v2.1/reference/internals/shards/

What this means is InfluxDB will delete any Tautulli (Plex) data on a rolling 30 days basis. I can't believe I didn't see this before, but for my UUD, I want EVERYTHING going back to the Big Bang. I'm all about historical data analytics and data preservation.

So, I researched how to fix this and it was VERY SIMPLE, but came with a cost.

IF YOU RUN THE FOLLOWING COMMANDS, IT WILL PURGE YOUR PLEX DATA FROM UUD AND YOU WILL START FRESH

BUT IT SHOULD NOT BE PURGED MOVING FORWARD (AND NOTHING WILL BE REMOVED FROM TAUTULLI - ONLY UUD/InfluxDB)

You have a few different options, such as modifying the existing retention policy, making a new one, or defaulting back to the auto-generated one, which by default, seems to keep all ingested data indefinitely. Since this is what we want, here are the steps to set it to "autogen" and delete the pre-installed Varken retention policy of "varken 30d-1h".

- STEP 1: Bash Into the InfluxDB Docker by Right Clicking the Docker Image on the UNRAID Dashboard and Select Console

- STEP 3: Run the Show Retention Policies Command

- STEP 4: Set the autogen Retention Policy As the Default

- STEP 5: Verify "autogen" is Now the Default Retention Policy

- STEP 6: Remove the Varken Retention Policy

- STEP 7: Verify That Only the "autogen" Retention Policy Remains
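For reference, steps 3 through 7 above could be sketched as the following InfluxQL statements. The exact commands from the original post did not survive here, so these are reconstructed from standard InfluxDB 1.x syntax and are an assumption, not the author's literal commands. The helper only prints the statements, so nothing touches your database until you paste them into the influx console yourself.

```python
from typing import List


def autogen_migration_statements(
    database: str = "varken", old_policy: str = "varken 30d-1h"
) -> List[str]:
    """Return InfluxQL (1.x) statements matching steps 3-7, in order."""
    show = f'SHOW RETENTION POLICIES ON "{database}"'
    return [
        show,                                                          # step 3
        f'ALTER RETENTION POLICY "autogen" ON "{database}" DEFAULT',   # step 4
        show,                                                          # step 5
        f'DROP RETENTION POLICY "{old_policy}" ON "{database}"',       # step 6
        show,                                                          # step 7
    ]


for statement in autogen_migration_statements():
    print(statement)
```

Dropping a retention policy deletes the data stored under it, which matches the warning above that the existing Plex data in InfluxDB is purged.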

Once you do this, your UUD Plex data will start from that point forward and it should grow forever so long as you have the space in your appdata folder for the InfluxDB docker (cache drive). Let me know if you have any questions!

Sources:

https://community.grafana.com/t/how-to-configure-data-retention-expiry/26498

https://docs.influxdata.com/influxdb/v2.1/reference/cli/influx/

I've been doing some more poking around when I have time. To follow-up on this topic, I further modified the retention policy to 25 YEARS, so that's a pretty good archive moving forward ha ha.

Command: ALTER RETENTION POLICY "autogen" ON "varken" DURATION 1300w REPLICATION 1 DEFAULT

1300 Weeks = 218400 Hours / 24 Hours in a Day = 9,100 Days / 365.25 Days in a Year = ~ 25 Years

Stop and Start the InfluxDB Docker to apply!
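The conversion above is easy to double check, or to redo for a different number of weeks, with a few lines of Python. This is just the same arithmetic written out, using 365.25 days per year as in the post.

```python
from typing import Tuple


def weeks_to_units(weeks: int) -> Tuple[int, int, float]:
    """Convert a duration in weeks to (days, hours, years)."""
    days = weeks * 7
    hours = days * 24
    years = days / 365.25
    return days, hours, years


days, hours, years = weeks_to_units(1300)
print(days, hours, round(years, 2))  # 9100 218400 24.91
```

So 1300w comes out a little under 25 years; something like 1305w would land just over it if the exact figure matters.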

-

Congrats everyone. I’ll be diving in on my 2 servers later tonight!

-

2 minutes ago, RichJacot said:

I get redirected to login, it just never pulls/shows them. I've done every icon one at a time. It's something with Chrome; Firefox and Safari work as expected after the redirect and login.

Maybe you guys should compare notes on Chrome settings/security/version and see if there are any differences. I know my Chrome version 99 works after I get redirected to the UNRAID login screen and authenticate. Suddenly the icons magically appear. Let us know if you guys figure this out as it is outside of the UUD.

-

On 3/24/2022 at 11:44 PM, gvkhna said:

Hey OP,

I'm a developer with enough experience with docker that I could probably help out with this. I set up UUD for the most part. I'm new to unraid, but I'm currently setting it up to manage a couple of in-house apps I want to self host. I'm specifically trying to set up grafana for log tailing this app, but I haven't used it before, so our experience could be mutually beneficial and I'm happy to help where I can. I see GUS is deprecated. I also see the versions recommended for UUD for telegraf and influxdb are out of date; I had some difficulty setting them up.

I could see utility in just having a separate grafana instance for UUD although it doesn't seem like it would interfere too much if folks wanted to use it for other things, especially if it already included a working UUD. It's the other dependencies that made installing it a bit of a hassle. Also a lot of my panels don't work because it's just too much setup and too deep into grafana for me. But anyway if you care to sync happy to chat.

Thanks for the offer @gvkhna!

I'm really busy with work right now, but I WOULD like to look into this with you. If we can get a unified Docker with all dependencies already configured, that would take care of a lot of the manual install work. Then users could just focus on getting their dashboard configured to their servers. Ideally we would build the Docker in a way where users can still get to their config files on the backend if they want to further customize. If you look at the GUS docker and review the architecture, that should point you in the right direction. I think we can improve upon that project in various ways. I look forward to getting with you in the future. I'll DM you when I'm ready to dive in.

-

On 3/21/2022 at 12:07 PM, kolepard said:

Similar situation here. I have a Q30 as my main server and a Rosewill RSV-4500 configured for 15 HDDs as my backup. The Rosewill case is ok, but is a bit of a pain to work in, and makes me really appreciate the Q30. The airflow in the Rosewill is better than what I used to have with my 5-in-3 drive cages, but still not as good as in the Q30.

The backup server still runs fine, but was my first build about 10 years ago, and fairly recently transplanted into the Rosewill case. It uses an older Supermicro board (C2SEA) with a quad-core Core processor but I'd like to have my backup server use ECC RAM like the main one does.

I probably won't be able to answer your question about the GPU. I haven't moved into using VMs or GPU-based transcoding at this point.

Good luck.

Kevin

Fair enough...I wonder if they will sell it without a Power Supply so I can stick a 1000W Corsair in there. I'm pretty sure it will fit. I just want their metal ha ha. I'm gonna get mine custom painted Midnight Blue if I pull the trigger.

-

3 hours ago, kolepard said:

On a lark, I contacted them to see if they were selling enclosures only again, and they are.

Cases are fully assembled, with steel chassis, modular drive cage, drive cabling, case fans, direct wired backplanes, sliding rails and a power supply. They take ATX motherboards (not eATX).

They said they aren't designed to power or fit a GPU, but looking inside the Q30 I have now, it looks like there'd be plenty of space, but I guess you'd want to measure to be sure.

They are not cheap (prices I was emailed today, 3/13/2022):

AV15 (650W non-redundant PSU) - $1,683.80 (USD)

Q30 (850W non-redundant PSU) - $2,151.13 (USD)

S45 (1200W redundant PSU) - $2,922.29 (USD)

XL60 (1200W redundant PSU) - $3,353.88 (USD)

Hope this helps someone.

Kevin

Thanks. I appreciate the info. I have a Storinator Q30 Turbo as my primary server, and want to move an old NORCO 4224 (my backup server) to their much nicer chassis for heat flow, but use the same guts. I was also looking to drop a RTX 3080 into one, once I upgrade my main gaming rig to the RTX 4000 series later this year (or next).

Let me know if you figure out how to drop in a full size Nvidia video card. I'd love to see pics and the complete component breakdown.

-

9 hours ago, RichJacot said:

I replaced my telegraf.conf with yours and changed the outputs.influxdb section and the interface. No errors in the telegraf logs (shown below). I'm going to try to remove telegraf altogether and reinstall it. You can see in the telegraf log that telegraf is picking up my hostname though, so that's odd.

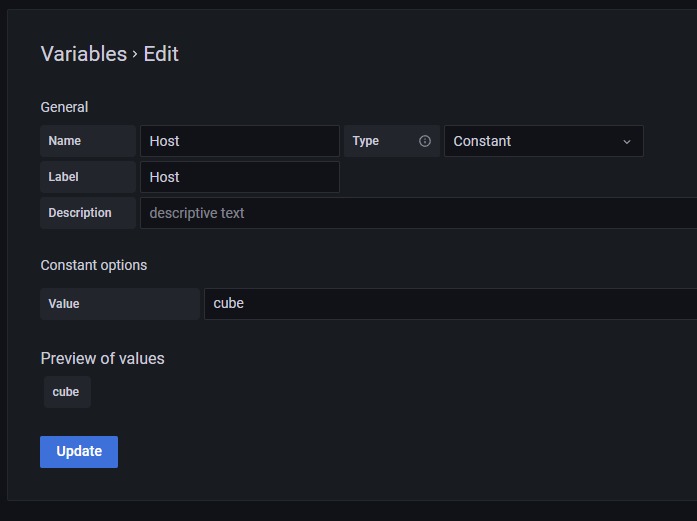

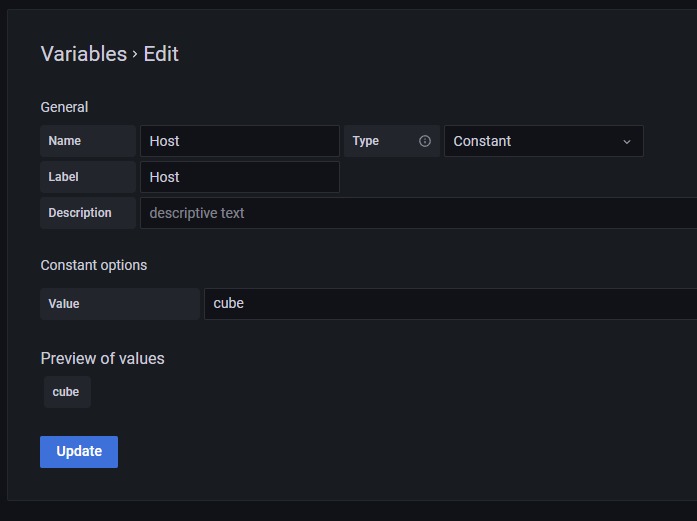

Try setting your host variable manually to a constant and see if that changes anything. If you are only running 1 UNRAID server, then you can safely do this. Not sure what is going on, but the below work around should fix it.

Ultimate UNRAID Dashboard (UUD)

in User Customizations

Posted

I don't use the UNRAID API or related panels in my personal UUD dash (they are not the best part for me), and I will now remove them from UUD 1.7 due to this news. If you are using the UNRAID API panels in the UUD 1.6, I would highly recommend you delete them/related dependencies, and remove the UNRAID API Docker. At least until this issue is resolved by that developer (if they ever get around to it).

I'm almost done with UUD 1.7, which adds a bunch of new and useful panels, and overhauls a number of existing ones. Thanks for the heads up on the UNRAID API memory leak. That is unfortunate and not worth a dirty shutdown with the array needing a hard reset and resulting parity check upon reboot due to this docker crashing.

Just to be clear, the UUD and its developer (me) have nothing to do with the UNRAID API or its docker. If anyone hears that the issue is resolved, I would be happy to put it back into the UUD at a future point since many of you do like that part of the UUD.