-

Posts

475 -


Everything posted by CS01-HS

-

[6.8.3] shfs error results in lost /mnt/user

CS01-HS commented on JorgeB's report in Stable Releases

I've triggered it a few times with SMB file operations from my Mac client where the folder/file structure was stale. Since then I force a refresh (e.g. by navigating down a directory and back up) before every SMB move or copy. Tedious, but so far it hasn't failed.

Done. I'm getting strange results. It works except for a handful of files which always error out. If I skip them it copies fine. And they copy fine to a standard unraid share. I checked their permissions, etc, no issues. These files might have thrown off my earlier reports of what was or wasn't working. I think you've caught the major issue though, some conflict with the "MacOS interoperability" setting. Thanks for helping me solve it.

-

Attached. Errors in Windows 10 are consistent now but Mac is fine. Strange. The "Windows" is actually Parallels VM. I thought that might affect it but the same VM writes to standard unRAID shares without issue. In case it's helpful:

    # smbstatus

    Samba version 4.17.5
    PID     Username     Group        Machine                           Protocol Version  Encryption  Signing
    ----------------------------------------------------------------------------------------------------------------------
    15540   windows      users        10.0.1.3 (ipv4:10.0.1.3:49285)    SMB3_11           -           partial(AES-128-CMAC)

    Service        pid     Machine    Connected at                      Encryption   Signing
    ---------------------------------------------------------------------------------------------
    file_history   15540   10.0.1.3   Mon Mar 27 03:08:49 PM 2023 EDT   -            -

    Locked files:
    Pid     User(ID)   DenyMode    Access     R/W      Oplock   SharePath                 Name   Time
    --------------------------------------------------------------------------------------------------
    15540   99         DENY_NONE   0x100081   RDONLY   NONE     /mnt/disks/file_history   .      Mon Mar 27 15:08:49 2023

nas-diagnostics-20230327-1510.zip

-

I did and it seems to be working now on both Windows and Mac, thanks. In the Windows event log I see entries like the one below referencing the share, but maybe they're expected:

Are there specific tests you'd like me to run before I restore 'macOS Interoperability'?

EDIT: Darn, I spoke too soon. The copy operation in Windows just failed with another entry like the above in the event log.

-

I'm trying to use unassigned devices to share an exFAT-formatted flash drive over SMB. It looks like it should work: the share is visible and I can read files fine, but writes error out, on Windows eventually and on Mac immediately (all copied files are 0 bytes). I thought it might be the flash drive, but with the CLI I can copy multi-gig files to its mount point under /mnt/disks/ consistently. I've tried with SMB security set to Public and Private, and force user 'nobody' set to yes and no, with the same result. I'm on 6.12.0-rc2. Could that be the issue? I haven't tried with earlier versions.

nas-diagnostics-20230327-0814.zip

-

Ah. I only have the one UPS so I can't test what's particular vs generic. Something else that might affect it: I have unRAID set up as client to a NUT server on an RPi. While I have you (in case it's helpful) here are additional state codes I gathered:

    > 'OL CHRG' => 'Online: charging',
    > 'OL CHRG LB' => 'Online: charging',
    > 'OL DISCHRG' => 'Online: discharging',
    > 'OL DISCHRG LB' => 'Online: discharging',
    > 'OL BOOST' => 'Online: low voltage',
    > 'OB DISCHRG' => 'Offline: On battery',
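For reference, the state codes listed above can also be written as a small lookup. This is only a sketch in POSIX shell, not the plugin's actual code (which is PHP). The function name `describe_ups_status` and the fallback text for unlisted codes are assumptions, and only the six codes from this post are covered.

```shell
# Map a NUT ups.status string to the display text gathered in this post.
# describe_ups_status is a hypothetical helper name, not part of the plugin.
describe_ups_status() {
    case "$1" in
        'OL CHRG' | 'OL CHRG LB')       echo 'Online: charging' ;;
        'OL DISCHRG' | 'OL DISCHRG LB') echo 'Online: discharging' ;;
        'OL BOOST')                     echo 'Online: low voltage' ;;
        'OB DISCHRG')                   echo 'Offline: On battery' ;;
        # Assumption: anything not listed above is shown as-is.
        *)                              echo "Unknown: $1" ;;
    esac
}

# Example (UPS name and host are placeholders):
#   describe_ups_status "$(upsc myups@nut-server ups.status)"
```

The two `LB` variants share a line with their base code because the post maps them to the same text.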

-

Right, these tweaks are to your plugin (thanks by the way, works beautifully). Did I post in the wrong thread?

-

For anyone interested I did a quick-and-dirty tweak to match the display formatting to 6.12's default: Requires changing two files, presented below in diff format. nut_status.php: nutFooter.page:

-

The weekly scheduled boot of the VM that's caused this went off last night without a hitch – 2GB swap used (out of 4GB.) If it goes smoothly next week I'll mark this as solved, thanks.

-

I've only seen OOM errors while running a 4GB VM (in addition to my standard apps.) I haven't booted that VM since installing the plugin. But now that swap's available unRAID is using it. Interesting.

-

It's about a once-a-month thing. Apparently I had a very superficial understanding of linux memory allocation. I've installed the plugin, fingers crossed.

-

I got another out of memory error warning (killed a VM):

    Mar 6 02:48:28 NAS kernel: oom-kill:constraint=CONSTRAINT_NONE,nodemask=(null),cpuset=/,mems_allowed=0,global_oom,task_memcg=/machine/qemu-4-macOS.libvirt-qemu,task=qemu-system-x86,pid=15774,uid=0
    Mar 6 02:48:28 NAS kernel: Out of memory: Killed process 15774 (qemu-system-x86) total-vm:4771316kB, anon-rss:4437356kB, file-rss:0kB, shmem-rss:26488kB, UID:0 pgtables:9400kB oom_score_adj:0

Seems like I just ran out of memory. But reporting (just prior to the error) showed 9GB used and 7GB in cache out of 16GB total:

Shouldn't the OS clear the cache before killing processes? I wonder if the Folder Caching plugin I run has something to do with it.
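One thing that may be worth checking here, offered as a possibility rather than a diagnosis: in /proc/meminfo the `Cached` figure includes `Shmem` (tmpfs and shared memory), which the kernel cannot simply drop the way it drops file cache. The sketch below reads meminfo-formatted text on stdin and prints both figures alongside `MemAvailable`. The function name `summarize_meminfo` and the output format are made up for illustration.

```shell
# Summarize the meminfo fields relevant to "how much cache is really reclaimable".
# Reads /proc/meminfo-style text on stdin; values there are in kB.
summarize_meminfo() {
    awk '
        $1 == "MemAvailable:" { avail  = $2 }
        $1 == "Cached:"       { cached = $2 }
        $1 == "Shmem:"        { shmem  = $2 }
        END {
            # file_cache_mib is Cached minus Shmem, a rough estimate only.
            printf "available_mib=%d cached_mib=%d shmem_mib=%d file_cache_mib=%d\n",
                avail / 1024, cached / 1024, shmem / 1024, (cached - shmem) / 1024
        }
    '
}

# Example on a live system:
#   summarize_meminfo < /proc/meminfo
```

If `shmem_mib` makes up a large share of `cached_mib`, the "cache" number in a dashboard overstates what can be freed under pressure.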

-

I got this last night. I suspect the macOS VM (which I usually don't run) pushed me over the edge. Diagnostics attached. If you spot anything out of the ordinary I'd appreciate the assistance. nas-diagnostics-20230206-0732.zip

-

Search of SMB shares not working (MacOS client)

CS01-HS commented on CS01-HS's report in Prereleases

Any update here? More than 2 years and no official fix. I appreciate all the updates since but it's frustrating to see a pretty basic function not working and not addressed.

Search of SMB shares not working (MacOS client)

CS01-HS commented on CS01-HS's report in Prereleases

Am I missing something? What's the relevance to unRAID?

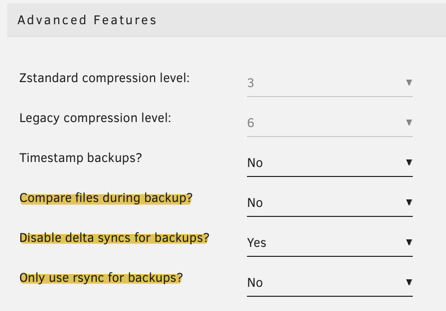

At some point in the last few months my backups started taking longer, e.g. it takes about 40 minutes to copy this 50GB disk image:

I remember fixing this a year or two ago by disabling rsync to the extent possible. It's still disabled:

But the log entry above and grafana, which shows mostly reads on both the source (as expected) AND the destination (Active -> Array) during the backup period, suggest it's using rsync. Any ideas? I have reconstruct write enabled, but even if reporting tracks that as reads I doubt the above shows a copy operation. 12MB/s write is well below my array's capacity.

-

Not to get too far off the main topic, but isn't the solution there a secondary DNS like Google or OpenDNS, unless the concern is custom local resolution?

-

The simpler and IMHO more robust solution would be to imitate unraid's start process:

1. stop all containers
2. backup the first container
3. start the first container
4. backup the second container
5. start the second container

etc.

Avoid the headache of debugging user- or container-specific dependencies - if boot works, backup works. And shorter downtime for primary containers (relative to the current version) should reduce the need to exclude, reducing errors.
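The ordering described above can be sketched as a dry run. This is not the plugin's code: `plan_backup` is a hypothetical name, and it only prints the steps in order, taking the container names in start order as arguments. A real version would replace the `echo` lines with `docker stop`, the actual backup step, and `docker start`.

```shell
# Print the proposed sequence: stop everything first, then back up and
# restart each container in turn, in the order given (i.e. start order).
plan_backup() {
    for c in "$@"; do
        echo "stop $c"
    done
    for c in "$@"; do
        echo "backup $c"
        echo "start $c"
    done
}

# Example (container names are placeholders):
#   plan_backup mariadb nextcloud
```

Each container is only down for the stop phase plus the backups of the containers ahead of it, so the ones that start first are also back first.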

-

My fix was to move the spinning disks from the integrated ASM controller to the integrated intel. I've had no problems running autotune with only SSDs on the ASM. Before that, with mgutt's explicit config/go commands as a basis, I excluded the ASM's host3 and host4 from power-saving - intel are 1 and 2.

    linkpm_count=1
    for i in /sys/class/scsi_host/host*/link_power_management_policy; do
        echo 'med_power_with_dipm' > "$i"
        if [ $linkpm_count -eq 2 ]; then
            break
        fi
        ((linkpm_count++))
    done
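A variant of the loop above that names the hosts explicitly instead of counting glob matches might look like the following. It is a sketch only: `set_link_pm` is a hypothetical helper, and taking the sysfs directory as the first argument is just so it can be tried against a scratch directory before touching /sys.

```shell
# Write a link power management policy to specific SCSI hosts only.
# Usage: set_link_pm <scsi_host dir> <policy> <host number>...
set_link_pm() {
    root=$1
    policy=$2
    shift 2
    for n in "$@"; do
        f="$root/host$n/link_power_management_policy"
        if [ -w "$f" ]; then
            echo "$policy" > "$f"
        else
            # Hosts without the attribute (or without permission) are skipped.
            echo "skipping host$n: $f not writable" >&2
        fi
    done
}

# Example, matching the post (hosts 1 and 2 only, 3 and 4 left alone):
#   set_link_pm /sys/class/scsi_host med_power_with_dipm 1 2
```

Listing host numbers avoids depending on the order in which the shell expands `host*`.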

-

Awesome update. One big advantage (maybe for a future version :) is with separate archives there's no need to wait until all backups are complete to restart individual containers, which will minimize downtime.

-

[Support] Josh5 - Unmanic - Library Optimiser

CS01-HS replied to Josh.5's topic in Docker Containers

Is persistent load from the unmanic-service process expected? Pausing workers (which were already inactive) and disabling file monitors didn't affect it, and there are no entries in the log (even with debug enabled) indicating activity. top within the container: top in unraid:

Also consider a transcoding path outside the docker image. I have mine use RAM (to minimize SSD writes) but a path to a cached share works as well. NOTE 1: Emby will create the subdirectory transcoding-temp under your specified path. (In my case that's /dev/shm/transcoding-temp) NOTE 2: As an alternative to the nightly restart I've written a monitoring script: SCRIPT TO KEEP EMBY'S TRANSCODING DIRECTORY FROM FILLING UP
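The linked script is not reproduced here. As a rough illustration of the general idea only, a monitor could check the directory's size and delete older files once it passes a limit. Everything in this sketch is an assumption: the function names, the thresholds, and above all the premise that files older than a few minutes are safe to remove, which has not been verified against Emby's behaviour.

```shell
# Print a directory's disk usage in KiB.
dir_usage_kb() {
    du -sk "$1" | awk '{ print $1 }'
}

# If <dir> uses more than <limit_kb>, delete regular files older than
# <max_age_min> minutes. Does nothing when the directory is missing.
# Usage: prune_transcodes <dir> <limit_kb> <max_age_min>
prune_transcodes() {
    dir=$1
    limit_kb=$2
    max_age_min=$3
    [ -d "$dir" ] || return 0
    if [ "$(dir_usage_kb "$dir")" -gt "$limit_kb" ]; then
        find "$dir" -type f -mmin "+$max_age_min" -exec rm -f {} +
    fi
}

# Example (path from the post; limit and age are placeholders),
# e.g. run every few minutes from a scheduled script:
#   prune_transcodes /dev/shm/transcoding-temp 2097152 10
```

With a RAM-backed path like /dev/shm, keeping the limit well under free memory matters more than it would on a cached share.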

-

Had an issue with 4.5 where memory ballooned to about 4GB, best I can tell. My system should have been able to handle it but I couldn't investigate – GUI was non-responsive and even simple bash commands would throw "resource fork" errors (maybe nproc limits?) I managed to restart the server and downgrade cleanly, no problems since.

-

Nope - hasn't happened since. Either a fluke or whatever caused it was fixed.