Everything posted by sreknob

-

The recommended way is to use the "Replace the USB flash device" procedure from the guide. When you do this, all of your current settings and shares will be preserved. If you want to start "fresh", you'd set up a brand new flash drive as new, boot from it and then use the "New Config" tool, reassigning all of your drives to their proper slots and checking the "parity is already valid" box. If you don't select the proper assignments, though, there is a risk of data loss here. Alternatively, you can set up a new USB drive and just copy the /config folder from your current drive, which should preserve your array and share configuration as well. I would suggest using the method from the guide. What are you hoping to achieve by "starting fresh"?
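The last option (copying the /config folder onto a newly prepared flash drive) can be sketched in Python. This is a minimal sketch, not an Unraid tool: it assumes both flash drives are mounted as ordinary directories on another machine, and the function name and mount points are hypothetical. The guide's procedure remains the recommended route.

```python
import shutil
from pathlib import Path


def copy_unraid_config(old_flash: Path, new_flash: Path) -> Path:
    """Copy the config folder from the old flash drive onto the new one.

    Both arguments are the directories where the flash drives are mounted
    (hypothetical mount points). Files from the old drive overwrite files
    with the same name on the new drive; anything else there is left alone.
    """
    src = Path(old_flash) / "config"
    dst = Path(new_flash) / "config"
    if not src.is_dir():
        raise FileNotFoundError(f"no config folder found at {src}")
    # dirs_exist_ok (Python 3.8+) merges into an existing config folder.
    shutil.copytree(src, dst, dirs_exist_ok=True)
    return dst
```

Usage would be something like `copy_unraid_config(Path("/media/old_flash"), Path("/media/new_flash"))`, with the hypothetical mount points replaced by your own. Merging rather than wiping the new drive's folder is a deliberately conservative choice for the sketch.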

-

Thanks for the information. I was not aware of the overlay file system issue. Having said this, I've never had a problem in the past, so perhaps I'm just lucky. In that case, it would make sense to integrate this into the new update workflow. There is no reason to overwrite the files in the "previous" folder, since the intermediate version was never booted, so the previous version should stay as is. I suppose this could be tracked by comparing the version that is loaded with the one in the "previous" folder before copying over the current files.
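The version comparison suggested here can be sketched as a small decision function. This is a hypothetical illustration, not how Unraid's updater actually works: it assumes the updater can read the running version, the version whose files are staged in the flash root, and the version stored in the "previous" folder. The function and step names are invented for the example.

```python
from typing import List


def plan_update(running: str, staged: str, previous: str, new: str) -> List[str]:
    """Return hypothetical steps for staging `new` on the flash drive.

    running  -- version currently booted
    staged   -- version whose files currently sit in the flash root
    previous -- version stored in the "previous" folder
    new      -- version being installed
    """
    steps: List[str] = []
    if staged == running:
        # Normal update: keep the booted version as the fallback.
        steps.append(f"copy {staged} files to previous/")
    elif previous == running:
        # "Double upgrade": an intermediate version was staged but never
        # booted. previous/ already holds the running version, so keep it.
        steps.append(f"discard staged {staged} files (never booted)")
    else:
        # Neither copy matches what is booted; refuse rather than guess.
        raise ValueError("cannot identify a safe fallback version")
    steps.append(f"write {new} files to flash root")
    return steps
```

For example, with v1 booted, v2 staged and v1 in previous/, installing v3 skips the backup step, so v1 stays as the fallback.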

-

Thanks itimpi - I am aware of the manual process, it's just nicer to hit a button 🙂 Maybe more of a suggestion for the new method as it matures further. For now, using the Update Assistant tool seems to work around this issue. Thanks

-

Hi All - I have one server that I hit the update button on but didn't reboot yet, and now there is a new version. Is there any way with the new upgrade workflow to complete a "double upgrade", meaning skipping an intermediate version if you previously hit upgrade and didn't reboot, or if an upgrade came out shortly after starting an upgrade to the previous version? In the past, I could just use the update tool again to get to the current version before rebooting, but it appears that there is no easy way to do this "double upgrade" anymore. I now need to reboot to get to the intermediate version, then reboot a second time after updating again to get to the latest (short of manually doing the upgrade on the flash). Have we lost the ability to do this easily with the new enforced workflow? If so, any chance this could be addressed in future versions of the new update workflow? Or is this already possible and I'm missing something? Thanks!

EDIT - Looks like I was able to work around this for now by using the old update method. I ran the Update Assistant, which checked for a new version as part of its run, and then clicked on the banner notification at the top to update the server. That used the old update method and completed successfully. Still, it would be nice to include this ability in the new update workflow instead.

-

Back to the thread topic here: is there any way with the new upgrade workflow to complete a "double upgrade"? For example, I have one server that I hit the update button on but didn't reboot yet, and now there is a new version. In the past, I could just use the update tool again to get to the current version before rebooting, but it appears that there is no easy way to do this "double upgrade" anymore. I now need to reboot to get to the intermediate version, then reboot again to get to the latest (short of manually doing the upgrade on the flash). Any insights here, or am I missing something? Thanks!

Edit - for reference, I was able to get this done by using the old method, triggered by using the Update Assistant Tool (more discussion here). Perhaps this could be incorporated into the new update workflow somehow by comparing the version in the previous folder to the running version and allowing a new update without overwriting the previous folder again during the upgrade.

-

Unraid 6.11.1 Docker Update All infinite loop

sreknob commented on NickI's report in Stable Releases

Did this, made no difference. I always check for updates before running an update all. Just had another loop of two full updates followed by a cycle of restarts. Just to mention, in case it's related: is anyone else with this issue running the Docker Folder plugin? I only bring this up because I have to refresh my page after the updates to have them show as up to date.

-

Unraid 6.11.1 Docker Update All infinite loop

sreknob commented on NickI's report in Stable Releases

It used to do it twice, and today I got a persistent loop. Still an issue on 6.11.5 😕

-

For those for whom Time Machine backups are important, the TimeMachine Docker application does seem to work. It's not native and requires an additional step, but some users appear to have found it useful.

-

I just logged onto the forum to get some help with a 12TB drive I was adding to my array that wasn't formatting after pre-clear... If only that upgrade notification had come earlier! Will report back if 6.11.3 doesn't solve the problem... Update - Formatting is working as expected now. Thanks!

-

Oops, I guess that's what you get when you have multiple shells open and try it on a server running 6.10 by accident! 🙃

-

...and if you could add iperf3, that would be amazing as well 🙂

-

Requesting ddrescue! Thanks for this!

-

@shawnngtq @myusuf3 @troian @mykal.codes @wunyee @kavinci @cheesemarathon For the minio filesystem error, I resolved this by using directIO rather than shfs, which removes the FUSE layer from the equation. The only downside is that the data has to live on a single disk. The rest of the settings are default; my data path is /mnt/disk1/minio. So far I've uploaded a couple of GB without issue.
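The difference comes down to which mount the container's data path points at: /mnt/user/... is served through shfs (the FUSE layer that merges disks into user shares), while /mnt/diskN/... goes straight to one array disk. The hypothetical helper below classifies a host path that way before you map it into the container. It only inspects the path string and assumes the standard Unraid mount layout; pools and other mounts simply come back as "other".

```python
import re
from pathlib import PurePosixPath


def unraid_path_kind(path: str) -> str:
    """Classify a host path by how Unraid serves it (standard mounts assumed)."""
    parts = PurePosixPath(path).parts  # e.g. ('/', 'mnt', 'disk1', 'minio')
    if len(parts) < 3 or parts[:2] != ("/", "mnt"):
        return "other"
    top = parts[2]
    if top in ("user", "user0"):
        # User shares are assembled by shfs, i.e. they go through FUSE.
        return "shfs (FUSE user share)"
    if re.fullmatch(r"disk\d+", top):
        # A single array disk, accessed without the FUSE layer.
        return "direct (single array disk)"
    return "other"
```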

-

Welcome Eli!

-

It's hard to do much with a 750 with 2GB. Your options are a coin with a small DAG file (UBIQ) or a coin without a DAG file, like TON. T-Rex doesn't support either of those, however.

-

You are correct; I was having a look at the same time. Tag cuda10-latest works fine, but 4.2 and latest give the autotune error. My config had both lhr-autotune-interval and lhr-autotune-step-size set to "0", which are both invalid. I also don't use autotune, but it was set to auto ("-1") from the default config that I used. I set the following in my config to resolve it:

"lhr-autotune-interval" : "5:120",
"lhr-autotune-mode" : "off",
"lhr-autotune-step-size" : "0.1",

For those that do use autotune, you should leave "lhr-autotune-mode" at "-1". Thanks for the update and for having a look. Perhaps this will also help someone else 🙂
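Applying those three settings to an existing config can be scripted. A minimal sketch, assuming the T-Rex config is a flat JSON object and that the key names and values are exactly the ones quoted above; the file name config.json is a placeholder, and the "0" check only mirrors the invalid values described in this post, not T-Rex's own validation.

```python
import json
from pathlib import Path

# Settings quoted in the post for rigs that do not use LHR autotune.
NO_AUTOTUNE = {
    "lhr-autotune-interval": "5:120",
    "lhr-autotune-mode": "off",
    "lhr-autotune-step-size": "0.1",
}


def zero_autotune_keys(cfg: dict) -> list:
    """Return autotune keys set to "0", the values the post reports as invalid."""
    keys = ("lhr-autotune-interval", "lhr-autotune-step-size")
    return [k for k in keys if str(cfg.get(k)) == "0"]


def patch_config(path: Path) -> dict:
    """Overwrite the autotune keys in a JSON config file, keeping other keys."""
    cfg = json.loads(Path(path).read_text())
    cfg.update(NO_AUTOTUNE)
    Path(path).write_text(json.dumps(cfg, indent=4))
    return cfg
```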

-

FYI, on the latest tag just pushed, I'm now getting:

ERROR: Can't start T-Rex, LHR autotune increment interval must be positive

Cheers!

-

Can you remove "--runtime=nvidia" from Extra Parameters? It should then start without the runtime error. See where that gets you... Beside the point, though, there should be no reason that you need to use GUI mode to get Plex going. If I were you, I'd edit the container's Preferences.xml file and remove PlexOnlineToken, PlexOnlineUsername and PlexOnlineMail to force the server to be claimed the next time you start the container. You can find the server configuration file under \appdata\plex\Library\Application Support\Plex Media Server\Preferences.xml
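Removing those three entries can also be done with a short script instead of a text editor. A minimal sketch, assuming the settings are stored as attributes on the root Preferences element of Preferences.xml and that the container is stopped first so Plex doesn't rewrite the file; back the file up before running it. The function names are hypothetical.

```python
import xml.etree.ElementTree as ET

# Attributes named in the post; removing them forces the server to be claimed again.
CLAIM_ATTRIBUTES = ("PlexOnlineToken", "PlexOnlineUsername", "PlexOnlineMail")


def strip_claim(root: ET.Element) -> list:
    """Remove claim attributes from the root element; return the ones removed."""
    removed = []
    for name in CLAIM_ATTRIBUTES:
        if root.attrib.pop(name, None) is not None:
            removed.append(name)
    return removed


def unclaim_preferences_file(path: str) -> list:
    """Edit Preferences.xml in place (stop the container and back it up first)."""
    tree = ET.parse(path)
    removed = strip_claim(tree.getroot())
    tree.write(path, encoding="utf-8", xml_declaration=True)
    return removed
```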

-

Warning: Unraid Servers exposed to the Internet are being hacked

sreknob replied to jonp's topic in Announcements

This would be a really nice addition!

-

Reallocated sectors are bad sectors that the drive has successfully remapped to spare sectors. As a gross over-simplification, a rising count (especially if it has risen more than once recently) indicates that the disk is likely to fail soon. Your SMART report shows that you have been slowly accumulating uncorrectable errors on that drive for almost a year. Although technically you could rebuild onto that disk, it has a high chance of dropping out again. I would vote to just replace it!
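The "count going up recently" rule of thumb can be expressed as a check over a history of SMART readings. This is a hypothetical sketch: the readings would come from repeated SMART reports (for example, the raw value of attribute 5, Reallocated_Sector_Ct), and the 30-day window and "more than one increase" threshold are illustrative choices, not an established standard.

```python
from datetime import date, timedelta
from typing import List, Tuple

Reading = Tuple[date, int]  # (report date, raw Reallocated_Sector_Ct value)


def recent_increases(readings: List[Reading], today: date, window_days: int = 30) -> int:
    """Count how many times the count rose between readings inside the window."""
    ordered = sorted(readings)
    cutoff = today - timedelta(days=window_days)
    return sum(
        1
        for (_, before), (when, after) in zip(ordered, ordered[1:])
        if after > before and when >= cutoff
    )


def looks_like_failing(readings: List[Reading], today: date, window_days: int = 30) -> bool:
    # Hypothetical threshold: more than one increase in the window is a red flag.
    return recent_increases(readings, today, window_days) > 1
```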

-

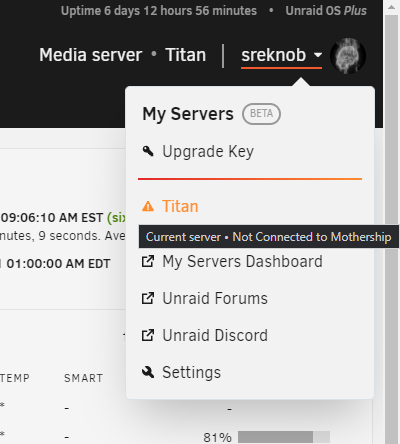

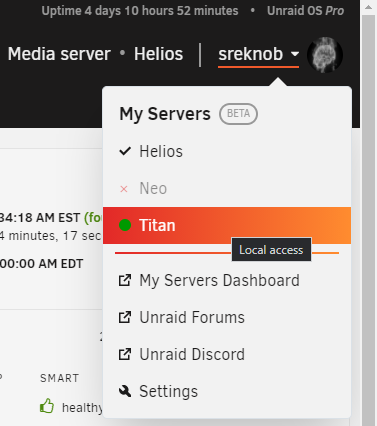

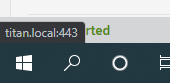

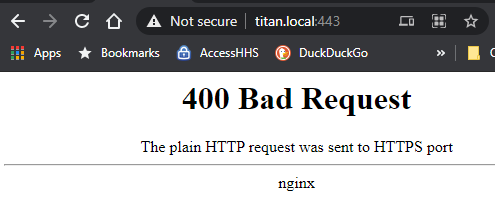

Having the same issue with one server not connected to the mothership. The funny thing is that it works from the "My Servers" webpage, but when I try to launch it from another server, I have a different problem. It tries to open the page over HTTP (no S) to the local hostname at port 443, so I get a 400 (plain HTTP sent to the HTTPS port: http://titan.local:443). See the screenshots and let me know if you want any more info!

The menu on the other server looks normal, but the link doesn't work as it should: it launches http://titan.local:443 instead of https://hash.unraid.net. So when I select it, I get a 400, but everything launches fine from the webUI, which opens hash.unraid.net properly!

EDIT1: The mothership problem was fixed with `unraid-api restart` on that server, but the incorrect address was not.

EDIT2: Restarting the API on the server providing the improper link corrected the second issue, and everything is working properly now. Something wasn't updating the newly provisioned link back to that server from the online API.
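The 400 follows from the URL itself: the link pairs the http scheme with port 443, the default HTTPS port, so a plain-HTTP request lands on a TLS listener. A small hypothetical check like the one below flags that combination; it only parses the URL and makes no network request.

```python
from urllib.parse import urlsplit

DEFAULT_PORTS = {"http": 80, "https": 443}


def scheme_port_mismatch(url: str) -> bool:
    """True if the URL uses the other scheme's default port (e.g. http on 443)."""
    parts = urlsplit(url)
    if parts.port is None:
        return False  # no explicit port, so the scheme's own default applies
    other = {"http": "https", "https": "http"}.get(parts.scheme)
    return other is not None and parts.port == DEFAULT_PORTS[other]
```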

-

Sorry I'm late getting back. I just used unBalance and moved on! unBalance shows my cache drive and allows me to move to/from it without issues, so not sure why yours is missing it @tri.ler Seeing that both of us had this problem with the same plex metadata files, I think it must be a mover issue. @trurl I didn't see anything in my logs either, other than the file not found/no such file or directory errors. My file system was good. I'm happy to try and let mover give it another go to try to reproduce, but to what end if that is the only error? Let me know if I can help test any hypotheses...

-

Just throwing an idea out there regarding the VM config that came to mind as I read this thread... feel free to entirely disregard. I'm not sure how the current VM XML is formed in the webUI, but could this be improved by either:
1) allowing tags that prevent the UI from updating certain XML elements, or
2) parsing the current XML config and only updating the changed elements when you click Apply in the webUI?
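Option 2 could look roughly like the sketch below: start from the existing domain XML, apply only the values the form actually changed, and leave every other element (including hand edits) untouched. This is a hypothetical illustration, not how the webUI builds its XML; the form is modelled as a mapping from ElementTree paths to new text values, and the function name is invented.

```python
import xml.etree.ElementTree as ET
from typing import Dict


def apply_changes(current_xml: str, form_values: Dict[str, str]) -> str:
    """Update only the elements whose text differs from the form's value.

    form_values maps an ElementTree path relative to the root (e.g. "memory")
    to the text the UI wants it to have. Unmentioned elements are preserved.
    """
    root = ET.fromstring(current_xml)
    for path, value in form_values.items():
        elem = root.find(path)
        if elem is None:
            continue  # this sketch does not create missing elements
        if (elem.text or "") != value:
            elem.text = value  # attributes such as unit='KiB' are kept
    return ET.tostring(root, encoding="unicode")
```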

-

Certificate Provision Issue: DNS Rebinding in UDM Pro?

sreknob replied to takkkkkkk's topic in General Support

FWIW, I'm running two unRAID servers behind a UDMP right now with this working properly. I'm on 1.8.6 firmware, with no DNS modifications, using my ISP's DNS. The first server worked right away when I set it up. The second one was giving me the same error as you yesterday but provisioned fine today.

-

@limetech thanks so much for addressing some of the potential security concerns. I think that despite this, there still needs to be a BIG RED WARNING that port forwarding will expose your unRAID GUI to the general internet, and also a BIG RED WARNING about the recommended complexity of your root password in this case. One way to facilitate this might be to require entering your root password to turn on the remote webUI feature, and/or to show a password complexity meter and/or require a minimum complexity before it can be enabled. Because most people will think that they can access their server from their forum account, they might assume that this is the only way to access their webUI, rather than directly via their external IP. Having 2FA on the webUI would be SUPER nice also 🙂 Yes, this is a little onerous, but it's probably what is required to prevent a large volume of "my server has been hacked" posts around here...
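A password requirement gate like the one suggested could start as simply as the check below. A minimal sketch with a hypothetical policy (at least 12 characters and at least three of four character classes); the thresholds are illustrative, and a real strength meter would usually also check against lists of common passwords.

```python
import string


def meets_policy(password: str, min_length: int = 12, min_classes: int = 3) -> bool:
    """Hypothetical policy check before allowing the remote webUI to be enabled."""
    classes = [
        any(c.islower() for c in password),
        any(c.isupper() for c in password),
        any(c.isdigit() for c in password),
        any(c in string.punctuation for c in password),
    ]
    return len(password) >= min_length and sum(classes) >= min_classes
```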