UhClem

Members | Posts: 282

Everything posted by UhClem

-

Parity Errors then an Unexplained Parity Drive Error

UhClem replied to geofbennett's topic in Storage Devices and Controllers

I don't think it's a cable problem. There are (suggestive) indications in the syslog that your Disk3 problem is due to a flaky SATA port (ata6) on your motherboard (chipset). I would swap connections at the motherboard between Disk3 and another DiskN. If the problems DO "transfer" to DiskN (and stay on ata6), that eliminates Disk3 and its cable, and nails it to the board. If not, ... Disclaimer: not an Unraid user (I just like fun problems).

Odd ... Your reported symptoms (only writing is slow, at 10-20 MB/sec) are precisely what you'd expect from a drive with write-caching disabled. But there's no arguing with your test result (-W saying write-caching is ON) [assuming that /dev/sdb IS one of the two slowpokes]. Back to "Plan A" ... maybe Squid & others can see something in your Diagnostics [upload].

-

You need to enable write cache on those drives. See man hdparm (the -W option).
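For checking several drives at once, here is a minimal Python sketch that lists the drives whose write cache is reported as off. It assumes hdparm's usual `-W` report format (a `/dev/sdX:` header followed by a line like ` write-caching =  1 (on)`); the function names and sample text are hypothetical. The actual fix is still `hdparm -W1 /dev/sdX`, per man hdparm.

```python
import re

# Device header line ("/dev/sdb:") and status line (" write-caching =  1 (on)"),
# assuming the usual output of `hdparm -W <device> [<device> ...]`.
DEVICE_RE = re.compile(r"^(/dev/\S+):\s*$")
WCACHE_RE = re.compile(r"^\s*write-caching\s*=\s*(\d)")


def write_cache_status(hdparm_output: str) -> dict[str, bool]:
    """Map each device in `hdparm -W` output to True (cache on) or False (off)."""
    status: dict[str, bool] = {}
    device = None
    for line in hdparm_output.splitlines():
        if m := DEVICE_RE.match(line):
            device = m.group(1)
        elif (m := WCACHE_RE.match(line)) and device is not None:
            status[device] = m.group(1) == "1"
    return status


def drives_needing_cache(hdparm_output: str) -> list[str]:
    """Devices reported with write cache off (candidates for `hdparm -W1`)."""
    return sorted(d for d, on in write_cache_status(hdparm_output).items() if not on)


if __name__ == "__main__":
    sample = "/dev/sdb:\n write-caching =  0 (off)\n\n/dev/sdc:\n write-caching =  1 (on)\n"
    print(drives_needing_cache(sample))
```

Lines such as "write-caching = not supported" carry no digit, so they are simply skipped rather than reported as off.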

-

Can't be two JMB585s without a PCIe switch (or a very bizarrely configured [chipset] M.2 slot with "self-bifurcation"). More likely ONE JMB585 and one JMB575 port multiplier. Cute, still ...

-

Adventures of building an external enclosure

UhClem replied to charlesshoults's topic in Storage Devices and Controllers

Depends where you buy them 😀 [Link] $22 vs $80 ?? ... [Inflation is everywhere. Is this the "exception that proves the rule"?]

New server any pitfalls with this hardware? Also Drive reocmndations?

UhClem replied to budgidiere's topic in Hardware

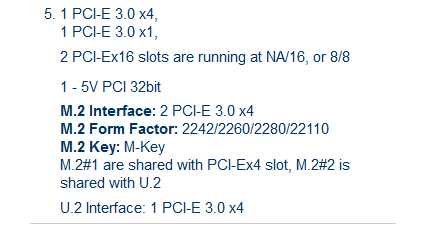

Yes. In addition to offering x16/x0 or x8/x8, it even provides for bifurcating the (2nd) x8 to x4/x4, allowing 2 NVMe drives to feed off that slot. There is also an x4 (x16 mechanical) slot (plus 2/3 x1 slots). But it's not a budget mobo (like the MSI one); I'd expect that a mobo with similar functionality and flexibility could be found at an intermediate price point. I'm not familiar with the MB scene nowadays, so I have no recommendation.

New server any pitfalls with this hardware? Also Drive reocmndations?

UhClem replied to budgidiere's topic in Hardware

I completely agree ... but (for a card with 2 SAS ports) ONLY if the HBA is given 8 lanes AND is never used with an expander [i.e., max # of drives is 8]. Given that the proposed case has 15 x 3.5" bays, I want to allow for growing into that. [Also, given that case, I'm confused by OP's OP ??] I see 2 GPUs in that list, plus mention of a 10G NIC. Also, looking to the future, it's a good idea to provide for additional NVMe drives; they're a real boon for speeding up many workflow scenarios. Sounds like a tight "budget" for slots and lanes. Toward these ends, I would want to use a Gen3 HBA, since it offers the option of 3200+ MB/s bandwidth using ONLY 4 lanes; and Gen3/SAS2 HBAs are only a few $ more than Gen2/SAS2 these days [frugality ON].

New server any pitfalls with this hardware? Also Drive reocmndations?

UhClem replied to budgidiere's topic in Hardware

Don't get a mobo with all 16 (CPU) PCIe lanes going to a single x16 slot. Look for boards that (at least) offer the option of (2 slots as) x16/x0 OR x8/x8. Also, don't waste potential bandwidth by using Gen2 HBAs in a Gen3 ecosystem.

You do NOT want a mobo with a PCIe config like that MSI you linked. It has all 16 of the CPU's PCIe lanes going to ONE of its x16 slots. (The other two x16-wide slots, one with x4 lanes & the other with a single x1 lane, are connected to the chipset.) Nothing wrong with the Z390 chipset itself, but look for a mobo that allocates the CPU's 16 lanes between two slots, either as x16/x0 (when the 2nd slot is empty) OR as x8/x8 (when both are occupied). Even better (though I've never seen it!!) would be three CPU-connected slots, configurable as x16/x0/x0, x8/x8/x0, OR x8/x4/x4.

-

Yeah, that's why I put "shares" in quotes, since that is the word Supermicro uses on their X11SCA product page [Link] (see attached). It is really either/or. [I.e., one will be disabled.]

-

Note that you are going to lose your M.2 #1 (it "shares" the x4 slot). See your MB manual.

-

The (unique/specific) C246 chipset on your own [3Server] motherboard is flaky. (You should get a direct replacement.) The best readily available documentation of the issue is the output of:

    grep -e "ATA-10" -e "AER: Corr" -e "FPDMA QUE" -e "USB disc" syslog.txt

Use the syslog.txt from the 20210930-0723 .zip file; it has all 4 HDDs throwing errors. The "ATA-10" pattern just documents which HDD is ata[1357].00. I'm pretty sure there are also relevant NIC errors in there, but I'm networking-ignorant. Note that all of these errors emanate from devices on the C246. Please examine a syslog.txt from your test run on your Gen10 MS+; that box also uses the C246, but its syslog.txt will have none of these errors. Attached is the output of the above command (filtered through uniq -c, for brevity): c246.txt
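As a hedged alternative summary, here is a minimal Python sketch that tallies the same four grep patterns, plus a per-port count showing which ataN ports the FPDMA errors land on. This is a per-pattern tally, not a reproduction of uniq -c (which only collapses adjacent identical lines). The function names are hypothetical, and the `ataN.NN:` prefix is assumed from typical libata kernel messages.

```python
import re
from collections import Counter
from typing import Iterable

# Fixed strings from the grep command above (grep -e treats them as regexes,
# but none contain regex metacharacters, so a substring test is equivalent).
PATTERNS = ("ATA-10", "AER: Corr", "FPDMA QUE", "USB disc")

# libata device names as they typically appear in kernel messages: "ata3.00:" or "ata6:".
ATA_PORT_RE = re.compile(r"\b(ata\d+)(?:\.\d+)?:")


def tally_patterns(lines: Iterable[str], patterns=PATTERNS) -> Counter:
    """Count the lines containing each pattern (patterns with zero hits are kept)."""
    counts = Counter({p: 0 for p in patterns})
    for line in lines:
        for p in patterns:
            if p in line:
                counts[p] += 1
    return counts


def tally_ports(lines: Iterable[str], patterns=("FPDMA QUE",)) -> Counter:
    """Count error lines per libata port, to see whether errors stick to a port.

    "ATA-10" lines only identify drives, so they are left out by default.
    """
    counts = Counter()
    for line in lines:
        if any(p in line for p in patterns):
            m = ATA_PORT_RE.search(line)
            if m:
                counts[m.group(1)] += 1
    return counts
```

Usage: read the file once (`with open("syslog.txt", errors="replace") as f: lines = f.readlines()`) and pass the same list to both functions, since each one makes its own pass over the lines.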

-

Recommended controllers for Unraid

UhClem replied to JorgeB's topic in Storage Devices and Controllers

Understood. Given the ~20 (non-empty) reviews [mostly Russia/E. Eur], the product is as advertised and functions properly; the only negative appears to be the seller's packaging (no padding). I'm sure the community will welcome your report. Mazel tov.

Recommended controllers for Unraid

UhClem replied to JorgeB's topic in Storage Devices and Controllers

I have some 4TB drives that do 200 MB/s (typical 4TBs max out at ~150-160); typical 8TBs ~200; 12TBs ~240; 16TBs 260+. [Kafka wrote: "Better to have and not need, than to need and not have."] 170+ orders & 60+ reviews (@4.8/5) [for what it's worth ??]

Recommended controllers for Unraid

UhClem replied to JorgeB's topic in Storage Devices and Controllers

No!!!! NOT that card, unless you really want/need to restrict yourself to an x1 physical slot, which limits your total throughput to ~850 MB/s. If you have an x4 physical slot (which is at least x2 electrical), this one looks like an excellent value: https://www.aliexpress.com/item/4001269633905.html getting full PCIe3 x2 throughput of ~1700 MB/s (for < $30 USD).

Any pcie 3.0 x16 controllers?

UhClem replied to xxredxpandaxx's topic in Storage Devices and Controllers

As for the 7GB/s "estimate", note that it assumes that the 9300-8i itself actually has the muscle. It probably does, but it will likely be PCIe-limited to slightly less than 7.0 GB/s (ref: your x4 test, which got 3477 MB/s; i.e., < 7000/2). Interesting. The under-performance of my script suggests another deficiency of the PMC implementation (vs. LSI): my script uses hdparm -t, which does read()s of 2MB (which the kernel splits into multiple requests of at most 512KB to the device/controller). Recall that LSI graphic you included, which quantified the Databolt R/W throughput for different request sizes (the x-axis). There was a slight decrease in read throughput at larger request sizes (64KB-512KB). I suspect that an analogous graph for the PMC edge-buffering expander would show a more pronounced tail-off.
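To check the per-request cap on a given box, here is a small Python sketch. It assumes the cap is the one the kernel exposes in sysfs at `/sys/block/<dev>/queue/max_sectors_kb`, and it is a simplification: it only computes the minimum split, ignoring any merging or further splitting the block layer may do. The function names are hypothetical.

```python
import math
from pathlib import Path


def max_request_kb(dev: str, sysfs_root: str = "/sys/block") -> int:
    """Current per-request size cap (KB) for a block device such as 'sdb'."""
    return int(Path(sysfs_root, dev, "queue", "max_sectors_kb").read_text())


def requests_per_read(read_kb: int, max_kb: int) -> int:
    """Minimum number of device requests a single read of read_kb is split into."""
    return math.ceil(read_kb / max_kb)
```

For the 2 MB (2048 KB) reads described above and a 512 KB cap, `requests_per_read(2048, 512)` gives 4 requests per read().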

Weird drive bandwidth cap until reboot

UhClem replied to jbartlett's topic in Storage Devices and Controllers

Smells like SATA 1.

Any pcie 3.0 x16 controllers?

UhClem replied to xxredxpandaxx's topic in Storage Devices and Controllers

Try the B option. It might help, or not ... Devices (and buses) can act strangely when you push their limits. The nvmx script uses a home-brew prog instead of hdparm. Though I haven't used it myself, you can check out fio for doing all kinds of storage testing. I completely agree with you. I do not completely agree with this. I'll send you a PM.

Any pcie 3.0 x16 controllers?

UhClem replied to xxredxpandaxx's topic in Storage Devices and Controllers

Certainly ... but as an old-school hardcore hacker, I wonder if it could have been (at least a few %) better. I have to wonder if any very large, and very competent, potential customer (e.g., GOOG, AMZN, MSFT) did a head-to-head comparison between LSI & PMC before placing their 1000+ unit chip order. That lays the whole story out, with good quantitative details. I commend LSI. And extra credit for "underplaying" their hand. Note how they used "jumps from 4100 MB/s to 5200 MB/s" when their own graph plot clearly shows ~5600 (and that is ≈ your own 5520). I suspect that the reduction in read speed, but not write speed, is due to the fact that writing can take advantage of "write-behind" (similar to HDDs and OSes), but reading cannot do "read-ahead" (whereas HDDs and OSes can). Thanks for the verification.

Any pcie 3.0 x16 controllers?

UhClem replied to xxredxpandaxx's topic in Storage Devices and Controllers

You're getting there ... 😀 Maybe try a different testing procedure. See the attached script; I use variations of it for SAS/SATA testing. Usage:

    ~/bin [ 1298 ] # ndk a b c d e
    /dev/sda: 225.06 MB/s
    /dev/sdb: 219.35 MB/s
    /dev/sdc: 219.68 MB/s
    /dev/sdd: 194.17 MB/s
    /dev/sde: 402.01 MB/s
    Total = 1260.27 MB/s

ndk_sh.txt

Speaking of testing (different script though) ...

    ~/bin [ 1269 ] # nvmx 0 1 2 3 4
    /dev/nvme0n1: 2909.2 MB/sec
    /dev/nvme1n1: 2907.0 MB/sec
    /dev/nvme2n1: 2751.0 MB/sec
    /dev/nvme3n1: 2738.8 MB/sec
    /dev/nvme4n1: 2898.5 MB/sec
    Total = 14204.5 MB/sec
    ~/bin [ 1270 ] # for i in {1..10}; do nvmx 0 1 2 3 4 | grep Total; done
    Total = 14205.8 MB/sec
    Total = 14205.0 MB/sec
    Total = 14207.5 MB/sec
    Total = 14205.8 MB/sec
    Total = 14203.3 MB/sec
    Total = 14210.6 MB/sec
    Total = 14207.0 MB/sec
    Total = 14208.0 MB/sec
    Total = 14203.4 MB/sec
    Total = 14201.9 MB/sec
    ~/bin [ 1271 ] #

PCIe3 x16 slot [on HP ML30 Gen10, E-2234 CPU], nothing exotic.
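When comparing runs like these, it can help to parse the per-device lines and repeated totals. Here is a minimal Python sketch that assumes only the output format shown above (`/dev/xxx: N MB/s` or `MB/sec`, and `Total = N ...`). It is not the ndk or nvmx script itself, and the function names are hypothetical.

```python
import re
import statistics

# Per-device lines, e.g. "/dev/sda: 225.06 MB/s" or "/dev/nvme0n1: 2909.2 MB/sec".
DEV_RE = re.compile(r"^(/dev/\S+):\s+([\d.]+)\s+MB/s(?:ec)?\s*$")
# Summary lines, e.g. "Total = 14205.8 MB/sec".
TOTAL_RE = re.compile(r"^Total\s*=\s*([\d.]+)\s+MB/s(?:ec)?\s*$")


def per_device(output: str) -> dict[str, float]:
    """Map each device to its reported MB/s; prompt lines and totals are ignored."""
    return {m.group(1): float(m.group(2))
            for line in output.splitlines()
            if (m := DEV_RE.match(line.strip()))}


def totals(output: str) -> list[float]:
    """All 'Total = ...' values, in order of appearance."""
    return [float(m.group(1))
            for line in output.splitlines()
            if (m := TOTAL_RE.match(line.strip()))]


def summarize(values: list[float]) -> dict[str, float]:
    """Mean, min, max and run-to-run spread (as % of the mean) of repeated totals."""
    lo, hi = min(values), max(values)
    mean = statistics.mean(values)
    return {"mean": mean, "min": lo, "max": hi, "spread_pct": 100 * (hi - lo) / mean}
```

For the ten x16 totals above, min is 14201.9 and max is 14210.6 MB/sec, a spread of roughly 0.06% of the mean. In other words, the measurement is very repeatable.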

Any pcie 3.0 x16 controllers?

UhClem replied to xxredxpandaxx's topic in Storage Devices and Controllers

Excellent evidence! But, to me, it's very disappointing that the implementations of this feature (both LSI & PMC, apparently) are this sub-optimal. Probably a result of cost/benefit analysis with regard to SATA users (the peasant class: "Let them eat cake."). Also surprising that this hadn't come to light previously. Speaking of the LSI/PMC thing ... Intel's SAS3 expanders (such as the OP's) are documented, by Intel, to use PMC expander chips. How did you verify that your SM backplane actually uses an LSI expander chip? (I could not find anything from Supermicro themselves, and I'm not confident relying on a "distributor" website.) Do any of the sg_ utils expose that detail? The reason for my "concern" is that the coincidence of the OP's & your results, with the same 9300-8i and the same test (Unraid parity check) [your 12*460 ≈ OP's 28*200] but different??? expander chips, is curious.

Any pcie 3.0 x16 controllers?

UhClem replied to xxredxpandaxx's topic in Storage Devices and Controllers

Please keep things in context. OP wrote: Since the OP seemed to think that an x16 card was necessary, I replied: And then you conflated the limitations of particular/"typical" PCIe3 SAS/SATA HBAs with the limits of the PCIe3 bus itself. In order to design/configure an optimal storage subsystem, one needs to understand, and differentiate, the limitations of the PCIe bus from the designs, and shortcomings, of the various HBA (& expander) options. If I had a single PCIe3 x8 slot and 32 (fast enough) SATA HDDs, I could get 210-220 MB/sec on each drive concurrently. For only 28 drives, 240-250. (Of course, you are completely free to doubt me on this ...) And, two months ago, before prices of all things storage got crazy, HBA + expansion would have cost < $100. ===== Specific problems warrant specific solutions. Eschew mediocrity.
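The per-drive figures follow from dividing a real-world PCIe3 x8 budget of ~7000 MB/sec (the figure used elsewhere in this thread) among the drives. Here is a sketch of that arithmetic as a max-min fair ("progressive filling") allocation, so that drives slower than their fair share hand their leftover to the others. This is an idealized assumption; a real HBA/expander does not necessarily arbitrate this fairly. The function name is hypothetical.

```python
def max_min_fair(capacity: float, demands: list[float]) -> list[float]:
    """Split a shared link's capacity across concurrent streams, max-min fairly.

    Streams are served from the smallest demand up: each one gets the lesser of
    its own demand and an equal share of whatever capacity is still unassigned.
    """
    alloc = [0.0] * len(demands)
    remaining = capacity
    order = sorted(range(len(demands)), key=lambda i: demands[i])
    for k, i in enumerate(order):
        share = remaining / (len(demands) - k)
        alloc[i] = min(demands[i], share)
        remaining -= alloc[i]
    return alloc
```

With 32 drives that could each do 260 MB/sec on a 7000 MB/sec link, every drive gets 7000/32 = 218.75; with 28 drives, 250 each. Both match the 210-220 and 240-250 estimates above.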

Any pcie 3.0 x16 controllers?

UhClem replied to xxredxpandaxx's topic in Storage Devices and Controllers

In my direct, first-hand experience, it is 7100+ MB/sec. (I also measured 14,200+ MB/sec on PCIe3 x16.) I used a PCIe3 x16 card supporting multiple (NVMe) devices [in an x8 slot for the first measurement]. [Consider: a decent PCIe3 x4 NVMe SSD can attain 3400-3500 MB/sec.] That table's "Typical" #s factor in an excessive amount of transport-layer overhead. I'm pretty certain that the spec for SAS3 expanders eliminates the (SAS2) "binding" of link speed to device speed. I.e., Databolt is just marketing. Well, that's two tests of the 9300, with different dual-link SAS3 expanders and different device mixes, that are both capped at ~5600 ... prognosis: muscle deficiency [in the 9300].
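To put those measurements next to the spec, here is a small Python sketch that computes PCIe link bandwidth after line encoding (8b/10b for Gen1/2, 128b/130b for Gen3/4) but before packet/protocol overhead, and compares measured throughput against it. The 3450 MB/sec x4 figure is an assumed midpoint of the 3400-3500 range quoted above; the function names are hypothetical.

```python
# Per-lane transfer rate (GT/s) and line-code efficiency by PCIe generation.
PCIE_GENS = {
    1: (2.5, 8 / 10),      # 8b/10b
    2: (5.0, 8 / 10),      # 8b/10b
    3: (8.0, 128 / 130),   # 128b/130b
    4: (16.0, 128 / 130),  # 128b/130b
}


def raw_mb_s(gen: int, lanes: int) -> float:
    """Post-encoding bandwidth in MB/s (10**6 bytes/s), before TLP/DLLP overhead."""
    gt_s, efficiency = PCIE_GENS[gen]
    return gt_s * 1000 * efficiency / 8 * lanes


def link_efficiency(measured_mb_s: float, gen: int, lanes: int) -> float:
    """Fraction of the post-encoding bandwidth that a measurement achieved."""
    return measured_mb_s / raw_mb_s(gen, lanes)


if __name__ == "__main__":
    for label, mb_s, lanes in [("x4 NVMe SSD", 3450, 4), ("x8", 7100, 8), ("x16", 14200, 16)]:
        print(f"{label:12s} {mb_s:6d} of {raw_mb_s(3, lanes):7.0f} MB/s = "
              f"{link_efficiency(mb_s, 3, lanes):.0%}")
```

Gen3 works out to about 985 MB/s per lane, so the 7100 (x8) and 14,200 (x16) measurements both land at roughly 90% of the post-encoding figure. The remainder is presumably mostly packet overhead.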

Any pcie 3.0 x16 controllers?

UhClem replied to xxredxpandaxx's topic in Storage Devices and Controllers

It looks to me like you are not limited by PCIe bandwidth. PCIe Gen3 @ x8 is good for (real-world) ~7000 MB/sec. If you are getting a little over 200 MB/sec each for 28 drives, that's ~6000 MB/sec. (You are obviously using a dual-link HBA<==>expander connection, which is good for >> 7000 [9000].) Either your 9300 does not have the muscle to exceed 6000, or you have one (or more) drives that are stragglers, handicapping the (parallel) parity operation. (I'm assuming you are not CPU-limited; I don't use Unraid.)
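Why a single straggler matters so much can be shown with a deliberately simplified Python model. It is an assumption, not Unraid's actual scheduling: every drive must deliver the same stripe before the check advances, so the slowest drive paces all of them, and the shared link (or HBA) caps the sum. The drive speeds in the example are hypothetical.

```python
def lockstep_throughput(drive_mb_s: list[float], link_mb_s: float) -> tuple[float, float]:
    """Per-drive and aggregate rate when all drives must advance together.

    The slowest drive sets the pace for every drive, and the shared link caps
    the total at link_mb_s.
    """
    n = len(drive_mb_s)
    per_drive = min(min(drive_mb_s), link_mb_s / n)
    return per_drive, per_drive * n
```

With 28 drives at a hypothetical 215 MB/sec, the check would run at 28 × 215 = 6020 MB/sec, under the ~7000 link budget. Make just one of them a 150 MB/sec straggler, and the whole check drops to 28 × 150 = 4200 MB/sec, with the link nowhere near its limit.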