IronBeardKnight

Everything posted by IronBeardKnight

-

This does not seem to be a profile-specific issue on my end. It looks like a bug to me, or incorrect use of regex or something similar, as I'm not allowed to keep just one backup even with a custom profile.

-

I believe the issue could be on line 299 of https://github.com/JTok/unraid.vmbackup/blob/v0.2.2/source/Vmbackup1Settings.page, although that pattern string does not make much sense to me anyway. Would ^(0|([1-9]))$ not work?
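A quick way to sanity-check the suggested pattern outside the plugin is to run it against a few candidate values. This is a minimal Python sketch. It does not reproduce the plugin's actual pattern from line 299, which is not quoted here, and it assumes the field is validated as a whole-value match, the way an HTML `pattern` attribute is. The `accepts` helper is a made-up name.

```python
import re

# The pattern suggested in the post. HTML `pattern` attributes must match the
# whole value, so re.fullmatch is used to mimic that behaviour.
SUGGESTED_PATTERN = r"^(0|([1-9]))$"


def accepts(value: str, pattern: str = SUGGESTED_PATTERN) -> bool:
    """Return True if the whole input value matches the pattern."""
    return re.fullmatch(pattern, value) is not None


if __name__ == "__main__":
    for value in ["0", "1", "2", "9", "10", "01", ""]:
        print(f"{value!r}: {accepts(value)}")
```

Note that this pattern only accepts a single digit, so it would also reject 10 or more copies. If larger counts are needed, something like `^(0|[1-9][0-9]*)$` would allow them.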

-

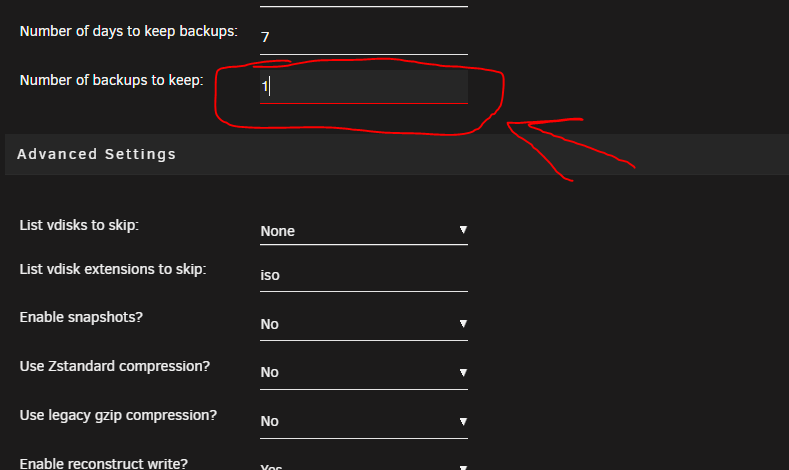

I have discovered what I think may be a bug with this plugin. I'm sure it could be a simple fix, but it's possible I'm missing something. Using 2 for this field lets the plugin work as expected, but for some reason I cannot select 1 for the number of backup copies. I only want one backup, as I use other tools to ship an encrypted copy offsite. As the screenshot shows, the field turns red and will not let you apply 1, even though the VM only has 1 vdisk. You cannot proceed and press Apply while it's red.

-

It almost seems like if you do a clean docker pull from scratch it works, but as soon as the container is restarted by automation it breaks. It feels like permissions or something similar are being changed for this container by the system.
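One way to test the permissions theory is to record ownership and mode bits under the container's appdata folder before an automated restart and compare them afterwards. This is a minimal Python sketch. The script name and the appdata path you pass in are placeholders, and it only reports changes to paths that existed in the first snapshot.

```python
import json
import os
import stat
import sys


def snapshot(root: str) -> dict:
    """Map every path under root (including root itself) to (uid, gid, mode)."""
    st = os.lstat(root)
    result = {".": (st.st_uid, st.st_gid, stat.S_IMODE(st.st_mode))}
    for dirpath, dirnames, filenames in os.walk(root):
        for name in dirnames + filenames:
            path = os.path.join(dirpath, name)
            st = os.lstat(path)
            rel = os.path.relpath(path, root)
            result[rel] = (st.st_uid, st.st_gid, stat.S_IMODE(st.st_mode))
    return result


def diff(before: dict, after: dict) -> list:
    """Return (path, old, new) for paths whose owner, group or mode changed."""
    changes = []
    for path, old in before.items():
        new = after.get(path)
        # JSON round-trips tuples as lists, so normalise before comparing.
        if new is not None and tuple(new) != tuple(old):
            changes.append((path, tuple(old), tuple(new)))
    return changes


def describe(entry) -> str:
    uid, gid, mode = entry
    return f"{uid}:{gid} {oct(mode)}"


if __name__ == "__main__":
    # Usage: python perm_snapshot.py save|compare <appdata_dir> <state.json>
    action, root, state_file = sys.argv[1:4]
    if action == "save":
        with open(state_file, "w") as fh:
            json.dump(snapshot(root), fh)
    else:
        with open(state_file) as fh:
            before = json.load(fh)
        for path, old, new in diff(before, snapshot(root)):
            print(f"{path}: {describe(old)} -> {describe(new)}")
```

Run it with `save` before the scheduled restart and `compare` after it. Any line printed points at a file or folder whose owner or mode changed in between.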

-

I was also getting this issue when enabling VPN (set to yes) on the latest tag: I lose all access to the GUI. Rolling back as per the previous posts got me back up and running, though obviously that is not a full solution. I found this in the supervisord.log: Edit: I found that this still did not fix the issue. After a CA Backup run, the container auto-started again and I was back to no GUI and the error above. Please help.

-

Problems with appdata rights since 6.10

IronBeardKnight replied to Retrogamer137's topic in Docker Engine

Also experiencing strange appdata behaviour, with Docker containers losing their permissions.

-

(SOLVED) 6.10-rc2 Dockers permission to appdata folder

IronBeardKnight replied to Bouxer's topic in General Support

Yes, please do tell.

-

Dashboard crash on 6.10.1 - GUI not working anymore

IronBeardKnight commented on Zonediver's report in Stable Releases

Also having this same UI error. I'm also having some very strange cache issues. Further testing is needed on my end, but the problems only started after the update. Hardware is not the issue.

-

The 6.10 release should be backwards compatible, no? It's always better to develop on the latest main. I will look into these links in a bit.

-

If I get some time over the next few days I'll give it a shot. Otherwise, our own or a derived version of the Active IO Streams or Open Files plugin would need to be created to keep track of accessed / last accessed files, which would give you your list.
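Short of a full plugin, a rough "last accessed" list can be pulled from file access times. This is a minimal Python sketch, not code from either plugin. It assumes the filesystem actually records access times: mounts using `noatime` will not update them, and `relatime` limits how often they are updated, which is one reason a dedicated tracker would be more reliable. The function and script names are made up.

```python
import os
import time
from typing import List, Optional, Tuple


def recently_accessed(root: str, within_seconds: float,
                      now: Optional[float] = None) -> List[Tuple[str, float]]:
    """Return (path, atime) for files under root whose last access time falls
    within the window, most recently accessed first."""
    now = time.time() if now is None else now
    hits = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            atime = os.stat(path).st_atime
            if now - atime <= within_seconds:
                hits.append((path, atime))
    hits.sort(key=lambda item: item[1], reverse=True)
    return hits


if __name__ == "__main__":
    import sys
    # Usage: python recent.py <share_dir> [days]
    days = float(sys.argv[2]) if len(sys.argv) > 2 else 7
    for path, atime in recently_accessed(sys.argv[1], days * 86400):
        print(time.strftime("%Y-%m-%d %H:%M", time.localtime(atime)), path)
```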

-

Mmm, everything is possible with time. However, I don't believe there has been significant need or demand for something like this yet, as what we have currently caters for 95% of situations. That said, I have come across a couple of situations where temporarily delayed moving at a file/folder level would have been good.

So to restate your request: you basically want a timer option that can be set per file/folder/share?

As for getting this done, the best starting point would be to modify the current "File list path" option to do what you want. For example, you would add your file/folder locations as normal, followed by a space and a duration (5d, 7h, 9m, 70s). That would be how long the entry stays on the cache when the parent share folder is set to cache Yes. The code would need to change from "looped if (exists)" to something like "looped if (exists && current date < $variableFromLine)". Not actual code, but you get the hint.

The problem with this is that the script now has to store the date and time each listed folder/file arrived on the cache, and not just per line but for every child file/folder beneath it, giving each one an individual ID and time to reference. You can imagine how fast this grows in compute and I/O for the mover, not to mention the need for a database. A mover database won't be as easy to implement as first thought with a bash script, which is what the mover runs as. It is a lot of extra coding and potentially edges on a complete mover redesign. I cannot see this being implemented from my point of view. @-Daedalus, thank you for raising it here though.

New idea!
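The per-entry timer idea can be sketched as follows. This is a hypothetical Python illustration, not the mover's bash code: the "path <number><unit>" line format follows the example in the post, `parse_entry` and `keep_on_cache` are made-up names, and the arrival time is passed in as an argument because storing it is exactly the open problem described above.

```python
import time
from typing import Optional, Tuple

# Seconds per unit for the suffixes used in the post's example (5d, 7h, 9m, 70s).
UNITS = {"d": 86400, "h": 3600, "m": 60, "s": 1}


def parse_entry(line: str) -> Tuple[str, Optional[int]]:
    """Split a hypothetical 'path 5d' line into (path, hold_seconds).

    Lines without a valid trailing duration are returned as (path, None),
    which keeps today's plain exclude-list behaviour.
    """
    line = line.rstrip()
    path, _, suffix = line.rpartition(" ")
    if path and suffix[:-1].isdecimal() and suffix[-1:] in UNITS:
        return path, int(suffix[:-1]) * UNITS[suffix[-1]]
    return line, None


def keep_on_cache(arrived_at: float, hold_seconds: Optional[int],
                  now: Optional[float] = None) -> bool:
    """Return True while an entry should stay on the cache.

    Mirrors the post's 'current date < $variableFromLine' test, with the
    deadline computed as arrival time + hold time. The 'exists' check is
    left to the caller.
    """
    if hold_seconds is None:
        return True  # no timer: excluded from the move indefinitely
    now = time.time() if now is None else now
    return now < arrived_at + hold_seconds
```

Splitting on the last space means paths that contain spaces still work, as long as they do not end in something that looks like a duration.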
What could potentially solve your issue, and would be very cool in nearly every case, is to take, for example, the code from the "Active-IO-Streams" and/or "Open-Files" plugins and modify it a little to tell the mover which files were most frequently accessed, by access count and time open. The mover could then be bi-directional, taking those frequently used files and moving them to the cache.

Having the option to automatically add those files to the mover's "exclude file list" option would also be great, as this stops the files from being moved back too soon if, like me, you have your mover run hourly or so. At the point of adding to the exclude list, each file and/or folder could be added automatically (basically using the txt file as a database). That would let you add a blanket timeframe to each entry for your original need, or, instead of a blanket timeframe, have the timeframe configure itself based on the usage of the file or folder, e.g. accessed > 9 times.

The mover then essentially becomes a proper bidirectional cache, and your system gets a little smarter by keeping what is accessed frequently on the faster drives. Again, though, this is basically a mover plugin redesign. I would be happy to help get something like this out the door, but as this is not my plugin and my time is limited, it's not my decision to make. @hugenbdd, not sure if you're down to go this far, but it's all future potential and ideas. Pardon the grammar and spelling, I was in a rush.
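The exclude-list half of this idea could start as small as the following: given a stream of observed file accesses from whatever tracker ends up being used, add anything accessed more than a threshold number of times to the exclude list. This is a hypothetical Python sketch. The function names, the threshold default of 9 and the "path 7d" entry format are illustrative assumptions, and the mover does not read hold times like this today.

```python
from collections import Counter
from typing import Iterable, List


def already_listed(path: str, lines: List[str]) -> bool:
    """True if path is already an entry, with or without a trailing hold time."""
    return any(line == path or line.startswith(path + " ") for line in lines)


def update_exclude_list(existing_lines: List[str], access_events: Iterable[str],
                        threshold: int = 9, hold: str = "7d") -> List[str]:
    """Append paths accessed more than `threshold` times to the exclude list.

    existing_lines: current entries of the exclude list text file.
    access_events:  one path per observed access (from some access tracker).
    hold:           optional timeframe suffix written after the path; "" for none.
    """
    counts = Counter(access_events)
    updated = list(existing_lines)
    # Most frequently accessed paths are considered (and appended) first.
    for path, count in counts.most_common():
        if count > threshold and not already_listed(path, updated):
            updated.append(f"{path} {hold}" if hold else path)
    return updated
```

Using the text file itself as the "database", as suggested, means a re-run only appends paths that are not already listed.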

-

Has anyone actually done a performance test of different file sizes using XFS vs Btrfs with the mover, to help speed things up? I know that XFS, while having fewer features, has better I/O in general on Linux. However, with the mover choking on large numbers of small files (e.g. a few KB each) on Btrfs, I wonder whether anyone has actually tested the move time/performance of the two filesystems for the purposes of the mover?
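One way to get some numbers is a small-file move benchmark run once per filesystem. This is a rough Python sketch, assuming you point it at one directory on the source pool and one on the destination disk (for example an XFS cache pool and then a Btrfs one). It times Python's own `shutil.move`, which copies and then deletes across filesystems, not the mover itself. Page-cache effects also mean results should be repeated and treated as indicative only. The script name is a placeholder.

```python
import os
import shutil
import sys
import tempfile
import time


def make_small_files(root: str, count: int, size_bytes: int) -> None:
    """Create `count` files of `size_bytes` random bytes each under root."""
    payload = os.urandom(size_bytes)
    for i in range(count):
        with open(os.path.join(root, f"file_{i:06d}.bin"), "wb") as fh:
            fh.write(payload)


def time_move(src: str, dst: str) -> float:
    """Move every file from src to dst one by one; return elapsed seconds."""
    start = time.perf_counter()
    for name in os.listdir(src):
        shutil.move(os.path.join(src, name), os.path.join(dst, name))
    return time.perf_counter() - start


if __name__ == "__main__":
    # Usage: python movebench.py <dir on source fs> <dir on target fs> [count] [size]
    src_parent, dst_parent = sys.argv[1], sys.argv[2]
    count = int(sys.argv[3]) if len(sys.argv) > 3 else 10000
    size = int(sys.argv[4]) if len(sys.argv) > 4 else 4096
    src = tempfile.mkdtemp(dir=src_parent)
    dst = tempfile.mkdtemp(dir=dst_parent)
    make_small_files(src, count, size)
    os.sync()  # flush writes so creation cost is not counted as move cost
    elapsed = max(time_move(src, dst), 1e-9)
    print(f"{count} x {size} B: {elapsed:.2f} s ({count / elapsed:.0f} files/s)")
```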

-

This is correct: it is used for keeping things on the cache (excluding them from the move).

Example situation: you have a share set to cache Yes. Data is read from and written to the cache until the criteria are met for the mover to run. The mover then runs and normally moves every bit of data under that share that is on the cache to the array. Let's say you have some sub-files or folders in that share that you would like to stay on the cache when the mover runs, so that applications that depend on that data can run faster. Having this option lets you create fewer shares and speeds up whatever applications you use it for.

For example, Nextcloud requires a share for user data, which includes docs, thumbnails, photos, etc. If you set that share to cache Yes, all the data that was once on the cache becomes very slow after the mover runs and moves it to the array, especially small files, as things like thumbnails now have to be read from the array instead of the cache. Enter this mover feature! It lets you find the thumbnail sub-sub-sub-folder, or whatever else you want, and set it to stay on the cache regardless of mover runs, while all the actual pictures, docs, etc. that are not specified still get moved to the array. This keeps your end-user experience nice and fast in the Nextcloud web UI, as your thumbnails stay cached, while letting you optimize your cache storage by having the huge files sit in slower storage, since they are not regularly accessed.

In summary, this mover feature allows for more granular cache control, cache space savings, better application/Docker performance, less mover run time, and faster game load times if you set assets, .exe files, etc. to stay on the cache.
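The rule described above, where anything under a listed folder stays on the cache and everything else in the share is moved, boils down to a prefix check on whole path components. This is a hypothetical Python sketch of that decision only, not the mover plugin's code. The `/mnt/cache/nc/...` paths in the test are made up for illustration.

```python
import posixpath
from typing import Iterable, List, Tuple


def split_for_mover(cached_files: Iterable[str],
                    exclude_list: Iterable[str]) -> Tuple[List[str], List[str]]:
    """Split cached file paths into (stay_on_cache, move_to_array).

    A file stays if it equals an excluded path or sits anywhere beneath an
    excluded folder; everything else would be moved.
    """
    excludes = [posixpath.normpath(p.strip()) for p in exclude_list if p.strip()]
    stay, move = [], []
    for path in cached_files:
        norm = posixpath.normpath(path)
        if any(norm == ex or norm.startswith(ex + "/") for ex in excludes):
            stay.append(path)
        else:
            move.append(path)
    return stay, move
```

Comparing against `ex + "/"` rather than a bare string prefix keeps a sibling folder such as `thumbs2` from matching an excluded `thumbs` folder.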

-

Check your share settings, as you may have had something going to the cache that has now been set to only use the array, so the files get left on the cache and never moved. The correct procedure when changing a share to no longer use the cache is always to stop whatever is feeding that share, run a full mover run, and then change the share setting to array only. I hope this helps.
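Before switching a share to array only, it can help to confirm that the mover really emptied the share's folder on the cache. This is a minimal Python sketch, assuming the default Unraid pool mount `/mnt/cache`. Pass a different root if your pool is named differently. The script name is a placeholder.

```python
import os
import sys
from typing import Iterator


def leftover_files(cache_root: str, share: str) -> Iterator[str]:
    """Yield every file still sitting on the cache for the given share.

    A share folder that does not exist on the cache simply yields nothing.
    """
    share_dir = os.path.join(cache_root, share)
    for dirpath, _dirnames, filenames in os.walk(share_dir):
        for name in filenames:
            yield os.path.join(dirpath, name)


if __name__ == "__main__":
    # Usage: python leftovers.py <share> [cache_root]
    share = sys.argv[1]
    cache_root = sys.argv[2] if len(sys.argv) > 2 else "/mnt/cache"
    files = list(leftover_files(cache_root, share))
    for path in files:
        print(path)
    print(f"{len(files)} file(s) left on cache for share '{share}'")
```

A count of zero after the full mover run suggests it is safe to change the setting.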

-

This is already a feature, via the exclude location list.

-

I have fixed this issue. For anyone wanting to know what the problem is: Step 1: Grafana. Step 2: Grafana: make sure this is your local IP if you're not exposing Unraid or Grafana to the internet and are keeping it local. Step 3: Grafana: confirm you have the correct encryption method selected (or no encryption, if you're running without it) and apply. Do this for every graph that uses the Unraid-API.

-

Still not able to see icons. Everything else is working, just not the icons. Linking back to that post on the first forum page does nothing; it does not explain what's going on or how to fix it.

-


6.9.2 broke my vm's with nvidia gpu's

IronBeardKnight replied to Brydezen's topic in VM Engine (KVM)

I believe, from memory, I just wiped the KVM image from Unraid but left the vdisk intact, then recreated the KVM and pointed it to the old disk, and it worked.

-

6.9.2 broke my vm's with nvidia gpu's

IronBeardKnight replied to Brydezen's topic in VM Engine (KVM)

Same for me; the server now has to be fully rebooted just to bring KVM back up. Did you guys get any updates on this?

-

I tried editing in GEN_SSL, but it just completely breaks the container.

-

Man, I'm having so much trouble with this container. With the .env trick you then get a chown error for it on the setup page, so chown the whole app directory, as the .env does not exist anymore. Woo hoo, one step further. Then, straight after submitting the setup page, a 500 error, and so many errors in the logs: child 37 said into stderr: "src/Illuminate/Pipeline/Pipeline.php(149): Illuminate\Cookie\Middleware\EncryptCookies->handle(Object(Illuminate\Http\Request), Object(Closure)) #36 etc etc. It would be so good if this worked properly. I'm not sure why this container self-generates an SSL cert through Let's Encrypt, as most people running the container will be using reverse proxies anyway.

-

[Support] selfhosters.net's Template Repository

IronBeardKnight replied to Roxedus's topic in Docker Containers

Currently the Invoiceninja Unraid container is not working at all, and there are no instructions for navigating the errors. It appears your Docker container is broken. Not only do you have to run php artisan migrate, but after you get the DB and everything set up for this, you run into the errors below, along with many more of the same type.

[15-Mar-2021 14:19:32] WARNING: [pool www] child 31 said into stderr: "[2021-03-15 04:19:32] production.ERROR: ***RuntimeException*** [0] : /var/www/app/vendor/turbo124/framework/src/Illuminate/Encryption/Encrypter.php [Line 43] => The only supported ciphers are AES-128-CBC and AES-256-CBC with the correct key lengths. {"context":"PHP","user_id":0,"account_id":0,"user_name":"","method":"GET","user_agent":"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/89.0.4389.86 Safari/537.36","locale":"en","ip":"192.168.1.14","count":1,"is_console":"no","is_api":"no","db_server":"mysql","url":"/"} []"

[15-Mar-2021 14:19:32] WARNING: [pool www] child 31 said into stderr: "[2021-03-15 04:19:32] production.ERROR: [stacktrace] 2021-03-15 04:19:32 The only supported ciphers are AES-128-CBC and AES-256-CBC with the correct key lengths.: #0 /var/www/app/vendor/turbo124/framework/src/Illuminate/Encryption/EncryptionServiceProvider.php(28): Illuminate\Encryption\Encrypter->__construct('7kg2Ca9E8BTaSa8...', 'AES-256-CBC') #1 /var/www/app/vendor/turbo124/framework/src/Illuminate/Container/Container.php(749): Illuminate\Encryption\EncryptionServiceProvider->Illuminate\Encryption\{closure}(Object(Illuminate\Foundation\Application), Array) #2 /var/www/app/vendor/turbo124/framework/src/Illuminate/Container/Container.php(631): Illuminate\Container\Container->build(Object(Closure)) #3 /var/www/app/vendor/turbo124/framework/src/Illuminate/Container/Container.php(586): Illuminate\Container\Container->resolve('encrypter', Array) #4 /var/www/app/vendor/turbo124/framework/src/Illuminate/Foundation/Application.php(732): Illu...
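This error comes from the Laravel-based framework checking the application key against the cipher. In Laravel 5.x the key must be exactly 16 bytes for AES-128-CBC or 32 bytes for AES-256-CBC, after decoding an optional `base64:` prefix, and the trace shows AES-256-CBC in use. The Python sketch below checks an `APP_KEY` value against that rule. It assumes the turbo124 fork behaves like upstream Laravel 5.x here, which is an assumption, and the helper names are made up.

```python
import base64
import secrets

# Key length in bytes that Laravel 5.x's Encrypter requires for each cipher;
# anything else triggers "The only supported ciphers are ...".
KEY_BYTES = {"AES-128-CBC": 16, "AES-256-CBC": 32}


def decode_app_key(app_key: str) -> bytes:
    """Mirror Laravel's APP_KEY handling: an optional 'base64:' prefix means
    the rest is base64-encoded; otherwise the raw string bytes are the key."""
    prefix = "base64:"
    if app_key.startswith(prefix):
        return base64.b64decode(app_key[len(prefix):])
    return app_key.encode("utf-8")


def key_is_valid(app_key: str, cipher: str = "AES-256-CBC") -> bool:
    """True if the decoded key has exactly the length the cipher needs."""
    expected = KEY_BYTES.get(cipher)
    return expected is not None and len(decode_app_key(app_key)) == expected


def new_app_key() -> str:
    """Return a random 32-character key (24 random bytes, URL-safe base64)."""
    return secrets.token_urlsafe(24)


if __name__ == "__main__":
    import sys
    key = sys.argv[1] if len(sys.argv) > 1 else ""
    print("valid" if key_is_valid(key) else f"invalid; try e.g. APP_KEY={new_app_key()}")
```

If the check fails for the key your container is using, setting `APP_KEY` to a fresh 32-character value is one thing to try on a fresh install (changing the key invalidates anything already encrypted with the old one). This is a suggestion based on the error message rather than a confirmed fix for this container.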