IronBeardKnight

Everything posted by IronBeardKnight

-

[Support] selfhosters.net's Template Repository

IronBeardKnight replied to Roxedus's topic in Docker Containers

Hey guys, I'm having an issue getting the Traccar container started. I used the documentation and the template to build the XML config with a MySQL database backend, and also used ChatGPT for some rough guidance, but I'm having no luck and keep getting the error below.

        at java.base/jdk.internal.util.xml.impl.Parser.ent(Parser.java:1994)
        at java.base/jdk.internal.util.xml.impl.Parser.step(Parser.java:471)
        at java.base/jdk.internal.util.xml.impl.ParserSAX.parse(ParserSAX.java:477)
        at java.base/jdk.internal.util.xml.impl.ParserSAX.parse(ParserSAX.java:411)
        at java.base/jdk.internal.util.xml.impl.ParserSAX.parse(ParserSAX.java:374)
        at java.base/jdk.internal.util.xml.impl.SAXParserImpl.parse(SAXParserImpl.java:97)
        at java.base/jdk.internal.util.xml.PropertiesDefaultHandler.load(PropertiesDefaultHandler.java:83)
        ... 19 more
    2024-02-23 22:53:04 ERROR: Main method error - - SAXParseException (... < Config:46 < <gener:-1 < *:-1 < ... < Main:116 < ...)
    Exception in thread "main" java.lang.RuntimeException: com.google.inject.CreationException: Unable to create injector, see the following errors:

    1) [Guice/ErrorInjectingConstructor]: RuntimeException: Configuration file is not a valid XML document
      at Config.<init>(Config.java:42)
      at MainModule.configure(MainModule.java:116)
      while locating Config

    Learn more:
      https://github.com/google/guice/wiki/ERROR_INJECTING_CONSTRUCTOR

    1 error

    ======================
    Full classname legend:
    ======================
    Config: "org.traccar.config.Config"
    MainModule: "org.traccar.MainModule"
    ========================
    End of classname legend:
    ========================
        at org.traccar.Main.run(Main.java:146)
        at org.traccar.Main.main(Main.java:110)
    Caused by: com.google.inject.CreationException: Unable to create injector, see the following errors:

    1) [Guice/ErrorInjectingConstructor]: RuntimeException: Configuration file is not a valid XML document
      at Config.<init>(Config.java:42)
      at MainModule.configure(MainModule.java:116)
      while locating Config

    Learn more:
      https://github.com/google/guice/wiki/ERROR_INJECTING_CONSTRUCTOR

    1 error

    ======================
    Full classname legend:
    ======================
    Config: "org.traccar.config.Config"
    MainModule: "org.traccar.MainModule"
    ========================
    End of classname legend:
    ========================
        at com.google.inject.internal.Errors.throwCreationExceptionIfErrorsExist(Errors.java:589)
        at com.google.inject.internal.InternalInjectorCreator.injectDynamically(InternalInjectorCreator.java:190)
        at com.google.inject.internal.InternalInjectorCreator.build(InternalInjectorCreator.java:113)
        at com.google.inject.Guice.createInjector(Guice.java:87)
        at com.google.inject.Guice.createInjector(Guice.java:69)
        at com.google.inject.Guice.createInjector(Guice.java:59)
        at org.traccar.Main.run(Main.java:116)
        ... 1 more
    Caused by: java.lang.RuntimeException: Configuration file is not a valid XML document
        at org.traccar.config.Config.<init>(Config.java:64)
        at org.traccar.config.Config$$FastClassByGuice$$9a3fc4.GUICE$TRAMPOLINE(<generated>)
        at org.traccar.config.Config$$FastClassByGuice$$9a3fc4.apply(<generated>)
        at com.google.inject.internal.DefaultConstructionProxyFactory$FastClassProxy.newInstance(DefaultConstructionProxyFactory.java:82)
        at com.google.inject.internal.ConstructorInjector.provision(ConstructorInjector.java:114)
        at com.google.inject.internal.ConstructorInjector.construct(ConstructorInjector.java:91)
        at com.google.inject.internal.ConstructorBindingImpl$Factory.get(ConstructorBindingImpl.java:300)
        at com.google.inject.internal.ProviderToInternalFactoryAdapter.get(ProviderToInternalFactoryAdapter.java:40)
        at com.google.inject.internal.SingletonScope$1.get(SingletonScope.java:169)
        at com.google.inject.internal.InternalFactoryToProviderAdapter.get(InternalFactoryToProviderAdapter.java:45)
        at com.google.inject.internal.InternalInjectorCreator.loadEagerSingletons(InternalInjectorCreator.java:213)
        at com.google.inject.internal.InternalInjectorCreator.injectDynamically(InternalInjectorCreator.java:186)
        ... 6 more
    Caused by: java.util.InvalidPropertiesFormatException: jdk.internal.org.xml.sax.SAXParseException;
        at java.base/jdk.internal.util.xml.PropertiesDefaultHandler.load(PropertiesDefaultHandler.java:85)
        at java.base/java.util.Properties.loadFromXML(Properties.java:986)
        at org.traccar.config.Config.<init>(Config.java:46)
        ... 17 more
    Caused by: jdk.internal.org.xml.sax.SAXParseException;
        at java.base/jdk.internal.util.xml.impl.ParserSAX.panic(ParserSAX.java:652)
        at java.base/jdk.internal.util.xml.impl.Parser.ent(Parser.java:1994)
        at java.base/jdk.internal.util.xml.impl.Parser.step(Parser.java:471)
        at java.base/jdk.internal.util.xml.impl.ParserSAX.parse(ParserSAX.java:477)
        at java.base/jdk.internal.util.xml.impl.ParserSAX.parse(ParserSAX.java:411)
        at java.base/jdk.internal.util.xml.impl.ParserSAX.parse(ParserSAX.java:374)
        at java.base/jdk.internal.util.xml.impl.SAXParserImpl.parse(SAXParserImpl.java:97)
        at java.base/jdk.internal.util.xml.PropertiesDefaultHandler.load(PropertiesDefaultHandler.java:83)
        ... 19 more
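For anyone hitting the same Traccar "Configuration file is not a valid XML document" error: the innermost frame in the trace is Parser.ent, whose name suggests the parser gave up while reading an entity reference. One common way to trigger that, though not confirmed for this particular config, is a bare & inside a JDBC URL, which XML requires to be written as &amp;. Below is a minimal sketch for checking a config for well-formedness outside the container. The host name and URL parameters are made up for illustration, and Python's parser is only a stand-in for the JDK one, so treat the reported position as a hint.

```python
import xml.etree.ElementTree as ET


def check_xml(text):
    """Return None if text is well-formed XML, else (line, column, message)."""
    try:
        ET.fromstring(text)
    except ET.ParseError as err:
        line, column = err.position
        return line, column, str(err)
    return None


# Hypothetical config fragment: the bare '&' in the URL is not valid XML.
BROKEN = (
    "<properties>\n"
    "  <entry key='database.url'>jdbc:mysql://db-host:3306/traccar"
    "?useSSL=false&allowMultiQueries=true</entry>\n"
    "</properties>\n"
)

# Same fragment with the ampersand escaped as an XML entity.
FIXED = BROKEN.replace("&allow", "&amp;allow")

if __name__ == "__main__":
    print("broken:", check_xml(BROKEN))
    print("fixed: ", check_xml(FIXED))
```

To check a real file, read it with open(path, encoding="utf-8").read() and pass the text to check_xml; the path depends on where the template maps the config.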

-

As a very long-time user of Unraid, and someone who has brought on many customers with home servers, I think it's great that you're looking at ways to bring money back into the company to increase your feature profile and the stability of the product.

I don't, however, believe that charging customers for security updates, many of which are in fact bug fixes, should be part of any update or subscription-based model; that, to me, should simply be protested out of existence. If, however, you're introducing a completely new way of doing security, or an implementation that increases security and gives features and benefits rather than just fixing the bugs you missed in your previous release, then this may be OK.

Example:

OK to pay: You introduce a new version of Docker and have taken some of its features to create new, Unraid-unique features.

NOT OK to pay: The community has raised an issue with you that, for example, the Docker driver you're using has been implemented incorrectly, and to fix it you need to make a default config change. That, in my opinion, is not something you should be allowed to charge your community for.

We as Unraiders never want to be put in a position where we feel we are being charged to be the beta testers for your product. A very clear and in-depth explanation will have to be given in the changelog for each update to justify its price, but charging a heavy fee for something like Docker, or some other third party's hard work, is not something I'll ever pay for, and I would probably end up moving to different software. In this example I understand that work may be needed to integrate with a new system, but for me to pay for it I would like to know what I'm truly paying for.

In a digital world, security is of the utmost importance, and if you're going to truly tackle security issues, even ones in third-party applications or containers that have been neglected for so long, and you can justify that, then sure, the price may be right.

I personally would love to see Docker image inspection features like what Wiz or Prisma do; obviously not as advanced, but something that lets me know if the containers I'm running are vulnerable, use out-of-date libraries or trigger CVEs, and gives me a runtime option to fix these issues. That is the type of security feature I expect to see for upgrade prices, not just other people's hard work.

Otherwise, where this goes remains to be seen, and I can only hope it leads a product I love to even greater heights.

-

Immich docker self-hosted google photos setup

IronBeardKnight replied to rutherford's topic in General Support

Does anyone have any information on enabling CUDA on the new version that has just been released? It appears they have removed any indication of whether the separate TensorRT models are still needed. I have added the additional "cuda" variable to the container, but I'm not sure it's actually doing anything. As the Immich documentation only covers running it normally through Docker, I'm not sure whether I need to map the Docker config files to my local system in Unraid so that I can edit them persistently in accordance with the Immich documentation. Any information for fellow Immich users is greatly appreciated, as it's a fantastic application.

-

Read speed for additional cache pools is not being reported in the main GUI area. From what I can tell, this also affects the Telegraf diskio input. Copying files from a share that is directly associated with the cache pools does not show any read speed; it does, however, show write speed. There is nothing in the logs or diagnostics, which I have spent a good deal of time going through, but if you have any pointers I'd be happy to try to find any info you need short of uploading full diagnostics. In the screenshot below you can see the area I'm speaking of. The screenshot shows no activity, even though at the time a copy of very large files from the array to cache_ssd_big had been under way for nearly 10 minutes. On boot I can see some activity, but it soon stops. I cannot narrow this down to a particular plugin or Docker container being the issue.
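One way to cross-check whether the kernel itself is counting reads on a pool device, independent of the GUI, is to sample /proc/diskstats twice during a copy and compare. The sketch below parses that file's standard layout (device name in the third column, sectors read in the sixth, sectors written in the tenth, always in 512-byte units). The device name and numbers in the sample are made up, and this is only a diagnostic aid, not how the Unraid GUI or Telegraf necessarily compute their figures.

```python
SECTOR_BYTES = 512  # /proc/diskstats reports sectors in 512-byte units


def parse_diskstats(text):
    """Map device name -> (bytes_read, bytes_written) from /proc/diskstats text."""
    totals = {}
    for line in text.splitlines():
        fields = line.split()
        if len(fields) < 10:
            continue  # skip blank or truncated lines
        name = fields[2]
        sectors_read = int(fields[5])
        sectors_written = int(fields[9])
        totals[name] = (sectors_read * SECTOR_BYTES, sectors_written * SECTOR_BYTES)
    return totals


def delta(before, after, device):
    """Bytes read and written on one device between two samples."""
    read_before, written_before = before[device]
    read_after, written_after = after[device]
    return read_after - read_before, written_after - written_before


# Hypothetical samples taken a few seconds apart during a copy.
SAMPLE_1 = "259 0 nvme0n1 1000 0 2048 50 2000 0 4096 80 0 100 130"
SAMPLE_2 = "259 0 nvme0n1 1500 0 6144 70 2000 0 4096 80 0 120 150"
```

On a live system the two samples would come from open("/proc/diskstats").read(), taken a few seconds apart. If the read delta grows while the GUI shows nothing, the problem is more likely in the reporting than in the pool.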

-

SMB & ZFS speeds I don't understand ...

IronBeardKnight replied to MatzeHali's topic in General Support

NVME CACHE

I moved from btrfs to ZFS and have seen a very noticeable decrease in write speed, both before and after the memory cache has filled. On top of that, RAM being used as a cache makes me nervous, even though I have a UPS and ECC memory. My dual-NVMe RAID 1 ZFS cache pool gets the full 7000 MB/s read but only 1500 MB/s max write, which is a far cry from what it should be when using ZFS. I will be switching my appdata pools back to btrfs, as it has nearly all the same features as ZFS but is much faster in my tests. The only thing missing to take full advantage of btrfs is the nice GUI plugin and scripts that exist for dealing with ZFS snapshots, which I'm sure someone could put together pretty quickly using the existing ZFS scripts and plugins. It's important to note that my main NVMe cache pool was RAID 1 in both cases, using each file system's own native RAID 1.

ARRAY

I also started converting some of my array drives to single-disk ZFS, as per Spaceinvader One's videos, since array parity and expansion would be handled by Unraid. This is where I noticed the biggest downside for me personally: single-disk ZFS, unlike a proper zpool, is missing a lot of features, but more importantly write performance is very heavily impacted, and you still only get single-disk read speed once the RAM cache is exhausted. I saw a 65% drop in write speed to the single ZFS drive.

I did a lot of research into btrfs vs ZFS and have decided to migrate all my drives and cache to btrfs and let Unraid handle parity, much the same way Spaceinvader One does with ZFS. This way I don't see the performance impact I was seeing with ZFS, and I should still be able to do all the same snapshot shifting and replication that ZFS does. Doing it this way I avoid the dreaded unstable btrfs native RAID 5/6 and get nearly all the same features as ZFS, but without the speed issues in Unraid.

DISCLAIMER

I'm sure ZFS is very fast in an actual zpool rather than a single-disk situation, but it also very much feels like ZFS is a deep-storage file system and not really suited to an active array, so to speak. Given my testing, all my cache pools and the drives within them will be btrfs RAID 1 or 0 (RAID 1 giving you active bitrot protection), and my array will use Unraid-handled parity with individual single-disk btrfs file systems. Hope this helps others in some way to avoid days of data transfer only to realise the pitfalls.
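For anyone wanting to repeat this kind of before/after comparison, here is a rough sketch of a sequential write test that forces the data to disk with fsync, so the RAM cache does not inflate the number. It is not the method used for the figures above. The sizes are deliberately tiny defaults, and random data is used so that file system compression cannot shortcut the write. A dedicated tool will give more trustworthy results; this only shows the idea.

```python
import os
import tempfile
import time


def measure_write_mb_per_s(directory, total_mb=64, block_mb=4):
    """Write roughly total_mb of random data into directory and return MB/s."""
    block = os.urandom(block_mb * 1024 * 1024)  # incompressible payload
    blocks = max(1, total_mb // block_mb)
    fd, path = tempfile.mkstemp(dir=directory)
    try:
        start = time.perf_counter()
        with os.fdopen(fd, "wb") as handle:
            for _ in range(blocks):
                handle.write(block)
            handle.flush()
            os.fsync(handle.fileno())  # make sure the data has left the page cache
        elapsed = time.perf_counter() - start
    finally:
        os.remove(path)
    return (blocks * block_mb) / elapsed


def degradation_percent(before, after):
    """Percentage drop from a 'before' speed to an 'after' speed."""
    return 100 * (before - after) / before
```

Point directory at a folder on the pool under test and use a total_mb well above the amount of RAM available for caching. As a worked example of the second helper, degradation_percent(200, 70) gives 65.0, so a drive that wrote at 200 MB/s before and 70 MB/s after would match the 65% drop described above (those two speeds are illustrative, not measured values).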


-

Having two issues. Mover hangs and the server won't reboot

IronBeardKnight replied to flyize's topic in General Support

Hey guys, I'm having major issues with the mover process. It keeps hanging, and the only thing I can see in its logging is "move: stat: cannot statx xxxxxxxxxx cannot be found". It appears that after a while the "find" process disappears from the Open Files plugin, but the Move button is still grayed out. Mover seems to be pretty buggy. I have been struggling to move everything off my cache drives with the mover so I can swap them out, and have had to resort to doing it manually, as the mover keeps stalling or getting hung up on something. No disk or cache activity happens. This does not seem to be directly related to any particular file or type of file, as it has stalled on quite a number of different appdata files and folders. I'm happy to help out with improving the mover, although I am limited time-wise.

-

While this may be true for cache > array, what about array > cache? Would the same limitation still apply if the share is spread over multiple drives?

-

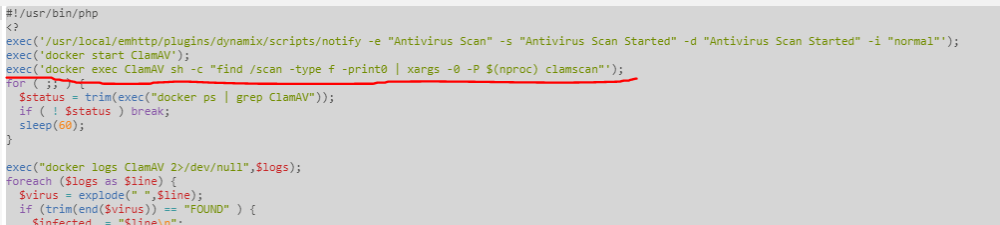

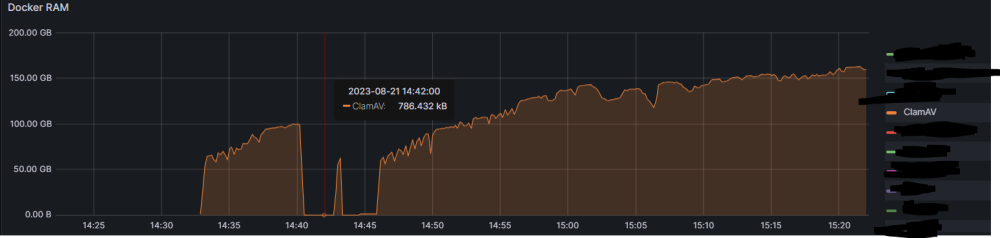

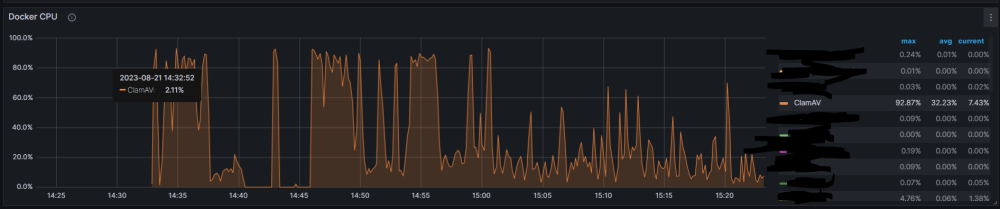

For those of you who have set up the script to go with the ClamAV container but have noticed little to no activity from it when running "docker stats", this may be the fix. I don't believe the container is set up to do a scan on startup, so you may have to trigger one by adding one of the lines below to the script, as seen in the screenshot. I have also figured out how to get multithreading working, but be warned: when using multithreading you may want to schedule it for when you're not using your server, as it can be quite CPU and RAM hungry. Before you proceed with multithreaded scans, consider putting a memory limit on the container through Extra Parameters.

Multi thread:
exec('docker exec ClamAV sh -c "find /scan -type f -print0 | xargs -0 -P $(nproc) clamscan"');

Single thread:
exec('docker exec ClamAV sh -c "clamscan"');
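A sketch of the same multi-threaded scan driven from Python instead of PHP, in case it is easier to tweak. It only builds the command; nothing runs until you pass the list to subprocess.run. One assumption beyond the original line: xargs tends to pack as many file names as it can into a single clamscan call, so with -P alone you may still end up with few parallel processes. Adding -n caps the files per call so the work can spread across workers. The batch size of 200 is an arbitrary starting point, and --no-summary just suppresses the per-process summary.

```python
import os
import shlex


def build_clamscan_command(container="ClamAV", scan_dir="/scan",
                           workers=None, files_per_call=200):
    """Build a 'docker exec' argv that scans scan_dir with parallel clamscan runs."""
    workers = workers or os.cpu_count() or 1
    inner = (
        f"find {shlex.quote(scan_dir)} -type f -print0 | "
        f"xargs -0 -n {files_per_call} -P {workers} clamscan --no-summary"
    )
    return ["docker", "exec", container, "sh", "-c", inner]


if __name__ == "__main__":
    # Print the command rather than running it; pass the list to
    # subprocess.run(...) on the Unraid host to actually start a scan.
    print(shlex.join(build_clamscan_command(workers=4)))
```

For the memory limit mentioned above, Docker's --memory flag can go in the container's Extra Parameters, for example --memory=4g (the value is illustrative; pick one that suits your server).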

-

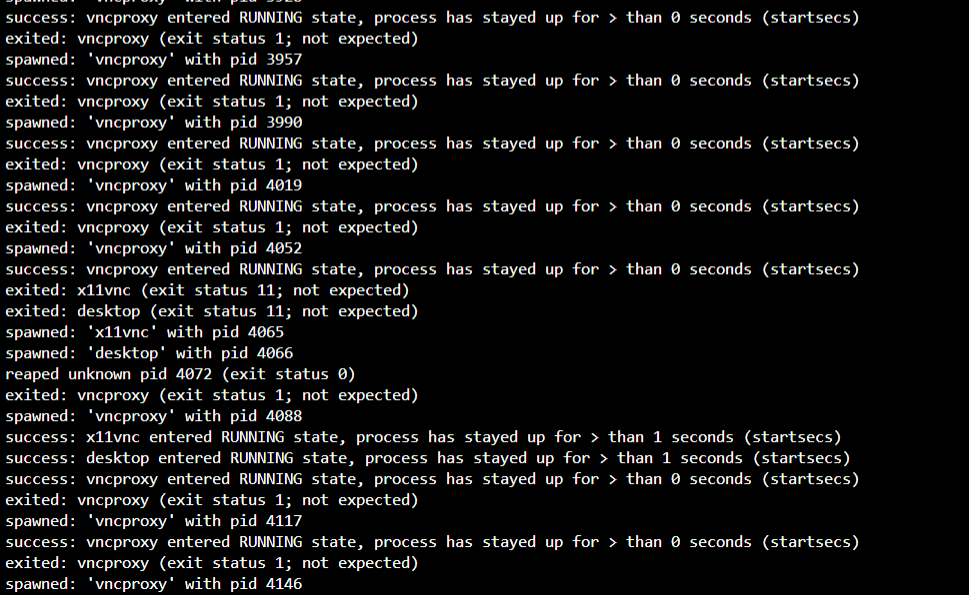

Is anyone able to guide me through this issue, or has anyone even got this Docker container working on Unraid? I'm trying to use my primary (and only) GPU, but none of the display options seem to work.

-

Hi guys, I can see others have posted about a similar issue, but I cannot find where the solution may have been posted. I cannot seem to get past this error. @Josh.5 any advice, mate, would be greatly appreciated, both for me and for others who may be getting this as well.

-

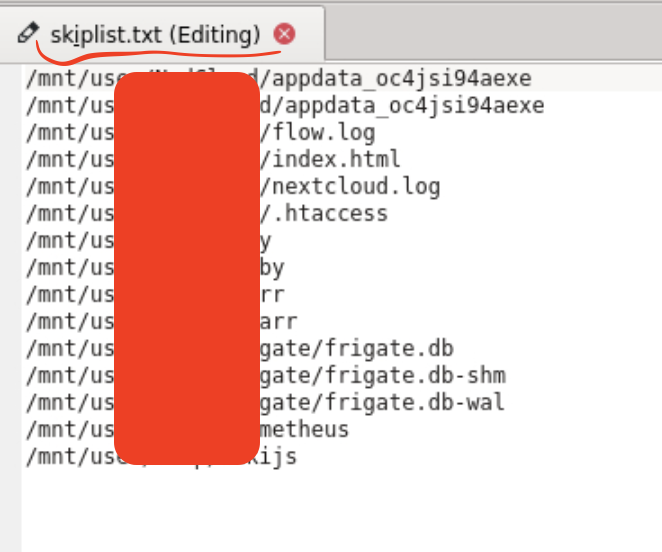

Hello, perhaps I can explain where you're going wrong. You have not actually provided a proper path to a list of the directories you want skipped. To be clear, "Ignore file types" and "Ignore files / folders listed inside of text file" are not related; they are individual settings and can be used independently. Please see the examples below:

-

Testing now! It will take a while to run through, but I'm sure this is the solution. Thank you so much for your help; I just overlooked it, lol. I vaguely remember having it set to 00 4 previously, but that was many Unraid revisions ago, probably before backup version 2 came out. I'll also try giving your plugin revision a bash over the next couple of weeks.

-

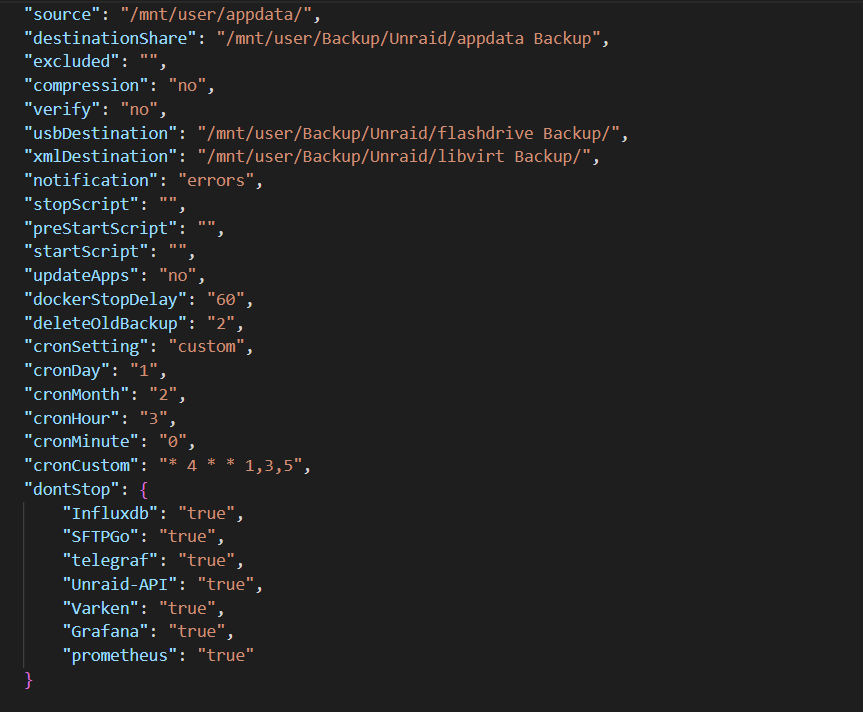

OMG, you are right. I'm clearly having a major brain cell malfunction, lol. I should remove the * and replace it with 00 so it looks like this: " 00 4 * * 1,3,5 ". Do you think that is correct?
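To see why that one character matters, here is a small sketch that counts how many times per day each expression would fire. It is a toy matcher that only understands *, single numbers and comma lists, which is enough for these two expressions; real cron supports much more. With * in the minute field the job matches every minute of the 4 am hour, whereas 00 matches only 04:00.

```python
from datetime import datetime, timedelta


def field_matches(field, value):
    """Match one cron field that is '*', a number, or a comma-separated list."""
    if field == "*":
        return True
    return value in {int(part) for part in field.split(",")}


def cron_matches(expr, moment):
    """True if the five-field cron expression fires at the given minute."""
    minute, hour, day, month, weekday = expr.split()
    cron_weekday = (moment.weekday() + 1) % 7  # cron: 0 = Sunday, Python: 0 = Monday
    return (
        field_matches(minute, moment.minute)
        and field_matches(hour, moment.hour)
        and field_matches(day, moment.day)
        and field_matches(month, moment.month)
        and field_matches(weekday, cron_weekday)
    )


def runs_on_day(expr, day):
    """Count the minutes of a calendar day on which the expression fires."""
    start = datetime(day.year, day.month, day.day)
    return sum(cron_matches(expr, start + timedelta(minutes=m)) for m in range(24 * 60))


MONDAY = datetime(2024, 2, 26)
TUESDAY = datetime(2024, 2, 27)

if __name__ == "__main__":
    print(runs_on_day("* 4 * * 1,3,5", MONDAY))   # every minute from 04:00 to 04:59
    print(runs_on_day("00 4 * * 1,3,5", MONDAY))  # only 04:00
```

On a Monday the first expression fires 60 times and the second once; on a Tuesday neither fires.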

-

https://crontab.guru/ is what I used as a reference, and from my understanding it is supposed to run at 4am every Monday, Wednesday and Friday. From BackupOptions.json :

-

-

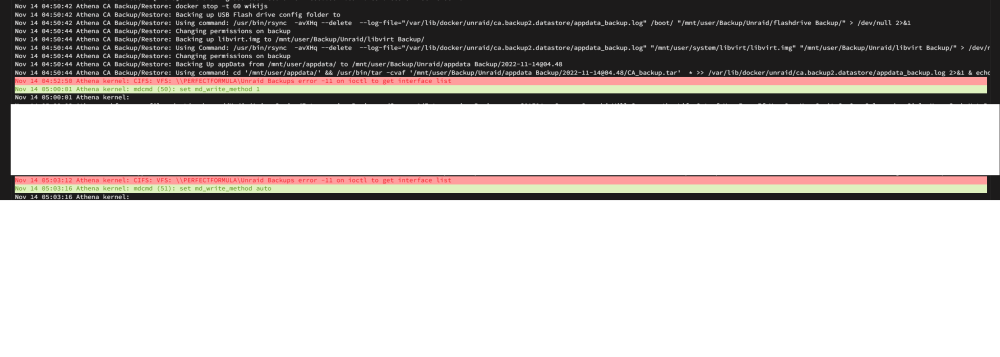

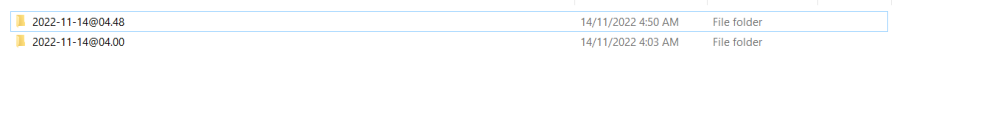

Please see below a couple of screenshots of the duplicate issue that has me a bit stumped. I have had a brief look through the code that lives within the plugin, but nothing stood out as incorrect with regard to this issue. "\flash\config\plugins\ca.backup2\ca.backup2-2022.07.23-x86_64-1.txz\ca.backup2-2022.07.23-x86_64-1.tar\usr\local\emhttp\plugins\ca.backup2\" I found an interesting file with paths in it that led me to a backup log file, "/var/lib/docker/unraid/ca.backup2.datastore/appdata_backup.log", but as it gets rewritten on each backup it only shows data for the 4:48am backup and not the 4:00am one, so that is not useful. I did not find any errors in this log either. The fact that it's getting rewritten most likely means, in my opinion, that the plugin is having issues interpreting either the settings entered or the cron that I have set.

-

I'll have to wait for a backup to run so I can get you a screenshot of the duplicate issue. Yes, restoring of single archives; for example, I only want to restore Duplicati or Nextcloud, etc. Currently, if you want to restore only a particular application/Docker archive, you need to extract the tar, stop the container and replace things manually. Looking forward to the merge and release. I would class this plugin as one of Unraid's core utilities, and its importance in the community is high. I have set up multiple Unraid servers for friends and family, and it's always first on my list of must-have things to install and configure. Currently I'm using it in conjunction with Duplicati for encrypted remote backups between a few different Unraid servers, a prerequisite for my time helping them set up Unraid, and way cheaper than Google Drive etc. Being able to break backups apart into single archives without having to rely on post-script extraction saves on SSD and HDD I/O and life. A per-container/per-folder archive would make uploading and restoring much easier and faster. For example, when doing a restore from remote, having to pull a full 80+ GB single backup file can take a very long time and is very susceptible to interruption over only a 2 mb/s line.
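To make the per-folder idea concrete, here is a minimal sketch that writes one compressed archive per top-level appdata folder, so a single application can be uploaded or restored on its own. This is not how the CA Backup plugin works internally; the folder names in the demo are made up, and in practice the containers would need to be stopped first so the files are consistent.

```python
import tarfile
import tempfile
from pathlib import Path


def backup_per_folder(appdata_dir, dest_dir):
    """Create one .tar.gz per top-level folder in appdata_dir; return the archive paths."""
    appdata = Path(appdata_dir)
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    archives = []
    for folder in sorted(p for p in appdata.iterdir() if p.is_dir()):
        archive = dest / f"{folder.name}.tar.gz"
        with tarfile.open(archive, "w:gz") as tar:
            # Store paths relative to the folder name so a restore can be
            # extracted straight back into the appdata directory.
            tar.add(folder, arcname=folder.name)
        archives.append(archive)
    return archives


def demo():
    """Build a throwaway appdata tree and return the names inside each archive."""
    with tempfile.TemporaryDirectory() as scratch:
        appdata = Path(scratch) / "appdata"
        for app, filename in (("nextcloud", "config.php"), ("duplicati", "settings.db")):
            (appdata / app).mkdir(parents=True)
            (appdata / app / filename).write_text("placeholder")
        contents = {}
        for archive in backup_per_folder(appdata, Path(scratch) / "backups"):
            with tarfile.open(archive) as tar:
                contents[archive.name] = sorted(tar.getnames())
        return contents
```

Restoring a single application then only means pulling and extracting its own archive, rather than the full 80+ GB file.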

-

Sorry, no. Repository: binhex/arch-qbittorrentvpn is the repo I still pull from at the moment.

-

I might have run into another issue as well. It seems that when running on a schedule it will split the backup into two files, or sometimes three, duplicating the space taken. I have not been able to figure out what is causing this, but my hunch is that it happens when it fills up one disk and has to move to the next, or if the mover runs on the cache. I have tried backing up direct to the array and also to cache first, but I always get duplicate .tar files.

-

Amazing work, mate! Are you working on separate restore options as well? Can we expect you to merge into the original master branch, or will you create your own to be added to Community Applications? I have been anticipating these changes and am happy to do some testing if need be.