Posts: 1530

Days Won: 1


Everything posted by danioj

-

While “do-able”, I think this is really poor advice. @JK252, please see the formal security recommendations from @limetech: https://unraid.net/blog/unraid-server-security-best-practices TL;DR: don’t expose your unRAID server to the internet, ESPECIALLY the maintenance GUI. If someone gets access, a web-based command prompt with root permissions is a click away.

-

My 2c. You should be able to do everything you're looking to do - yes, those things have been done before. You can get a trial license and give it a go to see if it meets your needs. Generally speaking, unRAID is an excellent product supported by a great and active community. The only word of caution I would give you is that if your primary interest is virtualization, there might be better solutions out there for you (e.g. Proxmox). The reason for my caution is that unRAID requires the storage array to be "started" before other services (KVM, Docker etc.) will start. In my experience, a problem with an array disk often destabilizes the whole system until the disk problem is fixed. To put that into lay terms: your VMs and Docker containers won't start unless the array is started, and they will only remain stable and operational as long as your array is in good health.

-

Hi All, I built my brother-in-law a server that was pretty much a mirror of mine (see sig). Two years ago he ran out of space and wanted a cheap way to upgrade. I stuck in a Marvell PCIe SATA expansion card and managed to get it nice and stable. So much so that I even bought one. Long story short, he wants to upgrade again (cheapest possible route is the goal). There is no more space in the case, no more SATA ports "internally", and he doesn't want to replace smaller disks with higher-capacity ones (my original recommendation). He saw this: https://www.scorptec.com.au/product/hard-drives-&-ssds/enclosures/56549-ib-3640su3 Now, that Marvell Technology Group Ltd. 88SE9230 PCIe 2.0 x2 4-port SATA 6 Gb/s RAID Controller (rev 11) card also has 2 external eSATA ports. What sort of stability can he expect from hooking up the above JBOD dock to one of those ports? Will unRAID play nice? Thanks in advance. D

-

Vote Safari please - it needs some love.

-

The New 20TB Seagate has landed (IronWolf Pro)

danioj replied to Lolight's topic in Storage Devices and Controllers

Nice. Once the market normalises to the introduction of consumer drives this size, I wonder how (if at all) that will change the sweet-spot drive for $/TB. For me, in Australia, 8TB is currently the sweet spot. -

Update: After roughly 10 days of uptime, I started to get crashes. They seemed to coincide with a lot of traffic coming to and from the server, which doesn't happen all the time. I have since removed the need for Host Access to Custom Networks (HATCN) to be enabled. After disabling that option, I stress tested the server with some serious traffic. Now I have an uptime of 3 weeks with no call traces. I am still applying the fix from earlier in the thread, so I'm not sure what is providing the stability now. I'm inclined to think it's the disabling of HATCN, but I really don't know. Still on macvlan.

-

6.9.2 RC2 "Read SMART" spins up the same 2 disks throughout the day

danioj commented on cholzer's report in Prereleases

-

[unRAID 6.10.0-rc1] - emhttpd: read SMART events keep spinning up array disks

danioj commented on danioj's report in Prereleases

Changed Status to Open Changed Priority to Urgent -

[unRAID 6.10.0-rc1] - emhttpd: read SMART events keep spinning up array disks

danioj commented on danioj's report in Prereleases

I thought I would report back that I was too hasty in reporting that the SMART issue had gone away. Even with all disk-related plugins removed, and after the actions described above, I was still seeing array SMART data being read at random times, which was spinning up my disks. I downgraded to the latest stable 6.9.2 and have been running for over a week without a single unexpected SMART read or array spin-up. I don't have the time to debug, so I am sticking with stable. I noticed a similar set of posts yesterday; I will link to this thread from there. -

Hey @Squid, hope you're doing OK, man! I just randomly went to the CA AppData Backup / Restore Settings page and saw this: "NOTE: USB Backup is deprecated on Unraid version 6.9.0 It is advised to use the Unraid.net plugin instead" I kind of get this, given unRAID's own new functionality. However, I would prefer to keep using your functionality instead. I assume (given the settings are still there and the backup keeps working each night) that this feature isn't truly deprecated. I hope you plan on keeping it going!! A local backup is desirable to me and your plugin makes it very easy.

-

The default way unRAID passes the device through doesn't make it show up as a serial device in the Hassio VM. Confusingly, the new auto-discovery feature in HA still finds the device, so everything looks fine, but you can't set up the ZHA integration or the deCONZ add-on. I was able to overcome this by editing the VM's XML and forcing the USB device to pass through to the VM as a USB serial device rather than the default way unRAID does it. I have my ConBee II passed through to a VM in 6.10.0-rc1 and working perfectly:

```xml
<serial type='dev'>
  <source path='/dev/serial/by-id/<yourusbid>'/>
  <target type='usb-serial' port='1'>
    <model name='usb-serial'/>
  </target>
  <alias name='serial1'/>
  <address type='usb' bus='0' port='4'/>
</serial>
```

The new auto-discovery in HA won't work with this method (which is odd), but the device is there in HA and you can set up the ZHA integration or deCONZ add-on just fine. https://forums.unraid.net/topic/113301-passthrough-of-conbee-ii-zigbee-usb-gateway-to-home-assistant-virtual-machine/?tab=comments#comment-1031051
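If you're not sure what to put in place of `<yourusbid>`, the stable device names live under `/dev/serial/by-id` on the host (`ls -l /dev/serial/by-id` shows them directly). Below is a minimal Python sketch of the same lookup, assuming a Python interpreter is available wherever you run it (stock unRAID may not ship one); `serial_by_id_paths` is a hypothetical helper name, not part of unRAID or libvirt.

```python
import os


def serial_by_id_paths(base="/dev/serial/by-id"):
    """Return the full by-id paths of serial devices, or [] if there are none."""
    if not os.path.isdir(base):
        return []
    return sorted(os.path.join(base, name) for name in os.listdir(base))


if __name__ == "__main__":
    for path in serial_by_id_paths():
        # Each entry is a stable symlink; one of these goes in <source path='...'/>.
        print(path, "->", os.path.realpath(path))
```

The by-id symlinks are used because, unlike `/dev/ttyACM0`-style names, they don't change when devices are re-enumerated after a reboot.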

-

[unRAID 6.10.0-rc1] - emhttpd: read SMART events keep spinning up array disks

danioj commented on danioj's report in Prereleases

Changed Status to Closed Changed Priority to Other -

[unRAID 6.10.0-rc1] - emhttpd: read SMART events keep spinning up array disks

danioj commented on danioj's report in Prereleases

Tested thoroughly. Not an issue with this release. -

[unRAID 6.10.0-rc1] - emhttpd: read SMART events keep spinning up array disks

danioj commented on danioj's report in Prereleases

*Penny Drops* Yes I do. I remember now: this option was officially introduced back in 6.2. I don’t have the plug-in *anymore*, but I have reconstruct write enabled, and if I recall correctly that’s the mode that spins up all the disks so parity can be calculated from the other data disks instead of from the old data and old parity, which speeds up writes compared with the traditional method. *slaps forehead really hard* This whole thread is a non-event and comes down to my settings. God, I’m pissed at myself. To anyone who has invested / wasted any time at all as a result of this thread - I’m sorry!! -
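For anyone else caught out by this: with single parity, the two write modes end up with the same parity but read different disks to get there. The sketch below is a minimal illustration assuming plain XOR parity over equal-sized blocks. It ignores dual parity (which uses a different calculation) and says nothing about how unRAID implements this internally; `xor_bytes`, `read_modify_write` and `reconstruct_write` are hypothetical names.

```python
from functools import reduce


def xor_bytes(*blocks):
    """XOR equal-length byte blocks together, byte by byte."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def read_modify_write(old_parity, old_data, new_data):
    # Only two reads: the target disk (old_data) and the parity disk.
    return xor_bytes(old_parity, old_data, new_data)


def reconstruct_write(other_data_disks, new_data):
    # Reads every *other* data disk, so all of them must be spun up.
    return xor_bytes(new_data, *other_data_disks)


# Three hypothetical data disks, two bytes each; parity is their XOR.
disks = [b"\x0f\xf0", b"\x33\x33", b"\x55\xaa"]
parity = xor_bytes(*disks)

new_block = b"\xff\x00"  # new contents being written to disk 0
rmw = read_modify_write(parity, disks[0], new_block)
rcw = reconstruct_write(disks[1:], new_block)
print(rmw == rcw, rmw.hex())  # True 9999
```

Both paths produce the same parity; the difference is which disks have to be read. That is why, with reconstruct write on, a mover run that writes to one disk still spins up (and triggers SMART reads on) the whole array.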

[unRAID 6.10.0-rc1] - emhttpd: read SMART events keep spinning up array disks

danioj commented on danioj's report in Prereleases

Downgraded to 6.9.2 and the behaviour is the same. When mover initiates, all disks spin up to be read as part of the write operation, irrespective of the number of disks being written to. When a disk spins up, SMART data is read as designed. As I have mover initiating every hour (and mover logging is off), cue the endless cycles of SMART reads as disks are spun up. I feel a bit stupid here. Years and years of unRAID use and I never noticed this is how things work. -

[unRAID 6.10.0-rc1] - emhttpd: read SMART events keep spinning up array disks

danioj commented on danioj's report in Prereleases

I feel like I am spamming, but it's my thread so I'll go ahead. I can now replicate what is happening. Here is what I did: I spun down the array manually. I opened my personal user share on my iMac. This share has files spread across 4 disks, and as expected those 4 disks spun up so the share could be read. I copied a small 1MB picture to the share; the file was written to Cache as expected. I initiated mover manually. All disks spun up. SMART was read from each spun-up disk (the initially reported problem). The file was written to one of the disks and each disk was read. Mover stopped. I have done this 5 times now, each with the same result. As I posted above, if unRAID really needs every disk spun up and read to make a write to a single disk (and I have missed this behaviour all these years), then there is no problem at all and this is completely on me for being ignorant. I didn't think that was the case though. -

[unRAID 6.10.0-rc1] - emhttpd: read SMART events keep spinning up array disks

danioj commented on danioj's report in Prereleases

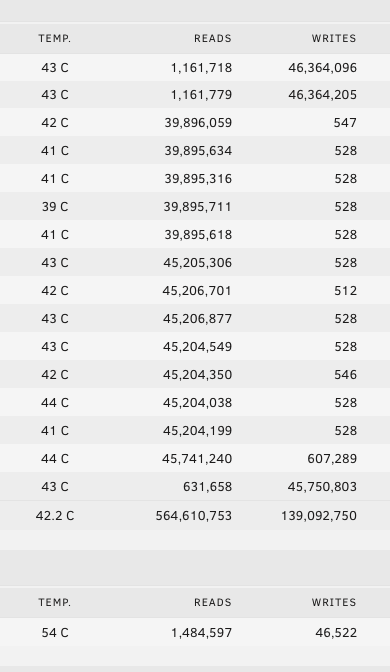

The mover has now stopped. In total there was roughly 100GB of data to move, at a guess. The picture shows the effect on the array after moving that 100GB: all those reads in addition to the writes to the single disk and parity. I imagine all the disks will now spin down until mover initiates again in an hour. At that point, if the disks are spun down and mover has something to move, it will spin up the whole array to do so, reading the SMART values of each disk and reading each drive while it writes. This is starting to come together. Unless I am completely missing something fundamental in how unRAID works here, this shouldn't be happening, right? -

[unRAID 6.10.0-rc1] - emhttpd: read SMART events keep spinning up array disks

danioj commented on danioj's report in Prereleases

OK, this has taken a new turn for me. As part of this test I also disabled Plex (as this is the only Docker container I could think of with the potential to read disks and cause them to spin up). What I have observed now seems to be related to mover. I have mover set to run every hour. Mostly this captures the odd changed file I want protected, or my daily camera feed backup. As it happens, the daily camera feed backup coincided with this test. My camera feed share is isolated to one drive. To me this should mean that when mover moves these files, only that single disk spins up along with the parity disks, and they are written. As I understand it, not every drive needs to be spun up even when data is being written. What I am observing here is that the whole array spun up when the mover operation started, and in addition to the single disk and parity drives being written to, ALL my array disks are getting significant reads at the same time!! The SMART entries in the log coincide with this, and it seems to explain the regularity of the SMART events. My question is: why does mover need to read all my disks while it is writing to a single array disk and parity? -

[unRAID 6.10.0-rc1] - emhttpd: read SMART events keep spinning up array disks

danioj commented on danioj's report in Prereleases

Done. Just rebooted into safe mode now and manually spun the array down. I'll just leave it alone now. I can get a good 5 hours of safe mode in before the family sit down for the night and want their media server back. -

Hi @fmp4m, not a single one. Uptime 8 days 1 hour 35 minutes

-

[unRAID 6.10.0-rc1] - emhttpd: read SMART events keep spinning up array disks

danioj commented on danioj's report in Prereleases

@ljm42 any thoughts on my suggestion? Is there any feedback from the team on this? -

[unRAID 6.10.0-rc1] - emhttpd: read SMART events keep spinning up array disks

danioj commented on danioj's report in Prereleases

Somewhat off topic, guys, but what is meant by that statement is that while @nuhll was posting about one issue, I saw entries in the logs similar to the ones I had. Unrelated conversations. -

Job 1 for you, I think, is to enable your server to capture the logs prior to a crash. The diagnostics you have posted only show the log after the hard reset, as designed, because the log doesn't persist across a reboot. Follow the instructions here to enable mirroring of the syslog to /boot. Then the next time you recover after a crash, you can go to /boot/logs/syslog and see what happened immediately prior to the crash. Note: don't post the whole file, as it won't have sensitive information removed the way diagnostics do (if you select that option).

-

[unRAID 6.10.0-rc1] - emhttpd: read SMART events keep spinning up array disks

danioj commented on danioj's report in Prereleases

I decided to do a bit of an investigative test. I amended all my nightly activities (backups etc) so that they were more consistent and completed at ~5am. This would prompt the array to spin down due to inactivity shortly after (there was no-one up using data on the disks at the time). As expected, the array spun down. Then, for some reason I cannot fathom, unRAID started reading SMART data from the disks about an hour later.

```
Sep 6 05:47:17 unraid emhttpd: spinning down /dev/sdg
Sep 6 05:47:17 unraid emhttpd: spinning down /dev/sdd
Sep 6 05:47:17 unraid emhttpd: spinning down /dev/sde
Sep 6 05:47:17 unraid emhttpd: spinning down /dev/sdf
Sep 6 05:47:19 unraid emhttpd: spinning down /dev/sdh
Sep 6 05:47:19 unraid emhttpd: spinning down /dev/sdn
Sep 6 05:47:19 unraid emhttpd: spinning down /dev/sdp
Sep 6 05:48:18 unraid emhttpd: spinning down /dev/sdj
Sep 6 05:48:18 unraid emhttpd: spinning down /dev/sdc
Sep 6 05:48:18 unraid emhttpd: spinning down /dev/sdi
Sep 6 05:54:22 unraid emhttpd: spinning down /dev/sdl
Sep 6 06:00:02 unraid emhttpd: spinning down /dev/sdo
Sep 6 06:00:05 unraid emhttpd: spinning down /dev/sdm
Sep 6 06:00:05 unraid emhttpd: spinning down /dev/sdr
Sep 6 06:00:07 unraid emhttpd: spinning down /dev/sds
Sep 6 06:00:09 unraid emhttpd: spinning down /dev/sdq
Sep 6 07:37:02 unraid emhttpd: read SMART /dev/sdi
Sep 6 07:37:23 unraid emhttpd: read SMART /dev/sdn
Sep 6 07:37:43 unraid emhttpd: read SMART /dev/sdp
Sep 6 07:37:56 unraid emhttpd: read SMART /dev/sdq
Sep 6 07:38:11 unraid emhttpd: read SMART /dev/sdr
Sep 6 07:38:33 unraid emhttpd: read SMART /dev/sdm
Sep 6 07:38:45 unraid emhttpd: read SMART /dev/sds
Sep 6 07:38:57 unraid emhttpd: read SMART /dev/sdo
Sep 6 07:39:11 unraid emhttpd: read SMART /dev/sdd
Sep 6 07:39:48 unraid emhttpd: read SMART /dev/sde
Sep 6 07:40:03 unraid emhttpd: read SMART /dev/sdf
Sep 6 08:00:04 unraid emhttpd: read SMART /dev/sdj
Sep 6 08:00:04 unraid emhttpd: read SMART /dev/sdh
Sep 6 08:00:04 unraid emhttpd: read SMART /dev/sdg
Sep 6 08:00:04 unraid emhttpd: read SMART /dev/sdc
Sep 6 08:00:04 unraid emhttpd: read SMART /dev/sdl
```

This is weird because it's not just one disk, it's all of them.
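To check this pattern over a longer log, a small script can pair each disk's spin-down with the first SMART read that follows it. This is a minimal sketch, assuming a copy of the syslog (for example the one mirrored to /boot/logs/syslog, copied to a machine with Python) that uses the emhttpd line format shown above; `pair_events` is a hypothetical helper, not part of unRAID.

```python
import re
import sys
from datetime import datetime

# Matches emhttpd lines such as:
#   Sep 6 05:47:17 unraid emhttpd: spinning down /dev/sdg
#   Sep 6 07:37:02 unraid emhttpd: read SMART /dev/sdi
LINE_RE = re.compile(
    r"(?P<ts>[A-Z][a-z]{2}\s+\d{1,2}\s+\d{2}:\d{2}:\d{2})\s+\S+\s+emhttpd:\s+"
    r"(?P<event>spinning down|read SMART)\s+(?P<dev>/dev/\w+)"
)


def pair_events(lines):
    """Return {device: (spin_down_time, first SMART read after its latest spin-down)}."""
    spun_down = {}
    pairs = {}
    for line in lines:
        m = LINE_RE.search(line)
        if not m:
            continue
        # syslog lines carry no year; 2000 is a placeholder so times can be compared.
        ts = datetime.strptime("2000 " + m["ts"], "%Y %b %d %H:%M:%S")
        dev = m["dev"]
        if m["event"] == "spinning down":
            spun_down[dev] = ts
            pairs.pop(dev, None)  # a fresh spin-down starts a new pairing
        elif dev in spun_down and dev not in pairs:
            pairs[dev] = (spun_down[dev], ts)
    return pairs


if __name__ == "__main__" and len(sys.argv) > 1:
    with open(sys.argv[1]) as log:
        for dev, (down, read) in sorted(pair_events(log).items()):
            gap = int((read - down).total_seconds() // 60)
            print(f"{dev}: down {down:%H:%M:%S}, SMART read {read:%H:%M:%S} ({gap} min later)")
```

Run it with the log file as the only argument. If every device that spun down shows a SMART read within a few minutes of the others, something is waking the whole array at once rather than a single disk.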