Posts: 1463


Everything posted by dalben

-

6.12.0-rc4 "macvlan call traces found", but not on <=6.11.x

dalben commented on sonic6's report in Prereleases

Thanks. OK, did that, and it seems to be up and running. I had a mild heart attack when the containers wouldn't start. Even though they had Custom eth1 and the correct IP address in their settings, each container needed a change before it would actually apply that. Deleting the last digit of the IP, re-entering it, then clicking Apply made it all come good. Some of my containers now seem rate limited: what was at least a 50MB download speed before is now around 10MB. But I also upgraded to the latest UniFi Network client around the same time, so I'll need to roll that back to see what's causing this issue.

6.12.0-rc4 "macvlan call traces found", but not on <=6.11.x

dalben commented on sonic6's report in Prereleases

@JorgeB - Caught the macvlan call trace today. Diagnostics are attached and the call trace snippet is below. I have version 6.12.4 running on the box.

Sep 22 08:39:13 tdm kernel: ------------[ cut here ]------------
Sep 22 08:39:13 tdm kernel: WARNING: CPU: 9 PID: 159 at net/netfilter/nf_conntrack_core.c:1210 __nf_conntrack_confirm+0xa4/0x2b0 [nf_conntrack]
Sep 22 08:39:13 tdm kernel: Modules linked in: tun tls xt_nat xt_tcpudp xt_conntrack xt_MASQUERADE nf_conntrack_netlink nfnetlink xfrm_user xfrm_algo iptable_nat nf_nat nf_conntrack nf_defrag_ipv6 nf_defrag_ipv4 xt_addrtype br_netfilter xfs md_mod tcp_diag inet_diag ip6table_filter ip6_tables iptable_filter ip_tables x_tables bridge stp llc macvtap macvlan tap e1000e r8169 realtek intel_rapl_msr zfs(PO) intel_rapl_common zunicode(PO) zzstd(O) i915 x86_pkg_temp_thermal intel_powerclamp coretemp kvm_intel zlua(O) zavl(PO) icp(PO) kvm iosf_mbi drm_buddy i2c_algo_bit ttm zcommon(PO) drm_display_helper drm_kms_helper znvpair(PO) spl(O) crct10dif_pclmul crc32_pclmul crc32c_intel drm ghash_clmulni_intel sha512_ssse3 aesni_intel crypto_simd cryptd mei_hdcp mei_pxp intel_gtt rapl intel_cstate gigabyte_wmi wmi_bmof mpt3sas i2c_i801 nvme agpgart ahci mei_me i2c_smbus syscopyarea raid_class i2c_core intel_uncore nvme_core mei libahci sysfillrect scsi_transport_sas sysimgblt fb_sys_fops thermal fan video wmi
Sep 22 08:39:13 tdm kernel: backlight intel_pmc_core acpi_tad acpi_pad button unix [last unloaded: e1000e]
Sep 22 08:39:13 tdm kernel: CPU: 9 PID: 159 Comm: kworker/u24:6 Tainted: P O 6.1.49-Unraid #1
Sep 22 08:39:13 tdm kernel: Hardware name: Gigabyte Technology Co., Ltd. B365 M AORUS ELITE/B365 M AORUS ELITE-CF, BIOS F3d 08/18/2020
Sep 22 08:39:13 tdm kernel: Workqueue: events_unbound macvlan_process_broadcast [macvlan]
Sep 22 08:39:13 tdm kernel: RIP: 0010:__nf_conntrack_confirm+0xa4/0x2b0 [nf_conntrack]
Sep 22 08:39:13 tdm kernel: Code: 44 24 10 e8 e2 e1 ff ff 8b 7c 24 04 89 ea 89 c6 89 04 24 e8 7e e6 ff ff 84 c0 75 a2 48 89 df e8 9b e2 ff ff 85 c0 89 c5 74 18 <0f> 0b 8b 34 24 8b 7c 24 04 e8 18 dd ff ff e8 93 e3 ff ff e9 72 01
Sep 22 08:39:13 tdm kernel: RSP: 0018:ffffc9000032cd98 EFLAGS: 00010202
Sep 22 08:39:13 tdm kernel: RAX: 0000000000000001 RBX: ffff888194440700 RCX: 0d35b370dc56628d
Sep 22 08:39:13 tdm kernel: RDX: 0000000000000000 RSI: 0000000000000001 RDI: ffff888194440700
Sep 22 08:39:13 tdm kernel: RBP: 0000000000000001 R08: e03672da4c370c38 R09: 4eea21ed5130b6c5
Sep 22 08:39:13 tdm kernel: R10: 9101b661ea51c81d R11: ffffc9000032cd60 R12: ffffffff82a11d00
Sep 22 08:39:13 tdm kernel: R13: 000000000002fc07 R14: ffff8881af585900 R15: 0000000000000000
Sep 22 08:39:13 tdm kernel: FS: 0000000000000000(0000) GS:ffff88880f440000(0000) knlGS:0000000000000000
Sep 22 08:39:13 tdm kernel: CS: 0010 DS: 0000 ES: 0000 CR0: 0000000080050033
Sep 22 08:39:13 tdm kernel: CR2: 0000149eca3e2484 CR3: 000000000220a002 CR4: 00000000003706e0
Sep 22 08:39:13 tdm kernel: DR0: 0000000000000000 DR1: 0000000000000000 DR2: 0000000000000000
Sep 22 08:39:13 tdm kernel: DR3: 0000000000000000 DR6: 00000000fffe0ff0 DR7: 0000000000000400
Sep 22 08:39:13 tdm kernel: Call Trace:
Sep 22 08:39:13 tdm kernel: <IRQ>
Sep 22 08:39:13 tdm kernel: ? __warn+0xab/0x122
Sep 22 08:39:13 tdm kernel: ? report_bug+0x109/0x17e
Sep 22 08:39:13 tdm kernel: ? __nf_conntrack_confirm+0xa4/0x2b0 [nf_conntrack]
Sep 22 08:39:13 tdm kernel: ? handle_bug+0x41/0x6f
Sep 22 08:39:13 tdm kernel: ? exc_invalid_op+0x13/0x60
Sep 22 08:39:13 tdm kernel: ? asm_exc_invalid_op+0x16/0x20
Sep 22 08:39:13 tdm kernel: ? __nf_conntrack_confirm+0xa4/0x2b0 [nf_conntrack]
Sep 22 08:39:13 tdm kernel: ? __nf_conntrack_confirm+0x9e/0x2b0 [nf_conntrack]
Sep 22 08:39:13 tdm kernel: ? nf_nat_inet_fn+0x60/0x1a8 [nf_nat]
Sep 22 08:39:13 tdm kernel: nf_conntrack_confirm+0x25/0x54 [nf_conntrack]
Sep 22 08:39:13 tdm kernel: nf_hook_slow+0x3a/0x96
Sep 22 08:39:13 tdm kernel: ? ip_protocol_deliver_rcu+0x164/0x164
Sep 22 08:39:13 tdm kernel: NF_HOOK.constprop.0+0x79/0xd9
Sep 22 08:39:13 tdm kernel: ? ip_protocol_deliver_rcu+0x164/0x164
Sep 22 08:39:13 tdm kernel: __netif_receive_skb_one_core+0x77/0x9c
Sep 22 08:39:13 tdm kernel: process_backlog+0x8c/0x116
Sep 22 08:39:13 tdm kernel: __napi_poll.constprop.0+0x28/0x124
Sep 22 08:39:13 tdm kernel: net_rx_action+0x159/0x24f
Sep 22 08:39:13 tdm kernel: __do_softirq+0x126/0x288
Sep 22 08:39:13 tdm kernel: do_softirq+0x7f/0xab
Sep 22 08:39:13 tdm kernel: </IRQ>
Sep 22 08:39:13 tdm kernel: <TASK>
Sep 22 08:39:13 tdm kernel: __local_bh_enable_ip+0x4c/0x6b
Sep 22 08:39:13 tdm kernel: netif_rx+0x52/0x5a
Sep 22 08:39:13 tdm kernel: macvlan_broadcast+0x10a/0x150 [macvlan]
Sep 22 08:39:13 tdm kernel: macvlan_process_broadcast+0xbc/0x12f [macvlan]
Sep 22 08:39:13 tdm kernel: process_one_work+0x1a8/0x295
Sep 22 08:39:13 tdm kernel: worker_thread+0x18b/0x244
Sep 22 08:39:13 tdm kernel: ? rescuer_thread+0x281/0x281
Sep 22 08:39:13 tdm kernel: kthread+0xe4/0xef
Sep 22 08:39:13 tdm kernel: ? kthread_complete_and_exit+0x1b/0x1b
Sep 22 08:39:13 tdm kernel: ret_from_fork+0x1f/0x30
Sep 22 08:39:13 tdm kernel: </TASK>
Sep 22 08:39:13 tdm kernel: ---[ end trace 0000000000000000 ]---

tdm-diagnostics-20230922-1630.zip

6.12.0-rc4 "macvlan call traces found", but not on <=6.11.x

dalben commented on sonic6's report in Prereleases

I may need to backtrack on that statement. I went trawling through my syslog and found weekly alerts from the Fix Common Problems plugin reporting macvlan call trace errors, but I couldn't find a single one in the actual syslog. So ignore the above for now.

Just set this up and it looks good. I can reach it internally, but when I try to reach it externally via the Nginx Proxy Manager reverse proxy, I get 500 errors. Anyone else seeing this? It's most likely misconfigured on my side, but I've tried most of the options. I dug around a bit and the logs show "*10086 invalid port in upstream" as the issue. Not sure what else to try. The config files look like the others.

*All sorted now. BDU error. You don't need http:// when nginx only wants the host address.
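The fix above comes down to putting only the bare host in the proxy's host field. A likely explanation, though not confirmed here, is that a scheme in that field leaves a second "http://" inside the generated upstream address, which nginx then cannot parse as host:port. Below is a minimal Python sketch of that normalisation. It assumes the field expects a hostname or IP, with the scheme chosen separately, which is how Nginx Proxy Manager's Scheme dropdown and Forward Hostname / IP field are laid out. `forward_host` is a hypothetical helper and is not part of NPM.

```python
from urllib.parse import urlsplit


def forward_host(value: str) -> str:
    """Reduce what was typed into a proxy's host field to just the host.

    Hypothetical helper: assumes the field should hold a bare hostname or IP,
    so any scheme ("http://"), path or trailing slash is dropped.
    """
    value = value.strip()
    if "://" not in value:
        # urlsplit only fills in .hostname when a "//" netloc marker is present
        value = "//" + value
    host = urlsplit(value).hostname
    if host is None:
        raise ValueError(f"no host found in {value!r}")
    return host


if __name__ == "__main__":
    for raw in ["http://192.168.1.50", "192.168.1.50", "https://app.lan/"]:
        print(f"{raw!r} -> {forward_host(raw)!r}")
```

The port is deliberately ignored because NPM asks for it in a separate field.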

-

6.12.0-rc4 "macvlan call traces found", but not on <=6.11.x

dalben commented on sonic6's report in Prereleases

The call traces, while I do get them, don't seem to cause me any problems. Scanning the logs, I get about one a week. They don't halt the system in any way and I've seen no side effects. Earlier I reconfigured my network setup based on some guides: 2 NICs in the server, one dedicated to the Docker network, no bridge between NIC 1 and NIC 2, and no IP address assigned to NIC 2. I run a UniFi setup and I'd much prefer my network map to be correct. Being borderline OCD, I like to give all my containers a static IP address, so IPVLAN isn't for me. In summary, I do get the odd call trace, but it doesn't cause my server any problems. Not sure if that's consistent with others' experience or whether I'm one of the lucky ones.
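Both this post and the earlier one about the Fix Common Problems alerts depend on finding call traces in the syslog. Below is a minimal Python sketch that counts, per day, the kernel call-trace blocks that involve the macvlan module. It makes a few assumptions:

- Blocks use the `[ cut here ]` and `---[ end trace` markers seen in the trace above.
- A block counts as macvlan-related only when a frame or workqueue line carries the `[macvlan]` module tag. The `Modules linked in:` line names macvlan whenever that module is loaded, so the sketch ignores it on purpose.
- The live syslog is at /var/log/syslog.

`macvlan_traces_per_day` is a hypothetical helper.

```python
import re
import sys
from collections import Counter

# A syslog line starts with e.g. "Sep 22 08:39:13"; "Sep 22" is used as the day key.
DAY = re.compile(r"^([A-Z][a-z]{2}\s+\d{1,2})\s")


def macvlan_traces_per_day(lines):
    """Count kernel call-trace blocks that involve the macvlan module, per syslog day."""
    counts = Counter()
    in_trace = False
    day = "unknown"
    involves_macvlan = False
    for line in lines:
        if "[ cut here ]" in line:
            # Start of a new WARNING/call-trace block
            in_trace = True
            match = DAY.match(line)
            day = match.group(1) if match else "unknown"
            involves_macvlan = False
        elif in_trace:
            # Frames and workqueue lines from the module end in "[macvlan]";
            # the "Modules linked in:" list does not, so it never matches.
            if "[macvlan]" in line:
                involves_macvlan = True
            if "---[ end trace" in line:
                if involves_macvlan:
                    counts[day] += 1
                in_trace = False
    return counts


if __name__ == "__main__":
    # ASSUMPTION: Unraid's live syslog path; pass another file as the first argument.
    path = sys.argv[1] if len(sys.argv) > 1 else "/var/log/syslog"
    with open(path, encoding="utf-8", errors="replace") as fh:
        for day, n in macvlan_traces_per_day(fh).items():
            print(f"{day}: {n} macvlan call trace(s)")
```

Days print in the order they first appear in the file, which for a single syslog is chronological.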

Why do I NOT have issues with macvlan on docker?

dalben replied to vw-kombi's topic in General Support

Agreed. Another Unraid user here, and I like being able to see each container individually on reserved IPs. A quick trial with IPvlan left the Unraid console completely confused and close to unusable for the Docker listing.

I found these entries in /boot/config/ident.cfg:

USE_WSD="yes"
WSD_OPT=""
WSD2_OPT="-4 -i br0"

Can anyone tell me what they are for or what they do? I've searched the forum with no luck.

-

But why would Samba want br0? That doesn't make much sense to me.

-

I'm running 6.12.0-rc5, but I'm not sure whether this is release-related or just some other random warning in my syslog. The only related change I can think of is that I reconfigured my network settings, removing br0 from eth0 and moving all containers to a second NIC, which is configured with br1. I checked smb-extra.cfg but everything in there is commented out. Diagnostics attached.

May 5 12:51:36 tdm ool www[32701]: /usr/local/emhttp/plugins/dynamix/scripts/emcmd 'cmdStatus=Apply'
May 5 12:51:36 tdm emhttpd: Starting services...
May 5 12:51:36 tdm emhttpd: shcmd (5160097): /etc/rc.d/rc.samba restart
May 5 12:51:38 tdm root: Starting Samba: /usr/sbin/smbd -D
May 5 12:51:38 tdm root: /usr/sbin/nmbd -D
May 5 12:51:38 tdm root: /usr/sbin/wsdd2 -d -4 -i br0
May 5 12:51:38 tdm root: Bad interface 'br0'
May 5 12:51:38 tdm root:
May 5 12:51:38 tdm root: WSDD and LLMNR daemon
May 5 12:51:38 tdm root: Usage: wsdd2 [options]
May 5 12:51:38 tdm root: -h this message
May 5 12:51:38 tdm root: -d become daemon
May 5 12:51:38 tdm root: -4 IPv4 only
May 5 12:51:38 tdm root: -6 IPv6 only
May 5 12:51:38 tdm root: -u UDP only
May 5 12:51:38 tdm root: -t TCP only
May 5 12:51:38 tdm root: -l LLMNR only
May 5 12:51:38 tdm root: -w WSDD only
May 5 12:51:38 tdm root: -L increment LLMNR debug level (0)
May 5 12:51:38 tdm root: -W increment WSDD debug level (0)
May 5 12:51:38 tdm root: -i <interface> reply only on this interface (br0)
May 5 12:51:38 tdm root: -H <name> set host name (tdm)
May 5 12:51:38 tdm root: -A "name list" set host aliases ()
May 5 12:51:38 tdm root: -N <name> set netbios name (TDM)
May 5 12:51:38 tdm root: -B "name list" set netbios aliases ()
May 5 12:51:38 tdm root: -G <name> set workgroup (RADIKAL)
May 5 12:51:38 tdm root: -b "key1:val1,key2:val2,..." boot parameters:
May 5 12:51:38 tdm root: vendor: unknown
May 5 12:51:38 tdm root: model: unknown
May 5 12:51:38 tdm root: serial: 0
May 5 12:51:38 tdm root: sku: unknown
May 5 12:51:38 tdm root: vendorurl: (null)
May 5 12:51:38 tdm root: modelurl: (null)
May 5 12:51:38 tdm root: presentationurl: (null)
May 5 12:51:38 tdm root: /usr/sbin/winbindd -D
May 5 12:51:38 tdm emhttpd: shcmd (5160101): /etc/rc.d/rc.avahidaemon restart

tdm-diagnostics-20230506-0959.zip
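The wsdd2 usage text in this log shows that `-i br0` makes it reply only on br0. The `Bad interface 'br0'` line therefore suggests that br0 no longer exists once bridging was removed from eth0. This also accounts for Samba "wanting" br0: the log shows rc.samba starting wsdd2 alongside smbd and nmbd, and the WSD2_OPT="-4 -i br0" entry in ident.cfg quoted above passes it that interface. Below is a minimal Python sketch that checks the interface named in a WSD2_OPT-style string against the interfaces that actually exist. `wsdd2_interface` and `check_wsdd2_interface` are hypothetical helpers, and the sketch does not cover how or when Unraid regenerates that setting.

```python
import os
import shlex
from typing import Iterable, Optional


def wsdd2_interface(opts: str) -> Optional[str]:
    """Return the interface passed to wsdd2 with -i, or None if there is none."""
    args = shlex.split(opts)
    for i, arg in enumerate(args):
        if arg == "-i" and i + 1 < len(args):
            return args[i + 1]
    return None


def check_wsdd2_interface(opts: str, available: Iterable[str]) -> str:
    """Report whether the -i interface in a WSD2_OPT-style string actually exists."""
    iface = wsdd2_interface(opts)
    if iface is not None and iface not in set(available):
        return f"wsdd2 would fail: interface {iface!r} does not exist"
    return "ok"


if __name__ == "__main__":
    # On Linux each network interface appears as an entry under /sys/class/net.
    present = os.listdir("/sys/class/net")
    # WSD2_OPT value as quoted from ident.cfg above
    print(check_wsdd2_interface("-4 -i br0", present))
```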

-

I thought the same, but then, looking at the logs, I realised that loading RC2 had left my docker.img as a read-only volume. btrfs complained of a corrupt superblock. Not sure if that's RC2-related or a coincidence. I'm now in the process of rebuilding my Docker image.

Edit: It looks like my entire cache pool/drive is read-only and also getting read errors. It was working before, and a reboot hasn't helped. I need to dig in and see what's happening. Diags attached if anyone wants to dig in deeper. tdm-diagnostics-20230320-0601.zip

-

From what I can work out, with Docker set to allow host access to custom networks, a second network appears on the same server IP address. I have 2 NICs, one for the Docker VLAN and one for server management, and both show two networks/MAC addresses at the same IP. It hasn't degraded performance. What would be nice, though I'm not sure it's possible, is to fix/lock the MAC address for br0 so that my network controller can label it properly. It seems to change at every reboot. It's not a performance issue or a showstopper; it would just help my OCD cope with my network controller better.

-

A quick question that has probably been answered before, but my search-fu isn't working today. Due to a catastrophic mobo failure, I have to replace my board and get my server up again quickly. The board that's arriving is an ASUS TUF B365M-Plus Gaming. After recently upgrading to 18TB disks, I can use the 6 onboard SATA ports + 2x M.2 with ports to spare. I also have an LSI 9211-8i controller from the current dead server and a recently purchased second-hand Dell HBA, which is an LSI 9207-8i. What's going to give me the best performance: everything on the onboard SATA, or an LSI running in the x4 PCIe slot? Would using the x16 slot make a difference? If we're talking minuscule differences, I might go with the LSI simply because the cabling will be neater. Any advice would be most welcome.
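For the x4 vs x16 slot question, what mostly matters is whether the PCIe link can carry every disk reading at once, as during a parity check. Below is a back-of-the-envelope Python sketch. The per-lane figures are the standard theoretical PCIe 2.0 and 3.0 rates after line encoding. The 9211-8i is a PCIe 2.0 x8 card and the 9207-8i a PCIe 3.0 x8 card, and a card links at the narrower of its own width and the slot's electrical width. The 270 MB/s per-drive figure and the count of 8 drives are assumptions. Controller and protocol overhead are ignored.

```python
# Theoretical one-direction PCIe throughput per lane after line encoding, in MB/s.
# PCIe 2.0: 5 GT/s with 8b/10b encoding    -> 500 MB/s per lane.
# PCIe 3.0: 8 GT/s with 128b/130b encoding -> ~984.6 MB/s per lane.
PER_LANE_MB_S = {
    "2.0": 5000 * 8 / 10 / 8,
    "3.0": 8000 * 128 / 130 / 8,
}


def link_mb_s(gen: str, lanes: int) -> float:
    """Theoretical one-direction bandwidth of a PCIe link in MB/s."""
    return PER_LANE_MB_S[gen] * lanes


def headroom(gen: str, lanes: int, drives: int, drive_mb_s: float) -> float:
    """Link bandwidth divided by the drives' combined sequential throughput (<1 = bottleneck)."""
    return link_mb_s(gen, lanes) / (drives * drive_mb_s)


if __name__ == "__main__":
    DRIVE_MB_S = 270  # ASSUMPTION: peak sequential rate of one 18TB HDD; check the datasheet
    DRIVES = 8        # ASSUMPTION: all eight HBA ports populated
    for card, gen in [("LSI 9211-8i", "2.0"), ("LSI 9207-8i", "3.0")]:
        for lanes in (4, 8):  # x4 slot, or x8 when fitted in the x16 slot
            r = headroom(gen, lanes, DRIVES, DRIVE_MB_S)
            print(f"{card} PCIe {gen} x{lanes}: {link_mb_s(gen, lanes):6.0f} MB/s, headroom {r:.2f}x")
```

Under these assumptions, the 9211-8i in an x4 slot gets about 2000 MB/s against 2160 MB/s of combined drive throughput. That is a slight bottleneck, and only when every disk is read at the same time. The 9207-8i in the same slot has roughly 1.8x headroom.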

-

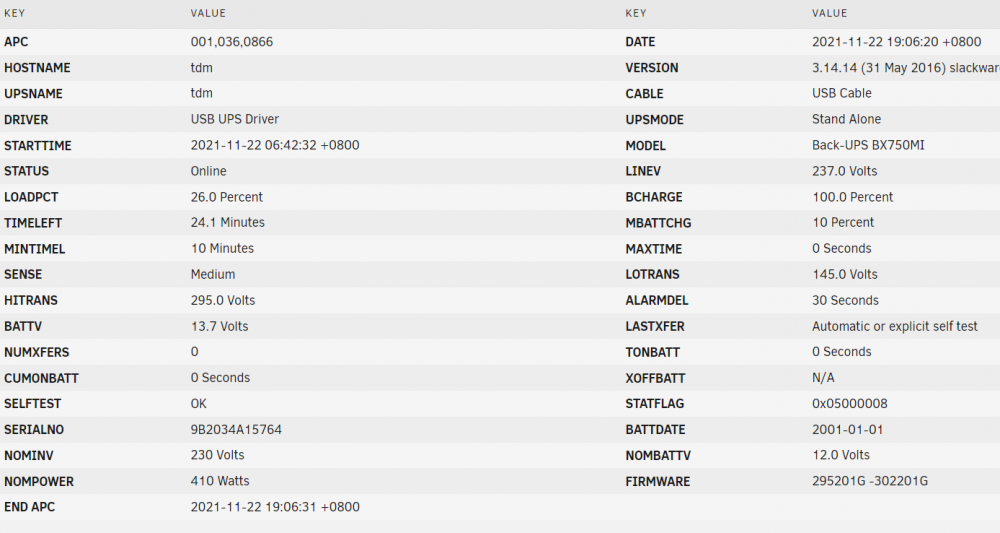

Thanks. No errors prior to NUT losing the USB connection.

-

Still getting this error with the latest update on my APC Back-UPS BX750MI. Once it loses the connection, I need to unplug the USB cable and plug it back in for it to work. I tried switching to the native Unraid UPS monitor while it was in this state, and it complained of a connection error. After reseating the USB cable, the Unraid UPS monitor works fine without any issues.

-

I've started seeing parity tuning errors in the syslog:

Mar 21 06:40:20 tdm root: RTNETLINK answers: File exists
Mar 21 06:40:20 tdm Parity Check Tuning: PHP error_reporting() set to E_ERROR|E_WARNING|E_PARSE|E_CORE_ERROR|E_CORE_WARNING|E_COMPILE_ERROR|E_COMPILE_WARNING|E_USER_ERROR|E_USER_WARNING|E_USER_NOTICE|E_STRICT|E_RECOVERABLE_ERROR|E_USER_DEPRECATED
Mar 21 06:40:20 tdm emhttpd: nothing to sync
Mar 21 06:40:20 tdm sudo: root : PWD=/ ; USER=root ; COMMAND=/bin/bash -c /usr/local/emhttp/plugins/controlr/controlr -port 2378 -certdir '/boot/config/ssl/certs' -showups
Mar 21 06:40:20 tdm sudo: pam_unix(sudo:session): session opened for user root(uid=0) by (uid=0)
Mar 21 06:40:20 tdm Parity Check Tuning: PHP error_reporting() set to E_ERROR|E_WARNING|E_PARSE|E_CORE_ERROR|E_CORE_WARNING|E_COMPILE_ERROR|E_COMPILE_WARNING|E_USER_ERROR|E_USER_WARNING|E_USER_NOTICE|E_STRICT|E_RECOVERABLE_ERROR|E_USER_DEPRECATED
Mar 21 06:40:21 tdm kernel: eth0: renamed from veth505b9c4

They appear fairly regularly throughout the syslog. I noticed them today while running RC3, and they're still there after RC4.

-

Not sure whether this was addressed in RC4, or whether the error was expected to go away, but it's still there:

Mar 21 06:40:06 tdm kernel: XFS (md1): Mounting V5 Filesystem
Mar 21 06:40:06 tdm kernel: XFS (md1): Ending clean mount
Mar 21 06:40:06 tdm emhttpd: shcmd (34): xfs_growfs /mnt/disk1
Mar 21 06:40:06 tdm kernel: xfs filesystem being mounted at /mnt/disk1 supports timestamps until 2038 (0x7fffffff)
Mar 21 06:40:06 tdm root: xfs_growfs: XFS_IOC_FSGROWFSDATA xfsctl failed: No space left on device
Mar 21 06:40:06 tdm root: meta-data=/dev/md1 isize=512 agcount=32, agsize=137330687 blks
Mar 21 06:40:06 tdm root: = sectsz=512 attr=2, projid32bit=1
Mar 21 06:40:06 tdm root: = crc=1 finobt=1, sparse=1, rmapbt=0
Mar 21 06:40:06 tdm root: = reflink=1 bigtime=0 inobtcount=0
Mar 21 06:40:06 tdm root: data = bsize=4096 blocks=4394581984, imaxpct=5
Mar 21 06:40:06 tdm root: = sunit=1 swidth=32 blks
Mar 21 06:40:06 tdm root: naming =version 2 bsize=4096 ascii-ci=0, ftype=1
Mar 21 06:40:06 tdm root: log =internal log bsize=4096 blocks=521728, version=2
Mar 21 06:40:06 tdm root: = sectsz=512 sunit=1 blks, lazy-count=1
Mar 21 06:40:06 tdm root: realtime =none extsz=4096 blocks=0, rtextents=0
Mar 21 06:40:06 tdm kernel: XFS (md1): EXPERIMENTAL online shrink feature in use. Use at your own risk!
Mar 21 06:40:06 tdm emhttpd: shcmd (34): exit status: 1
Mar 21 06:40:06 tdm emhttpd: shcmd (35): mkdir -p /mnt/disk2
Mar 21 06:40:06 tdm emhttpd: shcmd (36): mount -t xfs -o noatime,nouuid /dev/md2 /mnt/disk2
Mar 21 06:40:06 tdm kernel: XFS (md2): Mounting V5 Filesystem
Mar 21 06:40:07 tdm kernel: XFS (md2): Ending clean mount
Mar 21 06:40:07 tdm kernel: xfs filesystem being mounted at /mnt/disk2 supports timestamps until 2038 (0x7fffffff)
Mar 21 06:40:07 tdm emhttpd: shcmd (37): xfs_growfs /mnt/disk2
Mar 21 06:40:07 tdm root: xfs_growfs: XFS_IOC_FSGROWFSDATA xfsctl failed: No space left on device
Mar 21 06:40:07 tdm root: meta-data=/dev/md2 isize=512 agcount=32, agsize=137330687 blks
Mar 21 06:40:07 tdm root: = sectsz=512 attr=2, projid32bit=1
Mar 21 06:40:07 tdm root: = crc=1 finobt=1, sparse=1, rmapbt=0
Mar 21 06:40:07 tdm root: = reflink=1 bigtime=0 inobtcount=0
Mar 21 06:40:07 tdm root: data = bsize=4096 blocks=4394581984, imaxpct=5
Mar 21 06:40:07 tdm root: = sunit=1 swidth=32 blks
Mar 21 06:40:07 tdm root: naming =version 2 bsize=4096 ascii-ci=0, ftype=1
Mar 21 06:40:07 tdm root: log =internal log bsize=4096 blocks=521728, version=2
Mar 21 06:40:07 tdm root: = sectsz=512 sunit=1 blks, lazy-count=1
Mar 21 06:40:07 tdm root: realtime =none extsz=4096 blocks=0, rtextents=0
Mar 21 06:40:07 tdm emhttpd: shcmd (37): exit status: 1
Mar 21 06:40:07 tdm emhttpd: shcmd (38): mkdir -p /mnt/disk3
Mar 21 06:40:07 tdm emhttpd: shcmd (39): mount -t xfs -o noatime,nouuid /dev/md3 /mnt/disk3
Mar 21 06:40:07 tdm kernel: XFS (md3): Mounting V5 Filesystem
Mar 21 06:40:07 tdm kernel: XFS (md3): Ending clean mount
Mar 21 06:40:07 tdm kernel: xfs filesystem being mounted at /mnt/disk3 supports timestamps until 2038 (0x7fffffff)
Mar 21 06:40:07 tdm emhttpd: shcmd (40): xfs_growfs /mnt/disk3
Mar 21 06:40:07 tdm root: meta-data=/dev/md3 isize=512 agcount=32, agsize=30523583 blks
Mar 21 06:40:07 tdm root: = sectsz=512 attr=2, projid32bit=1
Mar 21 06:40:07 tdm root: = crc=1 finobt=1, sparse=1, rmapbt=0
Mar 21 06:40:07 tdm root: = reflink=1 bigtime=0 inobtcount=0
Mar 21 06:40:07 tdm root: data = bsize=4096 blocks=976754633, imaxpct=5
Mar 21 06:40:07 tdm root: = sunit=1 swidth=32 blks
Mar 21 06:40:07 tdm root: naming =version 2 bsize=4096 ascii-ci=0, ftype=1
Mar 21 06:40:07 tdm root: log =internal log bsize=4096 blocks=476930, version=2
Mar 21 06:40:07 tdm root: = sectsz=512 sunit=1 blks, lazy-count=1
Mar 21 06:40:07 tdm root: realtime =none extsz=4096 blocks=0, rtextents=0
Mar 21 06:40:07 tdm emhttpd: shcmd (41): mkdir -p /mnt/cache
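The meta-data= and data = lines that xfs_growfs prints describe each filesystem's geometry. Below is a minimal Python sketch that pulls the allocation-group (AG) numbers and data block count out of those two lines. It converts the block count to bytes and checks whether the AGs exactly fill the data section. `xfs_geometry` is a hypothetical helper, and this is arithmetic on the printed values, not a diagnosis of the growfs failure.

```python
import re

# "key=number" pairs such as "agcount=32," or "blocks=4394581984,"
PAIR = re.compile(r"(\w+)=(\d+)")


def xfs_geometry(meta_line: str, data_line: str) -> dict:
    """Summarise the meta-data= and data = lines printed by xfs_growfs / xfs_info."""
    meta = {k: int(v) for k, v in PAIR.findall(meta_line)}
    data = {k: int(v) for k, v in PAIR.findall(data_line)}
    blocks, bsize = data["blocks"], data["bsize"]
    full_ags_blocks = meta["agcount"] * meta["agsize"]
    return {
        "bytes": blocks * bsize,
        # True when every AG is full size, i.e. agcount * agsize == data blocks
        "ags_fill_device": full_ags_blocks == blocks,
        # How many blocks the last AG is short of a full AG (0 when they tile exactly)
        "last_ag_short_by": full_ags_blocks - blocks,
    }


if __name__ == "__main__":
    md1 = xfs_geometry("meta-data=/dev/md1 isize=512 agcount=32, agsize=137330687 blks",
                       "data = bsize=4096 blocks=4394581984, imaxpct=5")
    md3 = xfs_geometry("meta-data=/dev/md3 isize=512 agcount=32, agsize=30523583 blks",
                       "data = bsize=4096 blocks=976754633, imaxpct=5")
    for name, g in [("md1", md1), ("md3", md3)]:
        print(name, f"{g['bytes'] / 1e12:.1f} TB", g["ags_fill_device"], g["last_ag_short_by"])
```

On md1 and md2, 32 AGs of 137330687 blocks add up to exactly 4394581984 data blocks, about 18.0 TB at 4096-byte blocks. On md3 (about 4.0 TB), the last AG is 23 blocks short of a full AG. The log alone does not show whether that difference has anything to do with xfs_growfs failing only on md1 and md2.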

-

On the pool disks tab, the second pool shows this error:

Balance Status
btrfs filesystem df:
Data, single: total=30.00GiB, used=21.21GiB
System, RAID1: total=32.00MiB, used=16.00KiB
Metadata, RAID1: total=2.00GiB, used=29.98MiB
GlobalReserve, single: total=261.73MiB, used=0.00B
btrfs balance status:
No balance found on '/mnt/rad'
Current usage ratio: Warning: A non-numeric value encountered in /usr/local/emhttp/plugins/dynamix/include/DefaultPageLayout.php(515) : eval()'d code on line 542 0 % --- Warning: A non-numeric value encountered in /usr/local/emhttp/plugins/dynamix/include/DefaultPageLayout.php(515) : eval()'d code on line 542 Full Balance recommended
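The page shows "0 %" next to a PHP "non-numeric value" warning. That suggests the ratio was never computed from real numbers, so the "Full Balance recommended" advice may not be meaningful either. As a sanity check, a ratio can be worked out by hand from the `btrfs filesystem df` lines: 21.21 GiB used of 30.00 GiB allocated for Data is about 70.7 %. Below is a minimal Python sketch of that calculation. It assumes the Data row alone is what matters, which is not necessarily how Unraid defines its usage ratio. `data_usage_ratio` is a hypothetical helper.

```python
import re

UNITS = {"B": 1, "KiB": 2**10, "MiB": 2**20, "GiB": 2**30, "TiB": 2**40}
# Matches rows like "Data, single: total=30.00GiB, used=21.21GiB"
ROW = re.compile(
    r"(?P<kind>\w+), (?P<profile>\w+): "
    r"total=(?P<total>[\d.]+)(?P<tu>[KMGT]?i?B), used=(?P<used>[\d.]+)(?P<uu>[KMGT]?i?B)"
)


def data_usage_ratio(df_text: str) -> float:
    """Percent of allocated Data space in use, from `btrfs filesystem df` output."""
    for m in ROW.finditer(df_text):
        if m["kind"] == "Data":
            total = float(m["total"]) * UNITS[m["tu"]]
            used = float(m["used"]) * UNITS[m["uu"]]
            if total == 0:
                raise ValueError("Data row has zero total")
            return 100 * used / total
    raise ValueError("no Data row found")


if __name__ == "__main__":
    df = """Data, single: total=30.00GiB, used=21.21GiB
System, RAID1: total=32.00MiB, used=16.00KiB
Metadata, RAID1: total=2.00GiB, used=29.98MiB
GlobalReserve, single: total=261.73MiB, used=0.00B"""
    print(f"{data_usage_ratio(df):.1f} %")
```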

-

I installed 6.10.0-rc3 and I still get the same error/warning:

Mar 12 09:50:42 tdm kernel: XFS (md1): Mounting V5 Filesystem
Mar 12 09:50:42 tdm kernel: XFS (md1): Ending clean mount
Mar 12 09:50:42 tdm kernel: xfs filesystem being mounted at /mnt/disk1 supports timestamps until 2038 (0x7fffffff)
Mar 12 09:50:42 tdm emhttpd: shcmd (34): xfs_growfs /mnt/disk1
Mar 12 09:50:42 tdm kernel: XFS (md1): EXPERIMENTAL online shrink feature in use. Use at your own risk!
Mar 12 09:50:42 tdm root: xfs_growfs: XFS_IOC_FSGROWFSDATA xfsctl failed: No space left on device
Mar 12 09:50:42 tdm root: meta-data=/dev/md1 isize=512 agcount=32, agsize=137330687 blks
Mar 12 09:50:42 tdm root: = sectsz=512 attr=2, projid32bit=1
Mar 12 09:50:42 tdm root: = crc=1 finobt=1, sparse=1, rmapbt=0
Mar 12 09:50:42 tdm root: = reflink=1 bigtime=0 inobtcount=0
Mar 12 09:50:42 tdm root: data = bsize=4096 blocks=4394581984, imaxpct=5
Mar 12 09:50:42 tdm root: = sunit=1 swidth=32 blks
Mar 12 09:50:42 tdm root: naming =version 2 bsize=4096 ascii-ci=0, ftype=1
Mar 12 09:50:42 tdm root: log =internal log bsize=4096 blocks=521728, version=2
Mar 12 09:50:42 tdm root: = sectsz=512 sunit=1 blks, lazy-count=1
Mar 12 09:50:42 tdm root: realtime =none extsz=4096 blocks=0, rtextents=0
Mar 12 09:50:42 tdm emhttpd: shcmd (34): exit status: 1
Mar 12 09:50:42 tdm emhttpd: shcmd (35): mkdir -p /mnt/disk2
Mar 12 09:50:42 tdm emhttpd: shcmd (36): mount -t xfs -o noatime,nouuid /dev/md2 /mnt/disk2
Mar 12 09:50:42 tdm kernel: XFS (md2): Mounting V5 Filesystem
Mar 12 09:50:42 tdm kernel: XFS (md2): Ending clean mount
Mar 12 09:50:42 tdm emhttpd: shcmd (37): xfs_growfs /mnt/disk2
Mar 12 09:50:42 tdm kernel: xfs filesystem being mounted at /mnt/disk2 supports timestamps until 2038 (0x7fffffff)
Mar 12 09:50:43 tdm root: xfs_growfs: XFS_IOC_FSGROWFSDATA xfsctl failed: No space left on device
Mar 12 09:50:43 tdm root: meta-data=/dev/md2 isize=512 agcount=32, agsize=137330687 blks
Mar 12 09:50:43 tdm root: = sectsz=512 attr=2, projid32bit=1
Mar 12 09:50:43 tdm root: = crc=1 finobt=1, sparse=1, rmapbt=0
Mar 12 09:50:43 tdm root: = reflink=1 bigtime=0 inobtcount=0
Mar 12 09:50:43 tdm root: data = bsize=4096 blocks=4394581984, imaxpct=5
Mar 12 09:50:43 tdm root: = sunit=1 swidth=32 blks
Mar 12 09:50:43 tdm root: naming =version 2 bsize=4096 ascii-ci=0, ftype=1
Mar 12 09:50:43 tdm root: log =internal log bsize=4096 blocks=521728, version=2
Mar 12 09:50:43 tdm root: = sectsz=512 sunit=1 blks, lazy-count=1
Mar 12 09:50:43 tdm root: realtime =none extsz=4096 blocks=0, rtextents=0
Mar 12 09:50:43 tdm emhttpd: shcmd (37): exit status: 1
Mar 12 09:50:43 tdm emhttpd: shcmd (38): mkdir -p /mnt/disk3
Mar 12 09:50:43 tdm emhttpd: shcmd (39): mount -t xfs -o noatime,nouuid /dev/md3 /mnt/disk3
Mar 12 09:50:43 tdm kernel: XFS (md3): Mounting V5 Filesystem
Mar 12 09:50:43 tdm kernel: XFS (md3): Ending clean mount
Mar 12 09:50:43 tdm emhttpd: shcmd (40): xfs_growfs /mnt/disk3
Mar 12 09:50:43 tdm kernel: xfs filesystem being mounted at /mnt/disk3 supports timestamps until 2038 (0x7fffffff)
Mar 12 09:50:43 tdm root: meta-data=/dev/md3 isize=512 agcount=32, agsize=30523583 blks
Mar 12 09:50:43 tdm root: = sectsz=512 attr=2, projid32bit=1
Mar 12 09:50:43 tdm root: = crc=1 finobt=1, sparse=1, rmapbt=0
Mar 12 09:50:43 tdm root: = reflink=1 bigtime=0 inobtcount=0
Mar 12 09:50:43 tdm root: data = bsize=4096 blocks=976754633, imaxpct=5
Mar 12 09:50:43 tdm root: = sunit=1 swidth=32 blks
Mar 12 09:50:43 tdm root: naming =version 2 bsize=4096 ascii-ci=0, ftype=1
Mar 12 09:50:43 tdm root: log =internal log bsize=4096 blocks=476930, version=2
Mar 12 09:50:43 tdm root: = sectsz=512 sunit=1 blks, lazy-count=1
Mar 12 09:50:43 tdm root: realtime =none extsz=4096 blocks=0, rtextents=0
Mar 12 09:50:43 tdm emhttpd: shcmd (41): mkdir -p /mnt/cache

-

Thanks, that makes sense. I didn't think of that, but yes, I'll try the Unraid way first. It makes life a lot easier.

-

I've only just started to really use this plugin and stopped adding scripts to crontab. Kicking myself for not migrating to it earlier. Quick question: I need to add an entry to the hosts file of about 3 containers after they start up (a server I'm trying to reach has had its domain name snatched, so a hosts file entry is the only way to get to it). Is there a way I can trigger CAUS to run a script when a container starts? Or maybe 5/10 minutes after array start? While I'm at it, any tips on writing a script that can be run from the console to add hosts entries in Docker containers? Or is there a simpler way to do it?
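For the console-script part, one approach is `docker exec` into each running container and append the line to /etc/hosts. Below is a minimal Python sketch that assumes each container has `sh` and `grep`, which is true of most but not all images. The container names, IP and hostname are hypothetical placeholders, and `add_host_cmd` is a hypothetical helper. An edit made this way disappears when a container is recreated, for example after an update, so the script would need re-running. A simpler alternative is Docker's own `--add-host=hostname:ip` option, which writes the entry at container creation. On Unraid it could presumably go in the template's Extra Parameters field.

```python
import shlex
import subprocess


def add_host_cmd(container: str, ip: str, hostname: str) -> list:
    """Build a `docker exec` command that adds "ip hostname" to a container's /etc/hosts."""
    line = f"{ip} {hostname}"
    quoted = shlex.quote(line)
    # grep -qxF checks for the exact line first, so re-running the script is harmless
    script = f"grep -qxF {quoted} /etc/hosts || echo {quoted} >> /etc/hosts"
    return ["docker", "exec", container, "sh", "-c", script]


if __name__ == "__main__":
    # HYPOTHETICAL values: replace with your container names and the server's IP/name.
    IP, NAME = "203.0.113.10", "server.example.com"
    for container in ["container1", "container2", "container3"]:
        subprocess.run(add_host_cmd(container, IP, NAME), check=True)
```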

-

Did this last weekend. Being slightly OCD about my server, there's no way I could leave it with gaps in the disk numbers. The parity rebuild took 20-odd hours, but the server was up and running while it was doing it, so there was no real impact.
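A parity rebuild processes the whole parity disk once, so its duration is roughly parity capacity divided by average throughput. Below is a back-of-the-envelope Python sketch. This post does not give the parity size or speed, so the example figures are illustrative assumptions. Real averages also fall as the heads move toward the inner tracks.

```python
def rebuild_hours(parity_tb: float, avg_mb_s: float) -> float:
    """Rough parity rebuild/check time in hours.

    Uses decimal units as on drive labels: 1 TB = 1e12 bytes, 1 MB/s = 1e6 bytes/s.
    """
    return parity_tb * 1e12 / (avg_mb_s * 1e6) / 3600


if __name__ == "__main__":
    # ILLUSTRATIVE assumptions: an 18 TB parity disk averaging 250 MB/s end to end.
    print(f"{rebuild_hours(18, 250):.1f} hours")
```

With those example numbers the estimate is 20 hours, in line with the "20-odd hours" above.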