Everything posted by casperse

-

Installing v6.12.1 solved it for me.

-

Thanks @OrneryTaurus. I confronted the seller again, who had kept claiming this was full height, and she told me she got the wrong info from the manufacturer. So I am back to the old case, with the full extra height for a very big air cooler 🙂 I just need to get a better deal. They have a 2000W PSU with redundancy, but I have never had anything like this. It does support a normal PSU, but I can get a dual setup for the same money?

-

I just got the new update 6.12.1 installed and it's back to normal. Even the parity check can now run with all services running, all at around +60% CPU like before! My only issue is that the "logs" have not been freed up after the update to 6.12.1. Before, after problems and a reboot, the utilization of my cache drive went back to normal (I suspect some logging got flushed), but now after the update I didn't get the space back: my cache drive still gives a warning about high utilization. Is there any command I can run to "clean" up my cache drive?
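Before cleaning anything, it may help to see which files are actually taking the space. Below is a minimal Python sketch that only lists the largest files under a directory and deletes nothing. The path `/mnt/cache` is an assumption (it depends on what the cache pool is named), and `largest_files` is a hypothetical helper, not an Unraid command.

```python
import heapq
import os


def largest_files(root, top_n=10):
    """Return up to top_n (size_bytes, path) tuples under root, largest first."""
    sizes = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                sizes.append((os.path.getsize(path), path))
            except OSError:
                # File vanished or is unreadable (e.g. a broken symlink): skip it.
                continue
    return heapq.nlargest(top_n, sizes)


if __name__ == "__main__":
    # Assumed mount point of the cache pool; adjust to your own setup.
    for size, path in largest_files("/mnt/cache"):
        print(f"{size / 1e9:8.2f} GB  {path}")
```

This is read-only, so it is safe to run while dockers are up; what to do with the files it finds is a separate decision.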

-

UPDATE: (I set the status to urgent since I could see many people reporting high CPU usage; please change the status if needed.) After upgrading to 6.12.0 I experienced very high CPU usage, to the extent that the whole system became unresponsive. Dockers stopped working and I got the cache drive warning; some log is filling it up. I also had to stop a parity check (too few resources, it almost crashed the system). The log shows this today: Diagnostics attached. I also tried PINNING the cores to get some functions back. That did help with opening the Unraid GUI, but not with anything else. I am almost ready to do a rollback. Any help would be really appreciated, as I would like to stay on this version. diagnostics-20230619-2110.zip

-

I upgraded today, 3 hours ago, and I am now seeing the same problem: 100% and unresponsive! I guess I had better roll back to the RC version too. Weird, I also got a warning about utilization on my cache drive and found this: Looking at the logs window:

-

diagnostics-20230613-1307.zip

-

I really believe that I am having the same issue as you after upgrading! I upgraded to v6.12.0-rc6, and CPU and RAM usage have been much higher than before! CPU is now always around +80%, and the services find and shfs are now always up. At one point I had to cut the power because it stayed unresponsive for hours. Diag file attached.

-

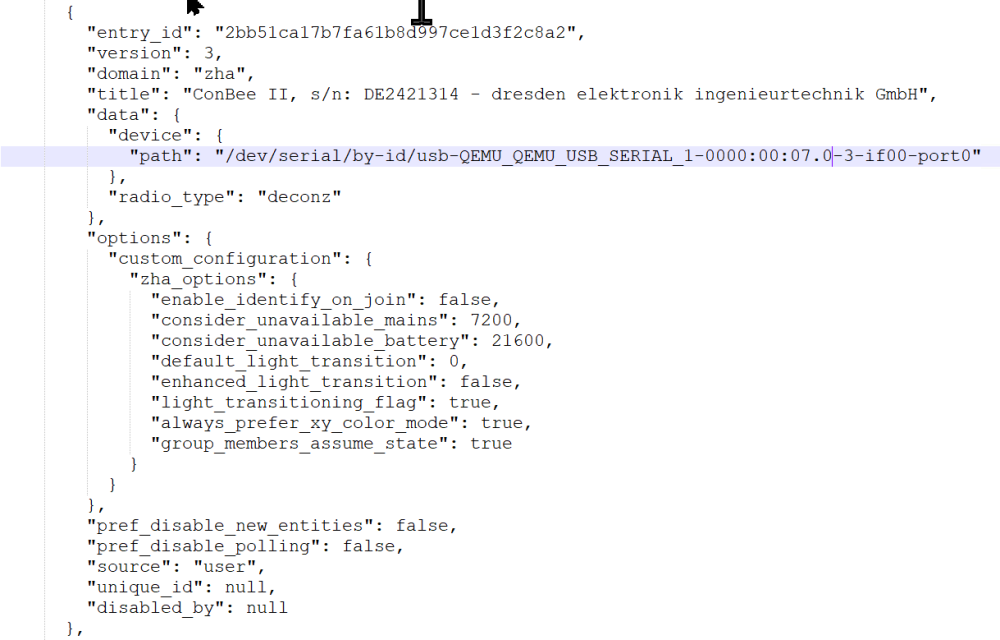

I finally took the courage to delete and re-add the ZHA integration in HA (hoping it would recover all my devices again!), and the new path is: { "entry_id": "1c7ac4a53b85198687c853f65f3a7fa6", "version": 3, "domain": "zha", "title": "/dev/ttyUSB0", "data": { "device": { "path": "/dev/serial/by-id/usb-QEMU_QEMU_USB_SERIAL_1-0000:00:07.0-4-if00-port0" }, "radio_type": "deconz" }, "options": {}, "pref_disable_new_entities": false, "pref_disable_polling": false, "source": "user", "unique_id": null, "disabled_by": null } And it seems to be working for both USB devices now! 🙂 But only one is listed as connected; the other one still says "Virsh Error". Both use port 4 under "Connect as serial", and I have not been able to change them. Can I just ignore this error? It looks like it is working OK in HA.
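For anyone comparing their own entry against the one above, here is a small Python sketch that pulls the serial device path out of a ZHA config entry and checks whether it uses the persistent `/dev/serial/by-id/` form rather than a `/dev/ttyUSB*` name that can change between boots. The helper names are made up for illustration, and `ENTRY` is simply the JSON pasted in this post.

```python
import json

# The config entry exactly as pasted in the post above.
ENTRY = """
{ "entry_id": "1c7ac4a53b85198687c853f65f3a7fa6", "version": 3, "domain": "zha",
  "title": "/dev/ttyUSB0",
  "data": { "device": { "path": "/dev/serial/by-id/usb-QEMU_QEMU_USB_SERIAL_1-0000:00:07.0-4-if00-port0" },
            "radio_type": "deconz" },
  "options": {}, "pref_disable_new_entities": false, "pref_disable_polling": false,
  "source": "user", "unique_id": null, "disabled_by": null }
"""


def zha_device_path(entry_json):
    """Return the serial device path stored in a ZHA config entry (hypothetical helper)."""
    entry = json.loads(entry_json)
    if entry.get("domain") != "zha":
        raise ValueError("not a ZHA config entry")
    return entry["data"]["device"]["path"]


def is_by_id_path(path):
    """True if the path is a persistent /dev/serial/by-id/ symlink."""
    return path.startswith("/dev/serial/by-id/")


if __name__ == "__main__":
    path = zha_device_path(ENTRY)
    note = "by-id (persistent)" if is_by_id_path(path) else "may change between boots"
    print(f"{path} -> {note}")
```

Note that the entry's "title" still says /dev/ttyUSB0 while the actual "path" is the by-id one; the sketch reads only the path.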

-

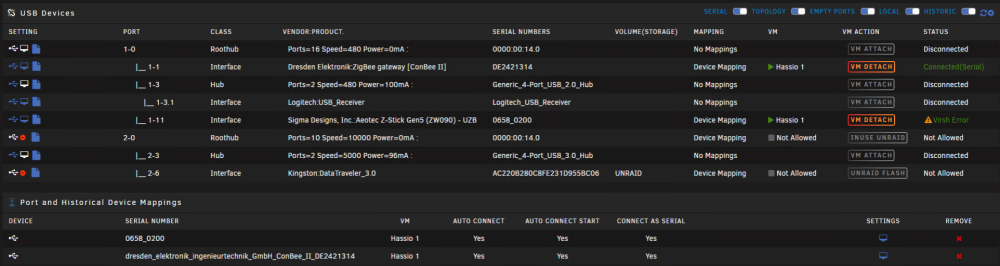

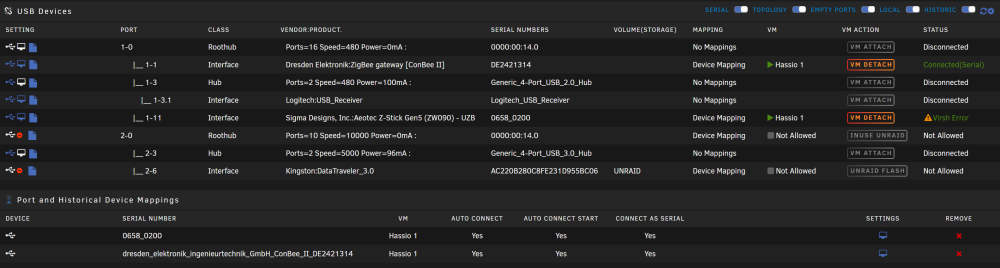

Thanks. (Everything worked before the Unraid update.)

root@:~# cat /usr/local/emhttp/state/usb.ini | grep error
virsherror = 1
virsh = "error: Failed to attach device from /tmp/libvirthotplugusbbybusHassio 1-001-059.xml error: XML error: Duplicate USB address bus 0 port 4

root@~# cat /usr/local/emhttp/state/usb.ini | grep vir
virsherror = 1
virsh = "error: Failed to attach device from /tmp/libvirthotplugusbbybusHassio 1-001-059.xml
root@:~#

UPDATE: (I am really trying to get this working; it's not a happy house when Hassio isn't running 🙂) I have tried detach and attach and now get this: The one that says connected doesn't work in HA. I don't know what the correct configuration should be in HA. If anyone knows what the blue line should be, then let me know 🙂
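To make the grep output above easier to read, here is a rough Python sketch that scans text shaped like those lines and extracts the bus/port from any "Duplicate USB address" message. It assumes the `key = value` layout seen in the grep output; the full format of usb.ini is not shown in this thread, and `find_virsh_errors` is a hypothetical helper.

```python
import re

DUPLICATE_RE = re.compile(r"Duplicate USB address bus (\d+) port (\d+)")

# Sample text copied from the grep output in the post above.
SAMPLE = '''virsherror = 1
virsh = "error: Failed to attach device from /tmp/libvirthotplugusbbybusHassio 1-001-059.xml error: XML error: Duplicate USB address bus 0 port 4
'''


def find_virsh_errors(text):
    """Return one dict per 'virsh = ...' line, with the duplicate (bus, port) if present."""
    errors = []
    for line in text.splitlines():
        key, sep, value = line.partition("=")
        if not sep or key.strip() != "virsh":
            continue  # skips 'virsherror = 1' and unrelated lines
        message = value.strip().strip('"')
        match = DUPLICATE_RE.search(message)
        errors.append({
            "message": message,
            "duplicate_address": (int(match.group(1)), int(match.group(2))) if match else None,
        })
    return errors


if __name__ == "__main__":
    for err in find_virsh_errors(SAMPLE):
        print(err["duplicate_address"], "-", err["message"])
```

In this case the message points at bus 0 port 4, the same port 4 that both devices are set to under "Connect as serial".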

-

I found the HA settings, but I'm not sure what to change: { "entry_id": "2bb51ca17b7fa61b8d997ce1d3f2c8a2", "version": 3, "domain": "zha", "title": "ConBee II, s/n: DE2421314 - dresden elektronik ingenieurtechnik GmbH", "data": { "device": { "path": "/dev/serial/by-id/usb-QEMU_QEMU_USB_SERIAL_1-0000:00:07.0-4-if00-port0" }, "radio_type": "deconz" }, The mappings now look like this:

-

I did have access to the flash drive under the rootshare before. But you are right, I can just map to the flash from the hostname/IP; I just liked having everything in one rootshare 🙂

-

I just updated to 6.12.0-RC6 and after this I am getting VIRSH errors? And connected outside? Not sure what to do. The problem is that without these two USB devices, Home Assistant doesn't work 😞

-

I have now made sure that CPU-0 / Core6 is not pinned to anything (hoping this would give me access to the UI after the upgrade). I now face a new issue: it seems my "ident.cfg" on my flash has been wiped clean, the server is named TOWER again, and so on. I then wanted to use my flash backup and replace the ident.cfg with the original one in the flash config folder. But after the upgrade to 6.12.0-RC6 I no longer have access to my "flash" when I access my "Root share". Can anyone help me out? Or is this a bug? (New mount points.) I haven't changed anything on the flash (my Windows user has read/write access):

-

I am also looking at building with the i5, but I really want 128 GB of ECC RAM and so far haven't found any modules (4x32). If anyone has a link, please let me know. I also just noticed that Corsair is coming out with 192 GB DDR5 RAM (it is not ECC, but it should have some of the ECC functionality?): Pushing the Boundaries of DDR5 – CORSAIR® Launches New 48GB, 96GB and 192GB Memory Kits

-

No, but I do have a VM with Chrome that has open tabs to Unraid. I ended up having to cut the power (not even an SSH terminal could get access), so I rolled back to the stable version. Everything works fine now (same open window on my VM).

-

Hi all. I just did the upgrade from stable to this release candidate (rc6), and after some time the system got really unresponsive and I got this from the log terminal (never seen this before): I then got the diag. (It took forever, but I got it in the end 🙂) I hope someone can shed some light on this (it looks like all my dockers and VMs are still running). diagnostics-20230521-1109.zip

-

$800, nice price! (I haven't found anything in that price range.) I didn't know about the Tesla 4 card, but I have other cards and I don't want to replace them all with half-height ones 🙂 Also, cooling for new CPUs looks like it will require bigger fans, so the extra height would allow me to use something like a Noctua NH-D15.

Yes, I agree the shipping and the quality of the case would be a risk. But so was buying my last case from the UK, and so far that has been working great: old Norco RPC-4224 4U (UK SC-4324S).

I am talking to them about another case with 36 drives that they claim has full PCIe height. I have written to them again and they say it is full height: In-Win IW-RS436-07 - 2U Server Chassis with 36x SATA/SAS/NVMe Hot-Swap Bays - Includes 1200W CRPS Redundant PSU and Rail Kit - Server Case

I think this one has better build quality, and its price is a lot higher! But I will get 2x PSU (1200W) with this purchase. (Not sure it would have all the new power cables needed for new CPUs, motherboards, GPUs, etc.) Drawbacks: "only 36 drives and no room for the extremely tall CPU fan coolers". I just can't believe that this is really full-height PCIe. (The seller claims this, but the pictures don't look like it, or do they?) So either go for this, or try the Innovision case?

Thanks for your feedback @OrneryTaurus, much appreciated! I just really want one new case to "rule them all". The 72 is a beast, and if the quality is OK I might dare to roll the dice on it 🙂 or buy some spare parts when ordering? I could even have two Unraid servers running in the same case, and use the old server for backup and experimenting with Proxmox and VMs.

-

Hi all. I always wanted a Storinator case, but the cost is just too high! Lately I might have found an alternative that could solve a lot of my problems. Most cases with 36 or more drive bays only have half-height PCIe slots! (You can't even fit a P2000 in them!) Also, the new CPUs require a lot more cooling, so having half the height for a fan cooler isn't really ideal. So this might be a solution? (I would appreciate any input on possible problems.)

I am new to backplane extenders and not sure my existing HBA would be compatible with this case. My HBA is an LSI Logic SAS 9305-24i Host Bus Adapter - x8, PCIe 3.0, 8000 MB/s. Would this be able to utilize all these bays? I also have, from my last build:

LSI Logic SAS 9201-8i PCI-e Controller / 9211-8i (IT-mode) / Full height bracket (2 connectors = 8 SATA)
LSI Logic SAS 9201-16i PCI-e 2.0 x8 SAS 6Gbs HBA Card (4 connectors = 16 SATA)

Again, I really like the airflow and height of this case for a full-height CPU air cooler, and the 8 x 120mm fans (according to the specs). Noise is not an issue.

High Dense Rack Mount 8U 72bay Top-loaded Storage Server Chassis

-

ERRORS: Emulating two drives during a rebuild! :-(

casperse replied to casperse's topic in General Support

Hi JorgeB. It just finished; it must have sped up in the end... and it says that the array is OK for both drives now? While it was running it only stated that it was rebuilding the 18TB drive and not Drive 1 (12TB), but according to Unraid my array is fine now. Anyway, I am now looking into building another Unraid server as a backup server (this was kind of a wake-up call). Again, thanks for all your help. So happy to be up and running again with parity protection on all drives!

-

ERRORS: Emulating two drives during a rebuild! :-(

casperse replied to casperse's topic in General Support

Hi JorgeB. Speed peaks at 120 MB/sec and is now pretty low, 8.6 MB/sec most of the time:

lspci -d 1000: -vv

My current PCIe slots and placement of HW:

PCIe slot 1: x8 NVIDIA Quadro P2000
PCIe slot 2: x4 NVIDIA GeForce RTX 3060
PCIe slot 3: x8 LSI Logic SAS 9305-24i Host Bus Adapter
PCIe slot 4: x4 M.2 NVMe ICY BOX: IB-PCI215M2-HSL adapter

New placement?

PCIe slot 1: x8 LSI Logic SAS 9305-24i Host Bus Adapter
PCIe slot 2: x4 NVIDIA Quadro P2000
PCIe slot 3: x8 NVIDIA GeForce RTX 3060
PCIe slot 4: x4 M.2 NVMe ICY BOX: IB-PCI215M2-HSL adapter

-

ERRORS: Emulating two drives during a rebuild! :-(

casperse replied to casperse's topic in General Support

Thanks, I will try that after the rebuild is done. I think the HBA is in one of the x8 slots. Unfortunately it looks like it is going to take a very long time (4-5 days) for the 18TB + 12TB drives to rebuild. Are there any tweaks I can use to do this faster? (I am thinking of the disk settings; most of my drives are larger and faster.) So far I think they are very standard values. (I have tried to search the forum but haven't found any newer posts about this subject.)
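As a sanity check on the numbers in this thread, here is a back-of-the-envelope Python sketch of how rebuild time relates to average speed. It assumes decimal units (1 TB = 10^12 bytes, 1 MB = 10^6 bytes), a constant speed, and that the total time is set by the largest drive (18TB); the function names are only for illustration.

```python
SECONDS_PER_DAY = 86_400


def rebuild_days(size_tb, speed_mb_s):
    """Days needed to process size_tb terabytes at a constant speed_mb_s MB/sec."""
    return size_tb * 1e12 / (speed_mb_s * 1e6) / SECONDS_PER_DAY


def required_speed_mb_s(size_tb, days):
    """Average MB/sec needed to process size_tb terabytes in the given number of days."""
    return size_tb * 1e12 / (days * SECONDS_PER_DAY) / 1e6


if __name__ == "__main__":
    for speed in (120, 8.6):
        print(f"18 TB at {speed} MB/sec: {rebuild_days(18, speed):.1f} days")
    for days in (4, 5):
        print(f"18 TB in {days} days needs about {required_speed_mb_s(18, days):.1f} MB/sec on average")
```

Under these assumptions, 120 MB/sec sustained would finish in under 2 days and 8.6 MB/sec sustained would take over 24 days, so a 4-5 day estimate corresponds to an average of roughly 42-52 MB/sec.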