-

Posts

11265 -

Joined

-

Last visited

-

Days Won

123

Content Type

Profiles

Forums

Downloads

Store

Gallery

Bug Reports

Documentation

Landing

Everything posted by mgutt

-

Bitte im Terminal das ausführen: find /mnt/user/isos -ls Ergebnis?

-

Yes. Read this post:

-

I found a new container which permanently wrote to my SSD:

-

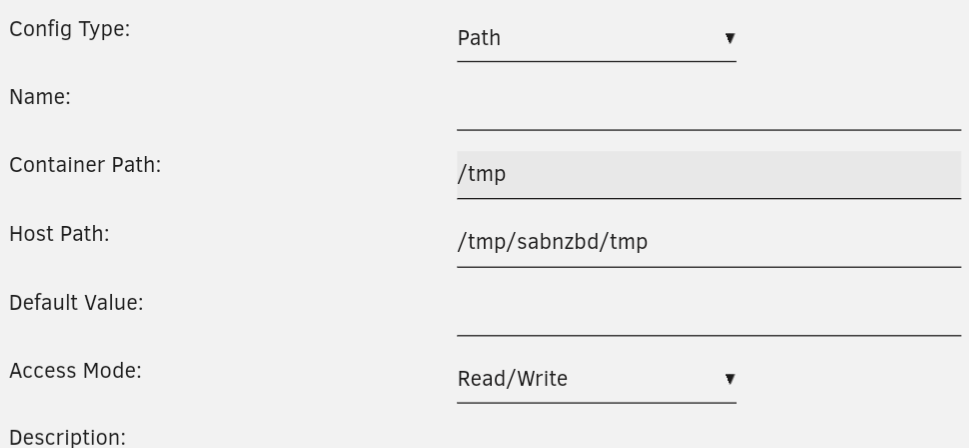

@binhex Could you please add this path to the default config to reduce wear on the SSD: Sab is permanently writing to these files: root@thoth:~# find /tmp/sabnzbd/ /tmp/sabnzbd/ /tmp/sabnzbd/tmp /tmp/sabnzbd/tmp/vpngatewayip /tmp/sabnzbd/tmp/vpnip /tmp/sabnzbd/tmp/getiptables /tmp/sabnzbd/tmp/watchdog-script-stderr---supervisor-jgbmg455.log /tmp/sabnzbd/tmp/watchdog-script-stdout---supervisor-dva2j1vt.log /tmp/sabnzbd/tmp/start-script-stderr---supervisor-5i8155s4.log /tmp/sabnzbd/tmp/start-script-stdout---supervisor-whva770j.log /tmp/sabnzbd/tmp/endpoints And by using /tmp this are written to unRAID's RAM disk.

-

This won't interfere, but you already avoid writing several log files. Maybe those are useful for you? Then you can now enable them by removing "--log-driver none" and "--log-driver syslog --log-opt syslog-address=udp://127.0.0.1:541" parts. Even "--no-healthcheck" could be removed as well.

-

Could you change this in your post to "6.12.8 to 6.12.10"? The link in the first post was updated.

-

NVMe AER Corrected Error und PCie Bus Error

mgutt replied to speedycxd's topic in Anleitungen/Guides

Nur mal als Info: Ich habe eine Samsung 990 Pro 4TB verbaut und jetzt auch die Logs voller AER Fehler. Also Samsung alleine reicht nicht als Empfehlung. Kommt also auch auf das jeweilige Modell an. Nervig 😒 Hier übrigens die Latenz-Tabelle der 990 Pro: Supported Power States St Op Max Active Idle RL RT WL WT Ent_Lat Ex_Lat 0 + 9.39W - - 0 0 0 0 0 0 1 + 9.39W - - 1 1 1 1 0 0 2 + 9.39W - - 2 2 2 2 0 0 3 - 0.0400W - - 3 3 3 3 4200 2700 4 - 0.0050W - - 4 4 4 4 500 21800 Ich versuche es jetzt mal mit der Kernel Option, um dem Einhalt zu gebieten: nvme_core.default_ps_max_latency_us=15000 -

I already commented this in one of the Unraid 6.8.X or 6.9.X update topics after the main dashboard has been completely changed, but it was never fixed. Bug: Clicking on "Disk X" or any other of the clickable icons does randomly nothing. The reason is that Unraid is reloading most of the content through the "devices" API every second: And if you click on an icon while the content is reloading, the click does nothing (race condition). How to solve this: - the "devices" API should return JSON which contains only the relevant data, like temp, speed, usage, etc without any html code - the html content stays fixed and only the values are updated In addition the huge overhead of this API is reduced by >90%, which is nice for mobile vpn connections.

-

I replaced my SSD against a bigger one. I prepared this step by moving appdata from the SSD to the Array (while Docker was set to "no"). After replacing the SSD I was disappointed about the extremely low speed of the mover: As docker is set to "no" I stopped the mover by the "mover stop" command and used this command instead: rsync --remove-source-files --archive /mnt/disk6/appdata/ /mnt/cache/appdata & disown This caused an extreme boost on moving the files, because a simple rsync - instead of the mover - does not check for every single file if it is in use: Feature Request: If the docker service has been stopped and "appdata" files are moved, it should not use the "fuser" overhead. Instead it should move the files directly without any additional checks. This should not happen if "appdata" is an smb/nfs share and starting the docker service should be greyed out as long the mover is active in this special mode. The same could be done for the docker path and all other shares which are not smb/nfs shares I think. Or: Add a checkbox to the mover button, which allows starting the mover manually in "performance mode", which disables smb/nfs, docker and vm services.

-

My share "appdata" used my "cache" pool as an exclusive share. Now I wanted to replace the SSD of the "cache" pool against a bigger one. So I changed (without stopping the array) the "appdata" share configuration from: to: After that I started the mover and several hours later my nextcloud was broken (could not access relevant files). This was solved by rebooting the server. I assume that inside of the nextcloud container the mount to the exclusive share was still present, so it should not be possible to change this setting while docker is active. Sadly I didn't tested it inside of the Nextcloud container so I can't deliver the proof, but this seems to be the only plausible reason for me.

-

Does this work? https://community.synology.com/enu/forum/1/post/140590

-

Bedenke, dass der Docker Dienst läuft. Vielleicht hat er in dem Moment Daten in die Image Datei geschrieben. Das Backup der Datei wäre dann eh kaputt.

-

Das docker.img konnte nicht gelesen werden, was beim laufenden Docker Betrieb evtl die Ursache sein könnte. Allerdings macht es auch keinen Sinn das zu sichern. Die Docker Umgebung (also das Image) enthält keine Nutzerdaten. Die liegen in appdata.

-

Einfach im Log nach fail oder error suchen. Ein Beispiel: Den Pfad gibt es also nicht.

-

You are probably using the host network for your container. The container setting to set the port forwarding has no meaning in this context. It is only active for the bridge network. Feel free to open a feature request at the official GitHub page of NPM to request for: - disable port 80 / http - Request a new VARIABLE to change the default ports 80 and 443 And/or use the bridge network.

-

Delete the container and then delete the directory /mnt/user/appdata/nginx-bla-bla The file manager plugin is useful for this.

-

Du hast nicht geschrieben wie weit der C-State nun runter ging und warum?! Ich würde erstmal eine Messung ohne alles machen. Manchmal sind es einzelne Slots und manchmal bestimmte Hardware.

-

I would say: Delete everything and restart from the beginning. Everything else sounds complicated: https://community.letsencrypt.org/t/certbot-renew-error-please-choose-an-account/206600/9 https://community.letsencrypt.org/t/please-choose-an-account-how-to-delete-an-account/212902

-

Ja, ich kann dir Komponenten raussuchen und bei Bedarf auch zusammensetzen. Am besten telefonieren wir mal und du beschreibst mir deinen Bedarf. Meine Kontaktdaten findest auf meiner Website: https://gutt.it

-

Make ~/.bash_history a symlink to /boot/config/ssh/.bash_history

mgutt replied to mgutt's topic in Feature Requests

This should work: https://askubuntu.com/a/115625 -

Denkbar wären noch Nextcloud und Filebrowser.

-

Effizienterer Ersatz für Syno 918+

mgutt replied to Dennis14e's topic in NAS/Server Eigenbau & Hardware

Naja. Die kommen sicher nicht vom Board und der CPU. Da läuft doch noch anderes im Leerlauf. Was hast du alles verbaut?